Formalizing Expert Knowledge for Developing Accurate Speech Recognizers

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Your Voice Assistant Is Mine: How to Abuse Speakers to Steal Information and Control Your Phone ∗ †

Your Voice Assistant is Mine: How to Abuse Speakers to Steal Information and Control Your Phone ∗ y Wenrui Diao, Xiangyu Liu, Zhe Zhou, and Kehuan Zhang Department of Information Engineering The Chinese University of Hong Kong {dw013, lx012, zz113, khzhang}@ie.cuhk.edu.hk ABSTRACT General Terms Previous research about sensor based attacks on Android platform Security focused mainly on accessing or controlling over sensitive compo- nents, such as camera, microphone and GPS. These approaches Keywords obtain data from sensors directly and need corresponding sensor invoking permissions. Android Security; Speaker; Voice Assistant; Permission Bypass- This paper presents a novel approach (GVS-Attack) to launch ing; Zero Permission Attack permission bypassing attacks from a zero-permission Android application (VoicEmployer) through the phone speaker. The idea of 1. INTRODUCTION GVS-Attack is to utilize an Android system built-in voice assistant In recent years, smartphones are becoming more and more popu- module – Google Voice Search. With Android Intent mechanism, lar, among which Android OS pushed past 80% market share [32]. VoicEmployer can bring Google Voice Search to foreground, and One attraction of smartphones is that users can install applications then plays prepared audio files (like “call number 1234 5678”) in (apps for short) as their wishes conveniently. But this convenience the background. Google Voice Search can recognize this voice also brings serious problems of malicious application, which have command and perform corresponding operations. With ingenious been noticed by both academic and industry fields. According to design, our GVS-Attack can forge SMS/Email, access privacy Kaspersky’s annual security report [34], Android platform attracted information, transmit sensitive data and achieve remote control a whopping 98.05% of known malware in 2013. -

User Guide Guía Del Usuario Del Guía GH68-43542A Printed in USA SMARTPHONE

User Guide User Guide GH68-43542A Printed in USA Guía del Usuario del Guía SMARTPHONE User Manual Please read this manual before operating your device and keep it for future reference. Legal Notices Warning: This product contains chemicals known create source code from the software. No title to or to the State of California to cause cancer and ownership in the Intellectual Property is transferred to reproductive toxicity. For more information, please call you. All applicable rights of the Intellectual Property 1-800-SAMSUNG (726-7864). shall remain with SAMSUNG and its suppliers. Intellectual Property Open Source Software Some software components of this product All Intellectual Property, as defined below, owned by incorporate source code covered under GNU General or which is otherwise the property of Samsung or its Public License (GPL), GNU Lesser General Public respective suppliers relating to the SAMSUNG Phone, License (LGPL), OpenSSL License, BSD License and including but not limited to, accessories, parts, or other open source licenses. To obtain the source code software relating there to (the “Phone System”), is covered under the open source licenses, please visit: proprietary to Samsung and protected under federal http://opensource.samsung.com. laws, state laws, and international treaty provisions. Intellectual Property includes, but is not limited to, inventions (patentable or unpatentable), patents, trade secrets, copyrights, software, computer programs, and Disclaimer of Warranties; related documentation and other works of authorship. -

SAMSUNG GALAXY S6 USER GUIDE Table of Contents

SAMSUNG GALAXY S6 USER GUIDE Table of Contents Basics 55 Camera 71 Gallery 4 Read me first 73 Smart Manager 5 Package contents 75 S Planner 6 Device layout 76 S Health 8 SIM or USIM card 79 S Voice 10 Battery 81 Music 14 Turning the device on and off 82 Video 15 Touchscreen 83 Voice Recorder 18 Home screen 85 My Files 24 Lock screen 86 Memo 25 Notification panel 86 Clock 28 Entering text 88 Calculator 31 Screen capture 89 Google apps 31 Opening apps 32 Multi window 37 Device and data management 41 Connecting to a TV Settings 43 Sharing files with contacts 91 Introduction 44 Emergency mode 91 Wi-Fi 93 Bluetooth 95 Flight mode Applications 95 Mobile hotspot and tethering 96 Data usage 45 Installing or uninstalling apps 97 Mobile networks 46 Phone 97 NFC and payment 49 Contacts 100 More connection settings 51 Messages 102 Sounds and notifications 53 Internet 103 Display 54 Email 103 Motions and gestures 2 Table of Contents 104 Applications 104 Wallpaper 105 Themes 105 Lock screen and security 110 Privacy and safety 113 Easy mode 113 Accessibility 114 Accounts 115 Backup and reset 115 Language and input 116 Battery 116 Storage 117 Date and time 117 User manual 117 About device Appendix 118 Accessibility 133 Troubleshooting 3 Basics Read me first Please read this manual before using the device to ensure safe and proper use. • Descriptions are based on the device’s default settings. • Some content may differ from your device depending on the region, service provider, model specifications, or device’s software. -

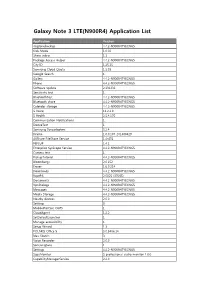

Galaxy Note 3 LTE(N900R4) Application List

Galaxy Note 3 LTE(N900R4) Application List Application Version ringtonebackup 4.4.2-N900R4TYECNG5 Kids Mode 1.0.02 Share video 1.1 Package Access Helper 4.4.2-N900R4TYECNG5 City ID 1.25.15 Samsung Cloud Quota 1.5.03 Google Search 1 Gallery 4.4.2-N900R4TYECNG5 Phone 4.4.2-N900R4TYECNG5 Software update 2.131231 Sensitivity test 1 BluetoothTest 4.4.2-N900R4TYECNG5 Bluetooth share 4.4.2-N900R4TYECNG5 Calendar storage 4.4.2-N900R4TYECNG5 S Voice 11.2.2.0 S Health 2.5.4.170 Communication Notifications 1 DeviceTest 1 Samsung Syncadapters 5.2.4 Drama 1.0.0.107_201400429 AllShare FileShare Service 1.4r476 PEN.UP 1.4.1 Enterprise SysScope Service 4.4.2-N900R4TYECNG5 Camera test 1 PickupTutorial 4.4.2-N900R4TYECNG5 Bloomberg+ 2.0.152 Eraser 1.6.0.214 Downloads 4.4.2-N900R4TYECNG5 RootPA 2.0025 (37085) Documents 4.4.2-N900R4TYECNG5 VpnDialogs 4.4.2-N900R4TYECNG5 Messages 4.4.2-N900R4TYECNG5 Media Storage 4.4.2-N900R4TYECNG5 Nearby devices 2.0.0 Settings 3 MobilePrintSvc_CUPS 1 CloudAgent 1.2.2 SetDefaultLauncher 1 Manage accessibility 1 Setup Wizard 1.3 POLARIS Office 5 5.0.3406.14 Idea Sketch 3 Voice Recorder 2.0.0 SamsungSans 1 Settings 4.4.2-N900R4TYECNG5 SapaMonitor S professional audio monitor 1.0.0 CapabilityManagerService 2.4.0 S Note 3.1.0 Samsung Link 1.8.1904 Samsung WatchON Video 14062601.1.21.78 Street View 1.8.1.2 Alarm 1 PageBuddyNotiSvc 1 Favorite Contacts 4.4.2-N900R4TYECNG5 Google Search 3.4.16.1149292.arm KNOX 2.0.0 Exchange services 4.2 GestureService 1 Weather 140211.01 Samsung Print Service Plugin 1.4.140410 Tasks provider 4.4.2-N900R4TYECNG5 -

Samsung Galaxy A8

SM-A530F SM-A530F/DS SM-A730F SM-A730F/DS User Manual English (LTN). 12/2017. Rev.1.0 www.samsung.com Table of Contents Basics Apps and features 4 Read me first 52 Installing or uninstalling apps 6 Device overheating situations and 54 Bixby solutions 70 Phone 10 Device layout and functions 75 Contacts 14 Battery 79 Messages 17 SIM or USIM card (nano-SIM card) 82 Internet 23 Memory card (microSD card) 84 Email 27 Turning the device on and off 85 Camera 28 Initial setup 100 Gallery 30 Samsung account 106 Always On Display 31 Transferring data from your previous 108 Multi window device 113 Samsung Pay 35 Understanding the screen 117 Samsung Members 47 Notification panel 118 Samsung Notes 49 Entering text 119 Calendar 120 Samsung Health 124 S Voice 126 Voice Recorder 127 My Files 128 Clock 129 Calculator 130 Radio 131 Game Launcher 134 Dual Messenger 135 Samsung Connect 139 Sharing content 140 Google apps 2 Table of Contents Settings 182 Google 182 Accessibility 142 Introduction 183 General management 142 Connections 184 Software update 143 Wi-Fi 185 User manual 146 Bluetooth 185 About phone 148 Data saver 148 NFC and payment 151 Mobile Hotspot and Tethering 152 SIM card manager (dual SIM Appendix models) 186 Troubleshooting 152 More connection settings 155 Sounds and vibration 156 Notifications 157 Display 158 Blue light filter 158 Changing the screen mode or adjusting the display color 160 Screensaver 160 Wallpapers and themes 161 Advanced features 163 Device maintenance 165 Apps 166 Lock screen and security 167 Face recognition 169 Fingerprint recognition 173 Smart Lock 173 Samsung Pass 176 Secure Folder 180 Cloud and accounts 181 Backup and restore 3 Basics Read me first Please read this manual before using the device to ensure safe and proper use. -

Samsung Galaxy Note 5 N920R6 User Manual

SMART PHONE User Manual Please read this manual before operating your device and keep it for future reference. Legal Notices Warning: This product contains chemicals known to Disclaimer of Warranties; the State of California to cause cancer, birth defects, or other reproductive harm. For more information, Exclusion of Liability please call 1-800-SAMSUNG (726-7864). EXCEPT AS SET FORTH IN THE EXPRESS WARRANTY CONTAINED ON THE WARRANTY PAGE ENCLOSED WITH THE PRODUCT, THE Intellectual Property PURCHASER TAKES THE PRODUCT “AS IS”, AND All Intellectual Property, as defined below, owned SAMSUNG MAKES NO EXPRESS OR IMPLIED by or which is otherwise the property of Samsung WARRANTY OF ANY KIND WHATSOEVER WITH or its respective suppliers relating to the SAMSUNG RESPECT TO THE PRODUCT, INCLUDING BUT Phone, including but not limited to, accessories, NOT LIMITED TO THE MERCHANTABILITY OF THE parts, or software relating there to (the “Phone PRODUCT OR ITS FITNESS FOR ANY PARTICULAR System”), is proprietary to Samsung and protected PURPOSE OR USE; THE DESIGN, CONDITION OR under federal laws, state laws, and international QUALITY OF THE PRODUCT; THE PERFORMANCE treaty provisions. Intellectual Property includes, OF THE PRODUCT; THE WORKMANSHIP OF THE but is not limited to, inventions (patentable or PRODUCT OR THE COMPONENTS CONTAINED unpatentable), patents, trade secrets, copyrights, THEREIN; OR COMPLIANCE OF THE PRODUCT software, computer programs, and related WITH THE REQUIREMENTS OF ANY LAW, RULE, documentation and other works of authorship. You SPECIFICATION OR CONTRACT PERTAINING may not infringe or otherwise violate the rights THERETO. NOTHING CONTAINED IN THE secured by the Intellectual Property. Moreover, INSTRUCTION MANUAL SHALL BE CONSTRUED you agree that you will not (and will not attempt TO CREATE AN EXPRESS OR IMPLIED WARRANTY to) modify, prepare derivative works of, reverse OF ANY KIND WHATSOEVER WITH RESPECT TO engineer, decompile, disassemble, or otherwise THE PRODUCT. -

Samsung Galaxy Note7 N930A User Manual

SMARTPHONE User Manual Please read this manual before operating your device and keep it for future reference. Legal WARNING! This product contains chemicals known to inventions (patentable or unpatentable), patents, trade the State of California to cause cancer, birth defects, or secrets, copyrights, software, computer programs, and other reproductive harm. For more information, please related documentation and other works of authorship. call 1-800-SAMSUNG (726-7864). You may not infringe or otherwise violate the rights secured by the Intellectual Property. Moreover, you Note: Water-resistant and dust-resistant based on IP68 agree that you will not (and will not attempt to) rating, which tests submersion up to 5.0 feet for up to modify, prepare derivative works of, reverse engineer, 30 minutes. decompile, disassemble, or otherwise attempt to create source code from the software. No title to or ownership Intellectual Property in the Intellectual Property is transferred to you. All All Intellectual Property, as defined below, owned by applicable rights of the Intellectual Property shall or which is otherwise the property of Samsung or its remain with SAMSUNG and its suppliers. respective suppliers relating to the SAMSUNG Phone, including but not limited to, accessories, parts, or software relating there to (the “Phone System”), is proprietary to Samsung and protected under federal laws, state laws, and international treaty provisions. Intellectual Property includes, but is not limited to, ATT_N930A_EN_UM_TN_PGC_080916_FINAL Open Source Software THE WORKMANSHIP OF THE PRODUCT OR THE Some software components of this product incorporate COMPONENTS CONTAINED THEREIN; OR COMPLIANCE source code covered under GNU General Public License OF THE PRODUCT WITH THE REQUIREMENTS OF (GPL), GNU Lesser General Public License (LGPL), ANY LAW, RULE, SPECIFICATION OR CONTRACT OpenSSL License, BSD License and other open source PERTAINING THERETO. -

Be Ready for Whatever the Workday Brings with the Samsung Galaxy S6 Edge

Ready for the next big thing. Be ready for whatever the workday brings with the Samsung Galaxy S6 edge. With next-generation power and performance, the Galaxy S6 edge delivers the productivity, multitasking and security protection your team needs today and tomorrow. Fuel your employees’ productivity with PC-like multitasking tools such as Multi Window, optimized business applications and our fastest processor yet. Protect sensitive company data with defense-grade Samsung KNOX security. Let work shine bright – even in direct sunlight – on our brilliant HD display featuring our first-ever dual-curved-edge screen. It’s time for a smartphone that’s ready to work harder for your enterprise. Enhanced Security for Your Business Give Your Employees a Premium Edge Defense-grade Samsung KNOX security and a This is what ready for success looks like. separate personal and work-partitioning Review presentations. Examine design container protect your organization’s sensitive renderings. Display product videos. Whatever data and satisfy your enterprise’s IT policy work comes through, your team will always requirements by easily integrating into your have a premium view on the 5.1" Quad HD existing or desired MDM solution. Using the Super AMOLED display, featuring the first-ever built-in fingerprint scanner coupled with a dual-curved glass screen that’s housed in a password or PIN further protects sensitive sleek, stunning metal and glass design your information with multiple layers of security. employees will love. Our most powerful, highest-resolution display to date delivers a Optimized Tools for Success sharp, vivid picture – even in bright sunlight – that brings greater detail to everything on Give your team access to the business-critical screen. -

Samsung Galaxy S Iii User Manual

GT-I9300 user manual Using this manual Thank you for choosing this Samsung mobile device. This device will provide you with high-quality mobile communication and entertainment based on Samsung’s exceptional technology and high standards. This manual has been specially designed to guide you through the functions and features of your device. Read me first ● Before using your device, read the entire manual and all safety instructions to ensure safe and proper use. ● The descriptions in this manual are based on the default settings of your device. ● The images and screenshots used in this manual may differ from the actual product. ● The contents of this manual may differ from the product, or from software provided by service providers or carriers, and are subject to change without prior notice. Access the Samsung website (www.samsung.com) for the latest version of the manual. ● Available features and additional services may vary by device, software, or service provider. ● Formatting and delivery of this manual is based on Android operating systems and may vary depending on the user’s operating system. ● Applications on this device may perform differently from comparable computer applications and may not include all functions available in the computer version. Using this manual 2 ● Applications and their functions may vary by region or hardware specifications. Samsung is not liable for performance issues caused by third-party applications. ● Samsung is not liable for performance issues or incompatibilities caused by edited registry settings or modified operating system software. Attempting to customise the operating system may cause your device or applications to work improperly. -

Voice Assistants Used on Smartphones

Voice Wars: Smart Speakers, Voice Assistants, and Strategies for Building a Successful Voice Ecosystem by Hans Wang B.S. Electrical Engineering and Computer Science University of California, Berkeley, 2009 SUBMITTED TO THE SYSTEM DESIGN AND MANAGEMENT PROGRAM IN PARTIAL FULLFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF SCIENCE IN ENGINEERING AND MANAGEMENT AT THE MASSACHUSETTS INSTITUTE OF TECHNOLOGY JUNE 2019 @2019 Hans Wang. All rights reserved. The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created. Signature redacted- Signature of Author: System Design & Management Program ^ ^ A -May 10, 2019 Signature redactea Certified by: V Michael A.M. Davies Senior Lecturer, Integra d Design & Management Program Signature redacted Thesis Supervisor Certified by: t / Blade Kotelly Senior Lecturer, Bernard M. Gordon-MIT Engineering Leadership Program Thesis Supervisor Signature redacted Accepted by: f Joan Rubin OF TECHNOLOGY Executive Director, System Design & Management Program JUN 2 72019 LIBRARIES MCHIVES This page is intentionally left blank 2 Voice Wars: Smart Speakers, Voice Assistants, and Strategies for Building a Successful Voice Ecosystem by Hans Wang Submitted to the System Design and Management Program on May 10, 2019 in Partial Fulfillment of the Requirements for the Degree of Master of Science in Engineering and Management ABSTRACT In recent years, voice-powered digital assistants have exploded into the consumer mainstream as an important new form of human-computer interaction. Powered by dramatic improvements in speech recognition and artificial intelligence (Al) technologies over the last decade, digital voice assistants are now abundantly prevalent in modem consumer electronic devices ranging from mobile phones, to smart speakers, to wearables. -

“Hey Speaker - Why Should I Use You?”

“HEY SPEAKER - WHY SHOULD I USE YOU?” Exploring the user acceptance of smart speakers by Jonas Hoffmann and Kasper Thuesen Master’s Thesis Business Administration and Information Systems, E-Business Supervision: Ather Nawaz Date of submission: 17th of September 2018 Character count: 222.376 / 97.7 pages Kasper Thuesen, 107905, [email protected] Jonas Hoffmann, 107594, [email protected] Abstract Voice-assistant powered smart speakers are entering private homes by storm, with the purpose of facilitating everyday tasks and simplifying their users’ lives. At the same time, they bring along an array of new challenges due to their purely voice-based interface and their fixed location inside the heart of consumer homes. Motivated by the soaring success of the technology and backed by literature about technology acceptance and user experience, this research investigates what motivates users to continuously use smart speakers in their daily lives and what makes them stop using them. In addition, it explores the gap between expectation and experience for this technology and analyses its influence on smart speaker usage. The research is carried out in two steps. First, 10 selected users are provided with a smart speaker with the task of using the product over the course of four weeks. Consequently, a focus group and several in-depth interviews are conducted with the participants about their experience. The analysis reveals that Usability, Usefulness and Sociality – each consisting of several sub- components – are the main factors that affect smart speaker usage. This research depicts one of the first user-centered analyses of smart speaker usage and opens a door for future research in the area of smart speakers. -

Inaudible Voice Commands: the Long-Range Attack and Defense

Inaudible Voice Commands: The Long-Range Attack and Defense Nirupam Roy, Sheng Shen, Haitham Hassanieh, Romit Roy Choudhury University of Illinois at Urbana-Champaign Abstract can launch from a distance of 5 ft to Amazon Echo [48] while the attack in [39] achieves 10 ft by becoming par- Recent work has shown that inaudible signals (at ultra- tially audible. In attempting to enhance range, we real- sound frequencies) can be designed in a way that they ized strong tradeoffs with inaudibility, i.e., the output of become audible to microphones. Designed well, this can the speaker no longer remains silent. This implies that empower an adversary to stand on the road and silently currently known attacks are viable in short ranges, such control Amazon Echo and Google Home-like devices in as Alice’s friend visiting Alice’s home and silently at- people’s homes. A voice command like “Alexa, open the tacking her Amazon Echo [11, 48]. However, the gen- garage door” can be a serious threat. eral, and perhaps more alarming attack, is the one in While recent work has demonstrated feasibility, two is- which the attacker parks his car on the road and controls sues remain open: (1) The attacks can only be launched voice-enabled devices in the neighborhood, and even a from within 5 ft of Amazon Echo, and increasing this person standing next to him does not hear it. This paper range makes the attack audible. (2) There is no clear so- is an attempt to achieve such an attack radius, followed lution against these ultrasound attacks, since they exploit by defenses against them.