Knowledge Graph Reasoning Over Unseen RDF Data

Recommended publications

Semantics Developer's Guide

MarkLogic Server: Semantic Graph Developer’s Guide
MarkLogic 10, May 2019. Last revised: 10.0-8, October 2021.
Copyright © 2021 MarkLogic Corporation. All rights reserved.

Table of Contents

1.0 Introduction to Semantic Graphs in MarkLogic
  1.1 Terminology
  1.2 Linked Open Data
  1.3 RDF Implementation in MarkLogic
    1.3.1 Using RDF in MarkLogic
      1.3.1.1 Storing RDF Triples in MarkLogic
      1.3.1.2 Querying Triples
    1.3.2 RDF Data Model
    1.3.3 Blank Node Identifiers
    1.3.4 RDF Datatypes
    1.3.5 IRIs and Prefixes
      1.3.5.1 IRIs

Binary RDF for Scalable Publishing, Exchanging and Consumption in the Web of Data

WWW 2012 – PhD Symposium, April 16–20, 2012, Lyon, France

Binary RDF for Scalable Publishing, Exchanging and Consumption in the Web of Data

Javier D. Fernández (1, 2). Supervised by: Miguel A. Martínez Prieto (1) and Claudio Gutierrez (2)
(1) Department of Computer Science, University of Valladolid (Spain)
(2) Department of Computer Science, University of Chile (Chile)
[email protected]

ABSTRACT

The Web of Data is increasingly producing large RDF datasets from diverse fields of knowledge, pushing the Web to a data-to-data cloud. However, traditional RDF representations were inspired by a document-centric view, which results in verbose/redundant data, costly to exchange and post-process. This article discusses an ongoing doctoral thesis addressing efficient formats for publication, exchange and consumption of RDF on a large scale. First, a binary serialization format for RDF, called HDT, is proposed. Then, we focus on compressed rich-functional structures which take

era and hence they share a document-centric view, providing human-focused syntaxes, disregarding large data.

In a typical scenario within the current state-of-the-art, efficient interchange of RDF data is limited, at most, to compressing the verbose plain data with universal compression algorithms. The resultant file has no logical structure and there is no agreed way to efficiently publish such data, i.e., to make them (publicly) available for diverse purposes and users. In addition, the data are hardly usable at the time of consumption; the consumer has to decompress the file and, then, to use an appropriate external tool (e.g.

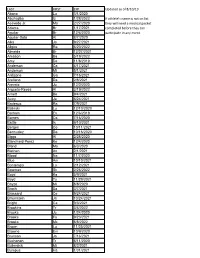

LAST FIRST EXP Updated As of 8/10/19 Abano Lu 3/1/2020 Abuhadba Iz 1/28/2022 If Athlete's Name Is Not on List Acevedo Jr

Updated as of 8/10/19

If athlete's name is not on list they will need a medical packet completed before they can participate in any event.

| LAST | FIRST | EXP |
|---|---|---|
| Abano | Lu | 3/1/2020 |
| Abuhadba | Iz | 1/28/2022 |
| Acevedo Jr. | Ma | 2/27/2020 |
| Adams | Br | 1/17/2021 |
| Aguilar | Br | 12/6/2020 |
| Aguilar-Soto | Al | 8/7/2020 |
| Alka | Ja | 9/27/2021 |
| Allgire | Ra | 6/20/2022 |
| Almeida | Br | 12/27/2021 |
| Amason | Ba | 5/19/2022 |
| Amy | De | 11/8/2019 |
| Anderson | Ca | 4/17/2021 |
| Anderson | Mi | 5/1/2021 |
| Ardizone | Ga | 7/16/2021 |
| Arellano | Da | 2/8/2021 |
| Arevalo | Ju | 12/2/2020 |
| Argueta-Reyes | Al | 3/19/2022 |
| Arnett | Be | 9/4/2021 |
| Autry | Ja | 6/24/2021 |
| Badeaux | Ra | 7/9/2021 |
| Balinski | Lu | 12/10/2020 |
| Barham | Ev | 12/6/2019 |
| Barnes | Ca | 7/16/2020 |
| Battle | Is | 9/10/2021 |
| Bergen | Co | 10/11/2021 |
| Bermudez | Da | 10/16/2020 |
| Biggs | Al | 2/28/2020 |
| Blanchard-Perez | Ke | 12/4/2020 |
| Bland | Ma | 6/3/2020 |
| Blethen | An | 2/1/2021 |
| Blood | Na | 11/7/2020 |
| Blue | Am | 10/10/2021 |
| Bontempo | Lo | 2/12/2021 |
| Bowman | Sk | 2/26/2022 |
| Boyd | Ka | 5/9/2021 |
| Boyd | Ty | 11/29/2021 |
| Boyzo | Mi | 8/8/2020 |
| Brach | Sa | 3/7/2021 |
| Brassard | Ce | 9/24/2021 |
| Braunstein | Ja | 10/24/2021 |
| Bright | Ca | 9/3/2021 |
| Brookins | Tr | 3/4/2022 |
| Brooks | Ju | 1/24/2020 |
| Brooks | Fa | 9/23/2021 |
| Brooks | Mc | 8/8/2022 |
| Brown | Lu | 11/25/2021 |
| Browne | Em | 10/9/2020 |
| Brunson | Jo | 7/16/2021 |
| Buchanan | Tr | 6/11/2020 |
| Bullerdick | Mi | 8/2/2021 |
| Bumpus | Ha | 1/31/2021 |
| Burch | Co | 11/7/2020 |
| Burch | Ma | 9/9/2021 |
| Butler | Ga | 5/14/2022 |
| Byers | Je | 6/14/2021 |
| Cain | Me | 6/20/2021 |
| Cao | Tr | 11/19/2020 |
| Carlson | Be | 5/29/2021 |
| Cerda | Da | 3/9/2021 |
| Ceruto | Ri | 2/14/2022 |
| Chang | Ia | 2/19/2021 |
| Channapati | Di | 10/31/2021 |
| Chao | Et | 8/20/2021 |
| Chase | Em | 8/26/2020 |
| Chavez | Fr | 6/13/2020 |
| Chavez | Vi | 11/14/2021 |
| Chidambaram | Ga | 10/13/2019 |

The Semantic Web and Its Languages Editor: Dieter Fensel Vrije Universiteit Amsterdam [email protected]

TRENDS & CONTROVERSIES

The semantic Web and its languages
Editor: Dieter Fensel, Vrije Universiteit Amsterdam, [email protected]

The Web has drastically changed the availability of electronic information, but its success and exponential growth have made it increasingly difficult to find, access, present, and maintain such information for a wide variety of users. In reaction to this bottleneck, many new research initiatives and commercial enterprises have been set up to enrich available information with machine-processable semantics. Such support is essential for bringing the Web to its full potential in areas such as knowledge management and electronic commerce. This semantic Web will provide intelligent access to heterogeneous and distributed information, enabling software products (agents) to mediate between user needs and available information sources. Early steps in the direction of a semantic Web were SHOE [1] and later Ontobroker [2], but now many more projects exist.

Originally, the Web grew mainly around HTML, which provided a

epistemologically rich modeling primitives, provided by the frame community; formal semantics and efficient reasoning support, provided by description logics; and a standard proposal for syntactical exchange notations, provided by the Web community. (See the essay by Frank van Harmelen and Ian Horrocks.)

Another candidate for such a Web-based ontology modeling language is DAML-ONT. The DARPA Agent Markup Language is a major, well-funded initiative, aimed at joining the many ongoing semantic Web efforts and focused on bringing ontologies to the Web. The DAML language inherits many aspects from OIL, and the capabilities of the two languages are relatively similar. Both initiatives cooperate in a Joint EU/US ad hoc Agent Markup Language Committee to achieve a joined language proposal.

Semantic Gadgets: Pervasive Computing Meets the Semantic Web

Semantic Gadgets: Pervasive Computing Meets the Semantic Web
Ora Lassila, Research Fellow, Agent Technology Group, Nokia Research Center
October 2002
© NOKIA 2002-10-01 - Ora Lassila

Mobility Makes Things Different

• Device location is a new dimension
  • more information about the user and the usage context available
  • new applications & services are possible
• Devices are different
  • reduced capabilities: smaller screens, slow input devices, lower bandwidth, higher latency, worse reliability, …
  • trusted device: always with you & has access to your private data
• Usage contexts and needs are different
  • awkward usage situations (e.g., in the car while driving)
  • specific needs (“surfing” unlikely)
  • you are always “on” (= connected)
• Dilemma:
  • the Internet - by design - represents a departure from physical reality but mobility grounds services & users to the physical world

Some Enablers of Mobile Internet

• Access to services from handheld terminals
• Dynamic synthesis of content
• Context-sensitivity
  • location is one dimension of a “context”, but there are others
• New Technologies
• Artificial Intelligence
  • machine learning: automatic customization and adaptation
  • automated planning: autonomous operation
• “Semantic Web”
  • intelligent synthesis of content from multiple sources (ad hoc & on demand)
  • explicit representation of semantics of data & services
• Ubiquitous (aka Pervasive) Computing
  • (a paradigm shift in personal computing)

Semantic Web: Motivation

Review of Research

Review of Research
Impact Factor: 5.7631 (UIF). UGC Approved Journal No. 48514. ISSN: 2249-894X. Volume 8, Issue 7, April 2019.

AN ANALYSIS OF CURRENT TRENDS FOR SANSKRIT AS A COMPUTER PROGRAMMING LANGUAGE

Manish Tiwari (1) and S. Snehlata (2)
(1) Department of Computer Science and Application, St. Aloysius College, Jabalpur.
(2) Student, Department of Computer Science and Application, St. Aloysius College, Jabalpur.

ABSTRACT: Sanskrit is said to be one of the systematic languages with few exceptions and clear rules discretion. The discussion is continued from last thirty that language could be one of the best options for computers. Sanskrit is logical and clear about its grammatical and phonetic laws, which have not been amended for thousands of years. The entire Sanskrit grammar is based on only fourteen sutras called Maheshwar (Siva) sutra; Trimuni (Panini, Katyayan and Patanjali) are responsible for the creation, explanation and exploration of these grammar laws. A computer, as a machine, requires such a language to perform better and faster with less programming. Sanskrit can play an important role in making computer programming languages flexible, logical and compact. This paper is focused on analysis of the current status of research done on Sanskrit as a programming language for . These will help us to know opportunity, scope and challenges.

KEYWORDS: Artificial intelligence, Natural language processing, Sanskrit, Computer, Vibhakti, Programming language.

The What and Why of Whole Number Arithmetic: Foundational Ideas from History, Language and Societal Changes

Portland State University, PDXScholar
Mathematics and Statistics Faculty Publications and Presentations
Fariborz Maseeh Department of Mathematics and Statistics
3-2018

The What and Why of Whole Number Arithmetic: Foundational Ideas from History, Language and Societal Changes

Xu Hu Sun, University of Macau
Christine Chambris, Université de Cergy-Pontoise
Judy Sayers, Stockholm University
Man Keung Siu, University of Hong Kong
Jason Cooper, Weizmann Institute of Science
See next page for additional authors

Follow this and additional works at: https://pdxscholar.library.pdx.edu/mth_fac
Part of the Science and Mathematics Education Commons
Let us know how access to this document benefits you.

Citation Details
Sun X.H. et al. (2018) The What and Why of Whole Number Arithmetic: Foundational Ideas from History, Language and Societal Changes. In: Bartolini Bussi M., Sun X. (eds) Building the Foundation: Whole Numbers in the Primary Grades. New ICMI Study Series. Springer, Cham

This Book Chapter is brought to you for free and open access. It has been accepted for inclusion in Mathematics and Statistics Faculty Publications and Presentations by an authorized administrator of PDXScholar. Please contact us if we can make this document more accessible: [email protected].

Authors
Xu Hu Sun, Christine Chambris, Judy Sayers, Man Keung Siu, Jason Cooper, Jean-Luc Dorier, Sarah Inés González de Lora Sued, Eva Thanheiser, Nadia Azrou, Lynn McGarvey, Catherine Houdement, and Lisser Rye Ejersbo

This book chapter is available at PDXScholar: https://pdxscholar.library.pdx.edu/mth_fac/253

Chapter 5
The What and Why of Whole Number Arithmetic: Foundational Ideas from History, Language and Societal Changes

Xu Hua Sun, Christine Chambris, Judy Sayers, Man Keung Siu, Jason Cooper, Jean-Luc Dorier, Sarah Inés González de Lora Sued, Eva Thanheiser, Nadia Azrou, Lynn McGarvey, Catherine Houdement, and Lisser Rye Ejersbo

5.1 Introduction

Mathematics learning and teaching are deeply embedded in history, language and culture (e.g.

Tai Lü / ᦺᦑᦟᦹᧉ Tai Lùe Romanization: KNAB 2012

Institute of the Estonian Language KNAB: Place Names Database 2012-10-11 Tai Lü / ᦺᦑᦟᦹᧉ Tai Lùe romanization: KNAB 2012 I. Consonant characters 1 ᦀ ’a 13 ᦌ sa 25 ᦘ pha 37 ᦤ da A 2 ᦁ a 14 ᦍ ya 26 ᦙ ma 38 ᦥ ba A 3 ᦂ k’a 15 ᦎ t’a 27 ᦚ f’a 39 ᦦ kw’a 4 ᦃ kh’a 16 ᦏ th’a 28 ᦛ v’a 40 ᦧ khw’a 5 ᦄ ng’a 17 ᦐ n’a 29 ᦜ l’a 41 ᦨ kwa 6 ᦅ ka 18 ᦑ ta 30 ᦝ fa 42 ᦩ khwa A 7 ᦆ kha 19 ᦒ tha 31 ᦞ va 43 ᦪ sw’a A A 8 ᦇ nga 20 ᦓ na 32 ᦟ la 44 ᦫ swa 9 ᦈ ts’a 21 ᦔ p’a 33 ᦠ h’a 45 ᧞ lae A 10 ᦉ s’a 22 ᦕ ph’a 34 ᦡ d’a 46 ᧟ laew A 11 ᦊ y’a 23 ᦖ m’a 35 ᦢ b’a 12 ᦋ tsa 24 ᦗ pa 36 ᦣ ha A Syllable-final forms of these characters: ᧅ -k, ᧂ -ng, ᧃ -n, ᧄ -m, ᧁ -u, ᧆ -d, ᧇ -b. See also Note D to Table II. II. Vowel characters (ᦀ stands for any consonant character) C 1 ᦀ a 6 ᦀᦴ u 11 ᦀᦹ ue 16 ᦀᦽ oi A 2 ᦰ ( ) 7 ᦵᦀ e 12 ᦵᦀᦲ oe 17 ᦀᦾ awy 3 ᦀᦱ aa 8 ᦶᦀ ae 13 ᦺᦀ ai 18 ᦀᦿ uei 4 ᦀᦲ i 9 ᦷᦀ o 14 ᦀᦻ aai 19 ᦀᧀ oei B D 5 ᦀᦳ ŭ,u 10 ᦀᦸ aw 15 ᦀᦼ ui A Indicates vowel shortness in the following cases: ᦀᦲᦰ ĭ [i], ᦵᦀᦰ ĕ [e], ᦶᦀᦰ ăe [ ∎ ], ᦷᦀᦰ ŏ [o], ᦀᦸᦰ ăw [ ], ᦀᦹᦰ ŭe [ ɯ ], ᦵᦀᦲᦰ ŏe [ ]. -

Exercise Sheet 1

Semantic Web, SS 2017

Exercise Sheet 1: RDF, RDFS and Linked Data

Submit your solutions until Friday, 12.5.2017, 23h00 by uploading them to ILIAS. Later submissions won't be considered. Every solution should contain the name(s), email address(es) and registration number(s) of its (co-)editor(s). Read the slides for more submission guidelines.

1 Knowledge Representation (4pts)

1.1 Knowledge Representation

Humans usually express their knowledge in natural language. Why aren't we using, e.g., English for knowledge representation and the semantic web?

1.2 Terminological vs Concrete Knowledge

What is the role of terminological knowledge (e.g. Every company is an organization.), and what is the role of facts (e.g. Microsoft is an organization. Microsoft is headquartered in Redmond.) in a semantic web system?

Hint: Imagine an RDF ontology that contains either only facts (describing factual knowledge: states of affairs) or constraints (describing terminological knowledge: relations between concepts). What could a semantic web system do with it?

2 RDF (10pts)

RDF graphs consist of triples having a subject, a predicate and an object. Different syntactic notations can be used in order to serialize RDF graphs. By now you have seen the XML and the Turtle syntax of RDF. In this task we will use the Notation3 (N3) format described at http://www.w3.org/2000/10/swap/Primer. Look at the following N3 file:

@prefix model: <http://example.com/model1/> .
@prefix cdk: <http://example.com/chemistrydevelopmentkit/> .
@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .

Browsing the Semantic Web

Browsing the Semantic Web
Ora Lassila
Nokia Research Center Cambridge, Cambridge, MA 02142, USA

Abstract

Presenting RDF graphs to the end user as hypertext enables interactive exploration and inquiry of “semantic” data. In this paper we present a tool that allows users to “see” their RDF data, without having to decipher syntactic representations that are typically not human-friendly. The tool is part of a broader architecture designed for viewing many different types of data in Semantic Web formalisms, for experimenting with the caching of dynamically changing data, and for exploring the possible ad hoc integration of data from multiple sources. Additionally, this tool enables browsing of semantic data on a mobile device, by adapting the hypertext generation to better suit small screens.

1.

lution. Enabling browsing is further encouraged by the fact that, despite its simple data model, users and developers are often put off by RDF’s cryptic XML-based syntax.

Many tools have been constructed for searching, exploring and querying complex information spaces such as semistructured data representations or collections with rich metadata. These tools often offer a mixture of features such as faceted search, clustering of search results, etc. Examples of such systems include Lore [7, 8], Flamenco [26], and others. More specifically, recent years have also seen a number of “Semantic Web browsers” emerge; examples of this type of software include mSpace [16], Haystack [18], Magnet [23], DBin [25], semantExplorer [21], and others. These systems allow the browsing of Semantic Web data and provide various ways of searching and visualizing this data. Many of them combine semantic data with “classi-

Implementation of Sanskrit Linguistics in Artificial Intelligence Programming

ISSN: 2456 - 3935
International Journal of Advances in Computer and Electronics Engineering, Volume: 02, Issue: 02, February 2017, pp. 17 – 27

Implementation of Sanskrit Linguistics in Artificial Intelligence Programming

Neetesh Vashishtha
UG Scholar, Department of Computer Science Engineering, Jaipur Engineering College and Research Center, India
Email: [email protected]

Abstract: This research paper is directed towards examining the extent to which the Sanskrit language can be implemented in programming, principally in the domain of Artificial Intelligence. This paper can be divided into three major sections. The first section explains the significance of Sanskrit over other languages. The second section explores that if it’s actually beneficial to program in Sanskrit rather than English. The third section includes coding of two identical AI programs, one made to interact in English and the other in Sanskrit. They are analyzed separately and then compared collectively to seek for the advantages the Sanskrit linguistics offer in Artificial Intelligence programming. We then conclude that Sanskrit, when used for communicating with the AI machines, is remarkable with an astounding versatility and brilliant learning abilities for an AI. The Sanskrit being strict and bundled, results in a compact and unambiguous form of conversations with the AI programs.

Keyword: Artificial Intelligence; Deep Learning; Java; Language linguistics; Machine Learning; Sanskrit

1. INTRODUCTION

The primary objective of this paper is to present the possibility of adopting Sanskrit as a means of communication with the artificial intelligence. The paper also aims to extend this proposal with programming the AI in Sanskrit Linguistics.

The number of possible incorrect sentences are: 5! – 1 = 120 – 1 = 119 permutations

Common Sense Reasoning with the Semantic Web

Common Sense Reasoning with the Semantic Web Christopher C. Johnson and Push Singh MIT Summer Research Program Massachusetts Institute of Technology, Cambridge, MA 02139 [email protected], [email protected] http://groups.csail.mit.edu/dig/2005/08/Johnson-CommonSense.pdf Abstract Current HTML content on the World Wide Web has no real meaning to the computers that display the content. Rather, the content is just fodder for the human eye. This is unfortunate as in fact Web documents describe real objects and concepts, and give particular relationships between them. The goal of the World Wide Web Consortium’s (W3C) Semantic Web initiative is to formalize web content into Resource Description Framework (RDF) ontologies so that computers may reason and make decisions about content across the Web. Current W3C work has so far been concerned with creating languages in which to express formal Web ontologies and tools, but has overlooked the value and importance of implementing common sense reasoning within the Semantic Web. As Web blogging and news postings become more prominent across the Web, there will be a vast source of natural language text not represented as RDF metadata. Common sense reasoning will be needed to take full advantage of this content. In this paper we will first describe our work in converting the common sense knowledge base, ConceptNet, to RDF format and running N3 rules through the forward chaining reasoner, CWM, to further produce new concepts in ConceptNet. We will then describe an example in using ConceptNet to recommend gift ideas by analyzing the contents of a weblog.