Evaluating the Placement of Arm-Worn Devices for Recognizing Variations of Dynamic Hand Gestures

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


ETAO Keyboard: Text Input Technique on Smartwatches

Available online at www.sciencedirect.com. ScienceDirect. Procedia Computer Science 84 (2016) 137-141. 7th International Conference on Intelligent Human Computer Interaction, IHCI 2015. ETAO Keyboard: Text Input Technique on Smartwatches. Rajkumar Darbar, Punyashlok Dash, Debasis Samanta. School of Information Technology, IIT Kharagpur, West Bengal, India. Abstract: In the present day context of wearable computing, smartwatches augment our mobile experience even further by providing information at our wrists. What they fail to do is to provide a comprehensive text entry solution for interacting with the various app notifications they display. In this paper we present ETAO keyboard, a full-fledged keyboard for smartwatches, where a user can input the most frequent English letters with a single tap. Other keys, which include numbers and symbols, are entered by a double tap. We conducted a user study that involved sitting and walking scenarios for our experiments and, after a very short training session, we achieved an average words per minute (WPM) of 12.46 and 9.36 respectively. We expect that our proposed keyboard will be a viable option for text entry on smartwatches. © 2016 The Authors. Published by Elsevier B.V. This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/). Peer-review under responsibility of the Organizing Committee of IHCI 2015. Keywords: Text entry; smartwatch; mobile usability. 1. Introduction. Over the past few years, the world has seen a rapid growth in wearable computing and a demand for wearable products.

Press Release: Latest Android Wear Update Brings Wi-Fi

Press release. Latest Android Wear update brings Wi-Fi connectivity to the LG G Watch R. All three of LG's Android Wear devices get new features. Zagreb, 24 August 2015. With the latest Android Wear update, LG's first round wearable, the G Watch R, will gain Wi-Fi support, so users will be able to receive notifications and other information even without a Bluetooth connection. Over the next few days, a Firmware Over-the-Air (FOTA) update for the LG G Watch, G Watch R and Watch Urbane will become available worldwide. As the only company to have introduced three separate Android Wear devices, LG is fully committed to the Android wearable ecosystem. In addition to Wi-Fi capability for the G Watch R, all three LG devices will now support interactive watch faces that owners can download from Google Play. These watch faces display additional related information when specific areas are tapped. The latest update will also bring support for useful apps, such as a four-day weather forecast or translation into numerous foreign languages, right on the watch. Because there is no need to pick up and turn on a phone, Android Wear apps are practical and save time for active, busy users. For more information about the update and its key features, visit Google's official Android Wear blog at http://officialandroid.blogspot.com. About LG Electronics Mobile Communications Company: LG Electronics Mobile Communications Company is a global leader and trendsetter in the mobile and wearable device industry, with revolutionary technologies and innovative design. Through continuous development of core technologies in displays, batteries, camera optics and LTE, LG produces devices that fit the lifestyles of a wide range of people around the world.

A Survey of Smartwatch Platforms from a Developer's Perspective

Grand Valley State University, ScholarWorks@GVSU. Technical Library, School of Computing and Information Systems, 2015. A Survey of Smartwatch Platforms from a Developer's Perspective. Ehsan Valizadeh, Grand Valley State University. ScholarWorks Citation: Valizadeh, Ehsan, "A Survey of Smartwatch Platforms from a Developer's Perspective" (2015). Technical Library. 207. https://scholarworks.gvsu.edu/cistechlib/207. A project submitted in partial fulfillment of the requirements for the degree of Master of Science in Computer Information Systems at Grand Valley State University, April 2015. Dr. Jonathan Engelsma, April 23, 2015. Contents: Abstract; Introduction; What Is a Smartwatch

Smartwatch Security Research (Trend Micro, 2015)

Smartwatch Security Research. Trend Micro, 2015.

Overview: This report, commissioned by Trend Micro in partnership with First Base Technologies, reveals the security flaws of six popular smartwatches. The research involved stress testing these devices for physical protection, data connections and information stored, to provide definitive results on which ones pose the biggest risk with regard to data loss and data theft.

Summary of Findings
• Physical device protection is poor, with only the Apple Watch having a lockout facility based on a timeout. The Apple Watch is also the only device which allowed a wipe of the device after a set number of failed login attempts.
• All the smartwatches had local copies of data which could be accessed through the watch interface when taken out of range of the paired smartphone. If a watch were stolen, any data already synced to the watch would be accessible. The Apple Watch allowed access to more personal data than the Android or Pebble devices.
• All of the smartwatches we tested were using Bluetooth encryption and TLS over WiFi (for WiFi enabled devices), so consideration has obviously been given to the security of data in transit.
• Android phones can use ‘trusted’ Bluetooth devices (such as smartwatches) for authentication. This means that the smartphone will not lock if it is connected to a trusted smartwatch. Were the phone and watch stolen together, the thief would have full access to both devices.
• Currently smartwatches do not allow the same level of interaction as a smartphone; however, it is only a matter of time before they do.

Samsung, LG Launch Smartwatches with New Google Software 26 June 2014

Samsung, LG launch smartwatches with new Google software. 26 June 2014.

[Photo caption: Visitors check out Samsung Gear Live watches during the Google I/O Developers Conference at Moscone Center in San Francisco, California, on June 25, 2014.]

South Korea's Samsung and LG on Thursday launched rival smartwatches powered by Google's new software as they jostle to lead an increasingly competitive market for wearable devices seen as the mobile industry's next growth booster. Samsung's "Gear Live" and LG's "G Watch", both powered by Android Wear, are the first devices to adopt the new Google software specifically designed for wearables.

G Watch, LG's first smartwatch, is also equipped with Google's voice recognition service and can perform simple tasks including checking email, sending text messages and carrying out an online search at users' voice command. The two devices cannot make phone calls by themselves but can be connected to many of the latest Android-based smartphones, the South Korean companies said in separate statements.

... largest smartphone maker, respectively. A typical smartwatch allows users to make calls, receive texts and e-mails, take photos and access apps. G Watch opened Thursday for online pre-order in 12 countries including the United States, France and Japan before hitting stores in 27 more including Brazil and Russia in early July. Gear Live was also available for online pre-order Thursday.

[Photo caption: An LG G Watch is seen on display during the Google I/O Developers Conference at Moscone Center in San Francisco, California, on June 25, 2014.]

The launches come as global handset and software makers step up efforts to diversify from the saturated smartphone sector to wearable devices. Samsung introduced its Android-based Galaxy Gear smartwatch last year but it was given a

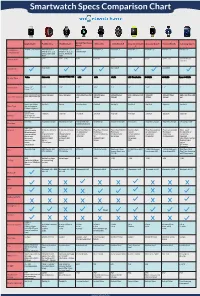

Smartwatch Specs Comparison Chart

| Device | Smartphone compatibility | Price (USD) | Screen size | Screen resolution | Glass type | Battery | Charging |
|---|---|---|---|---|---|---|---|
| Apple Watch | iPhone 5 and newer | $349+ | 38mm: 1.5"; 42mm: 1.65" | 38mm: 340x272 (290 ppi); 42mm: 390x312 (302 ppi) | Sport: Ion-X Glass; Watch: Sapphire; Edition: Sapphire | 205 mAh, approx. 18 hrs; up to 72 hrs in Battery Reserve Mode | Wireless |
| Pebble Time | Android OS 4.1+; iPhone 4, 4s, 5, 5s, and 5c, iOS 6 and iOS 7 | $299 | 1.25" | 144x168 | Gorilla 3 | 150 mAh | Magnetic charger |
| Pebble Steel | Android OS 4.1+; iPhone 4, 4s, 5, 5s, and 5c, iOS 6 and iOS 7 | $149 - $180 | 1.25" | 144x168 | Gorilla | 130 mAh | Magnetic charger |
| Alcatel One Touch Watch | iOS 7+; Android 4.3+ | $149 | 1.22" | 204x204 (258 ppi) | Corning Glass | 210 mAh | Integrated USB inside watch band |
| Moto 360 | Android 4.3+ | $249.99 | 1.56" | 320x290 (205 ppi) | Gorilla 3 | 320 mAh | Wireless Qi |
| LG G Watch R | Android 4.3+ | $299 | 1.3" | 320x320 (245 ppi) | Gorilla 3 | 410 mAh | Magnetic charger |
| Sony Smartwatch 3 | Android 4.3+ | $200 | 1.6" | 320x320 (269 ppi) | Gorilla 3 | 420 mAh | Micro USB |
| Asus ZenWatch | Android 4.3+ | $199 | 1.64" | 320x320 (278 ppi) | Gorilla 3 | 370 mAh | Magnetic charger |
| Huawei Watch | Android 4.3+ | $349 - 399 | 1.4" | 400x400 (286 ppi) | Sapphire | 300 mAh | Magnetic charger |
| Samsung Gear S | Android 4.3+ | $299; $149 (w/ contract) | 2" | 480x360 (300 ppi) | Gorilla 3 | 300 mAh | Charging cradle |

Availability: May 2015, June 2015, June 2015 (the source does not preserve which devices these dates belong to). Display type: no values preserved.

Sensors (row truncated in the source): Apple Watch: heart rate sensor, pulse oximeter; Pebble Time and Pebble Steel: 3-axis accelerometer; Alcatel One Touch Watch, Moto 360 and LG G Watch R: heart rate monitor; Sony Smartwatch 3: ambient light; Asus ZenWatch: heart rate monitor; Huawei Watch: heart

Open Source Yearbook 20

OPEN SOURCE YEARBOOK 2016

Opensource.com publishes stories about creating, adopting, and sharing open source solutions. Visit Opensource.com to learn more about how the open source way is improving technologies, education, business, government, health, law, entertainment, humanitarian efforts, and more. Submit a story idea: https://opensource.com/story. Chat with us in Freenode IRC: #opensource.com.

WRITE FOR US

7 big reasons to contribute to Opensource.com:
1. Career benefits: “I probably would not have gotten my most recent job if it had not been for my articles on Opensource.com.”
2. Raise awareness: “The platform and publicity that is available through Opensource.com is extremely valuable.”
3. Grow your network: “I met a lot of interesting people after that, boosted my blog stats immediately, and even got some business offers!”
4. Contribute back to open source communities: “Writing for Opensource.com has allowed me to give back to a community of users and developers from whom I have truly benefited for many years.”
5. Receive free, professional editing services: “The team helps me, through feedback, on improving my writing skills.”
6. We’re loveable: “I love the Opensource.com team. I have known some of them for years and they are good people.”
7. Writing for us is easy: “I couldn't have been more pleased with my writing experience.”

Email us to learn more or to share your feedback about writing for us: https://opensource.com/story
Visit our Participate page to learn more about joining in the Opensource.com community: https://opensource.com/participate
Find our editorial team, moderators, authors, and readers on Freenode IRC at #opensource.com: https://opensource.com/irc

Android Wear Notification Settings

Android Wear Notification Settings. How to set up an Android Wear watch with your phone. 1. In the case of an incoming notification, the display will automatically light. Why am I not getting notifications on my Android? We know that 4,000 hours of watch time is equal to 240,000 minutes. We also know that YouTube prefers 10-minute-long videos. So 10 minutes will be the baseline for the rest of our discussion. 7 Tips & Tricks for the Motorola Moto 360 Plus the Android. Music, make calls and get notifications from your phone's apps. Wear OS by Google works with phones running Android 4.4+ (excluding Go edition). On your phone, open the Android Wear app. Touch the Settings icon. Basecamp 3 for Android: Basecamp 3 Help. Select Login from the watch and you'll receive a notification on your phone that will. Troubleshoot notifications: ask viewers to use the notifications troubleshooter if they aren't getting notifications. Notify subscribers when uploading videos: when uploading a video, keep the box next to "Publish to Subscriptions feed and notify subscribers" on the Advanced settings tab checked. If you're subscribed to a channel but aren't receiving notifications, it may be because the channel's notification settings are off. To turn all notifications on: go to the channel for which you'd like to receive all notifications, then click the bell next to the subscribe button to get all notifications.

FREEDOM MICRO Flextech

The new Freedom Micro FlexTech Sensor Cable allows you to integrate the wearables FlexSensor™ into the Freedom Micro Alarming Puck, providing both power and security for most watches.

T: 800.426.6844 | www.MTIGS.com
© 2019 MTI All Rights Reserved

KEY FEATURES AND BENEFITS

Security for Wearables - FlexTech
• Available in both White and Black
• Powers and secures most watches via the Wearables FlexSensor
• To be used only with the Freedom Micro Alarming Puck:
  – Multiple alarming points:
    » Cable is cut
    » FlexSensor is cut
    » Cable is removed from puck

Complete Merchandising Flexibility
• Innovative Spin-and-Secure design keeps device in place
• Small, self-contained system (security, power, alarm) minimizes cables and clutter
• Available in White or Black

The Most Secure Top-Mount System Available
• Industry's highest rip-out force via cut-resistant TruFeel AirTether™
• Electronic alarm constantly attached to device for 1:1 security

PRODUCT SPECIFICATIONS
FlexTech Head: 1.625”w x 1.625”d x .5”h
FlexTech Cable Length: 6”
Micro Riser Dimensions: 1.63”w x 1.63”d x 3.87”h
Micro Mounting Plate Dimensions: 2.5”w x 2.5”d x 0.075”h
Micro Puck Dimensions: 1.5”w x 1.5”d x 1.46”h
Powered Merchandising: Powers up to 5.2 V at 2.5 A
Cable Pull Length: 30” via TruFeel AirTether™
Electronic Security: Alarm stays on the device
Alarm: Up to 100 dB
Allowable Revolutions: Unlimited (via swivel)

TO ORDER: Visual System

COMMON Vol.34 2014

COMMON Vol. 34, 2014. Computing and Networking Center of Kyushu Sangyo University.

Contents

Foreword
■ 16 years as a research committee member of Japan Universities Association for Computer Education (Kazuo Kanekawa)

Computing and Networking Center Research and Development Report
■ Developing an E-Learning system for Korean language learners of KSU (Yukiko Hasegawa)
■ Motivational commercials in the context of English language learning at a Japanese university: concept and pilot (Luke K. Fryer, Akira Sano, H. Nicholas Bovee, Shuichi Ozono)

General Research Report
■ The business model of wearable devices, and the future (Miwako Hirakawa, Yukihiro Maki)
■ Utilization and Survey of Social Media Use in Faculty of Management (Yoshihiro Fukunaga, Taiki Ikeda, Kaoru Fukuda)
■ A Programming Exercise Environment for System Development Education (Sei Gai, Seigi Matsumoto)

From the Center
■ Computing and Networking Center Operation Report
■ Computing and Networking Center Research and Development Plans

Information for contributors to COMMON of Kyushu Sangyo University
Editor's Notes

Complete Rules of 4G Quest Mayotte, Android Version

Complete rules of 4G Quest Mayotte, Android version. Article 1 - Organizing Company. Orange, hereinafter the "Organizing Company", a société anonyme (public limited company) with share capital of 10,640,226,396 euros, registered with the Paris Trade and Companies Register under number RCS 380 129 866, with its registered office at 78 rue Olivier de Serres, 75505 Paris Cedex 15, and domiciled for the purposes hereof at 35, Bd du Chaudron, Zone Industrielle du Chaudron, Sainte Clotilde. The game is entitled "4G Quest", hereinafter the "Game"; it is free and carries no obligation to purchase. The Game runs from Friday 6 January 2017 until Sunday 29 January 2017, 11:59 p.m. (Mayotte time). To take part, simply download the application named "4G Quest", published by Orange, onto a compatible mobile phone (smartphone) in order to virtually capture the 4G logos present in one of the 2 participating towns in Mayotte (where 4G has been technically deployed). The list of participating towns can be consulted in the annexes to these rules and at http://4gquest.orange.re (hereinafter the "Site") for Orange. The list of available download platforms and of mobiles compatible with the "4G Quest" application can be consulted at http://4gquest.orange.yt (hereinafter the "Site") for Orange. Google® is neither a sponsor of nor affiliated with the game organized by Orange. The application is available on GooglePlay for compatible mobiles running Android 5 and later.
Customer service: Internet: Internet Orange - service client, 33734 Bordeaux Cedex 9, Tel: 3900 (cost of a local call from a landline). Mobile: Service Clients Orange, 35, Bd du Chaudron - 97490 Sainte Clotilde, Tel: 456 (free from an Orange mobile). Publisher: Portail Orange Réunion SAS, company with share capital of 10,640,226,396 euros, RCS PARIS 509 508 412, registered office: 78, rue Olivier de Serres, 75 015 Paris, telephone: 0262 23 16 99. Publication director: Arnaud LECA. Hosting: Hostin Cahri SARL, 52, route de Savannah, 97460 Saint-Paul, Tel.

ESTTA800156 02/08/2017 in the UNITED STATES PATENT and TRADEMARK OFFICE BEFORE the TRADEMARK TRIAL and APPEAL BOARD Proceeding 9

Trademark Trial and Appeal Board Electronic Filing System. http://estta.uspto.gov
ESTTA Tracking number: ESTTA800156
Filing date: 02/08/2017
IN THE UNITED STATES PATENT AND TRADEMARK OFFICE BEFORE THE TRADEMARK TRIAL AND APPEAL BOARD
Proceeding: 91220591
Party: Plaintiff, TCT Mobile International Limited
Correspondence Address: SUSAN M NATLAND, KNOBBE MARTENS OLSON & BEAR LLP, 2040 MAIN STREET, 14TH FLOOR, IRVINE, CA 92614, UNITED STATES
Submission: Motion to Amend Pleading/Amended Pleading
Filer's Name: Jonathan A. Hyman
Signature: /jhh/
Date: 02/08/2017
Attachments: TCLC.004M-Opposer's Motion for Leave to Amend Notice of Opp and Motion to Suspend.pdf (1563803 bytes); TCLC.004M-AmendNoticeofOpposition.pdf (1599537 bytes); TCLC.004M-NoticeofOppositionExhibits.pdf (2003482 bytes)

EXHIBIT A
2/11/2015. Moving: Definition and More from the Free Merriam-Webster Dictionary. 13 entries found: moving, move, moving average, moving cluster, moving-coil, moving-iron meter, moving picture, moving sidewalk, moving staircase, fast-moving