STREAMS Vs. Sockets Performance Comparison for UDP

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Mac OS 8 Update

K Service Source Mac OS 8 Update Known problems, Internet Access, and Installation Mac OS 8 Update Document Contents - 1 Document Contents • Introduction • About Mac OS 8 • About Internet Access What To Do First Additional Software Auto-Dial and Auto-Disconnect Settings TCP/IP Connection Options and Internet Access Length of Configuration Names Modem Scripts & Password Length Proxies and Other Internet Config Settings Web Browser Issues Troubleshooting • About Mac OS Runtime for Java Version 1.0.2 • About Mac OS Personal Web Sharing • Installing Mac OS 8 • Upgrading Workgroup Server 9650 & 7350 Software Mac OS 8 Update Introduction - 2 Introduction Mac OS 8 is the most significant update to the Macintosh operating system since 1984. The updated system gives users PowerPC-native multitasking, an efficient desktop with new pop-up windows and spring-loaded folders, and a fully integrated suite of Internet services. This document provides information about Mac OS 8 that supplements the information in the Mac OS installation manual. For a detailed description of Mac OS 8, useful tips for using the system, troubleshooting, late-breaking news, and links for online technical support, visit the Mac OS Info Center at http://ip.apple.com/infocenter. Or browse the Mac OS 8 topic in the Apple Technical Library at http:// tilsp1.info.apple.com. Mac OS 8 Update About Mac OS 8 - 3 About Mac OS 8 Read this section for information about known problems with the Mac OS 8 update and possible solutions. Known Problems and Compatibility Issues Apple Language Kits and Mac OS 8 Apple's Language Kits require an updater for full functionality with this version of the Mac OS. -

Libioth (Slides)

libioth The definitive API for the Internet of Threads Renzo Davoli – Michael Goldweber "niversit$ o% Bolo&na '(taly) – *avier "niver#it$ (Cin!innati, "SA) ,irt-alS.-are Micro/ernel DevRoo0 1 © 2021 Davoli-Goldweber libioth. CC-BY-SA 4.0 (nternet of Thread# (IoTh) service1.company.com → 2001:1:2::1 service2.company.com → 2001:1:2::2 host.company.com→11.12.13.14 processes service3.company.com → 2001:1:2::3 2 © 2021 Davoli-Goldweber libioth. CC-BY-SA 4.0 IoTh vs Iold What is an end-node o% the (nternet4 (t depends on what is identified b$ an (P addre##. ● (nternet o% 1hreads – (oTh – 7roce##e#8threads are a-tonomou# nodes of the (nternet ● (nternet o% 9e&ac$ Devi!es 'in brie% (-old in this presentation) – (nternet o% :ost# – Internet of ;etwork Controller#. – (nternet o% ;a0e#5a!e# 2 © 2021 Davoli-Goldweber libioth. CC-BY-SA 4.0 7,M – IoTh - </ernel 4 © 2021 Davoli-Goldweber libioth. CC-BY-SA 4.0 7,M – IoTh - </ernel ● icro/ernel, Internet o% Threads, Partial Virtual a!hines have the co00on goal to create independent code units i05lementing services ● 1hey are all against monolithic i05lementation# ● 1he challenge o% this talk is to !reate !ontacts, e>5loit paralleli#0#+ that allow to share res-lts, A7I, code. = © 2021 Davoli-Goldweber libioth. CC-BY-SA 4.0 Network Sta!/ ● API to application layer TCP-IP stack TCP UDP IPv4 IPv6 ICMP(v4,v6) ● API (NPI) to data-link – (e.g. libioth uses VDE: libvdeplug) ? © 2021 Davoli-Goldweber libioth. CC-BY-SA 4.0 IoTh & </ernel User process User process TCP-IP stack TCP UDP TCP/IP stack process IPv4 IPv6 ICMP(v4,v6) TCP-IP stack TCP UDP IPv4 IPv6 ICMP(v4,v6) Data-link network server Data-link network server @ © 2021 Davoli-Goldweber libioth. -

Transport Interfaces Programming Guide

Transport Interfaces Programming Guide Sun Microsystems, Inc. 2550 Garcia Avenue Mountain View, CA 94043-1100 U.S.A. Part No: 802-5886 August 1997 Copyright 1997 Sun Microsystems, Inc. 901 San Antonio Road, Palo Alto, California 94303-4900 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, SunSoft, SunDocs, SunExpress, and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. The OPEN LOOK and SunTM Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun’s licensees who implement OPEN LOOK GUIs and otherwise comply with Sun’s written license agreements. -

SYSTEM V RELEASE 4 Migration Guide

- ATlaT UN/~ SYSTEM V RELEASE 4 Migration Guide UNIX Software Operation Copyright 1990,1989,1988,1987,1986,1985,1984,1983 AT&T All Rights Reserved Printed In USA Published by Prentice-Hall, Inc. A Division of Simon & Schuster Englewood Cliffs, New Jersey 07632 No part of this publication may be reproduced or transmitted in any form or by any means-graphic, electronic, electrical, mechanical, or chemical, including photocopying, recording in any medium, tap ing, by any computer or information storage and retrieval systems, etc., without prior permissions in writing from AT&T. IMPORTANT NOTE TO USERS While every effort has been made to ensure the accuracy of all information in this document, AT&T assumes no liability to any party for any loss or damage caused by errors or omissions or by state ments of any kind in this document, its updates, supplements, or special editions, whether such er rors are omissions or statements resulting from negligence, accident, or any other cause. AT&T furth er assumes no liability arising out of the application or use of any product or system described herein; nor any liability for incidental or consequential damages arising from the use of this docu ment. AT&T disclaims all warranties regarding the information contained herein, whether expressed, implied or statutory, including implied warranties of merchantability or fitness for a particular purpose. AT&T makes no representation that the interconnection of products in the manner described herein will not infringe on existing or future patent rights, nor do the descriptions contained herein imply the granting or license to make, use or sell equipment constructed in accordance with this description. -

Chapter 1. Origins of Mac OS X

1 Chapter 1. Origins of Mac OS X "Most ideas come from previous ideas." Alan Curtis Kay The Mac OS X operating system represents a rather successful coming together of paradigms, ideologies, and technologies that have often resisted each other in the past. A good example is the cordial relationship that exists between the command-line and graphical interfaces in Mac OS X. The system is a result of the trials and tribulations of Apple and NeXT, as well as their user and developer communities. Mac OS X exemplifies how a capable system can result from the direct or indirect efforts of corporations, academic and research communities, the Open Source and Free Software movements, and, of course, individuals. Apple has been around since 1976, and many accounts of its history have been told. If the story of Apple as a company is fascinating, so is the technical history of Apple's operating systems. In this chapter,[1] we will trace the history of Mac OS X, discussing several technologies whose confluence eventually led to the modern-day Apple operating system. [1] This book's accompanying web site (www.osxbook.com) provides a more detailed technical history of all of Apple's operating systems. 1 2 2 1 1.1. Apple's Quest for the[2] Operating System [2] Whereas the word "the" is used here to designate prominence and desirability, it is an interesting coincidence that "THE" was the name of a multiprogramming system described by Edsger W. Dijkstra in a 1968 paper. It was March 1988. The Macintosh had been around for four years. -

Improving Networking

IMPERIAL COLLEGE LONDON FINALYEARPROJECT JUNE 14, 2010 Improving Networking by moving the network stack to userspace Author: Matthew WHITWORTH Supervisor: Dr. Naranker DULAY 2 Abstract In our modern, networked world the software, protocols and algorithms involved in communication are among some of the most critical parts of an operating system. The core communication software in most modern systems is the network stack, but its basic monolithic design and functioning has remained unchanged for decades. Here we present an adaptable user-space network stack, as an addition to my operating system Whitix. The ideas and concepts presented in this report, however, are applicable to any mainstream operating system. We show how re-imagining the whole architecture of networking in a modern operating system offers numerous benefits for stack-application interactivity, protocol extensibility, and improvements in network throughput and latency. 3 4 Acknowledgements I would like to thank Naranker Dulay for supervising me during the course of this project. His time spent offering constructive feedback about the progress of the project is very much appreciated. I would also like to thank my family and friends for their support, and also anybody who has contributed to Whitix in the past or offered encouragement with the project. 5 6 Contents 1 Introduction 11 1.1 Motivation.................................... 11 1.1.1 Adaptability and interactivity.................... 11 1.1.2 Multiprocessor systems and locking................ 12 1.1.3 Cache performance.......................... 14 1.2 Whitix....................................... 14 1.3 Outline...................................... 15 2 Hardware and the LDL 17 2.1 Architectural overview............................. 17 2.2 Network drivers................................. 18 2.2.1 Driver and device setup....................... -

Mac OS X: an Introduction for Support Providers

Mac OS X: An Introduction for Support Providers Course Information Purpose of Course Mac OS X is the next-generation Macintosh operating system, utilizing a highly robust UNIX core with a brand new simplified user experience. It is the first successful attempt to provide a fully-functional graphical user experience in such an implementation without requiring the user to know or understand UNIX. This course is designed to provide a theoretical foundation for support providers seeking to provide user support for Mac OS X. It assumes the student has performed this role for Mac OS 9, and seeks to ground the student in Mac OS X using Mac OS 9 terms and concepts. Author: Robert Dorsett, manager, AppleCare Product Training & Readiness. Module Length: 2 hours Audience: Phone support, Apple Solutions Experts, Service Providers. Prerequisites: Experience supporting Mac OS 9 Course map: Operating Systems 101 Mac OS 9 and Cooperative Multitasking Mac OS X: Pre-emptive Multitasking and Protected Memory. Mac OS X: Symmetric Multiprocessing Components of Mac OS X The Layered Approach Darwin Core Services Graphics Services Application Environments Aqua Useful Mac OS X Jargon Bundles Frameworks Umbrella Frameworks Mac OS X Installation Initialization Options Installation Options Version 1.0 Copyright © 2001 by Apple Computer, Inc. All Rights Reserved. 1 Startup Keys Mac OS X Setup Assistant Mac OS 9 and Classic Standard Directory Names Quick Answers: Where do my __________ go? More Directory Names A Word on Paths Security UNIX and security Multiple user implementation Root Old Stuff in New Terms INITs in Mac OS X Fonts FKEYs Printing from Mac OS X Disk First Aid and Drive Setup Startup Items Mac OS 9 Control Panels and Functionality mapped to Mac OS X New Stuff to Check Out Review Questions Review Answers Further Reading Change history: 3/19/01: Removed comment about UFS volumes not being selectable by Startup Disk. -

Post Sockets - a Modern Systems Network API Mihail Yanev (2065983) April 23, 2018

Post Sockets - A Modern Systems Network API Mihail Yanev (2065983) April 23, 2018 ABSTRACT In addition to providing native solutions for problems, es- The Berkeley Sockets API has been the de-facto standard tablishing optimal connection with a Remote point or struc- systems API for networking. However, designed more than tured data communication, we have identified that a com- three decades ago, it is not a good match for the networking mon issue with many of the previously provided networking applications today. We have identified three different sets of solutions has been leaving the products vulnerable to code problems in the Sockets API. In this paper, we present an injections and/or buffer overflow attacks. The root cause implementation of Post Sockets - a modern API, proposed by for such problems has mostly proved to be poor memory Trammell et al. that solves the identified limitations. This or resource management. For this reason, we have decided paper can be used for basis of evaluation of the maturity of to use the Rust programming language to implement the Post Sockets and if successful to promote its introduction as new API. Rust is a programming language, that provides a replacement API to Berkeley Sockets. data integrity and memory safety guarantees. It uses region based memory, freeing the programmer from the responsibil- ity of manually managing the heap resources. This in turn 1. INTRODUCTION eliminates the possibility of leaving the code vulnerable to Over the years, writing networked applications has be- resource management errors, as this is handled by the lan- come increasingly difficult. -

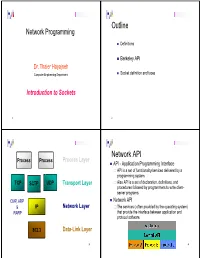

Outline Network Programming

Outline Network Programming Definitions Berkeley API Dr. Thaier Hayajneh Computer Engineering Department Socket definition and types Introduction to Sockets 1 2 Network API Process Process Process Layer API - Application Programming Interface API is a set of functionality/services delivered by a programming system. TCP SCTP UDP Transppyort Layer Also API is a set of declaration, definitions, and procedflldbdures followed by programmers titlitto write client- server programs. ICMP, ARP Network API & IP Network Layer The services ( often provided by the operating system) RARP that provide the interface between application and protocol software . 802.3 Data-Link Layer 3 4 Network API Network API wish list Generic Programming Interface. Internet Support multiple communication protocol suites OSI model Application protocol suite details (families). Application User Address (endpoint) representation independence. processor Presentation Application Provide special services for Client and Server Session Transport TCP UDP Support for message oriented and connection Network IPv4, IPv6 oriented communication. kernel Data link Data link Work with existing I/O services Physical Physical Communications Operating System independence details Presentation layer services 5 6 TCP/IP Client-Server Model TCP/IP does not include an API definition. Client 1 Server Client 2 There are a variety of APIs for use with TCP/IP: Client 3 Sockets by Berkeley XTI (X/Open Transport Interface) by AT&T One side of communication is client, and the other side is server Winsock - Windows Sockets API by Microsoft Server waits for a client request to arrive MacTCP / Open Transport by Apple Server processes the client request and sends the response back to the client Iterative or concurrent 7 8 Functions needed: Berkeley Sockets Sppyecify local and remote communication A socket is an abstract representation of a communication endpoint. -

Introduction to RAW-Sockets Jens Heuschkel, Tobias Hofmann, Thorsten Hollstein, Joel Kuepper

Introduction to RAW-sockets Jens Heuschkel, Tobias Hofmann, Thorsten Hollstein, Joel Kuepper 16.05.2017 Technical Report No. TUD-CS-2017-0111 Technische Universität Darmstadt Telecooperation Report No. TR-19, The Technical Reports Series of the TK Research Division, TU Darmstadt ISSN 1864-0516 http://www.tk.informatik.tu-darmstadt.de/de/publications/ Introduction to RAW-sockets by Heuschkel, Jens Hofmann, Tobias Hollstein, Thorsten Kuepper, Joel May 17, 2017 Abstract This document is intended to give an introduction into the programming with RAW-sockets and the related PACKET-sockets. RAW-sockets are an additional type of Internet socket available in addition to the well known DATAGRAM- and STREAM-sockets. They do allow the user to see and manipulate the information used for transmitting the data instead of hiding these details, like it is the case with the usually used STREAM- or DATAGRAM sockets. To give the reader an introduction into the subject we will first give an overview about the different APIs provided by Windows, Linux and Unix (FreeBSD, Mac OS X) and additional libraries that can be used OS-independent. In the next section we show general problems that have to be addressed by the programmer when working with RAW-sockets. We will then provide an introduction into the steps necessary to use the APIs or libraries, which functionality the different concepts provide to the programmer and what they provide to simplify using RAW and PACKET-sockets. This section includes examples of how to use the different functions provided by the APIs. Finally in the additional material we will give some complete examples that show the concepts and can be used as a basis to write own programs. -

Fast Linux I/O in the Unix Tradition

— preprint only: final version will appear in OSR, July 2008 — PipesFS: Fast Linux I/O in the Unix Tradition Willem de Bruijn Herbert Bos Vrije Universiteit Amsterdam Vrije Universiteit Amsterdam and NICTA [email protected] [email protected] ABSTRACT ory wall” [26]). To improve throughput, it is now essential This paper presents PipesFS, an I/O architecture for Linux to avoid all unnecessary memory operations. Inefficient I/O 2.6 that increases I/O throughput and adds support for het- primitives exacerbate the effects of the memory wall by in- erogeneous parallel processors by (1) collapsing many I/O curring unnecessary copying and context switching, and as interfaces onto one: the Unix pipeline, (2) increasing pipe a result of these cache misses. efficiency and (3) exploiting pipeline modularity to spread computation across all available processors. Contribution. We revisit the Unix pipeline as a generic model for streaming I/O, but modify it to reduce overhead, PipesFS extends the pipeline model to kernel I/O and com- extend it to integrate kernel processing and complement it municates with applications through a Linux virtual filesys- with support for anycore execution. We link kernel and tem (VFS), where directory nodes represent operations and userspace processing through a virtual filesystem, PipesFS, pipe nodes export live kernel data. Users can thus interact that presents kernel operations as directories and live data as with kernel I/O through existing calls like mkdir, tools like pipes. This solution avoids new interfaces and so unlocks all grep, most languages and even shell scripts. -

Linux Fast-STREAMS Installation and Reference Manual Version 0.9.2 Edition 4 Updated 2008-10-31 Package Streams-0.9.2.4

Linux Fast-STREAMS Installation and Reference Manual Version 0.9.2 Edition 4 Updated 2008-10-31 Package streams-0.9.2.4 Brian Bidulock <[email protected]> for The OpenSS7 Project <http://www.openss7.org/> Copyright c 2001-2008 OpenSS7 Corporation <http://www.openss7.com/> Copyright c 1997-2000 Brian F. G. Bidulock <[email protected]> All Rights Reserved. Published by OpenSS7 Corporation 1469 Jefferys Crescent Edmonton, Alberta T6L 6T1 Canada This is texinfo edition 4 of the Linux Fast-STREAMS manual, and is consistent with streams 0.9.2. This manual was developed under the OpenSS7 Project and was funded in part by OpenSS7 Corporation. Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this manual under the con- ditions for verbatim copying, provided that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this manual into another lan- guage, under the same conditions as for modified versions. i Short Contents Preface ::::::::::::::::::::::::::::::::::::::::::::::::: 1 Quick Start Guide :::::::::::::::::::::::::::::::::::::::: 9 1 Introduction :::::::::::::::::::::::::::::::::::::::: 15 2 Objective ::::::::::::::::::::::::::::::::::::::::::: 17 3 Reference ::::::::::::::::::::::::::::::::::::::::::: 21 4 Development ::::::::::::::::::::::::::::::::::::::::