A Multi-Objective Approach to Tactical Maneuvering Within Real Time Strategy Games Christopher D

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Tribes: a New Turn-Based Strategy Game for AI Research

Tribes: A New Turn-Based Strategy Game for AI Research. Diego Perez-Liebana, Yu-Jhen Hsu, Stavros Emmanouilidis, Bobby Dewan Akram Khaleque, Raluca D. Gaina. School of Electronic Engineering and Computer Science, Queen Mary University of London, UK.

Abstract: This paper introduces Tribes, a new turn-based strategy game framework. Tribes is a multi-player, multi-agent, stochastic and partially observable game that involves strategic and tactical combat decisions. A good playing strategy requires the management of a technology tree, build orders and economy. The framework provides a Forward Model, which can be used by Statistical Forward Planning methods. This paper describes the framework and the opportunities for Game AI research it brings. We further provide an analysis on the action space of this game, as well as benchmarking a series of agents (rule based, one step look-ahead, Monte Carlo, Monte Carlo Tree Search, and Rolling Horizon Evolution) to study their

two main methods that provide the functionality required by SFP (next - to advance the game state, and copy - to clone it) can be computationally expensive in execution time and memory. That is one of the reasons why the more complex environments (e.g., StarCraft II (Blizzard Entertainment 2010)) do not provide a FM API for AI agents. There has been some previous work on frameworks that incorporate FM access for SFP in strategy games. Some of these benchmarks are concerned with the management of multiple units, such as HeroAIcademy (Justesen, Mahlmann, and Togelius 2016) and Bot Bowl (Justesen et al. 2019). Santiago Ontañón’s µRTS (Ontañón et al. -

M.A.X Pc Game Download Frequently Asked Questions

m.a.x pc game download Frequently Asked Questions. Simple! To upload and share games from GOG.com. What is the easiest way to download or extract files? Please use JDownloader 2 to download game files and 7-Zip to extract them. How are download links prevented from expiring? All games are available to be voted on for a re-upload 30 days after they were last uploaded to guard against dead links. How can I support the site? Finding bugs is one way! If you run into any issues or notice anything out of place, please open an issue on GitHub. Can I donate? Donate. Do you love this site? Then donate to help keep it alive! So, how can YOU donate? What are donations used for exactly? Each donation is used to help cover operating expenses (storage server, two seedboxes, VPN tunnel and hosting). All games found on this site are archived on a high-speed storage server in a data center. We are currently using over 7 terabytes of storage. We DO NOT profit from any donations. What is the total cost to run the site? Total expenses are €91 (or $109) per month. How can I donate? You may donate via PayPal, credit/debit card, Bitcoin and other cryptocurrencies. See below for more information on each option. M.A.X.: Mechanized Assault and Exploration. Very much in the style of classic RTS games such as Dune 2, KKND and Command & Conquer, this is an enjoyable little game which doesn't score many points for being original, but does get a few for simply being well done. -

Special Tactics: a Bayesian Approach to Tactical Decision-Making Gabriel Synnaeve, Pierre Bessière

Special Tactics: a Bayesian Approach to Tactical Decision-making. Gabriel Synnaeve, Pierre Bessière.

To cite this version: Gabriel Synnaeve, Pierre Bessière. Special Tactics: a Bayesian Approach to Tactical Decision-making. Proceedings of the IEEE Conference on Computational Intelligence and Games, Sep 2012, Granada, Spain. pp.978-1-4673-1194-6/12/ 409-416. hal-00752841

HAL Id: hal-00752841 https://hal.archives-ouvertes.fr/hal-00752841 Submitted on 16 Nov 2012

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Special Tactics: a Bayesian Approach to Tactical Decision-making. Gabriel Synnaeve ([email protected]) and Pierre Bessière ([email protected])

Abstract: We describe a generative Bayesian model of tactical attacks in strategy games, which can be used both to predict attacks and to take tactical decisions. This model is designed to easily integrate and merge information from other (probabilistic) estimations and heuristics. In particular, it handles uncertainty in enemy units’ positions as well as their probable tech tree. We claim that learning, being it supervised or through reinforcement, adapts to skewed data sources.

[Figure labels: intention; partial information; time to switch behaviors; Strategy (tech tree, army composition), 3 min; Tactics (army positions), 30 sec] -

Game Development for Computer Science Education

Game Development for Computer Science Education. Chris Johnson (University of Wisconsin, Eau Claire, [email protected]); Monica McGill (Bradley University, [email protected]); Durell Bouchard (Roanoke College, [email protected]); Michael K. Bradshaw (Centre College, michael.bradshaw@centre.edu); Víctor A. Bucheli (Universidad del Valle, victor.bucheli@correounivalle.edu.co); Laurence D. Merkle (Air Force Institute of Technology, laurence.merkle@afit.edu); Michael James Scott (Falmouth University, [email protected]); Z Sweedyk (Harvey Mudd College, [email protected]); J. Ángel Velázquez-Iturbide (Universidad Rey Juan Carlos, [email protected]); Zhiping Xiao (University of California at Berkeley, [email protected]); Ming Zhang (Peking University, [email protected]).

ABSTRACT: Games can be a valuable tool for enriching computer science education, since they can facilitate a number of conditions that promote learning: student motivation, active learning, adaptivity, collaboration, and simulation. Additionally, they provide the instructor the ability to collect learning metrics with relative ease. As part of 21st Annual Conference on Innovation and Technology in Computer Science Education (ITiCSE 2016), the Game Development for Computer Science Education working group convened to examine the current role games play in computer science (CS) education, including where and how they fit into CS education.

cation, including where and how they fit into CS education. To guide our discussions and analysis, we began with the following question: in what ways can games be a valuable tool for enriching computer science education? In our work performed prior to our first face-to-face meeting, we reviewed over 120 games designed to teach computing concepts (which is available for separate download [5]) and reviewed several dozen papers related to game-based learning (GBL) for computing. Hainey [57] found that there is “a dearth of empirical evidence in the fields of computer science, software engineering and information systems to -

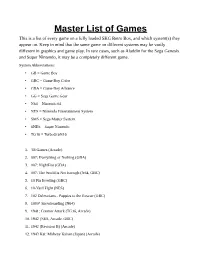

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (TG16, Arcade) 10. 1942 (NES, Arcade, GBC) 11. 1942 (Revision B) (Arcade) 12. 1943 Kai: Midway Kaisen (Japan) (Arcade) 13. 1943: Kai (TG16) 14. 1943: The Battle of Midway (NES, Arcade) 15. 1944: The Loop Master (Arcade) 16. 1999: Hore, Mitakotoka! Seikimatsu (NES) 17. 19XX: The War Against Destiny (Arcade) 18. 2 on 2 Open Ice Challenge (Arcade) 19. 2010: The Graphic Action Game (Colecovision) 20. 2020 Super Baseball (SNES, Arcade) 21. 21-Emon (TG16) 22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 23. 3 Count Bout (Arcade) 24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 25. 3-D Tic-Tac-Toe (Atari 2600) 26. 3-D Ultra Pinball: Thrillride (GBC) 27. -

Evaluating the Use of Particle-Spring Systems in the Conceptual Design of Grid Shell Structures By

Evaluating the Use of Particle-Spring Systems in the Conceptual Design of Grid Shell Structures by Trevor B. Bertin. B.S. Civil Engineering, Worcester Polytechnic Institute, 2011. Submitted to the Department of Civil and Environmental Engineering in partial fulfillment of the requirements for the degree of Master of Engineering in Civil and Environmental Engineering at the Massachusetts Institute of Technology, June 2013. ©2013 Trevor Bertin. All Rights Reserved. The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created.

Signature of Author: Department of Civil and Environmental Engineering, May 10, 2013. Certified by: John A. Ochsendorf, Professor of Building Technology and Civil and Environmental Engineering, Thesis Supervisor. Accepted by: Heidi M. Nepf, Chair, Departmental Committee for Graduate Students.

Evaluating the Use of Particle-Spring Systems in the Conceptual Design of Grid Shell Structures by Trevor B. Bertin. Submitted to the Department of Civil and Environmental Engineering on May 10, 2013 in Partial Fulfillment of the Requirements for the Degree of Master of Engineering in Civil and Environmental Engineering.

ABSTRACT: This thesis evaluates particle-spring systems as conceptual design tools in an effort to create efficient grid shell structures. Currently many simulation tools are available to create representations of intricate geometries and forms. However, these forms can become highly complex and challenging upon their realization. A lack of understanding of these forms leads to structures that cannot support their corresponding loads due to their shape, boundary conditions or edge conditions. -

A History of Real-Time Strategy Gameplay from Decryption to Prediction: Introducing the Actional Statement

A History of Real-Time Strategy Gameplay From Decryption to Prediction: Introducing the Actional Statement. Simon Dor, Université de Montréal.

It is quite usual in strategy games to claim that strategies have a history of their own, chess being the canonical example (Murray 2002). The common strategies of a certain game, often called the “metagame”, change over time and can be retraced from a historical perspective. StarCraft II’s (Blizzard Entertainment, 2010-) community goes as far as to write histories of specific matchups (Alejandrisha et al. 2012), but every strategy game in some regards opens a space where gameplay can evolve. A 2009 walkthrough of the 1994 game Warcraft: Orcs and Humans (Blizzard Entertainment) states that the “reason most people think this game is so hard, is because they try to play it like an RTS [Real-Time Strategy game]” (Boehmer 2009, §2.03). It is quite surprising today to read this claim, considering Warcraft is widely regarded as one of the pioneers of the RTS genre, alongside its earlier Westwood counterpart, Dune II: The Building of A Dynasty (Westwood Studios, 1992). From the perspective of mapping a history of the RTS genre, this kind of statement is quite problematic. Playing an RTS in 2009 is not like playing a strategy game in 1994, but how can we as researchers take into account a historical distance in playing habits? Warcraft’s difficulty was probably raised retrospectively, since playing habits acquired in subsequent games induce certain types of actions that do not necessarily favor victory in earlier releases. -

Identifying Players and Predicting Actions from RTS Game Replays

Identifying Players and Predicting Actions from RTS Game Replays. Christopher Ballinger, Siming Liu and Sushil J. Louis.

Abstract: This paper investigates the problem of identifying a Real-Time Strategy game player and predicting what a player will do next based on previous actions in the game. Being able to recognize a player’s playing style and their strategies helps us learn the strengths and weaknesses of a specific player, devise counter-strategies that beat the player, and eventually helps us to build better game AI. We use machine learning algorithms in the WEKA toolkit to learn how to identify a StarCraft II player and predict the next move of the player from features extracted from game replays. However, StarCraft II does not have an interface that lets us get all game state information like the BWAPI provides for StarCraft: Broodwar, so the features we can use are limited. Our results reveal that using a J48 decision tree correctly identifies a specific player out of forty-one players 75% of the time.

StarCraft II, seen in Figure 1, is one of the most popular multi-player RTS games with professional player leagues and world wide tournaments [2]. In South Korea, there are thirty-two professional StarCraft II teams with top players making six-figure salaries and the average professional gamer making more than the average Korean [3]. StarCraft II’s popularity makes obtaining large amounts of different combat scenarios for our research easier, as the players themselves create large databases of professional and amateur replays and make them freely available on the Internet [4]. However, unlike StarCraft: Broodwar, which has the StarCraft: Brood War API (BWAPI) to provide detailed game state information -

Disparo En Red 05 Disparo En Red

University of South Florida Scholar Commons. Digital Collection - Science Fiction & Fantasy. Digital Collection - Science Fiction & Fantasy Publications. 1-9-2005. Disparo en Red 05. Disparo En Red.

Follow this and additional works at: http://scholarcommons.usf.edu/scifistud_pub Part of the Fiction Commons.

Scholar Commons Citation: Disparo En Red, "Disparo en Red 05" (2005). Digital Collection - Science Fiction & Fantasy Publications. Paper 179. http://scholarcommons.usf.edu/scifistud_pub/179

This Journal is brought to you for free and open access by the Digital Collection - Science Fiction & Fantasy at Scholar Commons. It has been accepted for inclusion in Digital Collection - Science Fiction & Fantasy Publications by an authorized administrator of Scholar Commons. For more information, please contact [email protected].

TODAY: 9 January 2005. DISPARO EN RED: Electronic newsletter of science fiction and fantasy. Published fortnightly and completely free. Editors: darthmota, Jartower. Contributors: Taller de Creación ESPIRAL (science fiction and fantasy writing workshop), Anabel Enriquez Piñeiro, Juan Pablo Noroña, Jorge Enrique Lage.

0. CONTENTS: 1. Quote of the day: J.R.R. Tolkien. 2. Article: Anteproyecto para un canon de la CF (Draft for a canon of SF), César Mallorquí. 3. Classic story: La Puerta del Laberinto, Michael Ende. 4. Story made in Cuba: Los que van a morir, Erick Mota. 5. Curiosities: advice from Bruce Sterling. 6. Reviews: Dune, observations on the work of Frank Herbert, by Yeray Rodríguez Domínguez. 7. How to contact us?

1. QUOTE OF THE DAY: I suppose I am a reactionary. The mainstream of contemporary literature is very boring, isn't it? I am offering a healthy change of diet. J.R.R. -

Outcome Prediction and Hierarchical Models in Real-Time Strategy Games

Outcome Prediction and Hierarchical Models in Real-Time Strategy Games by Adrian Marius Stanescu. A thesis submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Department of Computing Science, University of Alberta. © Adrian Marius Stanescu, 2018.

Abstract: For many years, traditional boardgames such as Chess, Checkers or Go have been the standard environments to test new Artificial Intelligence (AI) algorithms for achieving robust game-playing agents capable of defeating the best human players. Presently, the focus has shifted towards games that offer even larger action and state spaces, such as Atari and other video games. With a unique combination of strategic thinking and fine-grained tactical combat management, Real-Time Strategy (RTS) games have emerged as one of the most popular and challenging research environments. Besides state space complexity, RTS properties such as simultaneous actions, partial observability and real-time computing constraints make them an excellent testbed for decision making algorithms under dynamic conditions.

This thesis makes contributions towards achieving human-level AI in these complex games. Specifically, we focus on learning, using abstractions and performing adversarial search in real-time domains with extremely large action and state spaces, for which forward models might not be available. We present two abstract models for combat outcome prediction that are accurate while reasonably computationally inexpensive. These models can inform high level strategic decisions such as when to force or avoid fighting or be used as evaluation functions for look-ahead search algorithms. In both cases we obtained stronger results compared to the heuristics that were state of the art at the time. -

REPRESENTING UNCERTAINTY in RTS GAMES M.Sc. PROJECT REPORT

REPRESENTING UNCERTAINTY IN RTS GAMES. Björn Jónsson. Master of Science, Software Engineering, January 2012. School of Computer Science, Reykjavík University. M.Sc. PROJECT REPORT.

Representing Uncertainty in RTS Games by Björn Jónsson. Project report submitted to the School of Computer Science at Reykjavík University in partial fulfillment of the requirements for the degree of Master of Science in Software Engineering, January 2012. Project Report Committee: Dr. Yngvi Björnsson, Supervisor, Associate Professor, Reykjavík University, Iceland; Dr. Hannes Högni Vilhjálmsson, Associate Professor, Reykjavík University, Iceland; Dr. Jón Guðnason, Assistant Professor, Reykjavík University, Iceland. Copyright Björn Jónsson, January 2012.

Representing Uncertainty in RTS Games. Björn Jónsson. January 2012.

Abstract: Real-time strategy (RTS) games are partially observable environments, requiring players to reason under uncertainty. The main source of uncertainty in RTS games is that players do not initially know the game map, including what units the opponent has created. This information gradually improves, in part by exploring, as the game progresses. To compensate for this uncertainty, human players use their experience and domain knowledge to estimate the combination of units that opponents control, and make decisions based on these estimates. For RTS game AI to mimic this behavior of human players, a suitable knowledge representation is required. The order in which units can be created in RTS games is conditioned by a game-specific technology tree, where units represented by parent nodes in the tree need to be created before units represented by child nodes can be created. We propose the use of a Bayesian Network (BN) to represent the beliefs that RTS game AI players have about the expansion of the technology tree of their opponents. -
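The parent-before-child rule described in this abstract can be illustrated with a small sketch. It is not the Bayesian Network the report proposes; it only shows the deterministic part of the reasoning, namely that sighting one unit implies all of its ancestors in the technology tree already exist. The tree contents, unit names, and the helper names `implied_units` and `possible_next` are hypothetical and chosen for illustration only.

```python
from typing import Dict, List, Set

# Hypothetical tech tree (not taken from the report): each unit maps to the
# parent units that must exist before it can be created.
TECH_TREE: Dict[str, List[str]] = {
    "command_center": [],
    "barracks": ["command_center"],
    "factory": ["barracks"],
    "starport": ["factory"],
    "academy": ["barracks"],
}


def implied_units(observed: Set[str], tree: Dict[str, List[str]]) -> Set[str]:
    """Return the observed units plus every ancestor they require."""
    known: Set[str] = set()
    stack = list(observed)
    while stack:
        unit = stack.pop()
        if unit in known:
            continue
        known.add(unit)
        # Parents must already exist, so they are implied as well.
        stack.extend(tree[unit])
    return known


def possible_next(known: Set[str], tree: Dict[str, List[str]]) -> Set[str]:
    """Units not yet known whose parents are all known to exist."""
    return {
        unit
        for unit, parents in tree.items()
        if unit not in known and all(p in known for p in parents)
    }


if __name__ == "__main__":
    known = implied_units({"factory"}, TECH_TREE)
    print(sorted(known))
    print(sorted(possible_next(known, TECH_TREE)))
```

A probabilistic model would go further and assign beliefs to the units in `possible_next`; this sketch only narrows the set of candidates that such beliefs would range over.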

Narrative Representation and Ludic Rhetoric of Imperialism in Civilization 5

Narrative Representation and Ludic Rhetoric of Imperialism in Civilization 5. Master's thesis in the subject English and American Literatures, Cultures, and Media, Faculty of Arts and Humanities (Philosophische Fakultät), Christian-Albrechts-Universität zu Kiel, submitted by Malte Wendt. First examiner: Prof. Dr. Christian Huck. Second examiner: Tristan Emmanuel Kugland. Kiel, March 2018.

Table of contents
1 Introduction
2 Hypothesis
3 Methodology
3.1 Inclusions and exclusions
3.2 Structure
4 Relevant postcolonial concepts
5 Overview and categorization of Civilization 5
5.1 Premise and paths to victory
5.2 Basics on rules, mechanics, and interface
5.3 Categorization
6 Narratology: surface design
6.1 Paratexts and priming
6.1.1 Announcement trailer
6.1.2 Developer interview
6.1.3 Review and marketing
6.2 Civilizations and leaders
6.3 Universal terminology and visualizations
6.4 Natural, National, and World Wonders
6.5 Universal history and progress
6.6 User interface
7 Ludology: procedural rhetoric
7.1 Defining ludological terminology
7.2 Progress and the player element: the emperor's new toys
7.3 Unity and territory: the worth of a nation
7.4 Religion, Policies, and Ideology: one nation under God
7.5 Exploration and barbarians: into the heart of darkness
7.6 Resources, expansion, and exploitation: for gold, God, and glory
7.7 Collective memory and culture: look on my works
7.8 Cultural Victory and non-violent relations: the ballot
7.9 Domination Victory and war: the bullet
7.10 The Ex Nihilo Paradox: build like an Egyptian
7.11 The Designed Evolution Dilemma: me, the people
8 Conclusion and evaluation
German summary
Bibliography

1 Introduction

“[V]ideo games – an important part of popular culture – mediate ideology, whether by default or design.” (Hayse, 2016:442)

This thesis aims to uncover the imperialist and colonialist ideologies relayed in the video game Sid Meier's Civilization V (2K Games, 2010) (abbrev.