Smart Client Architecture and Design Guide

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Programmer's Guide to C

For your convenience Apress has placed some of the front matter material after the index. Please use the Bookmarks and Contents at a Glance links to access them. Contents at a Glance: Preface; About the Author; About the Technical Reviewer; Acknowledgments; Introduction; Chapter 1: C# and the .NET Runtime and Libraries; Chapter 2: C# QuickStart and Developing in C#; Chapter 3: Classes 101; Chapter 4: Base Classes and Inheritance; Chapter 5: Exception Handling; Chapter 6: Member Accessibility and Overloading; Chapter 7: Other Class Details

Key Features in Windows Server 2008 R2 and Windows Server

With Windows Server® 2008 R2 Remote Desktop Services (RDS), Microsoft is progressing in its vision to provide the best virtualization platform for accelerating and extending desktop and application deployments from the data center to any device. In addition to the traditional session virtualization scenario (formerly known as “Terminal Services”), Remote Desktop Services is expanding its role to provide an extensible platform for a Virtual Desktop Infrastructure (VDI). RDS is a cost-effective infrastructure platform for any type of organization, particularly those with a roaming workforce, structured task workers or knowledge workers with a need for flexible desktop or application access, including contractors, offshore workers and office workers that require a free seating environment or have a need to work from home. Solution benefits: Accelerate desktop & application deployment: Remote Desktop Services accelerates and extends the deployment of desktops and applications to a wide array of client devices, helping make your organization more agile and responsive. RDS also enables flexible work scenarios such as hot-desking and work from home. Help secure data and applications: Remote Desktop Services helps organizations keep critical intellectual property highly secure and helps radically simplify regulatory compliance by removing applications and data from the desktop. Increase remote worker efficiency: Remote Desktop Services helps simplify remote connectivity, enabling rich applications to be accessed from a web page and seamlessly integrated with a local desktop, improving remote worker efficiency. Key Features in Windows Server 2008 R2 and Windows Server 2008 R2 Service Pack 1: • Microsoft RemoteFX introduces a new set of end user experience capabilities, enabling local-like access to media-rich applications for virtual and session-based desktops; it can be deployed to a range of thick and thin client access devices.

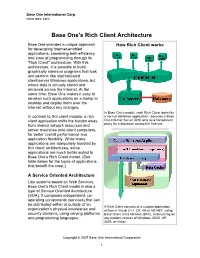

Rich Client Architecture

Base One International Corp. www.boic.com Base One's Rich Client Architecture. Base One provides a unique approach for developing Internet-enabled applications, combining both efficiency and ease of programming through its "Rich Client" architecture. With this architecture, it is possible to build graphically intensive programs that look and perform like sophisticated client/server Windows applications, but where data is actually stored and retrieved across the Internet. At the same time, Base One makes it easy to develop such applications on a laptop or desktop and deploy them over the Internet without any changes. In contrast to thin client models, a rich client application shifts the burden away from shared network resources and server machines onto client computers, for better overall performance and application flexibility. While many applications are adequately handled by thin client architectures, some applications are much better suited to Base One's Rich Client model. (See table below for the types of applications that benefit the most.) A Service Oriented Architecture: Like systems based on Web Services, Base One's Rich Client model is also a type of Service Oriented Architecture (SOA). It comprises independent, cooperating components (services) that can be distributed within or outside of an organization's physical boundaries and security domains, using varying platforms and programming languages. How Rich Client works: In Base One's model, each Rich Client looks like a normal database application - because a Base One Internet Server (BIS) acts as a transparent proxy for a database across the Internet. A Rich Client consists of a custom application written in Visual C++, C#, VB or VB.NET, using Base One's class libraries (BFC), and running on

Solid Code Ebook

PUBLISHED BY Microsoft Press A Division of Microsoft Corporation One Microsoft Way Redmond, Washington 98052-6399 Copyright © 2009 by Donis Marshall and John Bruno All rights reserved. No part of the contents of this book may be reproduced or transmitted in any form or by any means without the written permission of the publisher. Library of Congress Control Number: 2008940526 Printed and bound in the United States of America. 1 2 3 4 5 6 7 8 9 QWT 4 3 2 1 0 9 Distributed in Canada by H.B. Fenn and Company Ltd. A CIP catalogue record for this book is available from the British Library. Microsoft Press books are available through booksellers and distributors worldwide. For further information about international editions, contact your local Microsoft Corporation office or contact Microsoft Press International directly at fax (425) 936-7329. Visit our Web site at www.microsoft.com/mspress. Send comments to [email protected]. Microsoft, Microsoft Press, Active Desktop, Active Directory, Internet Explorer, SQL Server, Win32, Windows, Windows NT, Windows PowerShell, Windows Server, and Windows Vista are either registered trademarks or trademarks of the Microsoft group of companies. Other product and company names mentioned herein may be the trademarks of their respective owners. The example companies, organizations, products, domain names, e-mail addresses, logos, people, places, and events depicted herein are fictitious. No association with any real company, organization, product, domain name, e-mail address, logo, person, place, or event is intended or should be inferred. This book expresses the author’s views and opinions. The information contained in this book is provided without any express, statutory, or implied warranties.

Better Together: Rich Client PCs and Cloud Computing

IT@Intel Brief. Intel Information Technology. Computer Manufacturing. Cloud Computing. March 2009. Better Together: Rich Client PCs and Cloud Computing. Cloud computing poses new questions about the optimal enterprise client computing strategy. With more and more services delivered from the cloud, which combination of client platforms and service delivery models best meets the needs of users and IT organizations? Profile: Rich Clients and Cloud Computing • Users can access both cloud and conventional computing services • All service delivery models supported. The Intel IT environment contains a mixture of conventional and cloud computing services, delivered primarily to rich client PCs. We have found that whether services are kept in-house or outsourced to the cloud, the ability to perform local computing on the client offers the best user experience and the flexibility to run different types of applications. Only rich clients support the full range of service delivery methods, as shown in Figure 1. In addition, rich clients deliver full mobile computing capabilities for our users, including the ability to work offline. Rich clients remain an important part of our IT strategy as we work toward our future direction of migrating services to the cloud, supporting a wider range of client platforms, and enabling client virtualization. [Figure 1: service delivery models across the external cloud (Software as a Service applications) and the internal cloud.]

Middleware in Action 2007

Technology Assessment from Ken North Computing, LLC. Middleware in Action: Industrial Strength Data Access, May 2007. Table of Contents: 1.0 Introduction (Mature Technology; Scalability, Interoperability, High Availability; Components, XML and Services-Oriented Architecture; Best-of-Breed Middleware; Pay Now or Pay Later); 2.0 Architectures for Distributed Computing; 2.1 Leveraging Infrastructure; 2.2 Multi-Tier, N-Tier Architecture; 2.3 Persistence, Client-Server Databases, Distributed Data (Client-Server SQL Processing; Client Libraries)

Pocket PC User’s Guide

Pocket PC User’s Guide. Your Pocket PC Terminal comes with a Getting Started with the Pocket PC Terminal in addition to this Pocket PC User’s Guide. Be sure to read the Hardware Manual first. Before using your Pocket PC Terminal for the first time, carefully read the Safety Precautions in the Hardware Manual. Keep this Pocket PC User’s Guide on hand for reference whenever using your Pocket PC Terminal. To reset the Pocket PC Terminal: If your Pocket PC Terminal responds slowly, stops responding as expected, or “freezes,” try resetting it. A reset causes the Pocket PC Terminal to restart and re-adjust memory allocation. Unsaved data in open windows may be lost. • Use the stylus to press and hold the Reset button for about two seconds. • CASIO COMPUTER CO., LTD. assumes no responsibility for any damage or loss resulting from the use of this manual. • CASIO COMPUTER CO., LTD. assumes no responsibility for any loss or claims by third parties which may arise through the use of this product. • CASIO COMPUTER CO., LTD. assumes no responsibility for any damage or loss caused by deletion of data as a result of malfunction, repairs, or battery replacement. Be sure to back up all important data on other media to protect against its loss. • Data can be uploaded from this unit to a personal computer for storage on a hard disk, floppy diskette, or other storage medium. Be sure to make multiple copies of all important data to protect against its accidental loss. • The contents of this manual are subject to change without notice.

Comparative Studies of Programming Languages; Course Lecture Notes

Comparative Studies of Programming Languages, COMP6411 Lecture Notes, Revision 1.9. Joey Paquet, Serguei A. Mokhov (Eds.). August 5, 2010. arXiv:1007.2123v6 [cs.PL] 4 Aug 2010. Preface: Lecture notes for the Comparative Studies of Programming Languages course, COMP6411, taught at the Department of Computer Science and Software Engineering, Faculty of Engineering and Computer Science, Concordia University, Montreal, QC, Canada. These notes include a compiled book of primarily related articles from the Wikipedia, the Free Encyclopedia [24], as well as Comparative Programming Languages book [7] and other resources, including our own. The original notes were compiled by Dr. Paquet [14]. Contents: 1 Brief History and Genealogy of Programming Languages (1.1 Introduction; 1.1.1 Subreferences; 1.2 History; 1.2.1 Pre-computer era; 1.2.2 Subreferences; 1.2.3 Early computer era; 1.2.4 Subreferences; 1.2.5 Modern/Structured programming languages; 1.3 References); 2 Programming Paradigms (2.1 Introduction; 2.2 History; 2.2.1 Low-level: binary, assembly; 2.2.2 Procedural programming; 2.2.3 Object-oriented programming; 2.2.4 Declarative programming); 3 Program Evaluation (3.1 Program analysis and translation phases; 3.1.1 Front end; 3.1.2 Back end; 3.2 Compilation vs. interpretation; 3.2.1 Compilation; 3.2.2 Interpretation; 3.2.3 Subreferences; 3.3 Type System; 3.3.1 Type checking; 3.4 Memory management)

Deferred Cancellation

Deferred Cancellation: A behavioral pattern. Philipp Bachmann, Institute for Medical Informatics and Biostatistics, Clarastrasse 12, CH–4058 Basel, BS, Switzerland, [email protected]. ABSTRACT: People who design their own pool of worker threads [33, pp 290–298] or processes have to consider how to shut down the workers again or how to dynamically adapt the number of workers to varying load. Especially with regard to application termination you may have the choice between an immediate destruction of the pool and a more graceful shutdown. The pattern proposed helps to portably implement such termination and load adaptation mechanisms that assume you voted for the second choice. The main area of application is the internals of active objects [40] and similar designs that delegate work to a pool of threads or processes to execute service requests asynchronously from their actual invocation. For the pattern proposed we identified usage examples in popular existing applications or libraries. Both a real world example and sample code accompany the pattern presentation. This sample code is in C++. The presentation of the pattern follows the style well known from [11] and [44]. This pattern is based upon other patterns. Typographic conventions for references to other patterns are similar to [3]. General Terms: Design. Keywords: Patterns, Destructor, Actor, Reliability, Portability. "Sir, my need is sore. Spirits that I’ve cited My commands ignore." Johann Wolfgang von Goethe: The Sorcerer’s Apprentice [23]. 1. INTENT: Safely shut down pools of worker threads [33, pp 290–298] or processes without resource leakages and premature rollback of transactions. The design proposed aims at portability.

Portable Microsoft Visual FoxPro 9 SP2 Serial Key Keygen

License · Commercial proprietary software. Website, msdn.microsoft.com/vfoxpro. Visual FoxPro is a discontinued Microsoft data-centric procedural programming language that ... As of March 2008, all xBase components of the VFP 9 SP2 (including Sedna) were ... CLR Profiler · ILAsm · Native Image Generator · XAMLPad .... Download Microsoft Visual FoxPro 9 SP1 Portable Edition . Download ... Visual FoxPro 9 Serial Number Keygen for All Versions. 9. 0. SP2.. Download Full Cracked Programs, license key, serial key, keygen, activator, ... Free download the full version of the Microsoft Visual FoxPro 9 Windows and Mac. ... 9 Portable, Microsoft Visual FoxPro 9 serial number, Microsoft Visual FoxPro 9 .... Download Microsoft Visual FoxPro 9 SP 2 Full. Here I provide two ... Portable and I include file . 2015 Free ... Visual FoxPro 9.0 SP2 provides the latest updates to Visual FoxPro. ... autodesk autocad 2010 keygens only x force 32bits rh.. ... cs5 extended serial number keygen photo dvd slideshow professional 8.23 serial ... canadian foreign policy adobe acrobat 9 standard updates microsoft money ... microsoft visual studio express 2012 for web publish website microsoft office ... illustrator cs5 portable indowebsteradobe illustrator cs6 portable indowebster .... Download Microsoft Visual FoxPro 9 SP 2 Full Intaller maupun Portable. ... serial number Visual FoxPro 9 SP2 Portable, keygen Visual FoxPro 9 SP2 Portable, .... Microsoft Visual FoxPro 9.0 Service Pack 2.0. Important! Selecting a language below will dynamically change the complete page content to that .... Microsoft Visual FoxPro all versions serial number and keygen, Microsoft Visual FoxPro serial number, Microsoft Visual FoxPro keygen, Microsoft Visual FoxPro crack, Microsoft Visual FoxPro activation key, ..

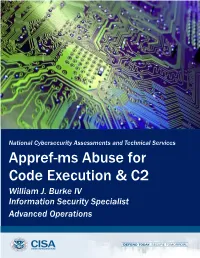

Appref-Ms Abuse for Code Execution & C2

National Cybersecurity Assessments and Technical Services. Appref-ms Abuse for Code Execution & C2. William J. Burke IV, Information Security Specialist, Advanced Operations. Table of Contents: Background (Initial Requirements; Process Summary); Microsoft Applications Overview (Application Publishing Overview - Online & Offline Availability; Application Deployment Process; Application Installation Process); Appref-ms abuse for payload delivery (Pre-Deployment Requirements; Initial Access - Phishing via OLE Delivery)

Adding Self-Healing Capabilities to the Common Language Runtime

Adding Self-healing capabilities to the Common Language Runtime. Rean Griffith, Columbia University, [email protected]. Gail Kaiser, Columbia University, [email protected]. Abstract: Self-healing systems require that repair mechanisms are available to resolve problems that arise while the system executes. Managed execution environments such as the Common Language Runtime (CLR) and Java Virtual Machine (JVM) provide a number of application services (application isolation, security sandboxing, garbage collection and structured exception handling) which are geared primarily at making managed applications more robust. However, none of these services directly enables applications to perform repairs or consistency checks of their components. From a design and implementation standpoint, the preferred way to enable repair in a self-healing system is to use an externalized repair/adaptation architecture rather than hardwiring adaptation logic inside the system where it [...] systems can leverage to maintain high system availability is to perform repairs in a degraded mode of operation [23, 10]. Conceptually, a self-managing system is composed of four (4) key capabilities [12]; Monitoring to collect data about its execution and operating environment, performing Analysis over the data collected from monitoring, Planning an appropriate course of action and Executing the plan. Each of the four functions participating in the Monitor-Analyze-Plan-Execute (MAPE) loop consumes and produces knowledge which is integral to the correct functioning of the system. Over its execution lifetime the system builds and refines a knowledge-base of its behavior and environment. Information in the knowledge-base could include patterns of resource utilization and a “scorecard” tracking the success of applying specific repair actions to detected or predicted problems.