How 10 Open Source Projects Manage Unsafe Code. Tim McNamara (@timClicks). Introduction. About me: I tweet about Rust.

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

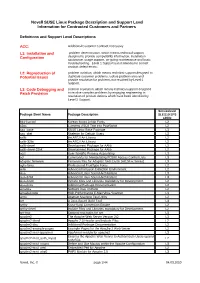

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners

Definitions and Support Level Descriptions

ACC: Additional Customer Contract necessary

L1 (Installation and Configuration): Problem determination, which means technical support designed to provide compatibility information, installation assistance, usage support, on-going maintenance and basic troubleshooting. Level 1 Support is not intended to correct product defect errors.

L2 (Reproduction of Potential Issues): Problem isolation, which means technical support designed to duplicate customer problems, isolate problem area and provide resolution for problems not resolved by Level 1 Support.

L3 (Code Debugging and Patch Provision): Problem resolution, which means technical support designed to resolve complex problems by engaging engineering in resolution of product defects which have been identified by Level 2 Support.

| Package Short Name | Package Description | Servicelevel (SLES10 SP3 s390x) |
| --- | --- | --- |
| 844-ksc-pcf | Korean 8x4x4 Johab Fonts | L2 |
| a2ps | Converts ASCII Text into PostScript | L2 |
| aaa_base | SUSE Linux Base Package | L3 |
| aaa_skel | Skeleton for Default Users | L3 |
| aalib | An ASCII Art Library | L2 |
| aalib-32bit | An ASCII Art Library | L2 |
| aalib-devel | Development Package for AAlib | L2 |
| aalib-devel-32bit | Development Package for AAlib | L2 |
| acct | User-Specific Process Accounting | L3 |
| acl | Commands for Manipulating POSIX Access Control Lists | L3 |
| adaptec-firmware | Firmware files for Adaptec SAS Cards (AIC94xx Series) | L3 |
| agfa-fonts | Professional TrueType Fonts | L2 |
| aide | Advanced Intrusion Detection Environment | L2 |
| alsa | Advanced Linux Sound Architecture | L3 |
| alsa-32bit | Advanced Linux Sound Architecture | L3 |
| alsa-devel | Include Files and Libraries mandatory for Development. | L2 |
| alsa-docs | Additional Package Documentation. | L2 |
| amanda | Network Disk Archiver | L2 |
| amavisd-new | High-Performance E-Mail Virus Scanner | L3 |
| amtu | Abstract Machine Test Utility | L2 |
| ant | A Java-Based Build Tool | L3 |
| anthy | Kana-Kanji Conversion Engine | L2 |
| anthy-devel | Include Files and Libraries mandatory for Development. | |

Release 2.0.0 Florian Mounier

pygal Documentation, Release 2.0.0. Florian Mounier, December 01, 2016.

Contents: 1 Sexy python charting; 2 Simple python charting; 3 Index; Python Module Index.

Chapter 1, Sexy python charting. Chapter 2, Simple python charting: pygal.Bar()(1,3,3,7)(1,6,6,4).render()

Chapter 3, Index. 3.1 Documentation. 3.1.1 First steps.

Caution: First you need to install pygal, see installing.

When it's done, you are ready to make your first chart:

import pygal  # First import pygal
bar_chart = pygal.Bar()  # Then create a bar graph object
bar_chart.add('Fibonacci', [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55])  # Add some values
bar_chart.render_to_file('bar_chart.svg')  # Save the svg to a file

Now you should have an svg file called bar_chart.svg in your current directory. You can open it with various programs such as your web browser, inkscape or any svg compatible viewer. The resulting chart will be the following:

bar_chart = pygal.Bar()
bar_chart.add('Fibonacci', [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55])
bar_chart.render()

Caution: pygal relies on svg css styling. This is sadly not fully supported by gnome librsvg and therefore can lead to black svg being displayed. This is not a bug in pygal. See this bugzilla search.

To make a multiple series graph just add another one:

bar_chart = pygal.Bar()
bar_chart.add('Fibonacci', [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55])
bar_chart.add('Padovan', [1, 1, 1, 2, 2, 3, 4, 5, 7, 9, 12])
bar_chart.render()

If you want to stack

Seed Selection for Successful Fuzzing

Seed Selection for Successful Fuzzing. Adrian Herrera (ANU & DST, Australia), Hendra Gunadi (ANU, Australia), Shane Magrath (DST, Australia), Michael Norrish (CSIRO's Data61 & ANU, Australia), Mathias Payer (EPFL, Switzerland), Antony L. Hosking (ANU & CSIRO's Data61, Australia).

ABSTRACT: Mutation-based greybox fuzzing, unquestionably the most widely-used fuzzing technique, relies on a set of non-crashing seed inputs (a corpus) to bootstrap the bug-finding process. When evaluating a fuzzer, common approaches for constructing this corpus include: (i) using an empty file; (ii) using a single seed representative of the target's input format; or (iii) collecting a large number of seeds (e.g., by crawling the Internet). Little thought is given to how this seed choice affects the fuzzing process, and there is no consensus on which approach is best (or even if a best approach exists). To address this gap in knowledge, we systematically investigate and evaluate how seed selection affects a fuzzer's ability to find bugs

ACM Reference Format: Adrian Herrera, Hendra Gunadi, Shane Magrath, Michael Norrish, Mathias Payer, and Antony L. Hosking. 2021. Seed Selection for Successful Fuzzing. In Proceedings of the 30th ACM SIGSOFT International Symposium on Software Testing and Analysis (ISSTA '21), July 11–17, 2021, Virtual, Denmark. ACM, New York, NY, USA, 14 pages. https://doi.org/10.1145/3460319.3464795

1 INTRODUCTION: Fuzzing is a dynamic analysis technique for finding bugs and vulnerabilities in software, triggering crashes in a target program by subjecting it to a large number of (possibly malformed) inputs. Mutation-based fuzzing typically uses an initial set of valid seed inputs from which to generate new seeds by random mutation.
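The mutation loop described in this excerpt can be illustrated with a minimal sketch. It is a blind mutation fuzzer with no coverage feedback, so it is not the greybox setup the paper evaluates; `target`, `mutate`, and `fuzz` are hypothetical names, and the toy target (which rejects non-ASCII bytes) stands in for a real program under test.

```python
import random


def target(data: bytes) -> None:
    """Hypothetical program under test: fails on any non-ASCII byte."""
    data.decode("ascii")  # raises UnicodeDecodeError for bytes >= 0x80


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Apply one random byte-level mutation: replace, insert, or delete."""
    buf = bytearray(seed)
    op = rng.choice(("replace", "insert", "delete"))
    if op == "replace" and buf:
        buf[rng.randrange(len(buf))] = rng.randrange(256)
    elif op == "insert":
        buf.insert(rng.randrange(len(buf) + 1), rng.randrange(256))
    elif op == "delete" and buf:
        del buf[rng.randrange(len(buf))]
    return bytes(buf)


def fuzz(seeds, iterations, rng):
    """Mutate inputs drawn from the corpus and collect the crashing ones."""
    corpus = list(seeds)  # the initial, non-crashing seed inputs
    crashes = []
    for _ in range(iterations):
        candidate = mutate(rng.choice(corpus), rng)
        try:
            target(candidate)
        except Exception:
            crashes.append(candidate)  # crashing input: report it
        else:
            corpus.append(candidate)  # non-crashing input: keep it as a new seed
    return crashes


crashes = fuzz([b"hello"], iterations=500, rng=random.Random(0))
```

Every later mutation starts from something already in the corpus, so the contents of `seeds` shape which inputs the loop can reach. That dependence on the starting corpus is the effect the paper sets out to measure.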

Multi Software Product Lines in the Wild

AperTO - Archivio Istituzionale Open Access dell'Università di Torino. Multi software product lines in the wild. This is the author's manuscript. Original Citation: Availability: This version is available http://hdl.handle.net/2318/1667454 since 2020-07-06T10:51:50Z. Publisher: Association for Computing Machinery. Published version: DOI:10.1145/3168365.3170425. Terms of use: Open Access. Anyone can freely access the full text of works made available as "Open Access". Works made available under a Creative Commons license can be used according to the terms and conditions of said license. Use of all other works requires consent of the right holder (author or publisher) if not exempted from copyright protection by the applicable law. (Article begins on next page) 27 September 2021

Multi Software Product Lines in the Wild. Michael Lienhardt, Ferruccio Damiani, Simone Donetti, Luca Paolini (all Università di Torino, Italy).

ABSTRACT: Modern software systems are often built from customizable and inter-dependent components. Such customizations usually define which features are offered by the components, and may depend on backend components being configured in a specific way. As such systems become very large, with a huge number of possible

1 INTRODUCTION: A Software Product Line (SPL) is a set of similar programs, called variants, with a common code base and well documented variability [1, 6, 19]. Modern software systems are often built as complex assemblages of customizable components that out-grow the expressiveness of SPLs.

Pygal Documentation Release 2.0.0

pygal Documentation, Release 2.0.0. Florian Mounier, June 06, 2016.

Contents: 1 Sexy python charting; 2 Simple python charting; 3 Index; Python Module Index.

Chapter 1, Sexy python charting. Chapter 2, Simple python charting: pygal.Bar().add('1',[1,3,3,7]).add('2',[1,6,6,4]).render()

Chapter 3, Index. 3.1 Documentation. 3.1.1 First steps.

Caution: First you need to install pygal, see installing.

When it's done, you are ready to make your first chart:

import pygal  # First import pygal
bar_chart = pygal.Bar()  # Then create a bar graph object
bar_chart.add('Fibonacci', [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55])  # Add some values
bar_chart.render_to_file('bar_chart.svg')  # Save the svg to a file

Now you should have an svg file called bar_chart.svg in your current directory. You can open it with various programs such as your web browser, inkscape or any svg compatible viewer. The resulting chart will be the following:

bar_chart = pygal.Bar()
bar_chart.add('Fibonacci', [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55])
bar_chart.render()

Caution: pygal relies on svg css styling. This is sadly not fully supported by gnome librsvg and therefore can lead to black svg being displayed. This is not a bug in pygal. See this bugzilla search.

To make a multiple series graph just add another one:

bar_chart = pygal.Bar()
bar_chart.add('Fibonacci', [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55])
bar_chart.add('Padovan', [1, 1, 1, 2, 2, 3, 4, 5, 7, 9, 12])
bar_chart.render()

If

Indicators for Missing Maintainership in Collaborative Open Source Projects

TECHNISCHE UNIVERSITÄT CAROLO-WILHELMINA ZU BRAUNSCHWEIG. Studienarbeit: Indicators for Missing Maintainership in Collaborative Open Source Projects. Andre Klapper, February 04, 2013. Institute of Software Engineering and Automotive Informatics, Prof. Dr.-Ing. Ina Schaefer. Supervisor: Michael Dukaczewski.

Affidavit: Hereby I, Andre Klapper, declare that I wrote the present thesis without any assistance from third parties and without any sources other than those indicated in the thesis itself. Braunschweig / Prague, February 04, 2013

Abstract: The thesis provides an attempt to use freely accessible metadata in order to identify missing maintainership in free and open source software projects by querying various data sources and rating the gathered information. GNOME and Apache are used as case studies.

License: This work is licensed under a Creative Commons Attribution-ShareAlike 3.0 Unported (CC BY-SA 3.0) license.

Keywords: Maintenance, Activity, Open Source, Free Software, Metrics, Metadata, DOAP

Contents: List of Tables; 1 Introduction (1.1 Problem and Motivation; 1.2 Objective; 1.3 Outline); 2 Theoretical Background (2.1 Reasons for Inactivity; 2.2 Problems Caused by Inactivity; 2.3 Ways to Pass Maintainership); 3 Data Sources in Projects (3.1 Identification and Accessibility; 3.2 Potential Sources and their Exploitability: 3.2.1 Code Repositories; 3.2.2 Mailing Lists; 3.2.3 IRC Chat; 3.2.4 Wikis; 3.2.5 Issue Tracking Systems; 3.2.6 Forums; 3.2.7 Releases; 3.2.8 Patch Review; 3.2.9 Social Media; 3.2.10 Other Sources)

Replacing C Library Code with Rust

Replacing C library code with Rust: What I learned with librsvg. Federico Mena Quintero, GUADEC 2017, Manchester, UK.

What uses librsvg? GNOME platform and desktop: gtk+ indirectly through gdk-pixbuf; thumbnailer via gdk-pixbuf; eog; gstreamer-plugins-bad.

What uses librsvg? Fine applications: gnome-games (gnome-chess, five-or-more, etc.); gimp; gcompris; claws-mail; darktable.

What uses librsvg? Desktop environments: mate-panel; Evas / Enlightenment; emacs-x11.

What uses librsvg? Things you may not expect: ImageMagick; Wikipedia ← they have been awesome.

Long History
● First commit, Eazel, 2001
● Experiment: instead of building a DOM, stream in SVG by using libxml2's SAX parser
● Renderer used libart
● Gill → Sodipodi → Inkscape
● Librsvg was being written while the SVG spec was being written
● Ported to Cairo eventually – I'll remove the last libart-ism any day now

Federico takes over
● Librsvg was mostly unmaintained in 2015
● Took over maintenance in February 2015
● Started the Rust port in October 2016

Pain points: librsvg's CVEs
● CVE-2011-3146 - invalid cast of RsvgNode type, crash
● CVE-2015-7557 - out-of-bounds heap read in list-of-points
● CVE-2015-7558 - Infinite loop / stack overflow from cyclic element references (thanks to Benjamin Otte for the epic fix)
● CVE-2016-4348 - NOT OUR PROBLEM - integer overflow when writing PNGs in Cairo (rowstride computation)
● CVE-2017-11464 - Division by zero in Gaussian blur code (my bad, sorry)
● Many non-CVEs found through fuzz testing and random SVGs on the net
● https://cve.mitre.org/cgi-bin/cvekey.cgi?keyword=librsvg

1. Why POCS.Key

Symptoms of Complexity. Prof. George Candea, School of Computer & Communication Sciences.

Building Bridges. Observation:
• bridges are normally on-time, on-budget, and don't fall
• software projects rarely ship on-time, are often over-budget, and rarely work exactly as specified

Blueprints for bridges must be approved...

ARTICLES: A COMPUTER SCIENCE PERSPECTIVE OF BRIDGE DESIGN. What kinds of lessons does a classical engineering discipline like bridge design have for an emerging engineering discipline like computer systems design? Case-study editors Alfred Spector and David Gifford consider the insight and experience of bridge designer Gerard Fox to find out how strong the parallels are. ALFRED SPECTOR and DAVID GIFFORD

AS: Gerry, let's begin with an overview of bridges.

GF: In the United States, most highway bridges are mandated by a government agency. The great majority are small bridges (with spans of less than 150 feet) and are part of the public highway system. There are fewer large bridges, having spans of 600 feet or more, that carry roads over bodies of water, gorges, or other large obstacles. There are also a small number of superlarge bridges with spans approaching a mile, like the Verrazzano Narrows Bridge in New York.

THE DESIGN PROCESS

AS: What is the procedure for designing and constructing a bridge?

GF: It breaks down into three phases: the preliminary design phase, the main design phase, and the construction phase. For larger bridges, several alternative designs are usually considered during the preliminary design phase, whereas simple calculations or experience usually suffices in determining the appropriate design for small bridges. There are a lot more factors to take into account with a large

Reproducible and Customizable Deployments with GNU Guix

Reproducible and Customizable Deployments with GNU Guix. Ludovic Courtès, FOSDEM 2016.

The difficulty of keeping software environments under control. #1. Upgrades are hard. #2. Stateful system management is intractable.

[Diagram: two installations of the same $DISTRO each run apt-get update, giving state 1a and state 1b. The first then runs apt-get install foo (state 2a) followed by apt-get remove bar (state 3a). The second runs apt-get remove bar (state 2b) followed by apt-get install foo (state 3b). The comparison between the two sides is marked "= ?".]

#3. It's worse than this.

Giving up? → "app bundles" (Docker images). "Debian and other distributions are going to be that thing you run docker on, little more." (Jos Poortvliet, ownCloud developer, http://lwn.net/Articles/670566/) It's also that thing you run inside Docker! https://imagelayers.io/

Functional package management.

gimp = f(gtk+, gcc, make, coreutils)
gtk+ = g(glib, gcc, make, coreutils)
gcc = h(make, coreutils, gcc0)
...
where f = ./configure && make && make install
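The functional view at the end of this excerpt, where each package is the value of a function applied to its inputs, can be sketched as below. This is an illustration under stated assumptions and not how Guix itself computes store paths: `store_path`, `build_gimp`, and the `/store/` prefix are hypothetical, the inputs given to `glib` are invented, and the same build recipe is reused for g and h because the slides only spell out f.

```python
import hashlib

BUILD = "./configure && make && make install"  # f from the slides


def store_path(name, recipe, inputs):
    """Derive an install location from the name, the recipe, and every input.

    Hypothetical scheme: hash all fields so that any change to an input
    yields a different path instead of mutating an existing one.
    """
    digest = hashlib.sha256()
    for field in (name, recipe, *sorted(inputs)):
        digest.update(field.encode() + b"\0")
    return f"/store/{digest.hexdigest()[:16]}-{name}"


def build_gimp(gcc0_version):
    """Evaluate the dependency graph from the slides for one bootstrap compiler."""
    gcc0 = store_path("gcc0", gcc0_version, [])
    make = store_path("make", "bootstrap", [])
    coreutils = store_path("coreutils", "bootstrap", [])
    # Assumption: the slides do not list glib's inputs.
    glib = store_path("glib", BUILD, [gcc0, make, coreutils])
    gcc = store_path("gcc", BUILD, [make, coreutils, gcc0])         # gcc = h(...)
    gtk = store_path("gtk+", BUILD, [glib, gcc, make, coreutils])   # gtk+ = g(...)
    return store_path("gimp", BUILD, [gtk, gcc, make, coreutils])   # gimp = f(...)


same_a = build_gimp("gcc0-v1")
same_b = build_gimp("gcc0-v1")
other = build_gimp("gcc0-v2")
```

Here `same_a` and `same_b` are identical because the result depends only on the inputs, while `other` differs because a change deep in the graph propagates to everything built on top of it. This is the contrast with the apt-get sequences, where the resulting state depends on the history of commands.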

Strong Dependencies Between Software Components

Specific Targeted Research Project, Contract no. 214898, Seventh Framework Programme: FP7-ICT-2007-1. Technical Report 0002. MANCOOSI: Managing the Complexity of the Open Source Infrastructure.

Strong Dependencies between Software Components. Pietro Abate, Jaap Boender, Roberto Di Cosmo, Stefano Zacchiroli. Université Paris Diderot, PPS UMR 7126, Paris, France. May 24, 2009. Web site: www.mancoosi.org

Contents: 1 Introduction; 2 Strong dependencies; 3 Strong dependencies in Debian (3.1 Strong vs direct sensitivity: exceptions; 3.2 Using strong dominance to cluster data; 3.3 Debian is a small world); 4 Efficient computation; 5 Applications; 6 Related works; 7 Conclusion and future work; 8 Acknowledgements; A Case Study: Evaluation of Debian structure

Abstract: Component-based systems often describe context requirements in terms of explicit inter-component dependencies. Studying large instances of such systems, such as free and open source software (FOSS) distributions, in terms of declared dependencies between packages is appealing. It is however also misleading when the language to express dependencies is as expressive as boolean formulae, which is often the case. In such settings, a more appropriate notion of component dependency exists: strong dependency. This paper introduces this notion as a first step towards modeling semantic, rather than syntactic, inter-component relationships. Furthermore, a notion of component sensitivity is derived from strong dependencies, with applications to quality assurance and to the evaluation of upgrade risks. An empirical study of strong dependencies and sensitivity is presented, in the context of one of the largest, freely available, component-based systems.
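The gap between declared and semantic dependencies can be made concrete with a small brute-force sketch. It assumes one reading of the term that the excerpt itself does not spell out: p strongly depends on q when p is installable and every valid installation containing p also contains q. The repository `REPO` and the function names are hypothetical, and conflicts are left out to keep the example short.

```python
from itertools import combinations

# Hypothetical toy repository. Each package lists dependency clauses:
# every clause must hold, and any one alternative satisfies a clause.
REPO = {
    "a": [["b", "c"]],  # a depends on (b OR c)
    "b": [["d"]],
    "c": [["d"]],
    "d": [],
}


def is_valid(installation):
    """True when every installed package has all its clauses satisfied."""
    return all(
        any(alt in installation for alt in clause)
        for pkg in installation
        for clause in REPO[pkg]
    )


def valid_installations():
    """Enumerate every valid subset of the repository (exponential, toy only)."""
    names = sorted(REPO)
    for size in range(len(names) + 1):
        for subset in combinations(names, size):
            candidate = frozenset(subset)
            if is_valid(candidate):
                yield candidate


def strong_dependencies(pkg):
    """Packages present in every valid installation that contains pkg."""
    containing = [inst for inst in valid_installations() if pkg in inst]
    if not containing:
        return set()  # pkg is not installable at all
    return set(frozenset.intersection(*containing)) - {pkg}


deps_of_a = strong_dependencies("a")
```

In this toy repository `a` never declares `d`, yet `d` is its only strong dependency, while neither `b` nor `c` is one because each can be replaced by the other. Enumerating all subsets is exponential, so this only works for a handful of packages.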

Licensing Information User Manual Oracle Solaris 11.3 Last Updated September 2018

Licensing Information User Manual, Oracle Solaris 11.3. Last Updated September 2018. Part No: E54836. Copyright © 2018, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs.

The Checker Framework Manual: Custom Pluggable Types for Java

The Checker Framework Manual: Custom pluggable types for Java. https://checkerframework.org/ Version 2.5.7 (4 Nov 2018). For the impatient: Section 1.3 (page 13) describes how to install and use pluggable type-checkers.

Contents

1 Introduction: 1.1 How to read this manual; 1.2 How it works: Pluggable types; 1.3 Installation; 1.4 Example use: detecting a null pointer bug

2 Using a checker: 2.1 Writing annotations; 2.2 Running a checker (2.2.1 Using annotated libraries; 2.2.2 Distributing your annotated project; 2.2.3 Summary of command-line options; 2.2.4 Checker auto-discovery; 2.2.5 Shorthand for built-in checkers); 2.3 What the checker guarantees; 2.4 Tips about writing annotations (2.4.1 Write annotations before you run a checker; 2.4.2 How to get started annotating legacy code; 2.4.3 Annotations indicate non-exceptional behavior; 2.4.4 Subclasses must respect superclass annotations; 2.4.5 Annotations on constructor invocations; 2.4.6 What to do if a checker issues a warning about your code)

3 Nullness Checker: 3.1 What the Nullness Checker checks; 3.2 Nullness annotations (3.2.1 Nullness qualifiers; 3.2.2 Nullness method annotations; 3.2.3 Initialization qualifiers; 3.2.4 Map key qualifiers); 3.3 Writing nullness annotations (3.3.1 Implicit qualifiers; 3.3.2 Default annotation)