Seed Selection for Successful Fuzzing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Automatically Bypassing Android Malware Detection System

FUZZIFICATION: Anti-Fuzzing Techniques. Jinho Jung, Hong Hu, David Solodukhin, Daniel Pagan, Kyu Hyung Lee*, Taesoo Kim *
Slide outline: Fuzzing Discovers Many Vulnerabilities. Testers Find Bugs with Fuzzing. But Attackers Also Find Bugs. Our work: Make the Fuzzing Only Effective to the Testers.
[Diagram, repeated on each slide: Source, Compilation, Released binary (or, with Fuzzification, Fortified binary), Distribution, Normal users, Attackers; Testers, Compilation, Binary, Fuzzing, Detected bugs]
Threat Model: Adversaries try to find vulnerabilities from fuzzing. Adversaries only have a copy of fortified binary. Adversaries know Fuzzification and try to nullify.
Research Goals -

Designing and Packaging Printer and Scanner Drivers

OpenPrinting MC on Linux Plumbers 2020, August 28, 2020. Till Kamppeter, OpenPrinting
What we had
● Printer drivers
  – PPD files
  – Filters, perhaps also backends
  – All has to be in CUPS-specific directories
● Scanner drivers
  – Shared libraries with SANE ABI in SANE-specific directories
● Packaging
  – Binaries were built specific to destination distro and packaged in DEB or RPM packages
  – For each distro drivers need to be built, packaged, and tested separately
  – As files need to be in specific directories drivers cannot be installed with CUPS in a Snap or with scanning user applications in Snaps
What we want
● Sandboxed packaging
  – Snaps
  – Distribution-independent: Install from Snap Store on any distro running snapd
  – More security: Every package with all its libraries and files in its own sandbox, fine-grained control for communication between packages
  – All-Snap distributions
● But
  – You cannot drop driver files into directories of a snapped CUPS or snapped user applications, Snaps do not see the system’s files
  – Snaps only communicate via IP or D-Bus, not by files
● Also
  – CUPS is deprecating support for PPD files, working by itself only in driverless IPP mode.
The New Architecture
● Printer/Scanner Applications emulating an IPP device
  – Easily snappable: Communicates only via IP
  – Multi-function device support, Printing, Scanning, and Fax Out can be done in one Snap/Application
  – Web admin interface for vendor/device-specific GUI
  – Behaves like a network printer/scanner/multi-function -

The Interplay of Compile-Time and Run-Time Options for Performance Prediction Luc Lesoil, Mathieu Acher, Xhevahire Tërnava, Arnaud Blouin, Jean-Marc Jézéquel

The Interplay of Compile-time and Run-time Options for Performance Prediction. Luc Lesoil, Mathieu Acher, Xhevahire Tërnava, Arnaud Blouin, Jean-Marc Jézéquel. Univ Rennes, INSA Rennes, CNRS, Inria, IRISA, Rennes, France.
To cite this version: Luc Lesoil, Mathieu Acher, Xhevahire Tërnava, Arnaud Blouin, Jean-Marc Jézéquel. The Interplay of Compile-time and Run-time Options for Performance Prediction. SPLC 2021 - 25th ACM International Systems and Software Product Line Conference - Volume A, Sep 2021, Leicester, United Kingdom. pp.1-12, 10.1145/3461001.3471149. hal-03286127
HAL Id: hal-03286127, https://hal.archives-ouvertes.fr/hal-03286127, submitted on 15 Jul 2021. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.
ABSTRACT Many software projects are configurable through compile-time options (e.g., using ./configure) and also through run-time options (e.g., command-line parameters, fed to the software at execution time). [...]
[...] Both compile-time and run-time options can be configured to reach specific functional and performance goals. Existing studies consider either compile-time or run-time op- -

What New Opportunities Will "Real-Time Interaction" IM Technology Bring? 2019-08-26, Yuan Wulin (袁武林)

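Opening remarks | Once you understand "real-time interaction" IM technology, what new opportunities will open up? 2019-08-26, Yuan Wulin (袁武林). Course: Instant Messaging Technology, Analysis and Practice (即时消息技术剖析与实战).
Hello, I'm Yuan Wulin. I work at Sina Weibo, where I am mainly responsible for the message box and live-streaming interaction businesses. Over the coming weeks I will bring you a column course on instant messaging technology. You may wonder why someone from Weibo is teaching this course: does Weibo use IM technology? Before I answer, please consider one question: besides QQ and WeChat, do you know which other apps use instant (real-time) messaging technology?
In fact, besides QQ and WeChat, apps built mainly around live streaming, such as Momo and Douyin, also make heavy use of IM-related technology. The "real-time online whiteboard" in online learning software, "real-time location sharing" in navigation and ride-hailing software, and the "remote control" of the smart home devices that are part of our daily lives also rely on IM technology to improve real-time interaction between people, and between people and things. I think it can be understood this way: every scenario that needs "real-time interaction" and "high real-time performance", including business areas such as chat, live streaming, online customer service, and the Internet of Things, needs IM technology and should use it.
Because of its varied business needs, Weibo applies IM technology in many of its products. Besides the message box and live-streaming interaction businesses that I am responsible for, other businesses are gradually adopting our general-purpose IM service to improve their user experience. Why do so many scenarios use IM technology, and what exactly is it? Before formally explaining the technology, I want to start from application scenarios and show you what IM technology is, what major changes it has brought to the Internet, and what value it holds.
What is an IM system? Let us first look at an old piece of news: in 2014 Facebook acquired the then hugely popular instant messaging tool WhatsApp for 19 billion US dollars, at a time when WhatsApp had only 50 employees. Yes, that means each of those 50 employees created, on average, 380 million US dollars of value. We will not discuss here whether the bidding war between Google and Facebook over WhatsApp, which drove the price of the deal up, was reasonable. Seen from another angle, it shows the enormous value that social software built on IM technology embodies once it has fulfilled its mission of "connecting people".
The same value has been demonstrated in China. In 1996, ICQ, an instant chat program invented by several Israeli university students, swept the world. Three years later, in Shenzhen, an imitator quietly appeared in China. By rapidly building networks of acquaintances, it stood out among the many online chat rooms based on relationships between strangers and gradually became a giant of Chinese social networking. At that time the chat tool was still called OICQ; it was later renamed QQ. By now you probably know which company I mean: yes, it is Tencent. In the years that followed, Tencent kept optimizing and upgrading its IM-related features and architecture and, with its two major IM tools QQ and WeChat, took firm control of the strong-relationship social sphere.
This shows the value of IM technology, as the underlying architecture of real-time interaction scenarios on the Internet, within the whole interaction ecosystem. As the Internet has developed, people's demands for real-time interaction have kept rising. As a result, IM technology is not only used in chat-oriented software such as QQ and WeChat; it has a broad range of application scenarios and a future with plenty of room for imagination. Without our even noticing, IM systems have taken root in our online lives and become an indispensable module of every major app.
Beyond the businesses I listed in the figure above, if you want to add real-time chat or bullet comments (danmaku) to your own app, you can do so quickly with an SDK from an IM cloud service provider (of course, if your requirements are fairly simple, you can also implement it yourself). For example, in the Geek Time (极客时间) app, we could add a feature that lets users chat one-to-one, or add a dedicated chat room for a particular course. There are too many examples to list them all. What I really want to say is: IM -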

Forensic Identification of Unique Kali Systems Through the Use of File Hashes and Names

Forensic Identification of Unique Kali Systems Through the Use of File Hashes and Names. Troy Ward, January 25, 2021.
Table of Contents: Introduction; Kali Linux; Methodology; Analysis; Unique Names: Eth0.lease, Font Config, LightDM, System.Journal -

Papermerge Documentation

Papermerge, Feb 29, 2020
Contents
1 Requirements
  1.1 Python
  1.2 Imagemagick
  1.3 Poppler
  1.4 Tesseract
  1.5 Database
2 Installation
  2.1 OS Specific Packages
    2.1.1 1. Web App + Workers Machine
      2.1.1.1 Ubuntu Bionic 18.04 (LTS)
    2.1.2 2. Web App Machine
      2.1.2.1 Ubuntu Bionic 18.04 (LTS)
    2.1.3 3. Worker Machine
  2.2 Manual Way
    2.2.1 Package Dependencies
    2.2.2 Web App
    2.2.3 Worker
    2.2.4 Recurring Commands
  2.3 Systemd
    2.3.1 Package Dependencies
    2.3.2 Web App
  2.4 Docker
  2.5 Ansible (Semiautomated)
  2.6 Jenkins + Ansible (Fully Automated Deployment)
3 Languages Support
4 REST API
  4.1 How It Works? -

MoonShine: Optimizing OS Fuzzer Seed Selection with Trace Distillation

MoonShine: Optimizing OS Fuzzer Seed Selection with Trace Distillation. Shankara Pailoor, Andrew Aday, and Suman Jana, Columbia University.
Abstract: OS fuzzers primarily test the system-call interface between the OS kernel and user-level applications for security vulnerabilities. The effectiveness of all existing evolutionary OS fuzzers depends heavily on the quality and diversity of their seed system call sequences. However, generating good seeds for OS fuzzing is a hard problem as the behavior of each system call depends heavily on the OS kernel state created by the previously executed system calls. Therefore, popular evolutionary OS fuzzers often rely on hand-coded rules for generating valid seed sequences of system calls that can bootstrap the fuzzing process. Unfortunately, this approach severely restricts the diversity of the seed system call sequences and therefore limits the effectiveness of the fuzzers.
[...] bug in system call implementations might allow an unprivileged user-level process to completely compromise the system. OS fuzzers usually start with a set of synthetic seed programs, i.e., a sequence of system calls, and iteratively mutate their arguments/orderings using evolutionary guidance to maximize the achieved code coverage. It is well-known that the performance of evolutionary fuzzers depends critically on the quality and diversity of their seeds [31, 39]. Ideally, the synthetic seed programs for OS fuzzers should each contain a small number of system calls that exercise diverse functionality in the OS kernel. However, the behavior of each system call heavily depends on the shared kernel state created by the previous -
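The idea that seeds should be few yet exercise diverse functionality can be illustrated with a coverage-only greedy selection. This is a minimal sketch and not MoonShine's distillation algorithm, which the snippet does not describe in detail: the function name `distill_seeds`, the trace names, and the integer coverage IDs are all hypothetical, and dependencies between system calls are ignored entirely.

```python
def distill_seeds(traces):
    """Greedily pick traces until all observed coverage is retained.

    traces: dict mapping a (hypothetical) trace name to the set of
    coverage IDs that trace was observed to hit.
    Returns the chosen trace names in selection order.
    """
    remaining = set().union(*traces.values())  # coverage not yet retained
    chosen = []
    while remaining:
        # Iterate names in sorted order so ties are broken deterministically.
        best = max(sorted(traces), key=lambda name: len(traces[name] & remaining))
        gain = traces[best] & remaining
        if not gain:
            break
        chosen.append(best)
        remaining -= gain
    return chosen


example = {
    "open_read": {1, 2, 3},
    "open_only": {1, 2},   # redundant: covered by open_read
    "mmap": {4},
    "noop": set(),         # contributes no coverage
}
selected = distill_seeds(example)
```

Greedy set cover is only an approximation of the smallest covering subset, but it keeps the seed corpus small while preserving every coverage ID that any trace reached.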

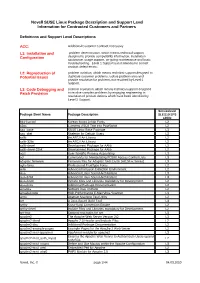

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners

Definitions and Support Level Descriptions
ACC: Additional Customer Contract necessary.
L1: Installation and Configuration. Problem determination, which means technical support designed to provide compatibility information, installation assistance, usage support, on-going maintenance and basic troubleshooting. Level 1 Support is not intended to correct product defect errors.
L2: Reproduction of Potential Issues. Problem isolation, which means technical support designed to duplicate customer problems, isolate problem area and provide resolution for problems not resolved by Level 1 Support.
L3: Code Debugging and Patch Provision. Problem resolution, which means technical support designed to resolve complex problems by engaging engineering in resolution of product defects which have been identified by Level 2 Support.

| Package Short Name | Package Description | Servicelevel (SLES10 SP3 s390x) |
|---|---|---|
| 844-ksc-pcf | Korean 8x4x4 Johab Fonts | L2 |
| a2ps | Converts ASCII Text into PostScript | L2 |
| aaa_base | SUSE Linux Base Package | L3 |
| aaa_skel | Skeleton for Default Users | L3 |
| aalib | An ASCII Art Library | L2 |
| aalib-32bit | An ASCII Art Library | L2 |
| aalib-devel | Development Package for AAlib | L2 |
| aalib-devel-32bit | Development Package for AAlib | L2 |
| acct | User-Specific Process Accounting | L3 |
| acl | Commands for Manipulating POSIX Access Control Lists | L3 |
| adaptec-firmware | Firmware files for Adaptec SAS Cards (AIC94xx Series) | L3 |
| agfa-fonts | Professional TrueType Fonts | L2 |
| aide | Advanced Intrusion Detection Environment | L2 |
| alsa | Advanced Linux Sound Architecture | L3 |
| alsa-32bit | Advanced Linux Sound Architecture | L3 |
| alsa-devel | Include Files and Libraries mandatory for Development. | L2 |
| alsa-docs | Additional Package Documentation. | L2 |
| amanda | Network Disk Archiver | L2 |
| amavisd-new | High-Performance E-Mail Virus Scanner | L3 |
| amtu | Abstract Machine Test Utility | L2 |
| ant | A Java-Based Build Tool | L3 |
| anthy | Kana-Kanji Conversion Engine | L2 |
| anthy-devel | Include Files and Libraries mandatory for Development. | - |

Active Fuzzing for Testing and Securing Cyber-Physical Systems

Active Fuzzing for Testing and Securing Cyber-Physical Systems. Yuqi Chen (Singapore Management University, Singapore), Bohan Xuan (Zhejiang University, China), Christopher M. Poskitt (Singapore Management University, Singapore), Jun Sun (Singapore Management University, Singapore), Fan Zhang∗ (Zhejiang University, China).
ABSTRACT Cyber-physical systems (CPSs) in critical infrastructure face a pervasive threat from attackers, motivating research into a variety of countermeasures for securing them. Assessing the effectiveness of these countermeasures is challenging, however, as realistic benchmarks of attacks are difficult to manually construct, blindly testing is ineffective due to the enormous search spaces and resource requirements, and intelligent fuzzing approaches require impractical amounts of data and network access. In this work, we propose active fuzzing, an automatic approach for finding test suites of packet-level CPS network attacks, targeting scenarios in which attackers can observe sensors and manipulate packets, but have no existing knowledge about the payload encodings. Our approach learns regression models for predicting sensor values that will result from sampled network packets, and uses these predictions to guide a [...]
KEYWORDS Cyber-physical systems; fuzzing; active learning; benchmark generation; testing defence mechanisms
ACM Reference Format: Yuqi Chen, Bohan Xuan, Christopher M. Poskitt, Jun Sun, and Fan Zhang. 2020. Active Fuzzing for Testing and Securing Cyber-Physical Systems. In Proceedings of the 29th ACM SIGSOFT International Symposium on Software Testing and Analysis (ISSTA ’20), July 18–22, 2020, Virtual Event, USA. ACM, New York, NY, USA, 13 pages. https://doi.org/10.1145/3395363.3397376
1 INTRODUCTION Cyber-physical systems (CPSs), characterised by their tight and complex integration of computational and physical processes, are often used in the automation of critical public infrastructure [78]. -

The Art, Science, and Engineering of Fuzzing: A Survey

The Art, Science, and Engineering of Fuzzing: A Survey. Valentin J.M. Manès, HyungSeok Han, Choongwoo Han, Sang Kil Cha, Manuel Egele, Edward J. Schwartz, and Maverick Woo.
Abstract: Among the many software vulnerability discovery techniques available today, fuzzing has remained highly popular due to its conceptual simplicity, its low barrier to deployment, and its vast amount of empirical evidence in discovering real-world software vulnerabilities. At a high level, fuzzing refers to a process of repeatedly running a program with generated inputs that may be syntactically or semantically malformed. While researchers and practitioners alike have invested a large and diverse effort towards improving fuzzing in recent years, this surge of work has also made it difficult to gain a comprehensive and coherent view of fuzzing. To help preserve and bring coherence to the vast literature of fuzzing, this paper presents a unified, general-purpose model of fuzzing together with a taxonomy of the current fuzzing literature. We methodically explore the design decisions at every stage of our model fuzzer by surveying the related literature and innovations in the art, science, and engineering that make modern-day fuzzers effective.
Index Terms: software security, automated software testing, fuzzing.
1 INTRODUCTION Ever since its introduction in the early 1990s [152], fuzzing has remained one of the most widely-deployed techniques to discover software security vulnerabilities. At a high level, fuzzing refers to a process of repeatedly running a program with generated inputs that may be syntactically or seman- [...]
[...] Figure 1 on p. 5) and an increasing number of fuzzing studies appear at major security conferences (e.g. [225], [52], [37], [176], [83], [239]). In addition, the blogosphere is filled with many success stories of fuzzing, some of which also contain what we consider to be gems that warrant a permanent place in the literature. -
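The survey's high-level definition, repeatedly running a program with generated and possibly malformed inputs, can be sketched as a small mutational loop. This is an illustrative sketch only, not the survey's model fuzzer: `toy_target`, `mutate`, and `fuzz` are hypothetical names, the target is an in-process Python function rather than a real program, and the only mutation is overwriting a single byte of a non-empty seed.

```python
import random


def toy_target(data: bytes) -> None:
    """Hypothetical program under test: fails when the first byte is 0xFF."""
    if data and data[0] == 0xFF:
        raise ValueError("malformed header")


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Overwrite one randomly chosen byte of a non-empty seed."""
    buf = bytearray(seed)
    pos = rng.randrange(len(buf))
    buf[pos] = rng.randrange(256)
    return bytes(buf)


def fuzz(target, seed: bytes, iterations: int, rng_seed: int = 0):
    """Run target on mutated inputs and collect every input that raises."""
    rng = random.Random(rng_seed)  # fixed seed keeps runs reproducible
    failures = []
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            target(candidate)
        except Exception as exc:  # any exception counts as a finding here
            failures.append((candidate, repr(exc)))
    return failures


findings = fuzz(toy_target, seed=b"\x00", iterations=5000)
```

Production fuzzers add the pieces this sketch omits, such as coverage feedback, a corpus of seeds, and crash deduplication.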

PSOFuzzer: A Target-Oriented Software Vulnerability Detection Technology Based on Particle Swarm Optimization

Applied Sciences, Article. PSOFuzzer: A Target-Oriented Software Vulnerability Detection Technology Based on Particle Swarm Optimization. Chen Chen 1,2,*, Han Xu 1 and Baojiang Cui 1. 1 School of Cyberspace Security, Beijing University of Posts and Telecommunications, Beijing 100876, China; 2 School of Information and Navigation, Air Force Engineering University, Xi’an 710077, China. * Correspondence: Chen Chen.
Abstract: Coverage-oriented and target-oriented fuzzing are widely used in vulnerability detection. Compared with coverage-oriented fuzzing, target-oriented fuzzing concentrates more computing resources on suspected vulnerable points to improve the testing efficiency. However, the sample generation algorithm used in target-oriented vulnerability detection technology has some problems, such as weak guidance, weak sample penetration, and difficult sample generation. This paper proposes a new target-oriented fuzzer, PSOFuzzer, that uses particle swarm optimization to generate samples. PSOFuzzer can quickly learn high-quality features in historical samples and implant them into new samples that can be led to execute the suspected vulnerable point. The experimental results show that PSOFuzzer can generate more samples in the test process to reach the target point and can trigger vulnerabilities with 79% and 423% higher probability than AFLGo and Sidewinder, respectively, on tested software programs.
Keywords: fuzzing; model-based fuzzing; vulnerability detection; code coverage; open-source program; directed fuzzing; static instrumentation; source code instrumentation
Citation: Chen, C.; Han, X.; Cui, B. PSOFuzzer: A Target-Oriented Software Vulnerability Detection Technology Based on Particle Swarm [...]
1. Introduction The appearance of an increasing number of software vulnerabilities has made automatic vulnerability detection technology a widespread concern in industry and academia. -

Configuration Fuzzing Testing Framework for Software Vulnerability Detection

CONFU: Configuration Fuzzing Testing Framework for Software Vulnerability Detection. Huning Dai, Christian Murphy, Gail Kaiser. Department of Computer Science, Columbia University, New York, NY 10027 USA.
ABSTRACT Many software security vulnerabilities only reveal themselves under certain conditions, i.e., particular configurations and inputs together with a certain runtime environment. One approach to detecting these vulnerabilities is fuzz testing. However, typical fuzz testing makes no guarantees regarding the syntactic and semantic validity of the input, or of how much of the input space will be explored. To address these problems, we present a new testing methodology called Configuration Fuzzing. Configuration Fuzzing is a technique whereby the configuration of the running application is mutated at certain execution points, in order to check for vulnerabilities that only arise in certain conditions. As the application runs in the deployment environment, this testing technique continuously fuzzes the configuration and checks "security invariants" that, if violated, indicate a vulnerability. We discuss the approach and introduce a prototype framework called ConFu (CONfiguration FUzzing testing framework) for implementation. We also present the results of case studies that demonstrate the approach's feasibility and evaluate its performance.
Keywords: Vulnerability; Configuration Fuzzing; Fuzz testing; In Vivo testing; Security invariants
INTRODUCTION As the Internet has grown in popularity, security testing is undoubtedly becoming a crucial part of the development process for commercial software, especially for server applications.
However, it is impossible in terms of time and cost to test all configurations or to simulate all system environments before releasing the software into the field, not to mention the fact that software distributors may later add more configuration options.
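The loop described in the ConFu abstract, mutating the configuration at execution points and checking security invariants, might look roughly like the sketch below. It is not the ConFu framework: `CONFIG_SPACE`, `serve`, `invariant_holds`, and `run_with_config_fuzzing` are hypothetical names, the configuration options are invented, and the "application" is a stub function.

```python
import random

# Hypothetical configuration options and their allowed values.
CONFIG_SPACE = {
    "debug": [False, True],
    "max_upload_kb": [64, 1024],
    "run_as_root": [False, True],
}


def fuzz_config(config, rng):
    """Return a copy of config with one option set to a random allowed value."""
    mutated = dict(config)
    option = rng.choice(sorted(CONFIG_SPACE))
    mutated[option] = rng.choice(CONFIG_SPACE[option])
    return mutated


def serve(config):
    """Stub application step whose behaviour depends on the configuration."""
    return {
        "user": "root" if config["run_as_root"] else "nobody",
        "leaks_internals": config["debug"],
    }


def invariant_holds(result):
    """Assumed security invariant: never leak internals while running as root."""
    return not (result["user"] == "root" and result["leaks_internals"])


def run_with_config_fuzzing(initial, steps, rng_seed=0):
    """Mutate the live configuration before each step; record violations."""
    rng = random.Random(rng_seed)
    config, violations = dict(initial), []
    for _ in range(steps):
        config = fuzz_config(config, rng)  # execution point: fuzz, then run
        if not invariant_holds(serve(config)):
            violations.append(dict(config))
    return violations


baseline = {"debug": False, "max_upload_kb": 64, "run_as_root": False}
violations = run_with_config_fuzzing(baseline, steps=200)
```

Because the mutated configuration persists between steps, combinations of options that a single mutation from the baseline could not reach are still explored over time.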