Bomberman Tournament

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Bomberman Story Ds Walkthrough Guide

Bomberman Story DS walkthrough guide. Hudson Soft (JP), Ubisoft (International). One player, multiplayer. Japan: May 19, 2005; North America: June 21, 2005; Europe: July 1, 2005. Bomberman is a game in the Bomberman series released in 2005 for the Nintendo DS. One player: This game is very similar to the original Bomberman. The player must navigate the mazes and defeat the enemies before finding a way out. In this game, there is more than one item in each level, and collected items are stored in an inventory on the second screen. By tapping an item with the stylus, the player can activate it at any time. Players may use items immediately or be more conservative with them. There are 10 worlds, each with 10 stages. The last stage is always the boss, and there is a bonus stage after the fifth stage. In the bonus stage, the player is invincible and must defeat all enemies on one of the screens. They can then move to the other screen to collect as many items as they can in the remaining time. Multiplayer: This game can communicate wirelessly with other Nintendo DS systems for multiplayer. You can play with multiple game cards or with a single shared card; gameplay is the same either way. With a wireless connection, up to 8 players can play at a time. When you die in a multiplayer game, you are sent to the bottom screen to shoot missiles at the players who are still alive.
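The stage layout described above (10 stages per world, a bonus stage after the fifth, a boss as the last stage) can be sketched as a small schedule builder. This is only an illustration: the names `STAGES_PER_WORLD`, `BONUS_AFTER_STAGE`, and `world_schedule` are hypothetical, and the sketch assumes the bonus stage is an extra stage rather than one of the ten, which the text does not state.

```python
STAGES_PER_WORLD = 10   # from the text: each world has 10 stages
BONUS_AFTER_STAGE = 5   # from the text: bonus stage follows the fifth stage


def world_schedule():
    """Return the ordered list of (stage_number, kind) for one world.

    Assumption: the bonus stage is an extra entry inserted after stage 5,
    not one of the ten numbered stages.
    """
    schedule = []
    for stage in range(1, STAGES_PER_WORLD + 1):
        # The last numbered stage of every world is the boss.
        kind = "boss" if stage == STAGES_PER_WORLD else "normal"
        schedule.append((stage, kind))
        if stage == BONUS_AFTER_STAGE:
            schedule.append((stage, "bonus"))
    return schedule


if __name__ == "__main__":
    for stage, kind in world_schedule():
        print(stage, kind)
```

Under that assumption a world has eleven entries in play order: nine normal stages, one bonus stage, and one boss.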

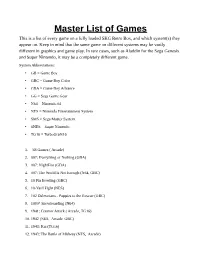

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (Arcade, TG16) 10. 1942 (NES, Arcade, GBC) 11. 1943: Kai (TG16) 12. 1943: The Battle of Midway (NES, Arcade) 13. 1944: The Loop Master (Arcade) 14. 1999: Hore, Mitakotoka! Seikimatsu (NES) 15. 19XX: The War Against Destiny (Arcade) 16. 2 on 2 Open Ice Challenge (Arcade) 17. 2010: The Graphic Action Game (Colecovision) 18. 2020 Super Baseball (Arcade, SNES) 19. 21-Emon (TG16) 20. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 21. 3 Count Bout (Arcade) 22. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 23. 3-D Tic-Tac-Toe (Atari 2600) 24. 3-D Ultra Pinball: Thrillride (GBC) 25. 3-D WorldRunner (NES) 26. 3D Asteroids (Atari 7800)

Video Game Archive: Nintendo 64

Video Game Archive: Nintendo 64. An Interactive Qualifying Project submitted to the Faculty of WORCESTER POLYTECHNIC INSTITUTE in partial fulfilment of the requirements for the degree of Bachelor of Science by James R. McAleese, Janelle Knight, Edward Matava, and Matthew Hurlbut-Coke. Date: 22nd March 2021. Report Submitted to: Professor Dean O’Donnell, Worcester Polytechnic Institute. This report represents work of one or more WPI undergraduate students submitted to the faculty as evidence of a degree requirement. WPI routinely publishes these reports on its web site without editorial or peer review. Abstract: This project was an attempt to expand and document the Gordon Library’s Video Game Archive, more specifically the Nintendo 64 (N64) collection. We made the N64 and related accessories and games more accessible to the WPI community and created an exhibition on The History of 3D Games and Twitch Plays Paper Mario, featuring the N64. Table of Contents: Abstract; Table of Contents; Table of Figures; Acknowledgements; Executive Summary; 1 - Introduction; 2 - Background; 2.1 - A Brief History of Nintendo Co., Ltd. Prior to the Release of the N64 in 1996; 2.2 - The Console and its Competitors; Development of the Console

Diploma Thesis

České vysoké učení technické (Czech Technical University), Fakulta elektrotechnická (Faculty of Electrical Engineering). DIPLOMA THESIS: General Game Playing in Imperfect Information Games (Hraní obecných her s neúplnou informací). Declaration: I declare that I completed my diploma thesis independently and used only the sources (literature, projects, software, etc.) listed in the attached list. Prague, 7 May 2011, signature. Acknowledgements: Here I would like to thank my advisor Mgr. Viliam Lisý, MSc. for his time and valuable advice, which was a great help in completing this work. I would also like to thank my family for their unflinching support during my studies. Tomáš Motal. Abstract: Title: General Game Playing in Imperfect Information Games. Author: Tomáš Motal, email: [email protected]. Department: Katedra kybernetiky (Department of Cybernetics), Fakulta elektrotechnická, České vysoké učení technické v Praze, Technická 2, 166 27 Praha 6, Czech Republic. Supervisor: Mgr. Viliam Lisý, MSc., email: [email protected]. Opponent: RNDr. Jan Hric, email: [email protected]. The goal of general game playing is to create intelligent agents that can play conceptually different games based solely on the rules they are given. In this thesis we focus on general game playing with imperfect information. Imperfect information brings new challenges that must be addressed. For example, a player must be able to cope with an element of chance in the game, or with not knowing exactly which state of the world it is currently in. The main contribution of this thesis is TIIGR, one of the first general game players for imperfect-information games that fully supports GDL-II, the language for describing games with imperfect information. To reason about the game, this player uses a simulation-based method. More precisely, it uses the Monte Carlo method with statistical sampling.
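As a rough illustration of Monte Carlo move selection with sampling over an unknown state, the sketch below averages the payoff of each move over randomly sampled hidden states. It is a generic flat Monte Carlo example under stated assumptions, not the TIIGR algorithm: the toy card game and the names `choose_move` and `make_card_game` are hypothetical and do not come from the thesis.

```python
import random


def choose_move(moves, sample_hidden_state, payoff, n_samples=2000, seed=0):
    """Pick the move with the highest average payoff over sampled hidden states."""
    rng = random.Random(seed)
    totals = {move: 0.0 for move in moves}
    for _ in range(n_samples):
        hidden = sample_hidden_state(rng)  # one guess at what we cannot observe
        for move in moves:
            totals[move] += payoff(hidden, move)
    return max(moves, key=lambda m: totals[m])


def make_card_game(my_card):
    """Toy game (assumption): the opponent holds a hidden card from 1 to 10."""

    def sample_hidden_state(rng):
        return rng.randint(1, 10)

    def payoff(opponent_card, move):
        if move == "fold":
            return 0.0
        # "call": win 1 if our card is strictly higher, otherwise lose 1.
        return 1.0 if my_card > opponent_card else -1.0

    return sample_hidden_state, payoff


if __name__ == "__main__":
    sample, payoff = make_card_game(my_card=8)
    print(choose_move(["fold", "call"], sample, payoff))
```

A real general game player would sample states consistent with everything observed so far and simulate whole playouts from the game rules; here a single payoff function stands in for the playout.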

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (TG16, Arcade) 10. 1942 (NES, Arcade, GBC) 11. 1942 (Revision B) (Arcade) 12. 1943 Kai: Midway Kaisen (Japan) (Arcade) 13. 1943: Kai (TG16) 14. 1943: The Battle of Midway (NES, Arcade) 15. 1944: The Loop Master (Arcade) 16. 1999: Hore, Mitakotoka! Seikimatsu (NES) 17. 19XX: The War Against Destiny (Arcade) 18. 2 on 2 Open Ice Challenge (Arcade) 19. 2010: The Graphic Action Game (Colecovision) 20. 2020 Super Baseball (SNES, Arcade) 21. 21-Emon (TG16) 22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 23. 3 Count Bout (Arcade) 24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 25. 3-D Tic-Tac-Toe (Atari 2600) 26. 3-D Ultra Pinball: Thrillride (GBC) 27. -

Download Bomberman Blast Wii ISO

Download Bomberman Blast Wii ISO. Progress continues: we have already had 10,965 updates since Dolphin 5.0. Follow Dolphin's ongoing progress through the Dolphin blog: August/September 2019 Progress Report. Bomberman Blast is an action game developed and published by Hudson Soft for the Wii and WiiWare. It is part of the Bomberman series. It is the second game in the Bomberman downloadable games trilogy, after Bomberman Live for Xbox Live Arcade and followed by Bomberman Ultra for PlayStation Network. The game was released in two versions: a full-featured retail release and a downloadable, lower-priced WiiWare version. Bomberman Blast is originally a retail game. Featuring a whole new story mode, just like the old games, as well as the 8-player online combat mode, it seemed like Hudson was trying. The Dolphin Emulator Wiki needs your help! Dolphin can play thousands of games, and changes happen all the time. Help us keep up! Join us and help us make this the best resource for Dolphin. Bomberman Blast. Developer(s): Hudson Soft. Publisher(s): Hudson Entertainment. Series: Bomberman. Platform(s): Wii. Release date(s): JP September 25, 2008. Genre(s): Action. Mode(s): Single-player, Multiplayer (8). Input methods: Wii Remote, GameCube Controller. Compatibility: 5 (Perfect). GameIDs: RB668. The WiiWare version: Bomberman Blast (Bomberman in Japan) is a brand new addition to the Bomberman series available on WiiWare. Up to eight players can fight online at the same time via Nintendo Wi-Fi Connection. Simple controls make this a great game for family and friends to enjoy at any time. You can summon new items by shaking the Wii Remote, creating new levels of Bomberman excitement.

10-Yard Fight 1942 1943

10-Yard Fight 1942 1943 - The Battle of Midway 2048 (tsone) 3-D WorldRunner 720 Degrees 8 Eyes Abadox - The Deadly Inner War Action 52 (Rev A) (Unl) Addams Family, The - Pugsley's Scavenger Hunt Addams Family, The Advanced Dungeons & Dragons - DragonStrike Advanced Dungeons & Dragons - Heroes of the Lance Advanced Dungeons & Dragons - Hillsfar Advanced Dungeons & Dragons - Pool of Radiance Adventure Island Adventure Island II Adventure Island III Adventures in the Magic Kingdom Adventures of Bayou Billy, The Adventures of Dino Riki Adventures of Gilligan's Island, The Adventures of Lolo Adventures of Lolo 2 Adventures of Lolo 3 Adventures of Rad Gravity, The Adventures of Rocky and Bullwinkle and Friends, The Adventures of Tom Sawyer After Burner (Unl) Air Fortress Airwolf Al Unser Jr. Turbo Racing Aladdin (Europe) Alfred Chicken Alien 3 Alien Syndrome (Unl) All-Pro Basketball Alpha Mission Amagon American Gladiators Anticipation Arch Rivals - A Basketbrawl! Archon Arkanoid Arkista's Ring Asterix (Europe) (En,Fr,De,Es,It) Astyanax Athena Athletic World Attack of the Killer Tomatoes Baby Boomer (Unl) Back to the Future Back to the Future Part II & III Bad Dudes Bad News Baseball Bad Street Brawler Balloon Fight Bandai Golf - Challenge Pebble Beach Bandit Kings of Ancient China Barbie (Rev A) Bard's Tale, The Barker Bill's Trick Shooting Baseball Baseball Simulator 1.000 Baseball Stars Baseball Stars II Bases Loaded (Rev B) Bases Loaded 3 Bases Loaded 4 Bases Loaded II - Second Season Batman - Return of the Joker Batman - The Video Game -

Bomberman-Quickstart 4.Pdf

Bomberman English QS 6/8/00 10:00 AM. Meet the Fuse that Just Won’t Lose. For those of you not familiar with the Bomberman experience, I will spare you the details. The dynamics of the game are as easy as 1-2-3. 1. Drop a bomb. 2. Run like hell. 3. Watch your back (and your front, your left, etc. Just watch out.) Installation: ... 95 double-click “My Computer,” then double-click the CD-ROM icon, then double-click the setup icon. Online Manual / Help: Contains the manual you are holding in your hands. About Bomberman: This contains information not ready at the time of this writing. You should read this in its entirety as it will contain information on the various tools available, as well as any changes in functionality omitted within this manual. Controls: <Space Bar> or Joystick Button 1 • Drop Bomb • Drop Spooge • Grab • Throw Bomb. <Enter> or Joystick Button 2 • Action Button • Stop kicked Bomb • Punch Bomb • Activate Trigger. <Cursor Keys> Move Bomberman. Getting Started. System Requirements: MINIMUM: IBM or 100% compatible Pentium® 90, 16 MB of RAM, 40 MB free hard disk space, CD-ROM drive, LocalBus or PCI SVGA video card, Sound Blaster® or 100% compatible sound card, Windows® 95. Start Game. Battle Mode Setup: This is a 1-10 player no-holds barred action game! Play either against human or computer opponents in this ... When you start the game it automatically detects the number of gamepads on your machine. Exit Game: This will end your current session of ...

Bomberman As an Artificial Intelligence Platform

MSc, 2.º Ciclo, FCUP, 2016. Bomberman as an Artificial Intelligence Platform. Manuel António da Cruz Lopes. Master's dissertation presented to the Faculdade de Ciências da Universidade do Porto in Área Científica, 2016. Manuel António da Cruz Lopes, Bomberman as an Artificial Intelligence Platform, Departamento de Ciência de Computadores, Faculdade de Ciências da Universidade do Porto, 2016. Thesis submitted to the Faculdade de Ciências da Universidade do Porto for the degree of Master in Computer Science (Mestre em Ciência de Computadores). Advisors: Prof. Pedro Ribeiro, Departamento de Ciência de Computadores, Faculdade de Ciências da Universidade do Porto. June 2016. To my parents and my brother. Acknowledgments: I would like to thank my supervisor, Prof. Pedro Ribeiro, for the opportunity to work on this research topic, giving me enough freedom to experiment and learn on my own, and yet he has always been there to guide me. Also to my friends and family that gave me the moral support needed to endure this journey. Thank you. Abstract: Computer games have a long tradition in the Artificial Intelligence (AI) field. They serve the purpose of establishing challenging goals with long term positive repercussions, with researchers trying to develop AIs capable of beating the world human experts. They also provide a good amount of exposure in mainstream media, whilst also giving an engaging environment for teaching computer science concepts in general and AI methods in particular.

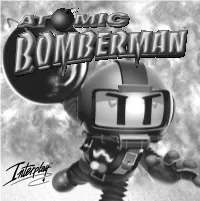

CSSE220 Bomberman Programming Assignment – Team Project

CSSE220 BomberMan programming assignment – Team Project. You will write a game that is patterned after the 1980s BomberMan game. You can find a description of the game, and much more information, here: http://strategywiki.org/wiki/Bomberman Also, the original NES instruction manual: http://www.gamesdatabase.org/Media/SYSTEM/Nintendo_NES/manual/Formated/Bomberman_-_1987_-_Hudson_Soft.pdf You can also find an online playable version of the game here: http://game-oldies.com/play-online/bomberman-nintendo-nes# Table of Contents: Essential features of your program; Nice features to add; Additional features that you might add include; A major goal of this project; Parallel work; Development cycles; Milestones (all due at the beginning of class, except as noted); Cycle 0: UML Class Diagram

Fall Guys: Ultimate Knockout and Super Bomberman R Online Meet in a Crossover

** PRESS RELEASE ** May 27, 2021. FALL GUYS: ULTIMATE KNOCKOUT AND SUPER BOMBERMAN R ONLINE MEET IN A CROSSOVER. SUPER BOMBERMAN R ONLINE launches today for PlayStation, Xbox, Switch, and Steam as a free-to-play title and invites the “Bean Bomber” from Fall Guys. Konami Digital Entertainment B.V. and Mediatonic today announce a special crossover between the newly released SUPER BOMBERMAN R ONLINE and the “bean-filled stumble royale” Fall Guys: Ultimate Knockout. As part of the collaboration, all SUPER BOMBERMAN R ONLINE players can obtain an exclusive “Bean Bomber” playable character*. This is the first time a Fall Guy has made it into another game. *Available in-game on all platforms until further notice. No additional purchase necessary. The “Bean Bomber” can dive across the battlefield to avoid being hit by countless bombs while players compete against each other in the new “Battle 64” online mode. In addition, the Bomberman series will make its way into Fall Guys: Ultimate Knockout with the new “Bomberman” costume! The iconic character lands in the Blunderdome on June 4, 2021 and will be available in the Fall Guys in-game shop for a total of ten crowns. Image captions: “Bean Bomber” character in SBRO; “Bomberman” costume in Fall Guys; “Bean Bomber” gameplay in SBRO; “Bean Bomber” profile in SBRO. SUPER BOMBERMAN R ONLINE is available from today for PlayStation®4, Xbox One, Nintendo Switch™, and PC via Steam as a download-only free-to-play title. The game is also playable on PlayStation®5 and Xbox Series X|S. Store links: PlayStation Store, Microsoft Store (Xbox), Nintendo eShop, Steam, Google Stadia. KONAMI's guidelines for user-created content (e.g.

SUPER BOMBERMAN R a Fast Paced 1 to 8 Player Party Action Game That Fully Utilizes the New and Unique Nintendo Switch™!

RELEASE DATE: March 3rd 2017. GENRE: Action. PRICE: $49.99. HAVE A BLAST!! SUPER BOMBERMAN R: A fast paced 1 to 8 player party action game that fully utilizes the new and unique Nintendo Switch™! Bomberman, the iconic party-battle game, is returning as “SUPER BOMBERMAN R” on the Nintendo Switch and will be released alongside the launch of the new system for home and on-the-go fun! Dive into the simple yet addictive gameplay in SUPER BOMBERMAN R by controlling the main character, placing bombs, and battling enemies and rivals with a modern visual style including 3D stages and photo-realistic graphics. Take out CPU-controlled adversaries in ‘Story’ mode to clear a series of 50 unique stages in order to save the galaxy. SUPER BOMBERMAN R also makes full use of the Nintendo Switch’s first-of-its-kind system capabilities, which allow gamers to play wherever, whenever, and with whomever they choose. Players can go head-to-head in a heated “Battle” mode where up to eight players are dropped within a maze until the “last man standing” is declared the winner. All iconic Bomberman elements return to the game, including a dynamite list of items found within the destructible areas of each maze. Removing a wall will often reveal a power-up to extend the range of the explosion or endow Bomberman with a useful skill, including faster movement, the ability to kick or throw bombs, and more! As players gain ever more devastating capabilities, they must use all their skill and ingenuity to ensure their opponents get caught in a blast while avoiding its fiery touch! Features: • 50+ levels of single player or Co-Op maps! • 3D stages with dynamic environments • Bomberman’s siblings and well-known enemies are back with rich personality • Battle mode for a maximum of 8 players, local connection battles, and online battles
Marketing Calendar (JAN, FEB, MAR, APR): Advertising; PR; Community; Nintendo Crossover; Retail / POP. Blood, Language, Violence. ©2017 Konami Digital Entertainment ©2017 Nintendo.