Service-Oriented and Evidence-Aware Mobile Cloud Computing Abstract

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Google Is a Strong Performer in Enterprise Public Cloud Platforms Excerpted from the Forrester Wave™: Enterprise Public Cloud Platforms, Q4 2014 by John R

FOR CIOS, DECEMBER 29, 2014. Google Is A Strong Performer In Enterprise Public Cloud Platforms. Excerpted From The Forrester Wave™: Enterprise Public Cloud Platforms, Q4 2014, by John R. Rymer and James Staten with Peter Burris, Christopher Mines, and Dominique Whittaker. GOOGLE, NOW A FULL-SERVICE PLATFORM, IS RUNNING TO CATCH THE LEADERS. Since our last analysis, Google has made significant improvements to its cloud platform: it added an IaaS service, innovated with new big data solutions (based on its homegrown Dremel architecture), and added partners. Google is popular among web developers; we estimate that it has between 10,000 and 99,000 customers. But Google Cloud Platform lacks several key certifications, monitoring and security controls, and application services important to CIOs and provided by AWS and Microsoft. Google has also been slow to position its cloud platform as the home for applications that want to leverage the broad set of Google services such as Android, AdSense, Search, Maps, and so many other technologies. Look for that to be a key focus in 2015, and for a faster cadence of new features. [Figures: Forrester Wave™: Enterprise Public Cloud Platforms For CIOs, Q4 '14, and Forrester Wave™: Enterprise Public Cloud Platforms For Rapid Developers, Q4 '14. Each chart plots vendors by current offering across the bands Risky Bets, Contenders, Strong Performers, and Leaders. Vendors named in the charts: Amazon Web Services, Microsoft, Salesforce, MIOsoft, Cordys*, Mendix, OutSystems, Google, Acquia, Rackspace*, IBM, Engine Yard, CenturyLink, Dimension Data, GoGrid.] Google, with a Forrester score of 2.35, is a Strong Performer in this Forrester Wave. -

Deliverable No. 5.3 Techniques to Build the Cloud Infrastructure Available to the Community

Deliverable No. 5.3: Techniques to build the cloud infrastructure available to the community. Grant Agreement No.: 600841. Deliverable No.: D5.3. Deliverable Name: Techniques to build the cloud infrastructure available to the community. Contractual Submission Date: 31/03/2015. Actual Submission Date: 31/03/2015. Dissemination Level: PU, Public (selected); the other levels are PP, restricted to other programme participants (including the Commission Services); RE, restricted to a group specified by the consortium (including the Commission Services); and CO, confidential, only for members of the consortium (including the Commission Services). COVER AND CONTROL PAGE OF DOCUMENT. Project Acronym: CHIC. Project Full Name: Computational Horizons In Cancer (CHIC): Developing Meta- and Hyper-Multiscale Models and Repositories for In Silico Oncology. Nature (R, P, D, O): R. Version: 1.0. Editor: Manolis Tsiknakis. Institution: FORTH. E-Mail: [email protected] ABSTRACT: This deliverable reports on the technologies, techniques and configuration needed to install, configure, maintain and run a private cloud infrastructure for productive usage. KEYWORD LIST: Cloud infrastructure, OpenStack, Eucalyptus, CloudStack, VMware vSphere, virtualization, computation, storage, security, architecture. The research leading to these results has received funding from the European Community's Seventh Framework Programme (FP7/2007-2013) under grant agreement no 600841. The author is solely responsible for its content; it does not represent the opinion of the European Community, and the Community is not responsible for any use that might be made of data appearing therein. -

D1.5 Final Business Models

ITEA 2 Project 10014 EASI-CLOUDS - Extended Architecture and Service Infrastructure for Cloud-Aware Software. Deliverable D1.5 – Final Business Models for EASI-CLOUDS. Task 1.3: Business model(s) for the EASI-CLOUDS eco-system. Editor: Atos, Gearshift. Security: public. Version: 1.0. Authors (alphabetically): Melanie Jekal, Alexander Krebs, Markku Nurmela, Juhana Peltonen, Florian Röhr, Jan-Frédéric Plogmeier, Jörn Altmann, Maurice Gagnaire, Mario Lopez-Ramos. Pages: 95. Abstract: The purpose of the business working group within the EASI-CLOUDS project is to investigate the commercial potential of the EASI-CLOUDS platform, and the brokerage and federation-based business models that it would help to enable. Our described approach is both ‘top down’ and ‘bottom up’; we begin by summarizing existing studies on the cloud market, and review how the EASI-CLOUDS project partners are positioned on the cloud value chain. We review emerging trends, concepts, business models and value drivers in the cloud market, and present results from a survey targeted at top cloud bloggers and cloud professionals. We then review how the EASI-CLOUDS infrastructure components create value both directly and by facilitating brokerage and federation. We then examine how cloud market opportunities can be grasped through different business models. Specifically, we examine value creation and value capture in different generic business models that may benefit from the EASI-CLOUDS infrastructure. We conclude by providing recommendations on how the different EASI-CLOUDS demonstrators may be commercialized through different business models. © EASI-CLOUDS Consortium. -

Cloud Computing: a Taxonomy of Platform and Infrastructure-Level Offerings David Hilley College of Computing Georgia Institute of Technology

Cloud Computing: A Taxonomy of Platform and Infrastructure-level Offerings. David Hilley, College of Computing, Georgia Institute of Technology, April 2009. 1 Introduction. Cloud computing is a buzzword and umbrella term applied to several nascent trends in the turbulent landscape of information technology. Computing in the “cloud” alludes to ubiquitous and inexhaustible on-demand IT resources accessible through the Internet. Practically every new Internet-based service, from Gmail [1] to Amazon Web Services [2] to Microsoft Online Services [3] to even Facebook [4], has been labeled a “cloud” offering, either officially or externally. Although cloud computing has garnered significant interest, factors such as unclear terminology, non-existent product “paper launches”, and opportunistic marketing have led to a significant lack of clarity surrounding discussions of cloud computing technology and products. The need for clarity is well-recognized within the industry [5] and by industry observers [6]. Perhaps more importantly, due to the relative infancy of the industry, currently-available product offerings are not standardized. Neither providers nor potential consumers really know what a “good” cloud computing product offering should look like and what classes of products are appropriate. Consequently, products are not easily comparable. The scopes of the various product offerings differ and overlap in complicated ways; for example, Amazon’s EC2 service [7] and Google’s App Engine [8] partially overlap in scope and applicability. EC2 is more flexible but also lower-level, while App Engine subsumes some functionality in the Amazon Web Services suite of offerings [2] external to EC2. -

Cutter IT Journal

Cutter IT Journal: The Journal of Information Technology Management. Vol. 26, No. 3, March 2013. The Emerging Cloud Ecosystem: Innovative New Services and Business Models. “Cloud service providers, the IT industry, professional and industry associations, governments, and IT professionals all have a role to play in shaping, fostering, and harnessing the full potential of the emerging cloud ecosystem.” (San Murugesan, Guest Editor). Contents: Opening Statement, by San Murugesan; Merging IaaS with PaaS to Deliver Robust Development Tools, by Beth Cohen; Intrusion Detection as a Service (IDaaS) in an Open Source Cloud Infrastructure, by John Prakash Veigas and K Chandra Sekaran; Cloud Ecology: Surviving in the Jungle, by Claude R. Baudoin; The Promise of a Diverse, Interoperable Cloud Ecosystem, And Recommendations for Realizing It, by Kathy L. Grise. NOT FOR DISTRIBUTION. For authorized use, contact Cutter Consortium: +1 781 648 8700 [email protected] About Cutter IT Journal: Part of Cutter Consortium’s mission is to foster debate and dialogue on the business technology issues challenging enterprises today, helping organizations leverage IT for competitive advantage and business success. Cutter’s philosophy is that most of the issues that managers face are complex enough to merit examination that goes beyond simple [text cut off in the excerpt]. Cutter IT Journal subscribers consider the Journal a “consultancy in print” and liken each month’s issue to the impassioned debates they participate in at the end of a day at a conference. Every facet of IT (application integration, security, portfolio management, and testing, [text cut off in the excerpt]. Cutter Business Technology Council: Rob Austin, Ron Blitstein, Tom DeMarco, Lynne Ellyn, Israel Gat, Vince Kellen, Tim Lister, Lou Mazzucchelli, Ken Orr, and Robert D. Scott. Editor Emeritus: Ed Yourdon. Publisher: Karen Fine Coburn. Group Publisher: Chris Generali. Managing Editor: Karen Pasley. Production Editor: Linda M. -

An Lc-Tools Tutorial

An lc-tools Tutorial. Roman Bogorodskiy [email protected] May 30, 2011. Contents. 0.1 Introduction. lc-tools is a set of command line tools to manage various Cloud (aka IaaS) providers. It is written in Python and uses libcloud to interact with each provider’s API. 0.2 Getting Started. 0.2.1 Installing Dependencies. The only external dependency is libcloud. Please visit the libcloud download page for information on how to download and install it. Installing from PyPI: the latest stable version of lc-tools can be installed from PyPI: easy_install lctools. Installing from source: to get the latest development version, check out the sources from the project’s GitHub page: git clone https://github.com/novel/lc-tools.git. Now you should have all the sources and be ready to proceed to installation. As lc-tools uses setuptools, the installation process is fairly simple: $ cd lc-tools $ sudo python setup.py install. This will install all the tools, and you will be able to use them after configuration (the next section, ??, explains how to configure lc-tools). However, some additional tools in lc-tools are not installed by default: the various provider-specific tools. What are provider-specific tools, you might ask. libcloud is designed to provide a unified API to the cloud, so its model is almost an intersection of the APIs of various cloud providers. However, a provider can have its own specific API calls; for example, provider Foo might have a call that returns how many servers can be created in your current account or, say, the maximum allowed rate of API requests per minute. -
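The distinction the excerpt draws between a unified API and provider-specific calls can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it does not import libcloud, and the names `NodeDriver`, `FooDriver`, `BarDriver`, `ex_remaining_nodes`, and `provider_specific_calls` are hypothetical. The one detail borrowed from libcloud is its convention of prefixing provider-specific extension methods with `ex_`.

```python
from abc import ABC, abstractmethod


class NodeDriver(ABC):
    """Hypothetical common interface: only calls that every provider supports."""

    @abstractmethod
    def list_nodes(self):
        """Return the names of the servers in the account."""


class FooDriver(NodeDriver):
    """Hypothetical driver for the excerpt's imaginary provider Foo."""

    def __init__(self, nodes, node_limit):
        self._nodes = list(nodes)
        self._node_limit = node_limit

    def list_nodes(self):
        return list(self._nodes)

    def ex_remaining_nodes(self):
        # Provider-specific extra: how many more servers the account may create.
        return self._node_limit - len(self._nodes)


class BarDriver(NodeDriver):
    """Hypothetical second provider that offers only the common calls."""

    def __init__(self, nodes):
        self._nodes = list(nodes)

    def list_nodes(self):
        return list(self._nodes)


def provider_specific_calls(driver):
    """List the callable attributes that follow the ex_ naming convention."""
    return sorted(
        name
        for name in dir(driver)
        if name.startswith("ex_") and callable(getattr(driver, name))
    )


if __name__ == "__main__":
    foo = FooDriver(["web-1", "web-2"], node_limit=5)
    bar = BarDriver(["db-1"])
    for driver in (foo, bar):
        # The common call works on every driver; the extras vary by provider.
        print(type(driver).__name__, driver.list_nodes(), provider_specific_calls(driver))
```

Code written against `list_nodes` alone stays portable across both drivers, while anything that calls an `ex_` method is tied to the provider that defines it, which is presumably why such tools would be packaged separately.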

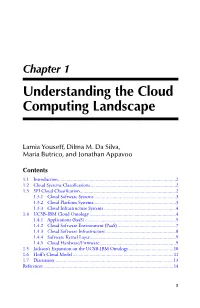

Understanding the Cloud Computing Landscape

Chapter 1: Understanding the Cloud Computing Landscape. Lamia Youseff, Dilma M. Da Silva, Maria Butrico, and Jonathan Appavoo. Contents: 1.1 Introduction; 1.2 Cloud Systems Classifications; 1.3 SPI Cloud Classification; 1.3.1 Cloud Software Systems; 1.3.2 Cloud Platform Systems; 1.3.3 Cloud Infrastructure Systems; 1.4 UCSB-IBM Cloud Ontology; 1.4.1 Applications (SaaS); 1.4.2 Cloud Software Environment (PaaS); 1.4.3 Cloud Software Infrastructure; 1.4.4 Software Kernel Layer; 1.4.5 Cloud Hardware/Firmware; 1.5 Jackson’s Expansion on the UCSB-IBM Ontology; 1.6 Hoff’s Cloud Model; 1.7 Discussion -

Distributed Anomaly Detection and Prevention for Virtual Platforms

Distributed Anomaly Detection and Prevention for Virtual Platforms. Dissertation for the award of the doctoral degree in mathematics and natural sciences, "Doctor rerum naturalium", of the Georg-August-Universität Göttingen, in the doctoral program Computer Science (PCS) of the Georg-August University School of Science (GAUSS), submitted by Ali Imran Jehangiri from Mansehra, Pakistan. Göttingen, 2015. Thesis committee: Prof. Dr. Ramin Yahyapour, Gesellschaft für wissenschaftliche Datenverarbeitung Göttingen mbH (GWDG), Institut für Informatik, Georg-August-Universität Göttingen; Prof. Dr. Stephan Waack, Institut für Informatik, Georg-August-Universität Göttingen. Members of the examination board: Reviewer: Prof. Dr. Ramin Yahyapour, Gesellschaft für wissenschaftliche Datenverarbeitung Göttingen mbH (GWDG), Institut für Informatik, Georg-August-Universität Göttingen. Second reviewer: Prof. Dr. Andrei Tchernykh, Computer Science Department, CICESE Research Center, Ensenada, Baja California, Mexico. Further members of the examination board: Prof. Dr. Carsten Damm, Institut für Informatik, Georg-August-Universität Göttingen; Prof. Dr. Dieter Hogrefe, Institut für Informatik, Georg-August-Universität Göttingen; Prof. Dr. Xiaoming Fu, Institut für Informatik, Georg-August-Universität Göttingen; Prof. Dr. Winfried Kurth, Abteilung Ökoinformatik, Biometrie und Waldwachstum, Georg-August-Universität Göttingen. Date of the oral examination: 17.07.2015. Abstract: An increasing number of applications are being hosted on cloud-based platforms [69]. Cloud platforms serve as a general computing facility, and the applications hosted on them range from simple multi-tier web applications to complex social networking, eCommerce and Big Data applications. High availability, performance and auto-scaling are key requirements of cloud-based applications. Cloud platforms serve these requirements by provisioning resources dynamically in an on-demand, multi-tenant fashion. -

HPC Management and Engineering in the Hybrid Cloud

Universidade de Aveiro, Departamento de Eletrónica, Telecomunicações e Informática, 2015. André Frederico Guilhoto Monteiro. Gestão e engenharia de CAP na Nuvem Híbrida (HPC Management and Engineering in the Hybrid Cloud). Thesis presented to the Universities of Minho, Aveiro and Porto in fulfilment of the requirements for the degree of Doctor in Informatics within the joint MAP-i doctoral programme, carried out under the scientific supervision of Doutor Cláudio Jorge Vieira Teixeira, equivalent to Assistant Researcher, and Doutor Joaquim Manuel Henriques de Sousa Pinto, Assistant Professor, both of the Departamento de Eletrónica, Telecomunicações e Informática of the Universidade de Aveiro. To my father, the true “engineer” who inspired me to seek out innovative things, to my mother for her support and her high standards in my education, and to the woman of my life for her encouragement, additional work and extra patience. The jury: President: Prof. Doutor Domingos Moreira Cardoso, Full Professor at the Universidade de Aveiro. Examiners committee: Prof. Doutor Fernando Manuel Augusto Silva, Full Professor at the Faculdade de Ciências da Universidade do Porto; Prof. Doutor Alfredo Moreira Caseiro Rocha, Associate Professor with Habilitation (Agregação) at the Universidade de Aveiro; Prof. Doutor Ignacio Blanquer, Associate Professor at the Universidade Politécnica de Valência; Prof. Doutor José Miguel Oliveira Monteiro Sales Dias, Invited Associate Professor at the Instituto Universitário de Lisboa; Prof. Doutor Filipe João Boavida Mendonça Machado Araújo, Assistant Professor at the Faculdade de Ciências e Tecnologia da Universidade de Coimbra; Prof. -

Cloud Computing Oct 7 2008

CS 683 Emerging Technologies, Fall Semester, 2008. Doc 10: Cloud Computing, Oct 7 2008. Copyright ©, All rights reserved. 2008 SDSU & Roger Whitney, 5500 Campanile Drive, San Diego, CA 92182-7700 USA. OpenContent (http://www.opencontent.org/openpub/) license defines the copyright on this document. References: Amazon Simple Storage Service Getting Started Guide, http://docs.amazonwebservices.com/AmazonS3/2006-03-01/gsg/; Amazon Simple Storage Service, http://aws.amazon.com/s3/; Twenty-One Experts Define Cloud Computing, http://cloudcomputing.sys-con.com/node/612375/print; Cloud Computing Community Wiki, http://wiki.cloudcommunity.org/wiki/Main_Page; Cloud computing, http://en.wikipedia.org/wiki/Cloud_computing. Reading: Cloud computing, http://en.wikipedia.org/wiki/Cloud_computing; Amazon Simple Storage Service Getting Started Guide, http://docs.amazonwebservices.com/AmazonS3/2006-03-01/gsg/. Cloud Computing. Examples: Google Apps, iTunes Store, BitTorrent, Skype, Web mail, Facebook, Google Maps. In the Beginning: "computation may someday be organized as a public utility" (John McCarthy, 1960). Wikipedia Definition: IT-related capabilities are provided “as a service”; services are accessed anywhere via network access. IEEE: It is a paradigm in which information is permanently stored in servers on the Internet and cached temporarily on clients that include desktops, entertainment centers, table computers, notebooks, wall computers, handhelds, etc. Key Characteristics: capital expenditure minimized for users; device and location independence; performance; reliability -

Delivery Services Model of Cloud Computing: a Perspective Overview

International Journal of Innovative Computing, Information and Control, ICIC International © 2012, ISSN 1349-4198, Volume 8, Number 8, August 2012, pp. 5873–5884. DELIVERY SERVICES MODEL OF CLOUD COMPUTING: A PERSPECTIVE OVERVIEW. Feng-Tse Lin and Chieh-Hung Huang, Department of Applied Mathematics, Chinese Culture University, No. 55, Hwa-Kang Road, Yang-Min-Shan, Taipei 111, Taiwan. [email protected] Received March 2011; revised August 2011. Abstract. Cloud computing is a consequence of economic, commercial, cultural and technological conditions that have combined to cause a disruptive shift in the IT industry towards a service-based economy. It is a style of computing in which massively scalable IT-enabled capabilities are provided as a service over the network, giving rise to the “As a Service” business. As it has evolved, Cloud computing has become able to handle massive data as an on-demand service. Supporting this transition is a range of technologies from clustering to virtualization. This study presents an expanded delivery services model of Cloud computing for enterprise and business. The characteristics and the challenges of Cloud computing are analyzed and discussed. The offerings from some Cloud service providers are also outlined. Keywords: Cloud computing, Virtualization, Cloud infrastructure, Cloud services, Everything-as-a-Service. 1. Introduction. According to the IBM technical white paper on Cloud computing, the concept developed from earlier ideas such as grid and utility computing, and aims to provide a completely Internet-driven, dynamic and scalable service-oriented IT environment, which can be accessed from anywhere using any web-capable device [6]. With Cloud computing technology, the user’s computer no longer has to do all the heavy computing or data storage. -

An Explanatory Case Study on Cloud Computing Applications in the Built Environment

Automation in Construction 44 (2014) 152–162. Journal homepage: www.elsevier.com/locate/autcon. An explanatory case study on cloud computing applications in the built environment. Heap-Yih Chong a,b,⁎, John Son Wong a, Xiangyu Wang b,c. a Faculty of Engineering & Science, Universiti Tunku Abdul Rahman, Malaysia. b Australasian Joint Research Centre for Building Information Modelling (BIM), School of Built Environment, Curtin University, Australia. c Department of Housing and Interior Design, Kyung Hee University, Seoul, Republic of Korea. Article history: Received 12 September 2013; received in revised form 21 February 2014; accepted 15 April 2014; available online xxxx. Keywords: Cloud computing, BIM, Project management, Critical review. Abstract: Fragmentation of practices is one of the key issues in the built environment. However, with advances in Information and Communication Technology (ICT), particularly cloud computing, the fragmentation of working practices can potentially be overcome. The technology could enhance communication and information flow in various stages along a project life cycle. Due to the increasing demands and the newly developed cloud computing applications, it is critical to review and identify the appropriate cloud computing applications in the built environment. A total of forty-two cloud computing applications, consisting of general cloud applications, Building Information Modelling (BIM), and project management cloud applications, were selected and critically reviewed. A decision-making model was also developed to assist parties in selecting a suitable application. The explanatory case study has discovered numerous possible cloud computing applications in various disciplines; for example, Google Apps, Autodesk BIM 360, and Viewpoint are the applications with the most features.