Long Short-Term Memory Recurrent Neural Network for Detecting DDoS Flooding Attacks Within TensorFlow Implementation Framework


Recommended publications


Data Science Initiative

Brown University's Data Science Initiative serves as a campus hub for research and education in data science. Engaging partners across campus and beyond, the DSI's mission is to facilitate and conduct both domain-driven and fundamental research in data science, increase data fluency and educate the next generation of data scientists, and ultimately explore the impact of the data revolution on culture, society, and social justice. We envision our role in the university and beyond as something to build over time, with the flexibility to meet the changing needs of Brown's students and research community.

Director: Sohini Ramachandran; Director of Graduate Studies: Samuel S. Watson

The Master's Program in Data Science (Master of Science, ScM) prepares students from a wide range of disciplinary backgrounds for distinctive careers in data science.

… 3 credits in data and computational science, 1 credit in societal implications and opportunities, 1 elective credit to be drawn from a wide range of focused applications or deeper theoretical exploration, and a 1-credit capstone experience. We also offer a fifth-year Master's Program option if you are an undergraduate at Brown; it allows you to substitute at most 2 credits with courses you have already taken.

Master of Science in Data Science

| Semester | Course | Credits |
| --- | --- | --- |
| I | DATA 1010 Probability, Statistics, and Machine Learning | 2 |
| I | DATA 1030 Hands-on Data Science | 1 |
| I | DATA 1050 Data Engineering | 1 |
| II | DATA 2020 Statistical Learning | 1 |
| II | DATA 2040 Deep Learning and Special Topics in Data Science | 1 |
| II | DATA 2080 Data and Society | 1 |

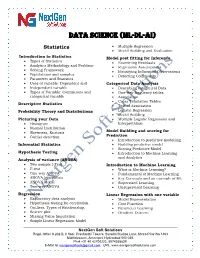

Data Science (ML-DL-AI)

- Statistics: Introduction to Statistics; Types of Statistics; Analytics Methodology and Problem-Solving Framework; Populations and Samples; Parameters and Statistics; Uses of Variables: Dependent and Independent Variables; Types of Variables: Continuous and Categorical; Descriptive Statistics; Probability Theory and Distributions; Picturing Your Data; Histograms; Normal Distribution; Skewness and Kurtosis; Outlier Detection
- Inferential Statistics: Hypothesis Testing
- Analysis of Variance (ANOVA): Two-Sample t-Test; F-Test; One-Way ANOVA; ANOVA Hypothesis; ANOVA Model; Two-Way ANOVA
- Regression: Exploratory Data Analysis; Hypothesis Testing for Correlation; Outliers; Types of Relationship; …
- Multiple Regression Model Building and Evaluation: Model Post-Fitting for Inference; Examining Residuals; Regression Assumptions; Identifying Influential Observations; Detecting Collinearity
- Categorical Data Analysis: Describing Categorical Data; One-Way Frequency Tables; Association; Cross-Tabulation Tables; Test of Association
- Logistic Regression Model Building: Multiple Logistic Regression and Interpretation
- Model Building and Scoring for Prediction: Introduction to Predictive Modelling; Building a Predictive Model; Scoring a Predictive Model
- Introduction to Machine Learning and Analytics: Introduction to Machine Learning; What Is Machine Learning?; Fundamentals of Machine Learning; Key Concepts and an Example of ML; Supervised Learning; Unsupervised Learning
- Linear Regression with One Variable: Model Representation; Cost Function

Data Science: The Big Picture; Data Science with R; Exploratory Data Analysis with R; Data Visualization with R (3-Part)

Deep Learning: The Future of AI. Matthew Renze (@MatthewRenze), #DevoxxUK

[Images: Human, Cat, Dog, Car]

[Chart: Job postings for machine learning; source: Indeed.com]

[Chart: Average salary by job type (USA): $108,000; $101,000; $100,000; source: Stack Overflow 2017]

Questions: What is deep learning? What can it do for me? How do I get started?

What is deep learning? Deep learning is a kind of machine learning, which is in turn a branch of artificial intelligence. It uses neural networks with multiple hidden layers that learn hierarchical representations, and it makes predictions with data.

Artificial intelligence can be built through explicit programming and by encoding domain knowledge; machine learning instead relies on statistical pattern detection and draws on both artificial intelligence and statistics.

A machine learning model is a function f that maps data x to a prediction f(x). For example, given an image of a cat or a dog, f answers the question "Is it a cat?" with "Yes".

Artificial neuron: the inputs x1, x2, x3 are multiplied by the weights ω1, ω2, ω3, summed (Σ) together with a bias weight ω0, and passed through an activation function φ to produce the output y:

$$y_k = \varphi\left(\sum_{j=0}^{m} w_{kj}\, x_j\right)$$

Artificial neural network: neurons are arranged in input, hidden, and output layers. Forward propagation computes the outputs from the inputs; backward propagation sends the error back through the network to update the weights.
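The neuron equation above can be sketched in a few lines of Python. This is a minimal illustration, not code from the talk: it assumes the common convention that the bias weight ω0 multiplies a constant input x0 = 1, and it uses a sigmoid as the activation φ because the slides do not name one. The function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence


def sigmoid(z: float) -> float:
    """Logistic activation, used here only as an example choice of phi."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(
    inputs: Sequence[float],
    weights: Sequence[float],
    phi: Callable[[float], float] = sigmoid,
) -> float:
    """Compute y_k = phi(sum_{j=0}^{m} w_kj * x_j) for one neuron k.

    weights[0] is the bias weight w_k0, paired with a constant input x_0 = 1
    (an assumed convention), so len(weights) must equal len(inputs) + 1.
    """
    if len(weights) != len(inputs) + 1:
        raise ValueError("expected one weight per input plus a bias weight")
    x = [1.0, *inputs]  # prepend the constant bias input x_0 = 1
    z = sum(w * xj for w, xj in zip(weights, x))  # the summation node (Sigma)
    return phi(z)


def forward(layers: Sequence[Sequence[Sequence[float]]], inputs: Sequence[float]) -> List[float]:
    """Forward propagation: each layer is a list of per-neuron weight vectors."""
    activations = list(inputs)
    for layer in layers:
        activations = [neuron_output(activations, w) for w in layer]
    return activations
```

For example, with every weight set to zero the weighted sum is 0 and the sigmoid output is 0.5; passing `phi=lambda z: z` turns the neuron into a plain weighted sum.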

Explainable Deep Learning Models in Medical Image Analysis

Journal of Imaging (Review)

Explainable Deep Learning Models in Medical Image Analysis

Amitojdeep Singh (1, 2, *), Sourya Sengupta (1, 2) and Vasudevan Lakshminarayanan (1, 2)

1 Theoretical and Experimental Epistemology Laboratory, School of Optometry and Vision Science, University of Waterloo, Waterloo, ON N2L 3G1, Canada

2 Department of Systems Design Engineering, University of Waterloo, Waterloo, ON N2L 3G1, Canada

* Corresponding author

Received: 28 May 2020; Accepted: 17 June 2020; Published: 20 June 2020

Abstract: Deep learning methods have been very effective for a variety of medical diagnostic tasks and have even outperformed human experts on some of them. However, the black-box nature of the algorithms has restricted their clinical use. Recent explainability studies aim to show which features most influence a model's decision. Most literature reviews of this area have focused on taxonomy, ethics, and the need for explanations. Here we present a review of the current applications of explainable deep learning to different medical imaging tasks. The various approaches, the challenges for clinical deployment, and the areas requiring further research are discussed from the practical standpoint of a deep learning researcher designing a system for clinical end-users.

Keywords: explainability; explainable AI; XAI; deep learning; medical imaging; diagnosis

1. Introduction

Computer-aided diagnostics (CAD) using artificial intelligence (AI) provides a promising way to make the diagnosis process more efficient and available to the masses. Deep learning is the leading AI method for a wide range of tasks, including medical imaging problems.
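One common way to show which features influence a model's decision most is a gradient-based saliency score: the magnitude of the derivative of the model output with respect to each input feature. The review surveys many explainability methods; the sketch below is not taken from it and uses a hypothetical logistic-regression model in place of a deep network, because its input gradient has the closed form σ(z)(1 − σ(z))·w with z = w·x + b. For a deep network the same gradient would normally come from automatic differentiation.

```python
import math
from typing import List, Sequence


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Hypothetical stand-in model: p = sigmoid(w . x + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def saliency(w: Sequence[float], b: float, x: Sequence[float]) -> List[float]:
    """|dp/dx_i| = sigmoid(z) * (1 - sigmoid(z)) * |w_i| for each input feature."""
    p = predict_proba(w, b, x)
    return [abs(p * (1.0 - p) * wi) for wi in w]


def gradient_times_input(w: Sequence[float], b: float, x: Sequence[float]) -> List[float]:
    """Signed attribution (dp/dx_i) * x_i, another common variant."""
    p = predict_proba(w, b, x)
    return [p * (1.0 - p) * wi * xi for wi, xi in zip(w, x)]


def top_features(scores: Sequence[float]) -> List[int]:
    """Feature indices ordered from most to least influential."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
```

In an imaging setting each "feature" would be a pixel, and the per-pixel scores would be displayed as a heat map over the input image.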

Comparative Study of Deep Learning Software Frameworks

Soheil Bahrampour, Naveen Ramakrishnan, Lukas Schott, Mohak Shah

Research and Technology Center, Robert Bosch LLC

{Soheil.Bahrampour, Naveen.Ramakrishnan, fixed-term.Lukas.Schott, Mohak.Shah}@us.bosch.com

ABSTRACT

Deep learning methods have resulted in significant performance improvements in several application domains and, as such, several software frameworks have been developed to facilitate their implementation. This paper presents a comparative study of five deep learning frameworks, namely Caffe, Neon, TensorFlow, Theano, and Torch, on three aspects: extensibility, hardware utilization, and speed. The study is performed on several types of deep learning architectures, and we evaluate the performance of the above frameworks when employed on a single machine in both (multi-threaded) CPU and GPU (Nvidia Titan X) settings. The speed performance metrics used here include the gradient computation time, which is important during the training phase of deep networks, and the forward time, which is important from the deployment perspective of trained networks.

… such as dropout and weight decay [2]. As the popularity of deep learning methods has increased over the last few years, several deep learning software frameworks have appeared to enable efficient development and implementation of these methods. The list of available frameworks includes, but is not limited to, Caffe, DeepLearning4J, deepmat, Eblearn, Neon, PyLearn, TensorFlow, Theano, and Torch. Different frameworks try to optimize different aspects of training or deployment of a deep learning algorithm. For instance, Caffe emphasises ease of use, where standard layers can be easily configured without hard-coding, while Theano provides automatic differentiation capabilities, which facilitate flexibility to modify architectures for research and development. Several of these frameworks have received wide attention from the research community and are well developed, allowing efficient training of deep networks with …
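The two speed metrics described above, forward time and gradient computation time, can be measured with a simple wall-clock harness. This is a hypothetical sketch, not the study's benchmarking code: the toy linear workload (y = Wx with loss L = 0.5·‖y‖²), the median over repeats, and the warm-up count are all assumptions, and a real comparison would time each framework's own forward and backward calls on CPU and GPU.

```python
import statistics
import time
from typing import Callable, List


def median_runtime(fn: Callable[[], object], repeats: int = 20, warmup: int = 3) -> float:
    """Median wall-clock seconds per call, measured after a few untimed warm-up calls."""
    for _ in range(warmup):
        fn()  # warm-up: keeps one-time setup costs out of the measurement
    samples: List[float] = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


# Hypothetical toy workload: a 64x64 linear layer applied to a constant input.
W = [[0.01 * (i + j) for j in range(64)] for i in range(64)]
x = [1.0] * 64


def forward() -> List[float]:
    """Forward pass: y = W x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def gradient() -> List[List[float]]:
    """Forward pass plus dL/dW for L = 0.5 * ||y||^2, i.e. dL/dW_ij = y_i * x_j."""
    y = forward()
    return [[yi * xj for xj in x] for yi in y]


print(f"forward:  {median_runtime(forward):.2e} s")
print(f"gradient: {median_runtime(gradient):.2e} s")
```

Taking the median rather than the mean makes the estimate less sensitive to occasional slow runs, and timing the gradient separately mirrors the training-versus-deployment split the study draws.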

Reinforcement Learning in Videogames

Àlex Osés Laza

Final Project. Director: Javier Béjar Alonso

FIB • UPC, 20 June 2017

Acknowledgements

First, I would like to thank my director, Javier Béjar, for the work and effort he has put into me. Whether answering my many questions or guiding me throughout the whole project, he has helped me a great deal in producing a good final project. I would also like to thank my family and friends, who supported me throughout my degree and especially during this project.

Abstract (English)

While many projects and papers focus on finding and measuring the best algorithm for a given game, I decided to turn things around: I focus on two algorithms and their parameters, and aim to tell which games are best approached with them. To do this, I will implement two algorithms, Q-Learning and SARSA, using neural networks to represent the vast state spaces of the games. The idea is to implement the algorithms as generally as possible, so that anyone who wants to use them for their own game needs as little time as possible to adapt the game to the algorithm. I will use games from Artificial Intelligence competitions as a base to work with, which leaves more time to focus on the actual algorithm implementation and the comparison of results.

Abstract (Catalan)

While many projects and studies already focus on discovering and measuring, for a given game, which algorithm is best for that game, I have decided to turn this around and focus on the reverse: given two algorithms and their parameters, find which kinds of games benefit most from the given configuration.
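The two algorithms named in the abstract differ only in the bootstrap target of their temporal-difference update: Q-Learning uses the greedy value of the next state (off-policy), while SARSA uses the value of the action actually taken next (on-policy). The sketch below shows the tabular form of both updates. The project itself approximates Q with neural networks, so the dictionary-based Q-table, the example states and actions, the parameter values, and the function names here are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence, Tuple

QTable = Dict[Tuple[Hashable, Hashable], float]


def q_learning_update(Q: QTable, s, a, r: float, s_next, actions: Sequence,
                      alpha: float = 0.1, gamma: float = 0.99, done: bool = False) -> None:
    """Off-policy TD update: bootstrap from the best action available in s_next."""
    best_next = 0.0 if done else max(Q[(s_next, b)] for b in actions)
    Q[(s, a)] += alpha * (r + gamma * best_next - Q[(s, a)])


def sarsa_update(Q: QTable, s, a, r: float, s_next, a_next,
                 alpha: float = 0.1, gamma: float = 0.99, done: bool = False) -> None:
    """On-policy TD update: bootstrap from the action a_next the agent actually takes."""
    next_value = 0.0 if done else Q[(s_next, a_next)]
    Q[(s, a)] += alpha * (r + gamma * next_value - Q[(s, a)])


if __name__ == "__main__":
    # Same transition (s0, right, reward 0, s1), two different bootstrap targets.
    for name in ("Q-Learning", "SARSA"):
        Q = defaultdict(float)
        Q[("s1", "left")] = 1.0  # the greedy action in s1 is "left"
        if name == "Q-Learning":
            q_learning_update(Q, "s0", "right", 0.0, "s1", ["left", "right"], alpha=0.5, gamma=0.9)
        else:  # suppose the exploring policy actually picks "right" in s1
            sarsa_update(Q, "s0", "right", 0.0, "s1", "right", alpha=0.5, gamma=0.9)
        print(name, Q[("s0", "right")])
```

On this transition, Q-Learning moves Q(s0, right) to 0.45 because it bootstraps from the greedy value in s1, while SARSA leaves it at 0.0 because it bootstraps from the action that was actually taken.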

Distributed Negative Sampling for Word Embeddings. Stergios Stergiou (Yahoo Research), Zygimantas Straznickas (MIT)

Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17)

Stergios Stergiou (Yahoo Research), Zygimantas Straznickas (MIT), Rolina Wu (University of Waterloo), Kostas Tsioutsiouliklis (Yahoo Research)

Abstract

Word2Vec recently popularized dense vector word representations as fixed-length features for machine learning algorithms and is in widespread use today. In this paper we investigate one of its core components, Negative Sampling, and propose efficient distributed algorithms that allow us to scale to vocabulary sizes of more than 1 billion unique words and corpus sizes of more than 1 trillion words.

Introduction

Recently, Mikolov et al. (Mikolov et al. 2013; Mikolov and Dean 2013) introduced Word2Vec, a suite of algorithms for unsupervised training of dense vector representations of …

… Training for such large vocabularies presents several challenges. Word2Vec needs to maintain two d-dimensional vectors of single-precision floating-point numbers for each vocabulary word. All vectors need to be kept in main memory to achieve acceptable training latency, which is impractical for contemporary commodity servers. As a concrete example, Ordentlich et al. (2016) describe an application in which they use word embeddings to match search queries to online ads. Their dictionary comprises ≈ 200 million composite words, which implies a main memory requirement of ≈ 800 GB for d = 500. A complementary challenge is that corpus sizes themselves keep increasing. The largest reported dataset used so far has 100 billion words (Mikolov and Dean 2013) and required training time on the order of days.
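The ≈ 800 GB figure follows directly from the sizes quoted above: two vectors per word (commonly called the input and output vectors), d = 500 single-precision (4-byte) floats per vector, and about 200 million words. The helper below only reproduces that arithmetic; its name and parameters are illustrative, not taken from the paper.

```python
def word2vec_memory_bytes(vocab_size: int, dim: int,
                          vectors_per_word: int = 2, bytes_per_float: int = 4) -> int:
    """Main-memory footprint of Word2Vec's parameter vectors, in bytes.

    Each vocabulary word keeps `vectors_per_word` vectors of `dim`
    single-precision floats (4 bytes each).
    """
    return vocab_size * vectors_per_word * dim * bytes_per_float


bytes_needed = word2vec_memory_bytes(vocab_size=200_000_000, dim=500)
print(bytes_needed / 1e9, "GB")  # decimal gigabytes
```

That is 200,000,000 × 2 × 500 × 4 = 8 × 10¹¹ bytes, i.e. 800 GB, which is why the vectors no longer fit in the memory of a single commodity server.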

Comparative Study of Caffe, Neon, Theano, and Torch

Workshop track, ICLR 2016

Comparative Study of Caffe, Neon, Theano, and Torch for Deep Learning

Soheil Bahrampour, Naveen Ramakrishnan, Lukas Schott, Mohak Shah

Bosch Research and Technology Center

ABSTRACT

Deep learning methods have resulted in significant performance improvements in several application domains and, as such, several software frameworks have been developed to facilitate their implementation. This paper presents a comparative study of four deep learning frameworks, namely Caffe, Neon, Theano, and Torch, on three aspects: extensibility, hardware utilization, and speed. The study is performed on several types of deep learning architectures, and we evaluate the performance of the above frameworks when employed on a single machine in both (multi-threaded) CPU and GPU (Nvidia Titan X) settings. The speed performance metrics used here include the gradient computation time, which is important during the training phase of deep networks, and the forward time, which is important from the deployment perspective of trained networks. For convolutional networks, we also report how each of these frameworks supports various convolutional algorithms and their corresponding performance. From our experiments, we observe that Theano and Torch are the most easily extensible frameworks. We observe that Torch is best suited for any deep architecture on CPU, followed by Theano. It also achieves the best performance on the GPU for large convolutional and fully connected networks, followed closely by Neon. Theano achieves the best performance on GPU for training and deployment of LSTM networks. Finally, Caffe is the easiest for evaluating the performance of standard deep architectures.

BUSEM at SemEval-2017 Task 4A Sentiment Analysis with Word

BUSEM at SemEval-2017 Task 4: Sentiment Analysis with Word Embedding and Long Short Term Memory RNN Approaches

Deger Ayata (1), Murat Saraclar (1), Arzucan Ozgur (2)

1 Electrical & Electronics Engineering Department, Boğaziçi University; 2 Computer Engineering Department, Boğaziçi University; Istanbul, Turkey

{deger.ayata, murat.saraclar, arzucan.ozgur}@boun.edu.tr

Abstract

This paper describes our approach for SemEval-2017 Task 4: Sentiment Analysis in Twitter. We participated in Subtask A (Message Polarity Classification) and developed two systems. The first system uses word embeddings for feature representation and Support Vector Machine, Random Forest and Naive Bayes algorithms to classify Twitter messages into negative, neutral and positive polarity. The second system is based on Long Short Term Memory Recurrent Neural Networks and uses word indexes as a sequence of inputs for feature representation.

1 Introduction

Sentiment analysis is the extraction of subjective information from source materials via natural language processing, computational linguistics, …

… uses word embeddings for feature representation and Support Vector Machine (SVM), Random Forest (RF) and Naive Bayes (NB) algorithms to classify Twitter messages into negative, neutral and positive polarity. The second system is based on Long Short Term Memory Recurrent Neural Networks (LSTM) and uses word indexes as a sequence of inputs for feature representation.

The remainder of this article is structured as follows: Section 2 describes the systems, and Section 3 explains the methods, models, tools and software packages used in this work. Test cases and datasets are explained in Section 4. Results are given in Section 5 with discussions. Finally, Section 6 summarizes the conclusions and future work.

2 System Description

We have developed two independent systems.
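The second system's input representation, word indexes as a sequence of inputs, can be sketched as follows. The excerpt does not describe the exact preprocessing, so whitespace tokenization, lowercasing, frequency-ranked indices, the reserved indices 0 (padding) and 1 (unknown word), and left-padding to a fixed length are all assumptions made for illustration; the function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence

PAD, UNK = 0, 1  # reserved indices (an assumption, common in Keras-style pipelines)


def build_vocab(texts: Sequence[str], max_words: int = 10_000) -> Dict[str, int]:
    """Map the most frequent lowercase tokens to integer indices starting at 2."""
    counts = Counter(tok for text in texts for tok in text.lower().split())
    return {word: i + 2 for i, (word, _) in enumerate(counts.most_common(max_words))}


def to_index_sequence(text: str, vocab: Dict[str, int], maxlen: int) -> List[int]:
    """Convert one message to a fixed-length list of word indices for an LSTM."""
    ids = [vocab.get(tok, UNK) for tok in text.lower().split()][:maxlen]  # truncate long messages
    return [PAD] * (maxlen - len(ids)) + ids  # left-pad short messages with PAD


if __name__ == "__main__":
    vocab = build_vocab(["good movie", "bad movie"])
    print(to_index_sequence("awful movie", vocab, maxlen=4))
```

Fixed-length sequences like these are what an embedding layer followed by an LSTM typically consumes; left-padding keeps the real tokens closest to the LSTM's final time step.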

Reinforcement Learning Data Science Africa 2018 Abuja, Nigeria (12 Nov - 16 Nov 2018)

Chika Yinka-Banjo, PhD (University of Lagos, Nigeria); Ayorkor Korsah, PhD (Ashesi University, Ghana)

Outline

- Introduction to machine learning
- Reinforcement learning definitions
- Example reinforcement learning problems
- The Markov decision process
- The optimal policy
- Value function & Q-value function
- Bellman equation
- Q-learning
- Building a simple Q-learning agent (coding)
- Recap
- Where to go from here?

Introduction to machine learning

- Artificial Intelligence (AI) is the study and design of intelligent agents.
- An intelligent agent perceives its environment through sensors and acts on it through actuators. For example, agent: a humanoid robot; environment: Earth?; sensors: camera, tactile sensor, etc.; actuators: motors, grippers, etc.
- Machine learning is a subfield of Artificial Intelligence.
- Machine learning techniques learn from data without being explicitly programmed to do so.
- Machine learning models enable the agent to learn from its own experience by extracting useful information from the feedback it receives from its environment.
- There are three types of learning feedback: supervised learning, unsupervised learning, and reinforcement learning.

[Figures: Branches of AI; Branches of machine learning]

Supervised learning

- In supervised learning, the model is trained on many labelled examples of input-output pairs, so that when presented with a novel input it can accurately estimate what the correct output should be.
- Data (x, y): x is the input data, y is the label.
- Goal: learn a function that maps x -> y.
- Examples include classification, regression, object detection, and image captioning.

[Figure: Supervised learning task in the form of classification]

Unsupervised learning

- In unsupervised learning, the model extracts useful information from unlabeled and unstructured data.
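The outline lists building a simple Q-learning agent, but the coding session itself is not part of this excerpt. The sketch below is an independent, minimal tabular Q-learning agent on a hypothetical five-cell corridor (start in cell 0, reward 1 for reaching cell 4). The environment, the ε-greedy exploration, and the values of α, γ and ε are assumptions chosen for illustration; the update line is the Q-learning form of the Bellman equation listed in the outline.

```python
import random
from typing import List, Tuple

N_STATES = 5       # cells 0..4 of a hypothetical corridor; cell 4 is the goal
ACTIONS = (0, 1)   # 0 = move left, 1 = move right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Deterministic environment: returns (next_state, reward, done)."""
    nxt = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def greedy(q_row: List[float], rng: random.Random) -> int:
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q_row)
    return rng.choice([a for a in ACTIONS if q_row[a] == best])


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, max_steps: int = 100, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q[state][action]
    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            # epsilon-greedy behaviour policy
            a = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(Q[s], rng)
            s_next, r, done = step(s, a)
            # Bellman target: r + gamma * max_a' Q(s', a'), or just r at the goal
            target = r if done else r + gamma * max(Q[s_next])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s_next
            if done:
                break
    return Q


if __name__ == "__main__":
    Q = train()
    print([max(ACTIONS, key=lambda a: Q[s][a]) for s in range(N_STATES - 1)])
```

Because the corridor is deterministic and only the goal is rewarded, the learned values for moving right should approach γ raised to the number of remaining steps (0.729, 0.81, 0.9 and 1.0 for cells 0 to 3), and the greedy policy should choose action 1 (right) in every non-goal cell.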

An Artificial Neural Networks Primer with Financial Applications Examples in Financial Distress Predictions and Foreign Exchange Hybrid Trading System

by Dr Clarence N W Tan, PhD

- Bachelor of Science in Electrical Engineering Computers (1986), University of Southern California, Los Angeles, California, USA
- Master of Science in Industrial and Systems Engineering (1989), University of Southern California, Los Angeles, California, USA
- Master of Business Administration (1989), University of Southern California, Los Angeles, California, USA
- Graduate Diploma in Applied Finance and Investment (1996), Securities Institute of Australia
- Diploma in Technical Analysis (1996), Australian Technical Analysts Association
- Doctor of Philosophy (1997), Bond University

URL: http://w3.to/ctan/

School of Information Technology, Bond University, Gold Coast, QLD 4229, Australia

Table of Contents

1. Introduction to Artificial Intelligence and Artificial Neural Networks
   1.1 Introduction
   1.2 Artificial Intelligence
   1.3 Artificial Intelligence in Finance
      1.3.1 Expert System …


Lecture 23: Recurrent Neural Network [SCS4049-02] Machine Learning and Data Science

Seongsik Park, AI Department, Dongguk University

Tentative schedule

| Week | Topic | Date (Wed / Mon) |
| --- | --- | --- |
| 1 | Machine Learning Introduction & Basic Mathematics | 09.02 / 09.07 |
| 2 | Python Practice I & Regression | 09.09 / 09.14 |
| 3 | AI Department Seminar I & Clustering I | 09.16 / 09.21 |
| 4 | Clustering II & Classification I | 09.23 / 09.28 |
| 5 | Classification II | (Chuseok holiday) / 10.05 |
| 6 | Python Practice II & Support Vector Machine I | 10.07 / 10.12 |
| 7 | Support Vector Machine II & Decision Tree and Ensemble Learning | 10.14 / 10.19 |
| 8 | Mid-Term Practice & Mid-Term Exam | 10.21 / 10.26 |
| 9 | No class & Dimensionality Reduction I | 10.28 / 11.02 |
| 10 | Dimensionality Reduction II & Neural Networks and Back Propagation I | 11.04 / 11.09 |
| 11 | Neural Networks and Back Propagation II & III | 11.11 / 11.16 |
| 12 | AI Department Seminar II & Convolutional Neural Network | 11.18 / 11.23 |
| 13 | Python Practice III & Recurrent Neural Network | 11.25 / 11.30 |
| 14 | Model Optimization & Autoencoders | 12.02 / 12.07 |
| 15 | Final Exam | (no class) / 12.14 |

Introduction of recurrent neural network (RNN)

- Rumelhart et al., Learning Internal Representations by Error Propagation (1986)
- Specialized for processing sequences such as $\{x^{(1)}, \dots, x^{(t-1)}, x^{(t)}, x^{(t+1)}, \dots, x^{(N)}\}$
- RNNs can process long sequences, including sequences of variable length
- Parameter sharing: the parameters are shared across the entire sequence
- Can a CNN not handle sequences?
  - A 1-D convolution along the time axis gives a time-delay neural network, but such a network is shallow
  - An RNN allows a deep architecture
- Data type
  - In general, $x^{(t)}$ is time-series (temporal) data
  - Here $t = 1, 2, \dots, N$ is the time step, or the position within the sequence
  - The total length $N$ varies

Recurrent Neurons

Up to now we have mostly looked at feedforward neural networks, where the activations flow only in one direction, from the input layer to the output layer (except for a few networks in Appendix E).
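The parameter-sharing and variable-length points above can be made concrete with a minimal vanilla (Elman-style) RNN forward pass. The slides do not give the update equation; this sketch assumes the common form $h^{(t)} = \tanh(W_{xh} x^{(t)} + W_{hh} h^{(t-1)} + b_h)$ with $h^{(0)} = 0$, and the function names are hypothetical. It uses plain Python lists so it runs without extra libraries.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = Sequence[Sequence[float]]


def matvec(M: Matrix, v: Sequence[float]) -> Vector:
    """Matrix-vector product for small list-based matrices."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


def rnn_forward(xs: Sequence[Sequence[float]], W_xh: Matrix, W_hh: Matrix,
                b_h: Sequence[float]) -> List[Vector]:
    """Run a vanilla RNN over a sequence of any length N; return every hidden state.

    The same W_xh, W_hh and b_h are reused at every time step t (parameter
    sharing), so the number of parameters does not depend on N.
    """
    h = [0.0] * len(b_h)  # h^(0) = 0
    hs: List[Vector] = []
    for x_t in xs:
        pre = [a + b + c for a, b, c in zip(matvec(W_xh, x_t), matvec(W_hh, h), b_h)]
        h = [math.tanh(p) for p in pre]
        hs.append(h)
    return hs
```

Calling `rnn_forward` with the same three parameter arrays on a 3-step and a 5-step sequence returns 3 and 5 hidden states respectively, which is the variable-length property the slide describes.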