Best Practices in Evaluating Transit Performance Survey

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Mammoth Summer Transit Map -2021

MAMMOTH LAKES TRANSIT SUMMER SERVICE TRANSIT MAP. TOWN TROLLEY SERVICE: Get across town with stops at Snowcreek Athletic Club, Minaret Village Shopping Center, The Village, Canyon Lodge and Juniper Springs Resort every 20 minutes. The open-air Lakes Basin Trolley leaves The Village for the Mammoth Lakes Basin every 30 minutes, stopping at most of the lakes in the Mammoth Lakes Basin and providing access to area hiking trails. Also tows a 14-bike trailer for access to cycling. TOWN TROLLEY (Snowcreek Athletic Club to The Village to Canyon Lodge; see map inside): May 28 – Jun 25, 7 am – 10 pm, every 30 minutes; Jun 26 – Sep 6, 7 am – 2 am, every 20 minutes (30 minutes after midnight); Sep 7 – Nov 19, 7 am – 10 pm, every 30 minutes. LAKES BASIN TROLLEY (The Village to Lakes Basin, with 14-bike trailer): May 28 – Jun 25, 9 am – 6 pm, weekends & holidays every 30 minutes, weekdays every 60 minutes; Jun 26 – Aug 22, 9 am – 6 pm, every 30 minutes; Aug 23 – Sep 6, 9 am – 6 pm, weekends & holiday every 30 minutes. Stop times (location, time, last bus): The Village / Stop #90, :00 and :30, 5:00; Lake Mary Marina / Stop #100, :19 and :49, 5:19; Horseshoe Lake / Stop #104, :30 and :00, 5:30; Tamarack Lodge (last bus) / Stop #95, :42 and :12, 5:42. COVID-19 REGULATIONS: Masks are required. Please do not ride the bus if you are ill. Schedules are subject to change without notice; please see estransit.com for current schedules. WELCOME ABOARD! The Mammoth Transit summer system operates from May 28 through November 19, 2021 and offers a convenient, fun and friendly alternative to getting around Mammoth Lakes. -

Circulator Bus Transit Cost

Transit CIRCULATOR BUS TRANSIT COST More Information: tti.tamu.edu/policy/how-to-fix-congestion Description: Circulator bus transit is a short-distance, circular, fixed-route transit mode that takes riders around a specific area with major destinations. It may include streetcars, rubber-tire trolleys, electric buses, or compressed natural gas buses. Two common types of circulator bus transit are downtown circulators and neighborhood circulators. A circulator bus system targeted at tourists/visitors is more likely to use vehicle colors to be clearly identifiable. Target Market: Most downtown circulators are oriented toward employee and tourist/visitor markets. Neighborhood circulators meet the mobility needs of transit-reliant populations, such as low-income and mobility-challenged people. How Will This Help? • Improves mobility and circulation in target areas. • Fosters the redevelopment of urban spaces into walkable, mixed-use, high-density environments. • Improves parking availability in areas with shortages. • Reduces environmental impacts from private/individual transportation. Implementation Issues: There are many barriers, constraints, and obstacles to successfully implement, operate, and maintain a circulator bus. However, funding is the major constraint in most programs. Inadequate funding and costs were the most common SUCCESS STORIES (DowntownDC, www.downtowndc.org) Washington, D.C.: The DC Circulator is a cooperative effort of the District of Columbia Department of Transportation, the Washington Metropolitan Area Transit Authority, DC Surface Transit, and First Transit. The DC Circulator began service in 2005 and has experienced increasing ridership each year. Philadelphia, Pennsylvania: The Independence Visitor Center Corporation manages the downtown circulator, -

Bus Schedules and Routes

City of Simi Valley Transit. City of Simi Valley Transit provides a convenient and inexpensive way to travel in and around the City. Modern air-conditioned buses transport you in comfort to most major points of interest: shopping, schools, parks, public buildings and more! To fully enjoy the benefits of using the buses, please remember: • Food and beverages are not allowed on the buses. • Please extinguish all smoking materials before boarding a bus. • Radios, tape recorders, and electronic devices must be turned off when boarding a bus. Headphones are allowed. • Drivers cannot make change. Please be prepared to pay the EXACT FARE. • For Lost & Found items, call City of Simi Valley Transit at (805) 583-6456. Items may be retrieved from the Simi Valley Police Department Monday through Friday, 8:00 a.m. to 4:00 p.m. • Bus Stops are located approximately ¼ to ½ mile apart along routes within Simi Valley. We’ve Got Connections! City of Simi Valley Transit is pleased to provide connections with other major transit systems: ADA/Dial-A-Ride: 805-583-6464; Amtrak Info: 800-872-7245; LA Metro Information: 323-466-3876; Metrolink: 800-371-5465; Moorpark City Transit: 805-517-6315; TDD Information: 800-735-2929; Thousand Oaks Transit: 805-375-5467; VCTC: 800-438-1112. Free transfers are available upon request to LA Metro and VCTC. All buses are wheelchair accessible. It is recommended that passengers call prior to starting a trip to confirm the closest bus stop location. All buses are equipped with bike racks. Fares (Full Fare): Single Trip $1.50; Unlimited Day Pass $5.00; 21-Ride Pass $25.00. -

Transit Service Design Guidelines

Transit Service Design Guidelines Department of Rail and Public Transportation November 2008 Transit Service Design Guidelines Why were these guidelines for new transit service developed? In FY2008 alone, six communities in Virginia contacted the Virginia Department of Rail and Public Transportation about starting new transit service in their community. They and many other communities throughout Virginia are interested in learning how new transit services can enhance travel choices and mobility and help to achieve other goals, such as quality of life, economic opportunity, and environmental quality. They have heard about or seen successful transit systems in other parts of the state, the nation, or the world, and wonder how similar systems might serve their communities. They need objective and understandable information about transit and whether it might be appropriate for them. These guidelines will help local governments, transit providers and citizens better understand the types of transit systems and services that are available to meet community and regional transportation needs. The guidelines also help the Virginia Department of Rail and Public Transportation (DRPT) in making recommendations to the Commonwealth Transportation Board for transit investments, by 1) providing information on the types of systems or services that are best matched to community needs and local land use decisions, and 2) ensuring that resources are used effectively to achieve local, regional, and Commonwealth goals. Who were these guidelines developed for? These guidelines are intended for three different audiences: local governments, transit providers and citizens. Therefore, some will choose to read the entire document while others may only be interested in certain sections. -

The Relationship Between Safety, Capacity, and Operating Speed on Bus Rapid Transit

THE RELATIONSHIP BETWEEN SAFETY, CAPACITY, AND OPERATING SPEED ON BUS RAPID TRANSIT NICOLAE DUDUTA, EMBARQ CLAUDIA ADRIAZOLA-STEIL, EMBARQ DARIO HIDALGO, EMBARQ LUIS ANTONIO LINDAU, EMBARQ PAULA MANOELA DOS SANTOS, EMBARQ EMAIL FOR CORRESPONDENCE: [email protected] This is an abridged version of the paper presented at the conference. The full version is being submitted elsewhere. Details on the full paper can be obtained from the author. CASE STUDY: TRANSOESTE BRT, RIO DE JANEIRO Nicolae Duduta1, Claudia Adriazola-Steil1, Dario Hidalgo1, Luis Antonio Lindau2, Paula Manoela dos Santos2 1: EMBARQ – the WRI Center for Sustainable Transport, 10 G St. NE Suite 800, Washington DC, 2: EMBARQ Brasil, Rua Luciana de Abreu, 471/801 90570-060 Porto Alegre/RS, Brazil 13th WCTR, 2013 – Rio de Janeiro, Brazil ABSTRACT There is a growing body of research on the traffic safety aspects of Bus Rapid Transit (BRT) corridors in Latin American cities. The findings suggest that some BRT design features – such as center lane configurations, left turn prohibitions, and signalized mid-block pedestrian crossings with refuge islands – can significantly improve safety on the corridors where BRTs operate. However, there is still a gap in knowledge about how the different safety features might impact the operational performance of the BRT. -

Comparison Between Bus Rapid Transit and Light-Rail Transit Systems: a Multi-Criteria Decision Analysis Approach

Urban Transport XXIII 143 COMPARISON BETWEEN BUS RAPID TRANSIT AND LIGHT-RAIL TRANSIT SYSTEMS: A MULTI-CRITERIA DECISION ANALYSIS APPROACH MARÍA EUGENIA LÓPEZ LAMBAS1, NADIA GIUFFRIDA2, MATTEO IGNACCOLO2 & GIUSEPPE INTURRI2 1TRANSyT, Transport Research Centre, Universidad Politécnica de Madrid, Spain 2Department of Civil Engineering and Architecture (DICAR), University of Catania, Italy ABSTRACT The construction choice between two different transport systems in urban areas, as in the case of Light-Rail Transit (LRT) and Bus Rapid Transit (BRT) solutions, is often performed on the basis of cost-benefit analysis and geometrical constraints due to the available space for the infrastructure. Classical economic analysis techniques are often unable to take into account some of the non-monetary parameters which have a huge impact on the final result of the choice, since they often include social acceptance and sustainability aspects. The application of Multi-Criteria Decision Analysis (MCDA) techniques can aid decision makers in the selection process, with the possibility to compare non-homogeneous criteria, both qualitative and quantitative, and allowing the generation of an objective ranking of the different alternatives. The coupling of MCDA and Geographic Information System (GIS) environments also permits an easier and faster analysis of spatial parameters, and a clearer representation of indicator comparisons. Based on these assumptions, a LRT and BRT system will be analysed according to their own transportation, economic, social and environmental impacts as a hypothetical exercise; moreover, through the use of MCDA techniques a global score for both systems will be determined, in order to allow for a fully comprehensive comparison. Keywords: BHLS, urban transport, transit systems, TOPSIS. -

The Influence of Passenger Load, Driving Cycle, Fuel Price and Different

Transportation https://doi.org/10.1007/s11116-018-9925-0 The influence of passenger load, driving cycle, fuel price and different types of buses on the cost of transport service in the BRT system in Curitiba, Brazil Dennis Dreier1 · Semida Silveira1 · Dilip Khatiwada1 · Keiko V. O. Fonseca2 · Rafael Nieweglowski3 · Renan Schepanski3 © The Author(s) 2018 Abstract This study analyses the influence of passenger load, driving cycle, fuel price and four different types of buses on the cost of transport service for one bus rapid transit (BRT) route in Curitiba, Brazil. First, the energy use is estimated for different passenger loads and driving cycles for a conventional bi-articulated bus (ConvBi), a hybrid-electric two-axle bus (HybTw), a hybrid-electric articulated bus (HybAr) and a plug-in hybrid-electric two-axle bus (PlugTw). Then, the fuel cost and uncertainty are estimated considering the fuel price trends in the past. Based on this and additional cost data, replacement scenarios for the currently operated ConvBi fleet are determined using a techno-economic optimisation model. The lowest fuel cost ranges for the passenger load are estimated for PlugTw amounting to (0.198–0.289) USD/km, followed by (0.255–0.315) USD/km for HybTw, (0.298–0.375) USD/km for HybAr and (0.552–0.809) USD/km for ConvBi. In contrast, the coefficient of variation (Cv) of the combined standard uncertainty is the highest for PlugTw (Cv: 15–17%) due to stronger sensitivity to varying bus driver behaviour, whereas it is the least for ConvBi (Cv: 8%). -

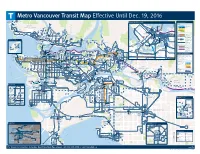

For Transit Information, Including Real-Time Next Bus, Please Call 604.953.3333 Or Visit Translink.Ca

Metro Vancouver Transit Map, Effective Until Dec. 19, 2016. [Map residue; recoverable legend only.] SkyTrain: high frequency rail service, early morning to late evening (Canada Line, Expo Line, Millennium Line, Millennium Line Evergreen Extension). Buses: B-Line, frequent bus service, with limited stops; Regular Bus Service, service at least once an hour during the daytime (or -

Skopje Tram-Bus Project

Skopje Tram-Bus Project Non-Technical Summary July 2020 Table of Contents 1. Background: Introduction; Overview of the Project; Project Timeline and Stages. 2. Key Environmental, Health & Safety and Social Findings: Overview; Project Benefits and Impacts; Project Benefits; Project Impacts and Risks. 3. How will Stakeholders be Engaged in the Project?: What is the Stakeholder Engagement Plan?; Who are the Key Stakeholders? -

Bi-Articulated

Bi-articulated Bus AGG 300 OMSI 2 Bi-articulated bus AGG 300 Manual Developed by: Darius Bode Manual: Darius Bode, Aerosoft Copyright: © 2016 / Aerosoft GmbH Airport Paderborn/Lippstadt D-33142 Bueren, Germany Tel: +49 (0) 29 55 / 76 03-10 Fax: +49 (0) 29 55 / 76 03-33 E-Mail: [email protected] Internet: www.aerosoft.de www.aerosoft.com All trademarks and brand names are trademarks or registered trademarks of their respective owners. All rights reserved. Add-on for OMSI 2 - The Omnibus simulator Contents Introduction: Bi-articulated AGG 300 and city bus A 330. Vehicle operation: Dashboard; Window console; Control lights; Main information display; Ticket printer; Door controls; Stop display; Level control; Lights, energy-save and schoolbus function; Air conditioning -

Merced County Board of Supervisors Summary

MERCED COUNTY BOARD OF SUPERVISORS SUMMARY ACTION MINUTES Regular Meeting TUESDAY, MAY 16, 2000 Closed Session – 9:00 a.m. Regular Meeting – 10:00 a.m. MERCED COUNTY ADMINISTRATION BUILDING, 2222 “M” STREET, BOARD ROOM, THIRD FLOOR, MERCED, CALIFORNIA 95340, (209) 385-7366. GLORIA CORTEZ KEENE, DISTRICT 1; KATHLEEN M. CROOKHAM, DISTRICT 2; JOE RIVERO, DISTRICT 3; DEIDRE F. KELSEY, DISTRICT 4; CHAIRMAN JERRY O’BANION, DISTRICT 5. Lydia A. Beiswanger, Chief Clerk; GREGORY B. WELLMAN, COUNTY ADMINISTRATIVE OFFICER; DENNIS MYERS, COUNTY COUNSEL. All supporting documentation is available for public review in the Office of the Clerk of the Board of Supervisors located in the County Administration Building, Third Floor, 2222 “M” Street, Merced, California, 95340, during regular business hours, 8:00 a.m. – 5:00 p.m., Monday through Friday. (209) 385-7366 SCHEDULED ITEMS 9:00 A.M. CLOSED SESSION - CONFERENCE WITH LABOR NEGOTIATOR: It is the intention of the Board to meet in Closed Session pursuant to Section 54957.8 concerning a conference with County of Merced negotiators, Gregory Wellman-County Administrator and Bev Morse-Assistant County Administrator concerning negotiations with American Federation of State, County and Municipal Employees (AFSCME), Units 4, 5, 6, and 8. CLOSED SESSION: It is the intention of the Board to meet in Closed Session pursuant to Government Code Section 54957, Subdivision (e): PUBLIC EMPLOYEE PERFORMANCE EVALUATION Title: Human Services Agency Director. CLOSED SESSION - CONFERENCE WITH LEGAL COUNSEL – ANTICIPATED LITIGATION: It is the intention of the Board to meet in Closed Session concerning significant exposure to litigation pursuant to subdivision (b) of Section 54956.9: One Case. RECONVENED AT 10:00 A.M. -

Regular Meeting of the Technical Review Board

Regular Meeting of the Technical Review Board: Merced County Association of Governments; Transit Joint Powers Authority for Merced County; Merced County Regional Waste Management Authority. LOCATION: Meeting will be held at City of Gustine Council Chambers, 352 Fifth Street, Gustine, CA 95332. DATE: Wednesday, June 9, 2021. TIME: 8:30 A.M. Technical Review Board Members: Stephanie Dietz, Chair, City Manager of Merced; Jim Brown, CEO of County of Merced; Doug Dunford, City Manager of Gustine; Darrell Fonseca, City Manager of Dos Palos; Jose Antonio Ramirez, City Manager of Livingston; Gary Brizzee, Acting City Manager of Los Banos; Lori Waterman, City Manager of Atwater. Welcome to the Technical Review Board Meeting! AGENDA: At least 72 hours prior to each regular Technical Review Board convening, a complete agenda packet is available for review on the MCAG website at www.mcagov.org. All public records relating to an open session item and copies of staff reports or other written documentation relating to items of business referred to on the agenda are on file at MCAG. Persons with questions concerning agenda items may call MCAG to make an inquiry regarding the nature of items described on the agenda. INTERPRETING SERVICES: Interpreting Services are not provided at Technical Review Board (TRB) meetings unless requested at least three (3) business days in advance. Please contact Eva Garibay at (209) 723-3153 x 108 during regular business hours to request interpreting services. Servicios de interpreté no son ofrecidos en las juntas de Technical Review Board (TRB) de MCAG al menos que se soliciten tres (3) días de negoción antes de la junta.