Multi-Language Software Metrics

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Command Line Interface

Command Line Interface Squore 21.0.2 Last updated 2021-08-19 Table of Contents Preface. 1 Foreword. 1 Licence. 1 Warranty . 1 Responsabilities . 2 Contacting Vector Informatik GmbH Product Support. 2 Getting the Latest Version of this Manual . 2 1. Introduction . 3 2. Installing Squore Agent . 4 Prerequisites . 4 Download . 4 Upgrade . 4 Uninstall . 5 3. Using Squore Agent . 6 Command Line Structure . 6 Command Line Reference . 6 Squore Agent Options. 6 Project Build Parameters . 7 Exit Codes. 13 4. Managing Credentials . 14 Saving Credentials . 14 Encrypting Credentials . 15 Migrating Old Credentials Format . 16 5. Advanced Configuration . 17 Defining Server Dependencies . 17 Adding config.xml File . 17 Using Java System Properties. 18 Setting up HTTPS . 18 Appendix A: Repository Connectors . 19 ClearCase . 19 CVS . 19 Folder Path . 20 Folder (use GNATHub). 21 Git. 21 Perforce . 23 PTC Integrity . 25 SVN . 26 Synergy. 28 TFS . 30 Zip Upload . 32 Using Multiple Nodes . 32 Appendix B: Data Providers . 34 AntiC . 34 Automotive Coverage Import . 34 Automotive Tag Import. 35 Axivion. 35 BullseyeCoverage Code Coverage Analyzer. 36 CANoe. 36 Cantata . 38 CheckStyle. .. -

Avatud Lähtekoodiga Vahendite Kohandamine Microsoft Visual C++ Tarkvaralahenduste Kvaliteedi Analüüsiks Sonarqube Serveris

TALLINNA TEHNIKAÜLIKOOL Infotehnoloogia teaduskond Tarkvarateaduse instituut Anton Ašot Roolaid 980774IAPB AVATUD LÄHTEKOODIGA VAHENDITE KOHANDAMINE MICROSOFT VISUAL C++ TARKVARALAHENDUSTE KVALITEEDI ANALÜÜSIKS SONARQUBE SERVERIS Bakalaureusetöö Juhendaja: Juhan-Peep Ernits PhD Tallinn 2019 Autorideklaratsioon Kinnitan, et olen koostanud antud lõputöö iseseisvalt ning seda ei ole kellegi teise poolt varem kaitsmisele esitatud. Kõik töö koostamisel kasutatud teiste autorite tööd, olulised seisukohad, kirjandusallikatest ja mujalt pärinevad andmed on töös viidatud. Autor: Anton Ašot Roolaid 21.05.2019 2 Annotatsioon Käesolevas bakalaureusetöös uuritakse, kuidas on võimalik saavutada suure hulga C++ lähtekoodi kvaliteedi paranemist, kui ettevõttes kasutatakse arenduseks Microsoft Visual Studiot ning koodikaetuse ja staatilise analüüsi ülevaate saamiseks SonarQube serverit (Community Edition). Seejuures SonarSource'i poolt pakutava tasulise SonarCFamily for C/C++ analüsaatori (mille eelduseks on SonarQube serveri Developer Edition) asemel kasutatakse tasuta ja vaba alternatiivi: SonarQube C++ Community pluginat. Analüüsivahenditena eelistatakse avatud lähtekoodiga vabu tarkvaravahendeid. Valituks osutuvad koodi kaetuse analüüsi utiliit OpenCppCoverage ja staatilise analüüsi utiliit Cppcheck. Siiski selgub, et nende utiliitide töö korraldamiseks ja väljundi sobitamiseks SonarQube Scanneri vajadustega tuleb kirjutada paar skripti: üks PowerShellis ja teine Windowsi pakkfailina. Regulaarselt ajastatud analüüside käivitamist tagab QuickBuild, -

Write Your Own Rules and Enforce Them Continuously

Ultimate Architecture Enforcement Write Your Own Rules and Enforce Them Continuously SATURN May 2017 Paulo Merson Brazilian Federal Court of Accounts Agenda Architecture conformance Custom checks lab Sonarqube Custom checks at TCU Lessons learned 2 Exercise 0 – setup Open www.dontpad.com/saturn17 Follow the steps for “Exercise 0” Pre-requisites for all exercises: • JDK 1.7+ • Java IDE of your choice • maven 3 Consequences of lack of conformance Lower maintainability, mainly because of undesired dependencies • Code becomes brittle, hard to understand and change Possible negative effect • on reliability, portability, performance, interoperability, security, and other qualities • caused by deviation from design decisions that addressed these quality requirements 4 Factors that influence architecture conformance How effective the architecture documentation is Turnover among developers Haste to fix bugs or implement features Size of the system Distributed teams (outsourcing, offshoring) Accountability for violating design constraints 5 How to avoid code and architecture disparity? 1) Communicate the architecture to developers • Create multiple views • Structural diagrams + behavior diagrams • Capture rationale Not the focus of this tutorial 6 How to avoid code and architecture disparity? 2) Automate architecture conformance analysis • Often done with static analysis tools 7 Built-in checks and custom checks Static analysis tools come with many built-in checks • They are useful to spot bugs and improve your overall code quality • But they’re -

Ics-Fd-Qa Project • Objective • Present Scope • QA Calling Sequence • Present Status & Tools • Integration Into Projects & Kobe • Some Issues • Conclusion

ics-fd-qa project • Objective • Present scope • QA calling sequence • Present status & tools • Integration into projects & kobe • Some issues • Conclusion • Your input, discussion Objective • Provide integrated code quality assessment • Standard analysis method, but ignore coding styles a.f.a.p. • High quality code scans, try to find “fishy” pieces automatically • Report only false-true, keep noise down • Graphical output with sonarqube, fancy, motivating • Support and encourage easy documentation writing (sphinx, …) • Provide clear code fix proposals if available • Provide q.a. history • Have minimal impact on projects • Existing build chains are sacred • Be able to digest lots of legacy code and give orientation for “hot spots” Present Scope • ICS-FD section internal • C/C++ code, no java, no control+ • Cmake build chain ( qmake/Qt/bear come later ) • Static code analysis: clang-tidy, cppcheck (more tools can be easily added, but clang-tidy is the upshot) • Push results to tmp-sonarqube, will have own fd instance later (dixit ics- in) • Integrated with docker, gitlab-CI and uses a bit of nexus • Therefore is kobe-compatible , see later • Can update existing sphinx-doc “almost for free”: tools are included in the docker image QA Sonarqube Ics-fd-qa (gitlab, nexus) Ics-fd qa webpage Sonar-scanner transmission Docker: clang 7.0.1 Calling • Code issues Python 3, Sphinx, • Proposed corrections Doxygen, Breathe, sequence • Statistics Trigger with payload Sonar-scanner, break, • Graphs • Artifact URL cmake 3, … • Project name stages: Push new sources • Control flags 1. Fetch sources URL & unpack 2. analysis: clang-tidy, cppcheck, aux results, output & logs artifacts C/C++ 3. Push to sonarqube Trigger project using sonar-scanner Pipeline Build: ADDED stage: • gitlab • all sources • Pull qa scripts from nexus • cmake are available • Analyze json-db for full • Produce a source dependencies build • Pack sources into artifact Nexus command (URL) Temp json-db • Trigger with payload repo QA calling sequence • works mostly blind. -

A Graduate Certificate

ADUS Technologies s.r.o. Fraňa Kráľa 2049 058 01 Poprad, Slovakia T: +421 (0) 949 407 310 | E: [email protected] www.adus-technologies.com Mykhailo Riabets [email protected] EDUCATION 2005 to The Torun secondary school (engineering) 2012 A graduate certificate 2015 to Uzhgorod National University – Faculty of Information Technologies 2019 Bachelor PROFESSIONAL EXPERIENCE 12/2018 to ADUS Technologies s.r.o., Poprad, Slovakia present ELVIS – a software product for a Swiss Customer that allows you to create powerful online applications, design services and systems for any kind of business. Utilized technologies: TypeScript, Angular 11, Sass, Git, IntelliJ IDEA, Sonarqube, JWT authentication, Rest Api, YouTrack Denta5 – an administrative system for managing clients and their orders, which allows clients to create orders containing a large number of attachments such as dental scans and dental aids. Utilized technologies: TypeScript, Angular 11, Sass, Git, IntelliJ IDEA, YouTrack Treves – internal system for organizing and synchronizing work in the company, planning the production of products and reporting on the products produced. Utilized technologies: TypeScript, Angular 11, Sass, Git, IntelliJ IDEA, YouTrack Reactoo Node.js backend – Node.js platfrom developer for UK Customer specializing in live streaming. Custom solution based on AWS Lambdas and heavy use of customized JavaScript solutions o Successful refactoring of code to use CI and Lambda layers o Backend and front-end tasks node.js + React Utilized technologies: JavaScript, AWS Lamda, Lambda Layers, ffmpeg Utimate solution – React & Angular Developer Developing internal platfrom system for corporate purposes Utilized technologies: TypeScript, Angular9, NgRx, Git, IntelliJ IDEA, React. Angular Common – Angular Developer It is an internal core library based on Angular 9 and Prime NG UI components. -

Canarys Automations Private Limited

Canarys Automations Private Limited Sheshadri Srinivas [email protected] https://www.ecanarys.com About Canarys Over 25+ years in the industry, having offices in Bangalore, India and USA, Canarys is a comprehensive solution provider specializing in DevOps, Mobile Apps, MS Dynamics, TFS Consulting, Staffing and Application Development across a broad spectrum of domains. About Canarys Canarys is a DevOps and Azure (Cloud Platform) Gold Partner. Canarys is also Azure CSP-Cloud Solutions Provider. Canarys has been providing ALM and DevOps Consulting services for Professional services for Consulting services consulting services in Microsoft customers in Microsoft Corp-USA through MCS from 2+ India from last 10+ years APAC region from last 3 from 3+ years years on Microsoft TFS, VSTS, years on TFS, VSTS and Azure and Xamarin Azure Canarys was alsoawarded as Our Services DevOps-VSTS Microsoft Mobile MS Azure Software Staffing Consulting Dynamics Solutions Solutions Services Solutions Canarys has adopted best practices and optimized processes to improve overall service delivery to its customers. Our business model has been refined by the experience gained, partnering with leading organizations for end-to-end solutions. Business Region USA INDIA EUROPE Asia Pacific VSTS/TFS DevOps Consulting Version DevOps/ALM 02 Upgrades 03 01 Migration Best Practises Release Process Deployment & 04 Automation 05 Tech Support VSTS/TFS DevOps Consulting Assess the customer about their current DevOps methodologies, release cycles, goals and understand their pain points Propose Microsoft DevOps solution using existing tooling and Microsoft Platform Evangelize the customer about Microsoft DevOps tools through a workshop or an event and demonstrate the features and usage Migration of TFS work loads to Visual Studio Team Services (VSTS). -

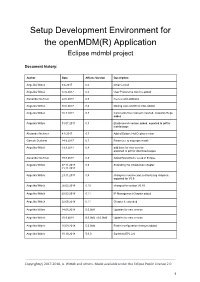

Setup Development Environment for the Openmdm(R) Application Eclipse Mdmbl Project

Setup Development Environment for the openMDM(R) Application Eclipse mdmbl project Document history: Author Date Affects Version Description Angelika Wittek 9.6.2017 0.6 initial version Angelika Wittek 12.6.2017 0.6 User Preference Service added Alexander Nehmer 22.6.2017 0.6 Review and additions Angelika Wittek 30.6.2017 0.6 Mailing Lists and ECA infos added Angelika Wittek 18.7.2017 0.7 Comments from Ganesh inserted, Glassfish Bugs added Angelika Wittek 31.07.2017 0.7 Elasticsearch version added, exported to pdf for mdmbl page Alexander Nehmer 4.8.2017 0.7 Added Eclipse Hot Deploy section Ganesh Deshvini 14.8.2017 0.7 Parameter to skip npm install Angelika Wittek 13.9.2017 0.8 additions for new version exported to pdf for download pages Alexander Nehmer 19.9.2017 0.8 Added SonarQube setup in Eclipse Angelika Wittek 07.11.2017 0.9 Extending the introduction chapter 21.11.2017 Angelika Wittek 23.11.2017 0.9 changes in service.xml, restructuring chapters, exported for V0.9 Angelika Wittek 26.02.2018 0.10 changes for version V0.10 Angelika Wittek 20.03.2018 0.11 IP Management Chapter added Angelika Wittek 02.05.2018 0.11 Chapter 6 extended Angelika Wittek 14.05.2018 5.0.0M1 Updates for new version Angelika Wittek 10.9.2018 5.0.0M2 / 5.0.0M3 Updates for new version Angelika Wittek 25.09.2018 5.0.0M4 Realm configuration changes added. Angelika Wittek 10.10.2018 5.0.0 Switch to EPL 2.0 Copyright(c) 2017-2018, A. -

Efficient Feature Selection for Static Analysis Vulnerability Prediction

sensors Article Efficient Feature Selection for Static Analysis Vulnerability Prediction Katarzyna Filus 1 , Paweł Boryszko 1 , Joanna Doma ´nska 1,* , Miltiadis Siavvas 2 and Erol Gelenbe 1 1 Institute of Theoretical and Applied Informatics, Polish Academy of Sciences, Baltycka 5, 44-100 Gliwice, Poland; kfi[email protected] (K.F.); [email protected] (P.B.); [email protected] (E.G.) 2 Information Technologies Institute, Centre for Research & Technology Hellas, 6th km Harilaou-Thermi, 57001 Thessaloniki, Greece; [email protected] * Correspondence: [email protected] Abstract: Common software vulnerabilities can result in severe security breaches, financial losses, and reputation deterioration and require research effort to improve software security. The acceleration of the software production cycle, limited testing resources, and the lack of security expertise among programmers require the identification of efficient software vulnerability predictors to highlight the system components on which testing should be focused. Although static code analyzers are often used to improve software quality together with machine learning and data mining for software vulnerability prediction, the work regarding the selection and evaluation of different types of relevant vulnerability features is still limited. Thus, in this paper, we examine features generated by SonarQube and CCCC tools, to identify those that can be used for software vulnerability prediction. We investigate the suitability of thirty-three different features to train thirteen distinct machine learning algorithms to design vulnerability predictors and identify the most relevant features that should be used for training. Our evaluation is based on a comprehensive feature selection process based on the correlation analysis of the features, together with four well-known feature selection techniques. -

How Developers Engage with Static Analysis Tools in Different Contexts

Noname manuscript No. (will be inserted by the editor) How Developers Engage with Static Analysis Tools in Different Contexts Carmine Vassallo · Sebastiano Panichella · Fabio Palomba · Sebastian Proksch · Harald C. Gall · Andy Zaidman This is an author-generated version. The final publication will be available at Springer. Abstract Automatic static analysis tools (ASATs) are instruments that sup- port code quality assessment by automatically detecting defects and design issues. Despite their popularity, they are characterized by (i) a high false positive rate and (ii) the low comprehensibility of the generated warnings. However, no prior studies have investigated the usage of ASATs in different development contexts (e.g., code reviews, regular development), nor how open source projects integrate ASATs into their workflows. These perspectives are paramount to improve the prioritization of the identified warnings. To shed light on the actual ASATs usage practices, in this paper we first survey 56 developers (66% from industry and 34% from open source projects) and inter- view 11 industrial experts leveraging ASATs in their workflow with the aim of understanding how they use ASATs in different contexts. Furthermore, to in- vestigate how ASATs are being used in the workflows of open source projects, we manually inspect the contribution guidelines of 176 open-source systems and extract the ASATs' configuration and build files from their corresponding GitHub repositories. Our study highlights that (i) 71% of developers do pay attention to different warning categories depending on the development con- text; (ii) 63% of our respondents rely on specific factors (e.g., team policies and composition) when prioritizing warnings to fix during their programming; and (iii) 66% of the projects define how to use specific ASATs, but only 37% enforce their usage for new contributions. -

Bachelor Degree Project Do Software Code Smell Checkers Smell Themselves?

Bachelor Degree Project Do Software Code Smell Checkers Smell Themselves? A Self Reflection Author: Amelie Löwe Author: Stefanos Bampovits Supervisor: Francis Palma Semester: VT/HT 2020 Subject: Computer Science Abstract Code smells are defined as poor implementation and coding practices, and as a result decrease the overall quality of a source code. A number of code smell detection tools are available to automatically detect poor implementation choices, i.e., code smells. The detection of code smells is essential in order to improve the quality of the source code. This report aims to evaluate the accuracy and quality of seven different open-source code smell detection tools, with the purpose of establishing their level of trustworthiness. To assess the trustworthiness of a tool, we utilize a controlled experiment in which several versions of each tool are scrutinized using the most recent version of the same tool. In particular, we wanted to verify to what extent the code smell detection tools that reveal code smells in other systems, contain smells themselves. We further study the evolution of code smells in the tools in terms of number, types of code smells and code smell density. Keywords: Code smells, Automatic detection, Code smell density, Refactoring, Best practices. Preface We would like to thank our supervisor Francis Palma for the continuous support, helpful advice and valuable guidance throughout this bachelor thesis process. Furthermore, we would also like to acknowledge all of the cups of coffee we consumed in the past couple -

Setting up Your Development Environment ONAP Developer Set-Up

Setting Up Your Development Environment ONAP Developer Set-Up Follow the steps below to set up the development environment on your client machine, and to establish credentials with which you may access the repositories. ONAP Developer Set-Up Create a Linux Foundation Account Basic Setup Git Java Maven Proxy settings for Maven (if required) OS Specific Instructions MAC/OSX (in review under 10.15.7) Redhat/CentOS (in review under RHEL 8.3) Ubuntu (in review under 20.04 ) SSH Connection (Recommended) HTTPS Connection Proxy setting for IntelliJ and Git (if required) IDEs Eclipse Install Eclipse ONAP Eclipse Java Formatter Install useful plugins for Eclipse Set up Sonar towards ONAP for Eclipse Setting up the ONAP Checkstyle for Eclipse Spread blueprint to other projects for Eclipse Configure ONAP copyright for Eclipse IntelliJ Download & Install Configure ONAP code CheckStyle Plugin for IntelliJ Configure ONAP code style auto formatting for IntelliJ (using the same checkstyle rules and automating it for you ) Configure ONAP copyright for IntelliJ Set up SonarLint towards ONAP for IntelliJ Optional Tools & Utilities Python git-review (optional) Node-JS Local SonarQube Setup Other Optional Tools Troubleshooting & Know Issues Windows Limitations Hack oparent to fix "curly bracket" issue Appendix Create a Linux Foundation Account Follow the instructions on the identity portal to create a Linux Foundation account and get access to the ONAP Gerrit instance. Verify that you can log in at https://gerrit.onap.org/ and that you can see the ONAP list of repositories. Basic Setup Git Install git for your OS in accordance with https://www.atlassian.com/git/tutorials/install-git Since this is common for all OS, we will also use it to generate our SSH keys as well : ssh-keygen This should generate a private and public ssh key. -

Dr. Neil Ernst Department of Computer Science University of Victoria

Technical Debt in Practice Dr. Neil Ernst Department of Computer Science University of Victoria [email protected] 1 2 Technical Debt Research angle: Identify and understand when, and why, we take short-cuts in our engineering approach to software. Practical angle: unpaid technical debt generates interest: increased defect counts, low quality (e.g. latency) slow releases. However: TD is everywhere and incurring debt is not always bad! source: https://erikbern.com/2016/12/05/the-half-life-of-code.html 3 Software Will Not Go Away Linux Kernel, additions by year 4 5 Software enters the Moneyball era Moneyball: identify the key attributes in winning games, measure players against those attributes, manage teams to maximize those attributes On Base + Slugging Wins Above Replacement Software analytics: identify key attributes in delivering software, measure delivery against those attributes, manage teams to maximize those attributes Mean time to repair Cycle time (feature idea to customer) Technical Debt 6 Technical Debt in Practice What It Is Why It Matters Identifying TD Managing TD Avoiding TD 7 “Technical debt occurs when a design or construction approach is taken that's expedient in the short term, but that creates a technical context that increases complexity and cost in the long term.” Steve McConnell (Code Complete) 8 “Shipping first time code is like going into debt. A little debt speeds development so long as it is paid back promptly with a rewrite... The danger occurs when the debt is not repaid. Every minute spent on not-quite-right