Developments in Parrondo's Paradox (Derek Abbott)

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

-

Quantum Aspects of Life / Editors, Derek Abbott, Paul C.W

Quantum Aspects of Life. Foreword by Sir Roger Penrose. Editors: Derek Abbott (University of Adelaide, Australia), Paul C. W. Davies (Arizona State University, USA), Arun K. Pati (Institute of Physics, Orissa, India). Imperial College Press (ICP). Published by Imperial College Press, 57 Shelton Street, Covent Garden, London WC2H 9HE. Distributed by World Scientific Publishing Co. Pte. Ltd., 5 Toh Tuck Link, Singapore 596224. USA office: 27 Warren Street, Suite 401-402, Hackensack, NJ 07601. UK office: 57 Shelton Street, Covent Garden, London WC2H 9HE. Library of Congress Cataloging-in-Publication Data: Quantum aspects of life / editors, Derek Abbott, Paul C.W. Davies, Arun K. Pati ; foreword by Sir Roger Penrose. p. ; cm. Includes bibliographical references and index. ISBN-13: 978-1-84816-253-2 (hardcover : alk. paper) ISBN-10: 1-84816-253-7 (hardcover : alk. paper) ISBN-13: 978-1-84816-267-9 (pbk. : alk. paper) ISBN-10: 1-84816-267-7 (pbk. : alk. paper) 1. Quantum biochemistry. I. Abbott, Derek, 1960– II. Davies, P. C. W. III. Pati, Arun K. [DNLM: 1. Biogenesis. 2. Quantum Theory. 3. Evolution, Molecular. QH 325 Q15 2008] QP517.Q34.Q36 2008 576.8'3--dc22 2008029345 British Library Cataloguing-in-Publication Data: A catalogue record for this book is available from the British Library. Photo credit: Abigail P. Abbott for the photo on cover and title page. Copyright © 2008 by Imperial College Press. All rights reserved. This book, or parts thereof, may not be reproduced in any form or by any means, electronic or mechanical, including photocopying, recording or any information storage and retrieval system now known or to be invented, without written permission from the Publisher. -

Sex Offender Registration, Monitoring and Risk Management

PROFESSOR DEREK ABBOTT. T-rays to the rescue! Professor Derek Abbott describes the fascinating and largely untapped potential of T-rays, discussing why this exciting electromagnetic radiation has been little exploited and sharing his ambitions for novel future applications. Can you open with a short introduction to terahertz radiation? Terahertz (or T-ray) radiation is considered to be a part of the spectrum between 0.1 and 10 terahertz (THz). It’s sandwiched between the world of microwaves and that of infrared. Traditionally, this part of the spectrum was called the submillimetre wave region and was used for passive detection by astronomers. However, active in-lab experiments did not take off until the mid-1990s, as it was then that efficient techniques for THz generation were developed using femtosecond lasers. How has the ability to produce T-rays in the laboratory been advanced? Microwaves are generated by using high-speed oscillating devices, while infrared is generated thermally or by other light sources. Infrared sources become very dim as we approach the THz region and, to this day, high-speed electronic devices struggle to generate waves much above 500 gigahertz. However, recent advances in femtosecond laser technology have facilitated the convenient generation of short bursts of THz radiation, thus opening up a new [...] whole host of metamaterial structures for manipulating THz radiation. Could you explain the ways in which T-rays are being explored for use in disease identification? In the field, THz radiation is being used to non-invasively detect diseases, from the plant kingdom through to humans. For example, in the timber industry there has been interest in detecting nematode disease in pinewood. In humans, THz has been used to detect the -

Jaruwongrungsee, Kata; Tuantranont, Adisorn; Fumeaux, Christophe

ACCEPTED VERSION Withayachumnankul, Withawat; Jaruwongrungsee, Kata; Tuantranont, Adisorn; Fumeaux, Christophe; Abbott, Derek Metamaterial-based microfluidic sensor for dielectric characterization Sensors and Actuators A-Physical, 2013; 189:233-237 © 2012 Elsevier B.V. All rights reserved. NOTICE: this is the author’s version of a work that was accepted for publication in Sensors and Actuators A-Physical. Changes resulting from the publishing process, such as peer review, editing, corrections, structural formatting, and other quality control mechanisms may not be reflected in this document. Changes may have been made to this work since it was submitted for publication. A definitive version was subsequently published in Sensors and Actuators A-Physical, 2013; 189:233-237. DOI: 10.1016/j.sna.2012.10.027 PERMISSIONS http://www.elsevier.com/journal-authors/policies/open-access-policies/article-posting-policy#accepted-author-manuscript Elsevier's AAM Policy: Authors retain the right to use the accepted author manuscript for personal use, internal institutional use and for permitted scholarly posting provided that these are not for purposes of commercial use or systematic distribution. Elsevier believes that individual authors should be able to distribute their AAMs for their personal voluntary needs and interests, e.g. posting to their websites or their institution’s repository, e-mailing to colleagues. However, our policies differ regarding the systematic aggregation or distribution of AAMs to ensure the sustainability of the journals to which AAMs are submitted. Therefore, deposit in, or posting to, subject-oriented or centralized repositories (such as PubMed Central), or institutional repositories with systematic posting mandates is permitted only under specific agreements between Elsevier and the repository, agency or institution, and only consistent with the publisher’s policies concerning such repositories. -

Too Good to Be True: When Overwhelming Evidence Fails to Convince

Too good to be true: when overwhelming evidence fails to convince. Lachlan J. Gunn,1 François Chapeau-Blondeau,2 Mark D. McDonnell,3 Bruce R. Davis,1 Andrew Allison,1 and Derek Abbott1. 1School of Electrical and Electronic Engineering, The University of Adelaide 5005, Adelaide, Australia. 2Laboratoire Angevin de Recherche en Ingénierie des Systèmes (LARIS), University of Angers, 62 avenue Notre Dame du Lac, 49000 Angers, France. 3School of Information Technology and Mathematical Sciences, University of South Australia, Mawson Lakes SA, 5095, Australia. Is it possible for a large sequence of measurements or observations, which support a hypothesis, to counterintuitively decrease our confidence? Can unanimous support be too good to be true? The assumption of independence is often made in good faith, however rarely is consideration given to whether a systemic failure has occurred. Taking this into account can cause certainty in a hypothesis to decrease as the evidence for it becomes apparently stronger. We perform a probabilistic Bayesian analysis of this effect with examples based on (i) archaeological evidence, (ii) weighing of legal evidence, and (iii) cryptographic primality testing. We find that even with surprisingly low systemic failure rates high confidence is very difficult to achieve and in particular we find that certain analyses of cryptographically-important numerical tests are highly optimistic, underestimating their false-negative rate by as much as a factor of 280. INTRODUCTION In a number of branches of science, it is now well-known that deleterious effects can conspire to produce a benefit or desired positive outcome. [...] when they can, surprisingly, disimprove confidence in the final outcome. We choose the striking example that increasing confirmatory identifications in a police line-up or identity parade can, under certain conditions, reduce -

Plenary Debate: Quantum Effects in Biology: Trivial Or Not?

Fluctuation and Noise Letters, Vol. 8, No. 1 (2008) C5–C26 © World Scientific Publishing Company. PLENARY DEBATE: QUANTUM EFFECTS IN BIOLOGY: TRIVIAL OR NOT? DEREK ABBOTT, Centre for Biomedical Engineering (CBME) and Department of Electrical & Electronic Engineering, The University of Adelaide, SA 5005, Australia; JULIO GEA-BANACLOCHE, Department of Physics, University of Arkansas, Fayetteville, AR 72701, USA; PAUL C. W. DAVIES, Beyond: Center for Fundamental Concepts in Science, Arizona State University, P.O. Box 876505, Tempe, AZ 85287-6505, USA; STUART HAMEROFF, Department of Anesthesiology and Psychology, Center for Consciousness Studies, University of Arizona, Tucson, AZ 85721, USA; ANTON ZEILINGER, Institut für Experimentalphysik, Universität Innsbruck, Technikerstrasse 25, 6020 Innsbruck, Austria; JENS EISERT, QOLS, Blackett Laboratory, Imperial College London, Prince Consort Road, London SW7 2BW, United Kingdom and Institute for Mathematical Sciences, Imperial College London, Prince's Gate, London SW7 2PE, United Kingdom; HOWARD WISEMAN, Centre for Quantum Computer Technology, Centre for Quantum Dynamics, Griffith University, Brisbane, Qld. 4111, Australia; SERGEY M. BEZRUKOV, Laboratory of Physical and Structural Biology, NICHD, National Institutes of Health, Bethesda, MD 20892-0924, USA; HANS FRAUENFELDER, Theory Division T10, Los Alamos National Laboratory, Los Alamos, New Mexico 87545, USA. Received 20 December 2007. Accepted 2 January 2008. Communicated by Derek Abbott. 6:00pm, Friday, May 28th, 2004, Costa Meloneras Hotel, Canary Islands, Spain. Second International Symposium on Fluctuations and Noise (FaN'04). -

Keeping the Energy Debate Clean: How Do

CONTRIBUTED PAPER Keeping the Energy Debate Clean: How Do We Supply the World’s Energy Needs? Proposed solutions include: Sensible energy conservation; Solar thermal collection using parabolic reflectors; Hydrogen used as an energy carrier in combustion engines and for energy storage and transportation. By Derek Abbott, Fellow IEEE. ABSTRACT | We take a fresh look at the major nonrenewable and renewable energy sources and examine their long-term viability, scalability, and the sustainability of the resources that they use. We achieve this by asking what would happen if each energy source was a single supply of power for the world, as a gedanken experiment. From this perspective, a solar hydrogen economy emerges as a dominant solution to the world’s energy needs. If we globally tap sunlight over only 1% of the incident area at only an energy conversion efficiency of 1%, it is simple to show that this meets our current world energy consumption. As 9% of the planet surface area is taken up by desert and efficiencies well over 1% are possible, in practice, this opens up many exciting future opportunities. [...] conserving them imminently. What is often forgotten in the energy debate is that oil, natural gas, and coal are not only used as energy sources, but we also rely on them for embodying many crucial physical products. It is this fact that requires us to develop a solar hydrogen platform with urgency. It is argued that a solar future is unavoidable, as ultimately humankind has no other choice. KEYWORDS | Consolidated utility time; economics of energy; electrolysis; energy; energy efficiency; energy generation; energy policy; energy supply; hydrogen; hydrogen liquefaction; hydrogen storage; hydrogen transfer; hydrogen transport; -

Paul Davies; Oxford University Press 2006)

Curriculum Vitae Paul C.W. Davies Beyond: Center for Fundamental Concepts in Science Arizona State University http://beyond.asu.edu P.O. Box 871504, Tempe, AZ 85287-1504 (480) 727-0774 Fax: 480 965 7954 [email protected] Nationality: British & Australian Education/degrees BSc First Class in Physics, University College London, 1967 Ph.D, Physics Department, University College London, 1970 DSc honoris causa, Macquarie University, Sydney (2006) DSc honoris causa, Chapman University, California (2009) Professional Appointments 2006- Director, Beyond: Center for Fundamental Concepts in Science, Co-Director ASU Cosmology Initiative, College Professor, Arizona State University 2001 - 2006 Professor of Natural Philosophy, Macquarie University 1998 - Visiting Professor, Department of Physics, Imperial College London 1998 - Adjunct Professor, Department of Physics, University of Queensland 1993 - 1997 Professor of Natural Philosophy, Department of Physics, The University of Adelaide 1990 - 1993 Professor of Mathematical Physics, The University of Adelaide 1980 - 1990 Professor of Theoretical Physics, University of Newcastle upon Tyne 1972 - 1980 Lecturer in Mathematics, King's College, University of London 1970 - 1972 Research Fellow, Institute of Theoretical Astronomy, University of Cambridge Professional organizations Fellow, UK Institute of Physics Chartered Physicist (CPhys), UK Institute of Physics Fellow, Australian Institute of Physics Fellow, World Economic Forum Fellow, Royal Literary Society Honorary Fellow, Indian Astronomical Society -

Stochastic Resonance: from Suprathreshold Stochastic Resonance to Stochastic Signal Quantization Mark D

Cambridge University Press 978-0-521-88262-0 - Stochastic Resonance: From Suprathreshold Stochastic Resonance to Stochastic Signal Quantization. Mark D. McDonnell, Nigel G. Stocks, Charles E. M. Pearce and Derek Abbott. Frontmatter. STOCHASTIC RESONANCE: From Suprathreshold Stochastic Resonance to Stochastic Signal Quantization. Stochastic resonance occurs when random noise provides a signal processing benefit, and has been observed in many physical and biological systems. Many aspects have been hotly debated by scientists for nearly 30 years, with one of the main questions being whether biological neurons utilise stochastic resonance. This book addresses in detail various theoretical aspects of stochastic resonance with a focus on its extension to suprathreshold stochastic resonance, in the context of stochastic signal quantization theory. Models in which suprathreshold stochastic resonance occur support significantly enhanced “noise benefits”, and exploitation of the effect may prove extremely useful in the design of future engineering systems such as distributed sensor networks, nano-electronics, and biomedical prosthetics. For the first time, this book reviews and systemizes the topic in a way that brings clarity to a range of researchers from computational neuroscientists through to electronic engineers. To set the scene, the initial chapters review stochastic resonance and outline some of the controversies and debates that have surrounded it. The book then discusses the suprathreshold stochastic resonance effect as a form of stochastic quantization. Next, it considers various extensions, such as optimization and tradeoffs for stochastic quantizers. On the topic of biomedical prosthetics, the book culminates in a chapter on the application of suprathreshold stochastic resonance to the design of cochlear implants. -

A Hybrid CMOS-Memristor Neuromorphic Synapse Mostafa Rahimi Azghadi, Member, IEEE, Bernabe Linares-Barranco, Fellow, IEEE, Derek Abbott, Fellow, IEEE, Philip H.W

IEEE TRANSACTIONS ON BIOMEDICAL CIRCUITS AND SYSTEMS 1 A Hybrid CMOS-memristor Neuromorphic Synapse. Mostafa Rahimi Azghadi, Member, IEEE, Bernabe Linares-Barranco, Fellow, IEEE, Derek Abbott, Fellow, IEEE, Philip H.W. Leong, Senior Member, IEEE. Abstract—Although data processing technology continues to advance at an astonishing rate, computers with brain-like processing capabilities still elude us. It is envisioned that such computers may be achieved by the fusion of neuroscience and nano-electronics to realize a brain-inspired platform. This paper proposes a high-performance nano-scale Complementary Metal Oxide Semiconductor (CMOS)-memristive circuit, which mimics a number of essential learning properties of biological synapses. The proposed synaptic circuit that is composed of memristors and CMOS transistors, alters its memristance in response to timing differences among its pre- and post-synaptic action potentials, giving rise to a family of Spike Timing Dependent Plasticity (STDP). The presented design advances preceding memristive synapse designs with regards to the ability to replicate essential [...] that is capable of faithfully reproducing a larger regime of experimental data that takes into account conventional STDP [11], frequency-dependent STDP [12], triplet [13], [14] and quadruplet [14], [15] plasticity experiments. In a recent study, Wei et al. replicated the outcome of a variety of synaptic plasticity experiments including STDP, frequency-dependent STDP, triplet, and quadruplet spike interactions, using a TiO2 memristor [16]. This paper proposes a new hybrid CMOS-memristive circuit that aims to emulate all the aforementioned experimental data, with minimal errors close to those reported in a phenomenological model of Triplet STDP (TSTDP) rule presented in [14]. -

ACCEPTED VERSION Omid Kavehei, Said Al-Sarawi, Kyoung-Rok Cho

ACCEPTED VERSION Omid Kavehei, Said Al-Sarawi, Kyoung-Rok Cho, Kamran Eshraghian and Derek Abbott An Analytical Approach for Memristive Nanoarchitectures IEEE Transactions on Nanotechnology, 2012; 11(0002):374-385 © 2011 IEEE DOI: 10.1109/TNANO.2011.2174802 © 2011 IEEE Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works. PERMISSIONS http://www.ieee.org/publications_standards/publications/rights/rights_policies.html Authors and/or their employers shall have the right to post the accepted version of IEEE-copyrighted articles on their own personal servers or the servers of their institutions or employers without permission from IEEE, provided that the posted version includes a prominently displayed IEEE copyright notice (as shown in 8.1.9.B, above) and, when published, a full citation to the original IEEE publication, including a Digital Object Identifier (DOI). Authors shall not post the final, published versions of their articles. 18 March 2015 http://hdl.handle.net/2440/71514 MANUSCRIPT TO IEEE TRANSACTIONS ON NANOTECHNOLOGY. PREVIOUS ID: TNANO-00235-2011 1 An Analytical Approach for Memristive Nanoarchitectures. Omid Kavehei, Student Member, IEEE, Said Al-Sarawi, Member, IEEE, Kyoung-Rok Cho, Member, IEEE, Kamran Eshraghian, and Derek Abbott, Fellow, IEEE. Abstract—As conventional memory technologies are challenged by their technological physical limits, emerging technologies driven by novel materials are becoming an attractive option for future memory architectures. [...] that the performance characteristics of these emerging technologies are rather promising when compared with the current large memory arrays based on Static RAM -

Nuclear Power Is Not "Commercially Viable." This Conclusion Holds for Victoria, Given the Facts in Point (A) Above

LC EPC Inquiry into Nuclear Prohibition, submission 23. Inquiry Name: Inquiry into Nuclear Prohibition. Professor Derek Abbott, University of Adelaide, SA 5005. SUBMISSION CONTENT: a) Nuclear is not suited to the modern electricity grid. Due to rapid advances in power electronics, changing nature of demand, and greater variety of generators, the grid needs storage and generators that respond rapidly. Nuclear has a slow response and thus is not an ideal source, leading to a poor or even negative return on investment. b) The first attachment is my article in AQ discussing a number of broader reasons why nuclear is also inappropriate in Australia. c) It is well‐known that the recent SA Royal Commission into Nuclear found that nuclear power is not "commercially viable." This conclusion holds for Victoria, given the facts in point (a) above. d) The World Nuclear Status report can be downloaded from here: https://www.worldnuclearreport.org/IMG/pdf/wnisr2019‐v2‐lr.pdf It shows that the nuclear industry is in spiraling decline around the world. Thus, investment in a declining global industry is another reason to proceed with caution. This decline began before Fukushima, and the real reasons are to do with point (a) and resulting economic factors. e) To put Australia's uranium mining industry into perspective, note that in Australia we earn more exporting almonds than uranium (!) Our income on uranium exports has rapidly declined over the last decade. Ten years ago, we earned $100 for every pound in weight of uranium oxide, and that has gradually dropped to only $25. This is because the nuclear industry is in decline, and large production from Kazakhstan floods the market, dropping the price. -

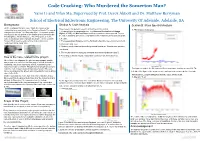

Who Murdered the Somerton Man? Yami Li and Yifan Ma, Supervised by Prof

Code Cracking: Who Murdered the Somerton Man? Yami Li and Yifan Ma, Supervised by Prof. Derek Abbott and Dr. Matthew Berryman, School of Electrical & Electronic Engineering, The University of Adelaide, Adelaide, SA. Background: On the morning of 1st December 1948, the corpse of an unidentified man was found on Somerton Beach. The man is always referred to as “The Somerton Man”. As a scrap of paper printed with the Persian phrase Tamam Shud (i.e. “The End”) was found in the fob pocket of the dead man’s trousers, the case is also known as the Tamam Shud case. For 65 years the Tamam Shud case has endured as one of Australia’s most mysterious and peculiar cases. Figure 1. The Somerton Man. Figure 2. The Somerton Man. How is the case related to the project: After the police investigation, the aforementioned scrap of paper was proved to be torn from the final page of a book. Section A: Code Analysis. The general research procedure in this section was as follows: 1. Texts were prepared for comparison. The Universal Declaration of Human Rights (UDHR), War and Peace and their translations were selected. To match the code's format, all texts were transformed into initialisms using Java and the gedit text editor. 2. The Levenshtein Distance and the Simhash algorithms were implemented and customized using Java. 3. Similarity check tests were designed and carried out. Results were saved in .csv format. 4. The results were analyzed, reshaped and selectively plotted using R. 5. Based on the test results, reasonable conclusions were drawn. Meaningful results are presented below: Section B: Mass Spectral Analysis. 1. Pb-Content analysis