Random Forests and Beyond



Recommended publications


2018 NBA Playoffs: T-Wolves End Playoff Drought

Author: Nick Billion

It may have taken all 82 regular season games, but the Minnesota Timberwolves are headed back to the NBA Playoffs for the first time since 2004, ending what was the longest playoff drought in the league. Not only did they clinch in the final game of the season, they did it in the best way possible. The Wolves faced the Nuggets in a winner-gets-in, loser-goes-home game that drew a lot of attention, and it did not disappoint. There was plenty of back-and-forth action, big plays and even overtime! However, let's take a deeper look at how the Wolves got back to this spot.

This offseason was big for the Wolves, who made some major moves. First, they sent Zach LaVine and Kris Dunn to the Chicago Bulls for All-Star guard Jimmy Butler. This seemed like a steal for the Wolves, as Dunn had a rough rookie year and LaVine was recovering from injury. It was also a reunion for Coach Tom Thibodeau and Jimmy Butler, as Thibodeau formerly coached the Chicago Bulls. That was not the end of the Wolves' offseason: they also signed a former teammate of Butler's, Taj Gibson, and a former All-Star guard, Jeff Teague. This new-look Wolves team would have Teague, Butler, Wiggins, Gibson, and Towns as its starting five, not a bad group at all. With two young, athletic players in Towns and Wiggins, Butler and Gibson knowing Thibodeau's play style, and Teague running point, this team caught a lot of attention early in the season.

Iran Remain Top Asian Futsal Team in Fresh AMF Rankings

May 23, 2018

Iran Remain Top Asian Futsal Team in Fresh AMF Rankings

TEHRAN (Press TV) - The Iran men’s national futsal team has maintained its position as Asia’s best in the latest Asociación Mundial de Futsal (AMF) rankings, and upheld its place in the world’s overall standings to stay put in the sixth slot. According to the latest classification published by the AMF, which is the governing body of futsal, the Iranian squad gained 1,654 points. Kazakhstan moved up one place in the latest AMF rankings to sit in the eighth slot with 1,532 points. The Central Asians are followed by Japan’s Samurai Five and the Small Table of Thailand, who have claimed the 15th and 18th places respectively with 1,358 and 1,285 points. The Brazil national futsal team, nicknamed Canarinho (Little Canary), is the top-ranked futsal [...] points. [...] points to sit in the second position, [...] notched up 1,700 points.

Photo caption: The Iran men’s national futsal team (file photo)

LeBron James Scores 44 as Cavaliers Even Series With Celtics

WASHINGTON (Reuters) - LeBron James scored 44 points on 17-of-28 shooting to lead the Cleveland Cavaliers to a 111-102 victory over [...] the floor. Hill’s basket gave the Cavaliers a 96-81 lead with 9:53 remaining in the contest before the Celtics rattled off [...]

Photo caption: May 21, 2018; Cleveland, OH, USA; Cleveland Cavaliers forward LeBron James (23) drives to the basket against Boston Celtics guard Marcus Smart (36) during the fourth quarter in game four of the Eastern conference finals of the 2018 NBA Playoffs at Quicken Loans Arena.

The Paw Print Poll

The Paw Print, Western Middle School, Russiaville, IN. Volume 1, Issue 17, April 20, 2018.

Opening Day Parade THIS Saturday at 9 a.m.!

By Jackson McKillip, Mason Morgan, and Tate Heston

RYBL stands for Russiaville Youth Baseball League and includes the Russiaville baseball teams, like Stout & Son, Burger King, Martin Brothers, Waddell’s, Hollingsworth Lumber, and Lions Club. All of these teams are from the major league of Russiaville baseball. RGSL stands for Russiaville Girls Softball League. Each year these organizations have a parade on opening day. Each team has to make its own float to ride on while the players throw candy to the crowds in the streets. The Western High School band marches, and Fire Department trucks drive in the parade. When all the teams are done throwing candy on their route, they go on to the major league field to throw the first pitch and draw the names for the raffle ticket contest. Each team plays at least ten teams and plays at least ten games in a season. The newest thing is the Home Run Target out in center field, outside the RYBL 12U fence. If you hit the target with a home run, you get a free meal. At the end of the baseball season, we play a tournament for Russiaville teams only. There are many students at WMS who play for RYBL and RGSL. Each year it is exciting and fun to play for a Russiaville team. You should try it!

Meet the New Paw Print Staff

We welcome the following members to this term’s Paw Print staff: (back row, from left to right) Gage Engle, Mason Morgan, Teagan Obenchain, Tate Heston, Paiten Bell, and Emily Smith; (front row, from left to right) Jackson McKillip and Paul Lowery.

Thunder Guide 2018 R110518.Pdf

TABLE OF CONTENTS

GENERAL INFORMATION: General Information (p. 4).

THUNDER OWNERSHIP GROUP: Clayton I. Bennett (p. 7); Board of Directors (p. 7).

PLAYERS: Photo Roster (p. 10); Roster (p. 11); Alex Abrines (p. 12).

ALL-TIME RECORDS: Year-By-Year Record (p. 119); All-Time Coaching Records (p. 120); Opening Night (p. 121); All-Time Opening-Night Starting Lineups (p. 122); High-Low Scoring Games/Win-Loss Streaks (p. 122); All-Time Winning-Losing Streaks/Win-Loss Margins (p. 124); Overtime Results (p. 125); Team Records (p. 127); Opponent Team Records (p. 128).

Lebron Carries Cavs Back to Finals Cavaliers Rally to Beat Celtics in Game Seven

Established 1961. Sport, TUESDAY, MAY 29, 2018.

Also on these pages: (25) Froome prepares Tour de France defence; (26) Indians rally to beat Houston Astros 10-9 on Greg Allen’s homer; (27) Kane Williamson backs Afghan teen Khan for Test success.

Photo caption: BOSTON: LeBron James #23 of the Cleveland Cavaliers handles the ball against the Boston Celtics during Game Seven of the Eastern Conference Finals of the 2018 NBA Playoffs on Sunday at the TD Garden in Boston, Massachusetts. (AFP)

LOS ANGELES: LeBron James booked his eighth straight NBA finals appearance, delivering another epic game-seven performance on the road as the Cleveland Cavaliers rallied to beat the Boston Celtics 87-79 on Sunday. The 33-year-old James played all 48 minutes and finished with 35 points, 15 rebounds and nine assists for the Cavaliers who are in the league championship for [...]

[...] 115-86 on Saturday to force a decisive game seven which will take place Monday in Houston. James believes that they can outhustle, outsmart and outcoach whoever they face in the final. “We have an opportunity to win a championship. No matter whether we are picked to win or not, we have a great game plan. [...]

[...] have to play 48 minutes. “It was asked of me tonight to play the whole game, and I just tried to figure out how I could get through it,” said James, who played 48 minutes for the first time in a playoff game since 2006 as a 21-year-old against the Detroit Pistons. “Throughout the timeouts, I was able to catch my [...]

[...] playoff home games. Al Horford finished with 17 points and Jaylen Brown had 13 but he was just three-of-12 from the three-point line.

CELTICS FIRE BLANKS

Boston made the mistake of using the three pointer as [...]

Table of Contents

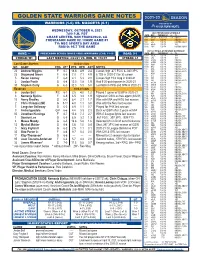

GOLDEN STATE WARRIORS GAME NOTES

WARRIORS (1-0) VS. NUGGETS (0-1)
WEDNESDAY, OCTOBER 6, 2021, 7:00 P.M. PDT
CHASE CENTER, SAN FRANCISCO, CA
PRESEASON GAME #2 / HOME GAME #1
TV: NBC SPORTS BAY AREA
RADIO: 95.7 THE GAME

PRESEASON SERIES (SINCE 1980): WARRIORS LEAD, 11-10
LAST MEETING: 4/23/21 VS. DEN, W, 118-97
HOME: ---, STREAK: W1; ROAD: 0-1, STREAK: L1

2021 PRESEASON SCHEDULE

| DATE | OPP | TIME/RESULT | +/- | TV |
|---|---|---|---|---|
| 10/4 | at POR | W, 121-107 | +14 | NBA TV |
| 10/6 | DEN | 7:00 PM | | NBCSBA |
| 10/8 | LAL | 7:00 PM | | NBCSBA, NBA TV |
| 10/12 | at LAL | 7:30 PM | | TNT |
| 10/15 | POR | 7:00 PM | | NBCSBA, NBA TV |

2021-22 REGULAR SEASON SCHEDULE

| DATE | OPP | TIME/RESULT | +/- | TV |
|---|---|---|---|---|
| 10/19 | at LAL | 7:00 PM | | TNT |
| 10/21 | LAC | 7:00 PM | | TNT |
| 10/24 | at SAC | 6:00 PM | | NBCSBA |
| 10/26 | at OKC | 5:00 PM | | NBCSBA |
| 10/28 | MEM | 7:00 PM | | NBCSBA |
| 10/30 | OKC | 5:30 PM | | NBCSBA |
| 11/3 | CHA | 7:00 PM | | NBCSBA, ESPN |
| 11/5 | NOP | 7:00 PM | | NBCSBA, ESPN |
| 11/7 | HOU | 5:30 PM | | NBCSBA |
| 11/8 | ATL | 7:00 PM | | NBCSBA |
| 11/10 | MIN | 7:00 PM | | NBCSBA |
| 11/12 | CHI | 7:00 PM | | NBCSBA, ESPN |
| 11/14 | at CHA | 4:00 PM | | NBCSBA |
| 11/16 | at BKN | 4:30 PM | | TNT |
| 11/18 | at CLE | 4:30 PM | | NBCSBA |
| 11/19 | at DET | 4:00 PM | | NBCSBA |
| 11/21 | TOR | 5:30 PM | | NBCSBA |
| 11/24 | | | | |

Last Game Starters (2020-21 Stats)

| NO. | NAME | POS | HT^ | PTS | REB | AST | NOTES |
|---|---|---|---|---|---|---|---|
| 22 | Andrew Wiggins | F | 6-7 | 18.6 | 4.9 | 2.4 | Career-high .477 FG% & .380 3P% |
| 23 | Draymond Green | F | 6-6 | 7.0 | 7.1 | 8.9 | 6 TD3 in 2020-21 for 30 career |
| 5 | Kevon Looney | F | 6-9 | 4.1 | 5.3 | 2.0 | Career-high 19.0 mpg in 2020-21 |
| 3 | Jordan Poole | G | 6-4 | 12.0 | 1.8 | 1.9 | Had 9 20-point games in 2020-21 |
| 30 | Stephen Curry | G | 6-3 | 32.0 | 5.5 | 5.8 | Led NBA in PPG and 3PM in 2020-21 |

Reserves (2020-21 Stats)

| NO. | NAME | POS | HT^ | PTS | REB | AST | NOTES |
|---|---|---|---|---|---|---|---|
| 6 | Jordan Bell | F/C | 6-7 | 2.5 | 4.0 | 1.2 | Played 1 game w/ GSW in 2020-21 |

2018 NBA Playoffs Pg

2018 NBA Playoffs Table of Contents: 1. Golden State Warriors (p. 2); 2. Cleveland Cavaliers (p. 7); 3. Boston Celtics (p. 11); 4. Houston Rockets (p. 20); 5. Philadelphia 76ers (p. 23); 6. San Antonio Spurs (p. 26); 7. Utah Jazz (p. 27); 8. Oklahoma City Thunder (p. 28); 9. New Orleans Pelicans (p. 29); 10. Indiana Pacers (p. 30); 11. Toronto Raptors (p. 31); 12. Milwaukee Bucks (p. 32); 13. Washington Wizards (p. 33); 14. Portland Trail Blazers (p. 34); 15. Miami Heat (p. 35); 16. Minnesota Timberwolves (p. 36). #NBAXsOs Powered by #TeamFastModel.

Golden State Warriors play diagrams (images not reproduced; diagram author in parentheses): Corner Split Option (Austin Anderson); Delay (KJ Smith); Delay Hammer (Tony Miller); Drag Open (Austin Anderson); Drag to Post (Austin Anderson); Early Random Offense (Austin Anderson); Elbow Quick (Austin Anderson); Elbow Stagger Option (Austin Anderson); Fist Down (Austin Anderson).

Rockets Edge Warriors to Level Series Warriors Lacked Starter Iguodala with Bruised Left Leg

Established 1961. Sport, THURSDAY, MAY 24, 2018.

Also on these pages: (25) ‘Fearless’ Pakistan ready to put England under pressure; (26) Sleepless nights for Bangladesh WCup flagmakers; (27) Arsenal appoint ‘progressive’ Emery as Wenger successor.

Photo caption: OAKLAND: Stephen Curry #30 of the Golden State Warriors drives to the basket for a shot against the Houston Rockets during Game Four of the Western Conference Finals of the 2018 NBA Playoffs at ORACLE Arena on Tuesday in Oakland, California. (AFP)

SAN FRANCISCO: NBA regular-season wins leader Houston, powered by James Harden’s 30 points and 27 from Chris Paul, edged defending champion Golden State 95-92 Tuesday to level their semi-final playoff series at two wins each. The Rockets snapped Golden [...]

“Hopefully basketball can be a way for people to ease their minds, if only for a moment,” Paul said. The series winner will face either Cleveland or Boston in the NBA Finals starting May 31. Stephen Curry led Golden State with 28 points on 10-of-26 shooting while Kevin Durant [...]

The Warriors lacked starter Andre Iguodala with a bruised left leg and saw Klay Thompson strain his left knee in the second quarter while Houston’s Paul said his nagging left foot injury was improved. “It’s as good as it’s going to be right now,” Paul said.

“We just ran out of gas,” Warriors coach Steve Kerr said. “They outplayed us in the fourth quarter and that was the difference. This game was just trench warfare, everybody grinding it out.”

2018 NBA Playoffs: Warriors Rise Up, Rout Spurs in Game 1

Author: Brian Snow

The Golden State Warriors are the defending champions, and they showed why on Saturday afternoon. Fifty-four percent shooting, 27 points from Klay Thompson to pace five Warriors in double figures, and a savage, focused defense led the champs to a 113-92 win over the San Antonio Spurs in Game 1 of the Western Conference Playoffs. The savage defense was led by none other than JaVale McGee. Yes, the Shaqtin' legend has carved out his own legend in the Bay: he tallied 15 points, four rebounds, and two blocks in a starting role as the Warriors never trailed.

Golden State took a 28-17 lead after the first quarter and kept building on its double-digit lead from there. One of the most telling stats of the game came in the second quarter, when the Spurs made just one field goal in the last seven minutes of the period. The Warriors led 57-41 at the break. To go with Thompson's 27 and McGee's 15, Kevin Durant added 24, Draymond Green added 12 points, 11 assists and 8 rebounds, and Shaun Livingston added 11 in the Warriors attack.

For San Antonio, turnovers and missed shots, particularly in that dry second period, doomed them from the start. Superstar Kawhi Leonard did not play, and no ruling has been made for Game 2. Stephen Curry has been ruled out for the entire first round.

P47 Layout 1

Sports, Friday, May 4, 2018

Jazz hold off Rockets to level NBA playoff series

Joe Ingles delivers a scintillating shooting performance

LOS ANGELES: The Utah Jazz started strong then held their nerve to beat the top-seeded Houston Rockets 116-108 on Wednesday and level their NBA playoffs second-round series at one game apiece. Australian forward Joe Ingles scored a playoff career-high 27 points and star rookie Donovan Mitchell added 17 as Utah used a big fourth quarter to thwart a second-half comeback bid by superstar James Harden and the Rockets in Houston. Ingles, a 30-year-old who played internationally as a pro for eight years before landing in the NBA in 2014, drained seven of nine three-point attempts. That included two late in the fourth, when his three from the left corner with 4:25 remaining took the Jazz lead to 108-96.

[...] came out a little too lackadaisical,” said Harden. “We were kind of going through the motions.” Just when it looked like the potent Rockets had warmed up and might pull away, the Jazz responded and were up 86-85 heading into the final period. “They’re obviously a good team,” Ingles said of the Rockets, who posted the league’s best record in the regular season. “They made runs - we knew they were going to make runs.

“Sticking together, I think we did a really good job of that,” he added. “We were able to make our own runs when it was our turn as well.” Jazz coach Quin Snyder was pleased with the poise his young team, still coping with the injury absence of Span[...]

Lebron Scores 44 to Lead Cavaliers James Adds More Records to His Amazing NBA Legacy

Established 1961. Sport, WEDNESDAY, MAY 23, 2018.

Also on these pages: (25) Capitals blank Lightning to force NHL playoff showdown; (26) Juan Soto three-run homer trigger Nationals to a 10-2 win over Padres; (27) Pellegrini targets attacking revolution at West Ham.

Photo caption: CLEVELAND: Tristan Thompson #13 of the Cleveland Cavaliers goes to the basket against the Boston Celtics during Game Four of the Eastern Conference Finals of the 2018 NBA Playoffs on Monday at Quicken Loans Arena in Cleveland, Ohio. (AFP)

WASHINGTON: LeBron James scored 44 points, adding more records to his amazing NBA legacy, and powered the Cleveland Cavaliers over Boston 111-102 Monday, equalizing their Eastern Conference final at two wins each. The 33-year-old superstar made 17-of-28 shots [...]

[...] Houston or defending champion Golden State in the NBA Finals starting May 31. James seeks his eighth consecutive trip to the NBA Finals and fourth with the Cavaliers. In unleashing his sixth 40-point performance of this year’s playoffs, the most such efforts in any year since [...]

[...] a 34-18 lead after the first quarter, Cleveland closing the period on a 13-3 run and the Celtics missing three dunks in the opening 12 minutes. “Once we settled down after the first we played much better. I don’t think they were doing anything different,” Celtics forward Al Horford said.

[...] have to have the same mindset we had the past two games. If our mind is there, we have what it takes to be victorious.”

TURNOVERS DEADLY FOR CAVS