Lecture 2 - Introduction to Polytopes



Recommended publications


Geometry of Generalized Permutohedra

Geometry of Generalized Permutohedra, by Jeffrey Samuel Doker. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Mathematics in the Graduate Division of the University of California, Berkeley. Committee in charge: Federico Ardila (Co-chair), Lior Pachter (Co-chair), Matthias Beck, Bernd Sturmfels, Lauren Williams, Satish Rao. Fall 2011. Copyright 2011 by Jeffrey Samuel Doker. Abstract: We study generalized permutohedra and some of the geometric properties they exhibit. We decompose matroid polytopes (and several related polytopes) into signed Minkowski sums of simplices and compute their volumes. We define the associahedron and multiplihedron in terms of trees and show them to be generalized permutohedra. We also generalize the multiplihedron to a broader class of generalized permutohedra, and describe their face lattices, vertices, and volumes. A family of interesting polynomials that we call composition polynomials arises from the study of multiplihedra, and we analyze several of their surprising properties. Finally, we look at generalized permutohedra of different root systems and study the Minkowski sums of faces of the crosspolytope. Dedication: To Joe and Sue. Contents: List of Figures; 1 Introduction; 2 Matroid polytopes and their volumes (2.1 Introduction; 2.2 Matroid polytopes are generalized permutohedra; 2.3 The volume of a matroid polytope; 2.4 Independent set polytopes; 2.5 Truncation flag matroids); 3 Geometry and generalizations of multiplihedra: 3.1 Introduction ...

Lecture 3 1 Geometry of Linear Programs

ORIE 6300 Mathematical Programming I, September 2, 2014. Lecture 3. Lecturer: David P. Williamson. Scribe: Divya Singhvi. Last time we discussed how to take the dual of an LP in two different ways. Today we will talk about the geometry of linear programs. 1 Geometry of Linear Programs. First we need some definitions. Definition 1: A set $S \subseteq \mathbb{R}^n$ is convex if $\lambda x + (1 - \lambda) y \in S$ for all $x, y \in S$ and all $\lambda \in [0, 1]$. [Figure 1: Examples of convex and non-convex sets.] Given a set of inequalities we define the feasible region as $P = \{x \in \mathbb{R}^n : Ax \le b\}$. We say that $P$ is a polyhedron. [Figure 2: Example of a polyhedron. "Circled" corners are feasible and "squared" are non-feasible.] Which points on this figure can have the optimal value? Our intuition from last time is that optimal solutions to linear programming problems occur at "corners" of the feasible region. What we'd like to do now is to consider formal definitions of the "corners" of the feasible region. One idea is that a point in the polyhedron is a corner if there is some objective function that is minimized there uniquely. Definition 2: $x \in P$ is a vertex of $P$ if $\exists c \in \mathbb{R}^n$ with $c^T x < c^T y$ for all $y \ne x$, $y \in P$. Another idea is that a point $x \in P$ is a corner if there are no small perturbations of $x$ that are in $P$. Definition 3: Let $P$ be a convex set in $\mathbb{R}^n$. Then $x \in P$ is an extreme point of $P$ if $x$ cannot be written as $\lambda y + (1 - \lambda) z$ for $y, z \in P$, $y, z \ne x$, $0 \le \lambda \le 1$.

Two Poset Polytopes

Discrete Comput Geom 1:9-23 (1986). Discrete & Computational Geometry, © 1986 Springer-Verlag New York Inc. Two Poset Polytopes. Richard P. Stanley, Department of Mathematics, Massachusetts Institute of Technology, Cambridge, MA 02139. Abstract. Two convex polytopes, called the order polytope $\mathcal{O}(P)$ and chain polytope $\mathcal{C}(P)$, are associated with a finite poset $P$. There is a close interplay between the combinatorial structure of $P$ and the geometric structure of $\mathcal{O}(P)$. For instance, the order polynomial $\Omega(P, m)$ of $P$ and Ehrhart polynomial $i(\mathcal{O}(P), m)$ of $\mathcal{O}(P)$ are related by $\Omega(P, m+1) = i(\mathcal{O}(P), m)$. A "transfer map" then allows us to transfer properties of $\mathcal{O}(P)$ to $\mathcal{C}(P)$. In particular, we transfer known inequalities involving linear extensions of $P$ to some new inequalities. 1. The Order Polytope. Our aim is to investigate two convex polytopes associated with a finite partially ordered set (poset) $P$. The first of these, which we call the "order polytope" and denote by $\mathcal{O}(P)$, has been the subject of considerable scrutiny, both explicit and implicit. Much of what we say about the order polytope will be essentially a review of well-known results, albeit ones scattered throughout the literature, sometimes in a rather obscure form. The second polytope, called the "chain polytope" and denoted $\mathcal{C}(P)$, seems never to have been previously considered per se. It is a special case of the vertex-packing polytope of a graph (see Section 2) but has many special properties not in general valid or meaningful for graphs.

Computational Geometry – Problem Session Convex Hull & Line Segment Intersection

Computational Geometry – Problem Session: Convex Hull & Line Segment Intersection. LEHRSTUHL FÜR ALGORITHMIK · INSTITUT FÜR THEORETISCHE INFORMATIK · FAKULTÄT FÜR INFORMATIK. Guido Brückner, 04.05.2018. Modus Operandi: To register for the oral exam we expect you to present an original solution for at least one problem in the exercise session. This is about working together; don't worry if your idea doesn't work! Outline: Convex Hull; Line Segment Intersection. Definition of Convex Hull. Def: A region $S \subseteq \mathbb{R}^2$ is called convex when, for any two points $p, q \in S$, the line segment $\overline{pq} \subseteq S$. The convex hull $CH(S)$ of $S$ is the smallest convex region containing $S$. In physics:

1 Lifts of Polytopes

Lecture 5: Lifts of polytopes and non-negative rank. CSE 599S: Entropy optimality, Winter 2016. Instructor: James R. Lee. Last updated: January 24, 2016. 1 Lifts of polytopes. 1.1 Polytopes and inequalities. Recall that the convex hull of a subset $X \subseteq \mathbb{R}^n$ is defined by $\mathrm{conv}(X) = \{\lambda x + (1 - \lambda) x' : x, x' \in X, \lambda \in [0, 1]\}$. A $d$-dimensional convex polytope $P \subseteq \mathbb{R}^d$ is the convex hull of a finite set of points in $\mathbb{R}^d$: $P = \mathrm{conv}(\{x_1, \ldots, x_k\})$ for some $x_1, \ldots, x_k \in \mathbb{R}^d$. Every polytope has a dual representation: it is a closed and bounded set defined by a family of linear inequalities, $P = \{x \in \mathbb{R}^d : Ax \le b\}$ for some matrix $A \in \mathbb{R}^{m \times d}$. Let us define a measure of complexity for $P$: define $\gamma(P)$ to be the smallest number $m$ such that for some $C \in \mathbb{R}^{s \times d}$, $y \in \mathbb{R}^s$, $A \in \mathbb{R}^{m \times d}$, $b \in \mathbb{R}^m$, we have $P = \{x \in \mathbb{R}^d : Cx = y \text{ and } Ax \le b\}$. In other words, this is the minimum number of inequalities needed to describe $P$. If $P$ is full-dimensional, then this is precisely the number of facets of $P$ (a facet is a maximal proper face of $P$). Thinking of $\gamma(P)$ as a measure of complexity makes sense from the point of view of optimization: interior point methods can efficiently optimize linear functions over $P$ (to arbitrary accuracy) in time that is polynomial in $\gamma(P)$. 1.2 Lifts of polytopes. Many simple polytopes require a large number of inequalities to describe.

Simplicial Complexes

III Complexes. III.1 Simplicial Complexes. There are many ways to represent a topological space, one being a collection of simplices that are glued to each other in a structured manner. Such a collection can easily grow large but all its elements are simple. This is not so convenient for hand-calculations but close to ideal for computer implementations. In this book, we use simplicial complexes as the primary representation of topology. Simplices. Let $u_0, u_1, \ldots, u_k$ be points in $\mathbb{R}^d$. A point $x = \sum_{i=0}^{k} \lambda_i u_i$ is an affine combination of the $u_i$ if the $\lambda_i$ sum to 1. The affine hull is the set of affine combinations. It is a $k$-plane if the $k + 1$ points are affinely independent, by which we mean that any two affine combinations, $x = \sum \lambda_i u_i$ and $y = \sum \mu_i u_i$, are the same iff $\lambda_i = \mu_i$ for all $i$. The $k + 1$ points are affinely independent iff the $k$ vectors $u_i - u_0$, for $1 \le i \le k$, are linearly independent. In $\mathbb{R}^d$ we can have at most $d$ linearly independent vectors and therefore at most $d + 1$ affinely independent points. An affine combination $x = \sum \lambda_i u_i$ is a convex combination if all $\lambda_i$ are non-negative. The convex hull is the set of convex combinations. A $k$-simplex is the convex hull of $k + 1$ affinely independent points, $\sigma = \mathrm{conv}\{u_0, u_1, \ldots, u_k\}$. We sometimes say the $u_i$ span $\sigma$. Its dimension is $\dim \sigma = k$. We use special names for the first few dimensions: vertex for 0-simplex, edge for 1-simplex, triangle for 2-simplex, and tetrahedron for 3-simplex; see Figure III.1.
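The criterion stated in the excerpt above (k + 1 points are affinely independent iff the k difference vectors u_i − u_0 are linearly independent) can be checked mechanically. The following is a minimal Python sketch, not taken from the excerpted book: the helper names `rank` and `affinely_independent` are hypothetical, and the rank is computed by plain Gaussian elimination over exact fractions.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix (list of rows), by Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in rows]
    ncols = len(m[0]) if m else 0
    r = 0  # number of pivot rows found so far
    for c in range(ncols):
        # Look for a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        # Eliminate column c from all rows below the pivot row.
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break
    return r


def affinely_independent(points):
    """True iff the difference vectors u_i - u_0 are linearly independent."""
    u0 = points[0]
    diffs = [[a - b for a, b in zip(u, u0)] for u in points[1:]]
    return rank(diffs) == len(diffs)


# Three non-collinear points in the plane span a triangle (a 2-simplex).
print(affinely_independent([(0, 0), (1, 0), (0, 1)]))
# Three collinear points do not.
print(affinely_independent([(0, 0), (1, 1), (2, 2)]))
```

Four points in the plane can never pass this test, which matches the excerpt's bound of at most d + 1 affinely independent points in R^d.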

Degrees of Freedom in Quadratic Goodness of Fit

Submitted to the Annals of Statistics. Degrees of Freedom in Quadratic Goodness of Fit. By Bruce G. Lindsay*, Marianthi Markatou† and Surajit Ray. Pennsylvania State University, Columbia University, Boston University. We study the effect of degrees of freedom on the level and power of quadratic distance based tests. The concept of an eigendepth index is introduced and discussed in the context of selecting the optimal degrees of freedom, where optimality refers to high power. We introduce the class of diffusion kernels by the properties we seek these kernels to have and give a method for constructing them by exponentiating the rate matrix of a Markov chain. Product kernels and their spectral decomposition are discussed and shown useful for high dimensional data problems. 1. Introduction. Lindsay et al. (2008) developed a general theory for goodness of fit testing based on quadratic distances. This class of tests is enormous, encompassing many of the tests found in the literature. It includes tests based on characteristic functions, density estimation, and the chi-squared tests, as well as providing quadratic approximations to many other tests, such as those based on likelihood ratios. The flexibility of the methodology is particularly important for enabling statisticians to readily construct tests for model fit in higher dimensions and in more complex data. *Supported by NSF grant DMS-04-05637. †Supported by NSF grant DMS-05-04957. AMS 2000 subject classifications: Primary 62F99, 62F03; secondary 62H15, 62F05. Keywords and phrases: Degrees of freedom, eigendepth, high dimensional goodness of fit, Markov diffusion kernels, quadratic distance, spectral decomposition in high dimensions.

Archimedean Solids

University of Nebraska - Lincoln, DigitalCommons@University of Nebraska - Lincoln. MAT Exam Expository Papers, Math in the Middle Institute Partnership, 7-2008. Archimedean Solids, by Anna Anderson, University of Nebraska-Lincoln. Citation: Anderson, Anna, "Archimedean Solids" (2008). MAT Exam Expository Papers. 4. https://digitalcommons.unl.edu/mathmidexppap/4. In partial fulfillment of the requirements for the Master of Arts in Teaching with a Specialization in the Teaching of Middle Level Mathematics in the Department of Mathematics. Jim Lewis, Advisor. July 2008. A polygon is a simple, closed, planar figure with sides formed by joining line segments, where each line segment intersects exactly two others. If all of the sides have the same length and all of the angles are congruent, the polygon is called regular. The sum of the angles of a regular polygon with n sides, where n is 3 or more, is 180° × (n − 2). If a regular polygon were connected with other regular polygons in three-dimensional space, a polyhedron could be created. In geometry, a polyhedron is a three-dimensional solid which consists of a collection of polygons joined at their edges. The word polyhedron is derived from the Greek word poly (many) and the Indo-European term hedron (seat).

Slices, Slabs, and Sections of the Unit Hypercube

Slices, Slabs, and Sections of the Unit Hypercube. Jean-Luc Marichal, Institute of Mathematics, University of Luxembourg, 162A, avenue de la Faïencerie, L-1511 Luxembourg, Luxembourg. Michael J. Mossinghoff, Department of Mathematics, Davidson College, Davidson, NC 28035-6996, USA. Submitted: July 27, 2006; Revised: August 6, 2007; Accepted: January 21, 2008; Published: January 29, 2008. Abstract: Using combinatorial methods, we derive several formulas for the volume of convex bodies obtained by intersecting a unit hypercube with a halfspace, or with a hyperplane of codimension 1, or with a flat defined by two parallel hyperplanes. We also describe some of the history of these problems, dating to Pólya's Ph.D. thesis, and we discuss several applications of these formulas. Subject Class: Primary: 52A38, 52B11; Secondary: 05A19, 60D05. Keywords: Cube slicing, hyperplane section, signed decomposition, volume, Eulerian numbers. 1 Introduction. In this note we study the volumes of portions of n-dimensional cubes determined by hyperplanes. More precisely, we study slices created by intersecting a hypercube with a halfspace, slabs formed as the portion of a hypercube lying between two parallel hyperplanes, and sections obtained by intersecting a hypercube with a hyperplane. These objects occur naturally in several fields, including probability, number theory, geometry, physics, and analysis. In this paper we describe an elementary combinatorial method for calculating volumes of arbitrary slices, slabs, and sections of a unit cube. We also describe some applications that tie these geometric results to problems in analysis and combinatorics. Some of the results we obtain here have in fact appeared earlier in other contexts.

Locally Solid Riesz Spaces with Applications to Economics / Charalambos D

http://dx.doi.org/10.1090/surv/105. Charalambos D. Aliprantis, Owen Burkinshaw. Editorial Committee: Jerry L. Bona, Michael P. Loss, Peter S. Landweber (Chair), Tudor Stefan Ratiu, J. T. Stafford. 2000 Mathematics Subject Classification. Primary 46A40, 46B40, 47B60, 47B65, 91B50; Secondary 28A33. Selected excerpts in this Second Edition are reprinted with the permissions of Cambridge University Press, the Canadian Mathematical Bulletin, Elsevier Science/Academic Press, and the Illinois Journal of Mathematics. For additional information and updates on this book, visit www.ams.org/bookpages/surv-105. Library of Congress Cataloging-in-Publication Data: Aliprantis, Charalambos D. Locally solid Riesz spaces with applications to economics / Charalambos D. Aliprantis, Owen Burkinshaw. -- 2nd ed. p. cm. -- (Mathematical surveys and monographs, ISSN 0076-5376 ; v. 105). Rev. ed. of: Locally solid Riesz spaces. 1978. Includes bibliographical references and index. ISBN 0-8218-3408-8 (alk. paper). 1. Riesz spaces. 2. Economics, Mathematical. I. Burkinshaw, Owen. II. Aliprantis, Charalambos D. III. Locally solid Riesz spaces. IV. Title. V. Mathematical surveys and monographs ; no. 105. QA322 .A39 2003 bib'.73--dc22 2003057948. Copying and reprinting. Individual readers of this publication, and nonprofit libraries acting for them, are permitted to make fair use of the material, such as to copy a chapter for use in teaching or research. Permission is granted to quote brief passages from this publication in reviews, provided the customary acknowledgment of the source is given. Republication, systematic copying, or multiple reproduction of any material in this publication is permitted only under license from the American Mathematical Society.

On the Ekeland Variational Principle with Applications and Detours

Lectures on The Ekeland Variational Principle with Applications and Detours. By D. G. De Figueiredo. Tata Institute of Fundamental Research, Bombay, 1989. Author: D. G. De Figueiredo, Departmento de Mathematica, Universidade de Brasilia, 70.910 – Brasilia-DF, BRAZIL. © Tata Institute of Fundamental Research, 1989. ISBN 3-540-51179-2, Springer-Verlag, Berlin, Heidelberg, New York, Tokyo. ISBN 0-387-51179-2, Springer-Verlag, New York, Heidelberg, Berlin, Tokyo. No part of this book may be reproduced in any form by print, microfilm or any other means without written permission from the Tata Institute of Fundamental Research, Colaba, Bombay 400 005. Printed by INSDOC Regional Centre, Indian Institute of Science Campus, Bangalore 560012 and published by H. Goetze, Springer-Verlag, Heidelberg, West Germany. Printed in India. Preface: Since its appearance in 1972 the variational principle of Ekeland has found many applications in different fields in Analysis. The best references for those are by Ekeland himself: his survey article [23] and his book with J.-P. Aubin [2]. Not all material presented here appears in those places. Some are scattered around and there lies my motivation in writing these notes. Since they are intended for students I included a lot of related material. Those are the detours. A chapter on Nemytskii mappings may sound strange. However I believe it is useful, since their properties so often used are seldom proved. We always say to the students: go and look in Krasnoselskii or Vainberg! I think some of the proofs presented here are more straightforward. There are two chapters on applications to PDE.

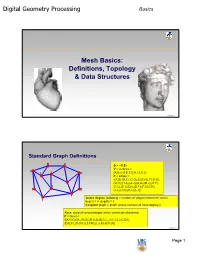

Digital Geometry Processing Mesh Basics

Digital Geometry Processing Basics. Mesh Basics: Definitions, Topology & Data Structures. © Alla Sheffer. Standard Graph Definitions: G = <V,E>. V = vertices = {A,B,C,D,E,F,G,H,I,J,K,L}. E = edges = {(A,B),(B,C),(C,D),(D,E),(E,F),(F,G),(G,H),(H,A),(A,J),(A,G),(B,J),(K,F),(C,L),(C,I),(D,I),(D,F),(F,I),(G,K),(J,L),(J,K),(K,L),(L,I)}. Vertex degree (valence) = number of edges incident on a vertex; deg(J) = 4, deg(H) = 2. k-regular graph = graph whose vertices all have degree k. Face: cycle of vertices/edges which cannot be shortened. F = faces = {(A,H,G),(A,J,K,G),(B,A,J),(B,C,L,J),(C,I,L),(C,D,I),(D,E,F),(D,I,F),(L,I,F,K),(L,J,K),(K,F,G)}. Connectivity: A graph is connected if there is a path of edges connecting every two vertices. A graph is k-connected if between every two vertices there are k edge-disjoint paths. A graph G' = <V',E'> is a subgraph of graph G = <V,E> if V' is a subset of V and E' is the subset of E incident on V'. Connected component of a graph: maximal connected subgraph. A subset V' of V is an independent set in G if the subgraph it induces does not contain any edges of E. Graph Embedding: A graph is embedded in $\mathbb{R}^d$ if each vertex is assigned a position in $\mathbb{R}^d$. [Figures: embedding in $\mathbb{R}^2$; embedding in $\mathbb{R}^3$.] Planar Graphs: A planar graph is a graph whose vertices and edges can be embedded in $\mathbb{R}^2$ such that its edges do not intersect. Every planar graph can be drawn as a straight-line plane graph. [Figures: planar graph; plane graph; straight-line plane graph.]
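The degree values quoted in the excerpt above can be recomputed from its edge list. The following is a small Python sketch for illustration only; the edge list is copied from the excerpt, while the variable names `edges` and `degree` are choices made here rather than anything from the slides.

```python
from collections import Counter

# Edge list E as given in the excerpt (undirected edges).
edges = [
    ("A", "B"), ("B", "C"), ("C", "D"), ("D", "E"), ("E", "F"), ("F", "G"),
    ("G", "H"), ("H", "A"), ("A", "J"), ("A", "G"), ("B", "J"), ("K", "F"),
    ("C", "L"), ("C", "I"), ("D", "I"), ("D", "F"), ("F", "I"), ("G", "K"),
    ("J", "L"), ("J", "K"), ("K", "L"), ("L", "I"),
]

# Vertex degree (valence) = number of edges incident on the vertex.
degree = Counter()
for u, v in edges:
    degree[u] += 1
    degree[v] += 1

print(degree["J"], degree["H"])  # prints: 4 2

# A graph is k-regular if every vertex has degree k; this one is not,
# since its vertices do not all share the same degree.
is_regular = len(set(degree.values())) == 1
print(is_regular)  # prints: False
```

Each edge contributes to the degree of both of its endpoints, so the degrees always sum to twice the number of edges.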