View of the Related Literature

Recommended publications

Oral History T-0001 Interviewees: Chick Finney and Martin Luther Mackay Interviewer: Irene Cortinovis Jazzman Project April 6, 1971

ORAL HISTORY T-0001 INTERVIEWEES: CHICK FINNEY AND MARTIN LUTHER MACKAY INTERVIEWER: IRENE CORTINOVIS JAZZMAN PROJECT APRIL 6, 1971 This transcript is a part of the Oral History Collection (S0829), available at The State Historical Society of Missouri. If you would like more information, please contact us at [email protected]. Today is April 6, 1971 and this is Irene Cortinovis of the Archives of the University of Missouri. I have with me today Mr. Chick Finney and Mr. Martin L. MacKay who have agreed to make a tape recording with me for our Oral History Section. They are musicians from St. Louis of long standing and we are going to talk today about their early lives and also about their experiences on the music scene in St. Louis. CORTINOVIS: First, I'll ask you a few questions, gentlemen. Did you ever play on any of the Mississippi riverboats, the J.S, The St. Paul or the President? FINNEY: I never did play on any of those name boats, any of those that you just named, Mrs. Cortinovis, but I was a member of the St. Louis Crackerjacks and we played on kind of an unknown boat that went down the river to Cincinnati and parts of Kentucky. But I just can't think of the name of the boat, because it was a small boat. Do you need the name of the boat? CORTINOVIS: No. I don't need the name of the boat. FINNEY: Mrs. Cortinovis, this is Martin McKay who is a name drummer who played with all the big bands from Count Basie to Duke Ellington. -

Senior Focus: Mary Ann Erickson

Vol. 21 No. 3 • Fall 2016 • www.tclifelong.org • Senior Circle • www.tompkinscounty.org/cofa

A circle is a group of people in which everyone has a front seat.

Senior Focus: Mary Ann Erickson
Gerontologist and the New Board President of Lifelong

Mary Ann Erickson became the new President of the Board of Lifelong at the end of May. Recently we had the opportunity to ask her a few questions about her connection to Lifelong and her interest in gerontology.

This is Mary Ann’s sixth year on the board having been recommended by colleagues from Ithaca College’s Gerontology Institute. Mary Ann is an Associate Professor and Chair, Gerontology Faculty, Planned Studies Program.

Photo caption: Mary Ann Erickson, Lifelong Board President

[...] was a pivotal time for me, as I was involved with this research at every stage, from developing the interview instrument to entering and using the data. I did some of the interviews for this study, some of which I remember quite clearly more than 10 years later. I used the Retirement and Well-Being Study data for my dissertation.”

[...] same time, we are still challenged by many of the same issues that aging humans have always faced like declines in health and losses in our social network. I think it’s sometimes hard for us Baby Boomers to walk the line between overconfident optimism about aging and unwarranted pessimism. We need to prepare for both the possibilities and the challenges.”

Any advice for the sandwich generation? “Most of my peers are in this group, caring both for children and for aging -

Fresno-Commission-Fo

“If you are working on a problem you can solve in your lifetime, you’re thinking too small.” Wes Jackson I have been blessed to spend time with some of our nation’s most prominent civil rights leaders, truly extraordinary people. When I listen to them tell their stories about how hard they fought to combat the issues of their day, how long it took them, and the fact that they never stopped fighting, it grounds me. Those extraordinary people worked at what they knew they would never finish in their lifetimes. I have come to understand that the historical arc of this country always bends toward progress. It doesn’t come without a fight, and it doesn’t come in a single lifetime. It is the job of each generation of leaders to run the race with truth, honor, and integrity, then hand the baton to the next generation to continue the fight. That is what our foremothers and forefathers did. It is what we must do, for we are at that moment in history yet again. We have been passed the baton, and our job is to stretch this work as far as we can and run as hard as we can, to then hand it off to the next generation because we can see their outstretched hands. This project has been deeply emotional for me. It brought me back to my youthful days in Los Angeles when I would be constantly harassed, handcuffed, searched at gunpoint - all illegal, but I didn’t know that then. I can still feel the terror I felt every time I saw a police cruiser. -

Most Requested Songs of 2019

Top 200 Most Requested Songs
Based on millions of requests made through the DJ Intelligence music request system at weddings & parties in 2019

RANK | ARTIST | SONG
1 | Whitney Houston | I Wanna Dance With Somebody (Who Loves Me)
2 | Mark Ronson Feat. Bruno Mars | Uptown Funk
3 | Journey | Don't Stop Believin'
4 | Cupid | Cupid Shuffle
5 | Neil Diamond | Sweet Caroline (Good Times Never Seemed So Good)
6 | Walk The Moon | Shut Up And Dance
7 | Justin Timberlake | Can't Stop The Feeling!
8 | Earth, Wind & Fire | September
9 | Usher Feat. Ludacris & Lil' Jon | Yeah
10 | V.I.C. | Wobble
11 | DJ Casper | Cha Cha Slide
12 | Outkast | Hey Ya!
13 | Black Eyed Peas | I Gotta Feeling
14 | Bon Jovi | Livin' On A Prayer
15 | ABBA | Dancing Queen
16 | Bruno Mars | 24k Magic
17 | Garth Brooks | Friends In Low Places
18 | Spice Girls | Wannabe
19 | AC/DC | You Shook Me All Night Long
20 | Kenny Loggins | Footloose
21 | Backstreet Boys | Everybody (Backstreet's Back)
22 | Isley Brothers | Shout
23 | B-52's | Love Shack
24 | Van Morrison | Brown Eyed Girl
25 | Bruno Mars | Marry You
26 | Miley Cyrus | Party In The U.S.A.
27 | Taylor Swift | Shake It Off
28 | Luis Fonsi & Daddy Yankee Feat. Justin Bieber | Despacito
29 | Montell Jordan | This Is How We Do It
30 | Beatles | Twist And Shout
31 | Ed Sheeran | Thinking Out Loud
32 | Sir Mix-A-Lot | Baby Got Back
33 | Maroon 5 | Sugar
34 | Ed Sheeran | Perfect
35 | Def Leppard | Pour Some Sugar On Me
36 | Killers | Mr. Brightside
37 | Pharrell Williams | Happy
38 | Toto | Africa
39 | Chris Stapleton | Tennessee Whiskey
40 | Flo Rida Feat. -

Top 200 Most Requested Songs

Top 200 Most Requested Songs
Based on millions of requests made through the DJ Intelligence® music request system at weddings & parties in 2013

RANK | ARTIST | SONG
1 | Journey | Don't Stop Believin'
2 | Cupid | Cupid Shuffle
3 | Black Eyed Peas | I Gotta Feeling
4 | Lmfao | Sexy And I Know It
5 | Bon Jovi | Livin' On A Prayer
6 | AC/DC | You Shook Me All Night Long
7 | Morrison, Van | Brown Eyed Girl
8 | Psy | Gangnam Style
9 | DJ Casper | Cha Cha Slide
10 | Diamond, Neil | Sweet Caroline (Good Times Never Seemed So Good)
11 | B-52's | Love Shack
12 | Beyonce | Single Ladies (Put A Ring On It)
13 | Maroon 5 Feat. Christina Aguilera | Moves Like Jagger
14 | Jepsen, Carly Rae | Call Me Maybe
15 | V.I.C. | Wobble
16 | Def Leppard | Pour Some Sugar On Me
17 | Beatles | Twist And Shout
18 | Usher Feat. Ludacris & Lil' Jon | Yeah
19 | Macklemore & Ryan Lewis Feat. Wanz | Thrift Shop
20 | Jackson, Michael | Billie Jean
21 | Rihanna Feat. Calvin Harris | We Found Love
22 | Lmfao Feat. Lauren Bennett And Goon Rock | Party Rock Anthem
23 | Pink | Raise Your Glass
24 | Outkast | Hey Ya!
25 | Isley Brothers | Shout
26 | Sir Mix-A-Lot | Baby Got Back
27 | Lynyrd Skynyrd | Sweet Home Alabama
28 | Mars, Bruno | Marry You
29 | Timberlake, Justin | Sexyback
30 | Brooks, Garth | Friends In Low Places
31 | Lumineers | Ho Hey
32 | Lady Gaga Feat. Colby O'donis | Just Dance
33 | Sinatra, Frank | The Way You Look Tonight
34 | Sister Sledge | We Are Family
35 | Clapton, Eric | Wonderful Tonight
36 | Temptations | My Girl
37 | Loggins, Kenny | Footloose
38 | Train | Marry Me
39 | Kool & The Gang | Celebration
40 | Daft Punk Feat. -

06.22.21 Approved Minutes

MINUTES OF THE BUCKSKIN FIRE DEPARTMENT DISTRICT FIRE BOARD
06/22/21 Open Meeting: Minutes to be approved at open public meeting on Tuesday, 07/20/2021.

A Budget Workshop and Public Hearing of the Buckskin Fire Board convened on June 22, 2021, at 6:00 pm in the classroom of the Buckskin Fire District, located at 8500 Riverside Drive, Parker, AZ 85344. The following matters were discussed at the Open Meeting.

Public Hearing
1. Call to Order: Budget Hearing opened at 6:00 pm
2. Roll Call. Members Present: Chairman Jeff Daniel, Don Rountree, John Mihelich, Jeff McCormack, and Wayne Posey. Staff Present: Chief Maloney, Barbara Cole, Captain Weatherford, Captain Chambers, Lt. Fernandes, FF Maxwell, and FF Anderson. Public Present: Amanda Weatherford
3. (Discussion): Open to the Public for Comments. No Comments from the Public
4. Adjourn: Budget Hearing Closed: Public Budget Hearing closed at 6:02 pm

Agenda Open Meeting
5. Convene into Open Meeting: 6:03 pm
6. Call to the Public. Consideration and discussion of comments from the public. Those wishing to address the Buckskin Fire District Board need not request permission in advance. Pursuant to A.R.S. § 38-431.01(G), the Fire District Board is not permitted to discuss or take any action on items raised in the call to the public that are not specifically identified on the Agenda. However, individual Board members may be permitted to respond to criticism directed to them. Otherwise, the Board may direct that staff review the matter or ask that the matter be placed on a future agenda. -

August-September

Portland Peace Choir Newsletter Volume 2 No. 8, August/September 2017

PEACEMEAL
A Volunteer Effort of the Portland Peace Choir

MISSION STATEMENT: The Portland Peace Choir strives to exemplify the principles of peace, equality, justice, stewardship of the Earth, unity and cooperation. We sing music from diverse cultures and traditions to inspire peace in ourselves, our families, our communities, and the world.

In This Issue
• Meet Our New Director!
• Where In the World is the PPC?
• Ready ... Set ... Sing!
• Beat the Heat

Meet Our New Director

It’s been an interesting summer for the Portland Peace Choir, with one change after another looming and forcing us out of our comfort zone. We’re starting our new season off with a new director, in a new location, and we will be singing music chosen in a new way.

We were all a bit uneasy about the prospect of finding a new director, but the search, interviewing, auditioning and selection processes all went surprisingly smoothly and we were able to find a very talented and capable Director to lead us into the future with energy, optimism and enthusiasm.

Kristin Gordon George is a musician, teacher, and the Artistic Director of Resonate Choral Arts, an intergenerational women’s choir. She also served as Music Director of the Metropolitan Community Church of Portland for nine years, where she directed the choir and band and arranged musical works for large group ensembles. She’s been writing music since childhood, and her repertoire now includes contemporary songs, musical theater, cantatas and award-winning choral music. -

Most Requested Songs of 2009

Top 200 Most Requested Songs
Based on nearly 2 million requests made at weddings & parties through the DJ Intelligence music request system in 2009

RANK | ARTIST | SONG
1 | AC/DC | You Shook Me All Night Long
2 | Journey | Don't Stop Believin'
3 | Lady Gaga Feat. Colby O'donis | Just Dance
4 | Bon Jovi | Livin' On A Prayer
5 | Def Leppard | Pour Some Sugar On Me
6 | Morrison, Van | Brown Eyed Girl
7 | Beyonce | Single Ladies (Put A Ring On It)
8 | Timberlake, Justin | Sexyback
9 | B-52's | Love Shack
10 | Lynyrd Skynyrd | Sweet Home Alabama
11 | ABBA | Dancing Queen
12 | Diamond, Neil | Sweet Caroline (Good Times Never Seemed So Good)
13 | Black Eyed Peas | Boom Boom Pow
14 | Rihanna | Don't Stop The Music
15 | Jackson, Michael | Billie Jean
16 | Outkast | Hey Ya!
17 | Sister Sledge | We Are Family
18 | Sir Mix-A-Lot | Baby Got Back
19 | Kool & The Gang | Celebration
20 | Cupid | Cupid Shuffle
21 | Clapton, Eric | Wonderful Tonight
22 | Black Eyed Peas | I Gotta Feeling
23 | Lady Gaga | Poker Face
24 | Beatles | Twist And Shout
25 | James, Etta | At Last
26 | Black Eyed Peas | Let's Get It Started
27 | Usher Feat. Ludacris & Lil' Jon | Yeah
28 | Jackson, Michael | Thriller
29 | DJ Casper | Cha Cha Slide
30 | Mraz, Jason | I'm Yours
31 | Commodores | Brick House
32 | Brooks, Garth | Friends In Low Places
33 | Temptations | My Girl
34 | Foundations | Build Me Up Buttercup
35 | Vanilla Ice | Ice Ice Baby
36 | Bee Gees | Stayin' Alive
37 | Sinatra, Frank | The Way You Look Tonight
38 | Village People | Y.M.C.A. -

Michael Jackson MTV Awards 1995 Full

Rafael Ahmed (1 year ago): 1080p HD Remastered. Best version on YouTube

ramy ninio (1 year ago): Michael Jackson is really great!!!

Angelo Oducado (1 year ago): When you make videos like this I recommend you leave the aspect ratio to 4:3 or maybe add effects to the black bars

Yehya Jackson (11 months ago): Hello My Friend. Please Upload To Your Youtube Channel Michael Jackson's This Is It Full Movie DVD Version And Dangerous World Tour (Live In Bucharest 1992).

Moonwalker Holly (11 months ago): Hello and thanks for sharing this :). I put it into my favs. Every other video that I've found of this performance is really blurry. The quality on this is really good. I love it.

Jarvis Hardy (11 months ago): Thank cause everyother 15 mn version is fucked damn mike was a bad motherfucker

Moonwalker Holly (11 months ago): +Jarvis Hardy I like him bad. He's my naughty boy ;)

Nathan Peneha A.K.A Thepenjoker (11 months ago): DopeSweet as

vaibhav yadav (9 months ago): I want to download michael jackson bucharest concert in HD. Please tell the source.

L. Kooll (9 months ago): buy it lol, its always better to have the original stuff like me, I have the original bucharest concert in DVD

vaibhav yadav (9 months ago): +L. Kooll yes you are right. I should buy one.

L. Kooll (9 months ago): +vaibhav yadav :) do it, you will see you wont regret it. -

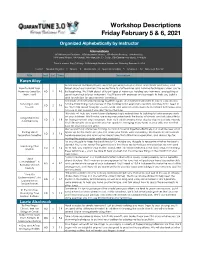

Workshop Descriptions Friday February 5 & 6, 2021

Workshop Descriptions Friday February 5 & 6, 2021
Organized Alphabetically by Instructor

Abbreviations: HD=Hammered Dulcimer, MD=Mountain Dulcimer, BP=Bowed Psaltery, AH=Autoharp, PW=Penny Whistle, UK=Ukulele, MA=Mandolin, G=Guitar, CB=Clawhammer Banjo, FI=Fiddle
Time is shown: Day (F=Friday, S=Saturday)-Session Number, ex: Saturday Session 4 = S-4
Levels: 1 = Absolute Beginner, 2 = Novice, 3 = Intermediate, 4 = Upper Intermediate, 5 = Advanced, All = Non-Level Specific

Title | Inst. | Lvl. | Time | Description

Karen Alley

How to Hold Your Hammers (and Use Them, Too!) | HD | 1 | F-3 | As hammered dulcimer players, we often get wrapped up in notes and chords and tunes, and forget about our hammers! The easiest time to start learning solid hammer technique is when you’re first beginning. We’ll talk about different types of hammers, holding your hammers, and getting a good sound out of your instrument. You’ll leave with exercises and concepts to help you build a solid foundation for your hammer technique.

Surviving a Jam Session | HD | 2 | F-4 | Someday soon we will be playing together again, and everyone will want to join in! Jam sessions can be intimidating if you’re new to the dulcimer (and even if you’re not!), but they don’t need to be. We’ll talk about how jam sessions work, and work on simple melody and chord techniques you can use to join in even if you don’t know the tune.

This class will help you move beyond playing single melody lines to building full solo arrangements on your dulcimer. -

Skain's Domain - Episode 13

Skain's Domain - Episode 13 Skain's Domain Episode 13 - June 22, 2020 0:00:00 Adam Meeks: Alright. Hey guys, thanks for joining us again this week. You're tuning into Skain's Domain with Wynton Marsalis, and I'm Adam Meeks your technical host for the evening. Tonight, we're joined by members of the Jazz at Lincoln Center Orchestra including Chris Crenshaw, Kenny Rampton, Elliot Mason, Camille Thurman, Ted Nash, Paul Nedzela, Walter Blanding, Dan Nimmer, and Carlos Henriquez. If you have a question you'd like to ask, we will have you use the raise hand feature. To do this, click participants on the bottom of your Zoom window, and then in the participants tab, press the raise hand button. Please also just make sure that your full name appears as your user name so we can call you when it's your turn. And before we do that, I'd like to hand the mic over to Mr. Wynton Marsalis to kick things off. Go ahead, Wynton. 0:00:47 Wynton Marsalis: Alright. Thank you very much, Adam. Welcome everybody back again this week for Skain's Domain where we discuss issues significant and trivial, and the more trivial they are, the more we... More impassioned we are about it. Today, I have the blessing of having the members of the Jazz at Lincoln Center Orchestra. I missed them so much. And we spent so many times, so many years playing each other's music on bandstands. For our younger members, some started as students, for our older members, we've been here and I just wanna.. -

Tech Times 10/2019

Springfield Technical Community College
Advising Period for Priority Registration begins Monday, October 28
Fall Semester 2019, Volume • 14 – Issue • 3

Tie-’Dive’ Into The New Semester: STCC’s Opening Picnic Fall 2019
by Jesse Mongeon

Everyone loves summer cookouts. On September 11th, STCC hosted their annual Opening Day Picnic, an outdoor cookout event meant to help familiarize new and returning students with services and activities available on campus as well as provide a great social atmosphere for students to interact and meet new people. Each year, the picnic is packed with great food, music, and information tables.

The menu for the event this year was hotdogs and hamburgers, italian pasta salad, a bag of chips, a selection of cookies and brown-

This year, the picnic offered for students to tie-dye a free t-shirt. It was first come first serve, and the line got long very quickly. Many students were very excited about this, and loved the idea of personalizing a shirt for themselves

[...] Health and Wellness center, the Dental Hygiene program, Disability Services, the Student Success Center, the Career Development Center, the STCC Foundation Scholarships, the GLBTA Club, and the Armory Square Child

For example, the YWCA had a banner that read “YWCA is on a mission” and at their table, they asked students to fill out the prompt “#STCCWeCan”. Some of the responses were, “#STCCWeCan support each other,” and “#STCCWeCan be someone’s sunshine.” Some of the information tables gave out free items to students as advertisement. The Office of Student Activities gave out student planners, Big E pamphlets, and fanny packs. The library table was giving out information about free ebooks and book themed button pins.