Martingale Approach to Stochastic Differential Games of Control

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Frecker's Saddlery

Frecker’s Saddlery Frecker’s 13654 N 115 E Idaho Falls, Idaho 83401 addlery (208) 538-7393 S [email protected] Kent and Dave’s Price List SADDLES FULL TOOLED Base Price 3850.00 5X 2100.00 Padded Seat 350.00 7X 3800.00 Swelled Forks 100.00 9X 5000.00 Crupper Ring 30.00 Dyed Background add 40% to tooling cost Breeching Rings 20.00 Rawhide Braided Hobble Ring 60.00 PARTIAL TOOLED Leather Braided Hobble Ring 50.00 3 Panel 600.00 5 Panel 950.00 7 Panel 1600.00 STIRRUPS Galvanized Plain 75.00 PARTIAL TOOLED/BASKET Heavy Monel Plain 175.00 3 Panel 500.00 Heavy Brass Plain 185.00 5 Panel 700.00 Leather Lined add 55.00 7 Panel 800.00 Heel Blocks add 15.00 Plain Half Cap add 75.00 FULL BASKET STAMP Stamped Half Cap add 95.00 #7 Stamp 1850.00 Tooled Half Cap add 165.00 #12 Stamp 1200.00 Bulldog Tapadero Plain 290.00 Bulldog Tapadero Stamped 350.00 PARTIAL BASKET STAMP Bulldog Tapadero Tooled 550.00 3 Panel #7 550.00 Parade Tapadero Plain 450.00 5 Panel #7 700.00 Parade Tapadero Stamped (outside) 500.00 7 Panel #7 950.00 Parade Tapadero Tooled (outside) 950.00 3 Panel #12 300.00 Eagle Beak Tapaderos Tooled (outside) 1300.00 5 Panel #12 350.00 7 Panel #12 550.00 BREAST COLLARS FULL BASKET/TOOLED Brannaman Martingale Plain 125.00 #7 Basket/Floral Pattern 2300.00 Brannaman Martingale Stamped 155.00 #12 Basket/Floral 1500.00 Brannaman Martingale Basket/Tooled 195.00 Brannaman Martingale Tooled 325.00 BORDER STAMPS 3 Piece Martingale Plain 135.00 Bead 150.00 3 Piece Martingale Stamped 160.00 ½” Wide 250.00 3 Piece Martingale Basket/Tooled 265.00 -

Public Auction

PUBLIC AUCTION Mary Sellon Estate • Location & Auction Site: 9424 Leversee Road • Janesville, Iowa 50647 Sale on July 10th, 2021 • Starts at 9:00 AM Preview All Day on July 9th, 2021 or by appointment. SELLING WITH 2 AUCTION RINGS ALL DAY , SO BRING A FRIEND! LUNCH STAND ON GROUNDS! Mary was an avid collector and antique dealer her entire adult life. She always said she collected the There are collections of toys, banks, bookends, inkwells, doorstops, many items of furniture that were odd and unusual. We started with old horse equipment when nobody else wanted it and branched out used to display other items as well as actual old wood and glass display cases both large and small. into many other things, saddles, bits, spurs, stirrups, rosettes and just about anything that ever touched This will be one of the largest offerings of US Army horse equipment this year. Look the list over and a horse. Just about every collector of antiques will hopefully find something of interest at this sale. inspect the actual offering July 9th, and July 10th before the sale. Hope to see you there! SADDLES HORSE BITS STIRRUPS (S.P.) SPURS 1. U.S. Army Pack Saddle with both 39. Australian saddle 97. U.S. civil War- severe 117. US Calvary bits All Model 136. Professor Beery double 1 P.R. - Smaller iron 19th 1 P.R. - Side saddle S.P. 1 P.R. - Scott’s safety 1 P.R. - Unusual iron spurs 1 P.R. - Brass spurs canvas panniers good condition 40. U.S. 1904- Very good condition bit- No.3- No Lip Bar No 1909 - all stamped US size rein curb bit - iron century S.P. -

MU Guide PUBLISHED by MU EXTENSION, UNIVERSITY of MISSOURI-COLUMBIA Muextension.Missouri.Edu

Horses AGRICULTURAL MU Guide PUBLISHED BY MU EXTENSION, UNIVERSITY OF MISSOURI-COLUMBIA muextension.missouri.edu Choosing, Assembling and Using Bridles Wayne Loch, Department of Animal Sciences Bridles are used to control horses and achieve desired performance. Although horses can be worked without them or with substitutes, a bridle with one or two bits can add extra finesse. The bridle allows you to communicate and control your mount. For it to work properly, you need to select the bridle carefully according to the needs of you and your horse as well as the type of performance you expect. It must also be assembled correctly. Although there are many styles of bridles, the procedures for assembling and using them are similar. The three basic parts of a bridle All bridles have three basic parts: bit, reins and headstall (Figure 1). The bit is the primary means of communication. The reins allow you to manipulate the bit and also serve as a secondary means of communica- tion. The headstall holds the bit in place and may apply Figure 1. A bridle consists of a bit, reins and headstall. pressure to the poll. The bit is the most important part of the bridle The cheekpieces and shanks of curb and Pelham bits because it is the major tool of communication and must also fit properly. If the horse has a narrow mouth control. Choose one that is suitable for the kind of perfor- and heavy jaws, you might bend them outward slightly. mance you desire and one that is suitable for your horse. Cheekpieces must lie along the horse’s cheeks. -

2019 Saddleseat Horse Division

2019 SADDLESEAT HORSE DIVISION Contents General Rules Saddleseat Division Classes Saddleseat Equitation Scoring The Saddleseat Division is an Open Division, and NOT eligible for High Point awards. Classes Walk and Trot Pleasure Three-Gaited Show Pleasure Three-Gaited Country Pleasure Five-Gaited Show Pleasure Five-Gaited Country Pleasure Pleasure Equitation • Ground Handling OI: open to all breeds and disciplines. Rules are posted separately. All 4-H’ers riding or driving horses at 4-H events or activities are required to wear an ASTM-SEI Equestrian Helmet at all times. SS-1 GENERAL RULES All 4-H’ers riding or driving horses and/or ponies at 4-H events or activities are required to wear an ASTM-SEI Equestrian helmet at all times. Cruelty, abuse or inhumane treatment of any horse in the show ring or in the stable area will not be tolerated by the show management, and the offender will be barred from the show area for the duration of the show. Evidence of any inhumane treatment to a horse including but not limited to blood, whip marks that raise welts or abusive whipping, in or out of the show ring, shall result in disqualification of that horse and that exhibitor for the entire show and shall result in the forfeiture of all ribbons, awards and points won. SADDLESEAT DIVISION CLASSES WALK AND TROT PLEASURE - Entries must show in a flat, cutback English saddle with full bridle, pelham, or snaffle. Use of a standing martingale, bosal, mechanical hackamore, draw reins and/or tie down is prohibited. However, the use of a running or German martingale with only a single snaffle or work snaffle bridle is acceptable. -

Tory Leather LLC Equestrian Equipment Catalog Proudly Made in the USA TORY and YOU

Tory Leather LLC Equestrian Equipment Catalog Proudly Made in the USA TORY AND YOU As we continue our growth and changes with the merchandise that we manufacture, we must also make changes in order to serve you more proficiently. Following are our Terms and Policies that we ask you to read. • TERMS: Our terms are 2% 10 - Net 30 to approved dealers with accounts in good standing. This means that you can take a 2% discount from the subtotal if paid within 10 days. If you do not pay in that 10 day time, the complete balance is due in 30 days. Do not include the shipping when figuring the 2% discount. • FIRST TIME ORDERS will be shipped C.O.D., Certified Check or Credit Card unless other arrangements are made with the credit manager. • We accept MasterCard, Visa, Discover, and AMEX (AMEX pending approval). • A $10.00 SERVICE CHARGE will be added to all orders under $50.00. • There will be a $25.00 Service Charge on ALL RETURNED CHECKS. • We reserve the right to refuse shipments to accounts with a PAST DUE BALANCE of 30 days or more. • All past due accounts are subject to finance charges. • An account TURNED OVER FOR COLLECTION will be liable for all collection fees and court costs that are involved in settling the account. • Please INSPECT ALL ORDERS ON RECEIVING THEM - ANY SHORTAGES OR DAMAGES MUST BE REPORTED WITHIN 48 HOURS. • No RETURNS will be accepted unless you phone and request a return authorization. Tory will not accept any returned items that are special or custom orders unless defective. -

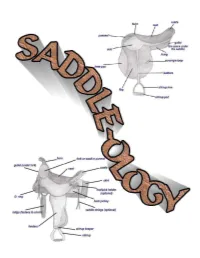

Saddleology (PDF)

This manual is intended for 4-H use and created for Maine 4-H members, leaders, extension agents and staff. COVER CREATED BY CATHY THOMAS PHOTOS OF SADDLES COURSTESY OF: www.horsesaddleshop.com & www.western-saddle-guide.com & www.libbys-tack.com & www.statelinetack.com & www.wikipedia.com & Cathy Thomas & Terry Swazey (permission given to alter photo for teaching purposes) REFERENCE LIST: Western Saddle Guide Dictionary of Equine Terms Verlane Desgrange Created by Cathy Thomas © Cathy Thomas 2008 TABLE OF CONTENTS Introduction.................................................................................4 Saddle Parts - Western..................................................................5-7 Saddle Parts - English...................................................................8-9 Fitting a saddle........................................................................10-15 Fitting the rider...........................................................................15 Other considerations.....................................................................16 Saddle Types & Functions - Western...............................................17-20 Saddle Types & Functions - English.................................................21-23 Latigo Straps...............................................................................24 Latigo Knots................................................................................25 Cinch Buckle...............................................................................26 Buying the right size -

English Equestrian Equipment List.Pdf

UNIVERSITY OF FINDLAY EQUIPMENT LIST FOR ENGLISH EQUESTRIAN STUDIES REQUIRED: 1. One leather halter with brass name plate naming student**, one black breakaway halter 2. Two black cotton leads and SEPARATE chain attachment 3. Saddle: Hunter/Jumper-close contact, Eventing-All-purpose 4. Leather and/or fleece lined double elastic girth: 48”-52” sizes are recommended (Professional’s Choice, etc.) and girth extender 5. English bridle (H/J-Brown, Eventing-black or brown) with flash attachment or separate figure 8 noseband 6. Bits-5” or 5 ½” Smooth and Slow Twist Snaffle, 5”-5 ½” Loose ring snaffle (French link or Dr. Bristol acceptable) 7. H/J-Standing and running martingale with rubber stop. (Wait to purchase until your horse is assigned, unless you already own one-as sizes may vary) 8. Eventing-Running martingale and rein stoppers (Wait to purchase until your horse is assigned, unless you already own one-as sizes may vary) 9. One white fleece saddle pad, one Mattes pad with shims, one all-purpose saddle pad, and three white baby or square pads clearly marked with your name (conservative colors only) 10. Front and Hind Boots (Eskadron, Equifit, Askan Sports Boots, Woof boots, etc) AND polo wraps (Dark colors only, black preferred) 11. Standing wraps/stable bandages in conservative colors and white pillow quilts or No Bows (stitched twice long ways) Quilt measurements: 2 at 12” and 2 at 14” 12. Clippers with blades sizes 10 and 40 (Andis T-84 or Oster Variable Speed blade combo for body clipping) (or comparable), AND an outdoor extension cord 13. -

Dressage Attire & Equipment

Dressage Attire & Equipment updated 4/1/16 ACKNOWLEDGEMENTS The USEF Licensed Officials and Education Departments would like to thank the following for their contributions to this booklet: USEF Dressage Committee USEF Dressage Department Janine Malone – Dressage Technical Delegate, Editor Lisa Gorretta – Dressage Technical Delegate, Assistant Editor Jean Kraus – Dressage Technical Delegate, Assistant Editor Copyright © 2016 Do not reproduce without permission of: United States Equestrian Federation, Inc. 4047 Iron Works Parkway Lexington, KY 40511 www.usef.org 2 Dressage Equipment Booklet Updated 4/1/16 Introduction The purpose of this pamphlet is to assist Exhibitors as well as USEF Dressage Technical Delegates, Dressage Judges and Stewards who officiate Dressage classes at any Federation licensed competition. Exhibitors and Officials must be familiar with USEF Dressage Rules DR120 and DR121 in the current USEF Rule Book, plus the accompanying photos and drawings. Illustration through photos and drawings have been used to indicate what makes a particular piece of equipment or attire legal or illegal for use at Federation licensed competitions offering Dressage classes. In no way does this booklet supersede the most current USEF Rule Book. The USEF Bylaws, General Rules, and Dressage Rules are found HERE on the USEF website. Please be advised that the USEF Dressage Department only gives advisory opinions, not binding opinions, regarding the rules since ultimately it is the Federation Hearing Committee which applies facts and circumstances to the relevant rules and determines whether or not each fact constitutes a violation of the rules; and then only after a protest or charge of rule violation is brought before them. -

Dog Scout Troop Guidebook 2011-9-19

DOG SCOUTS OF AMERICA Dog Scout Troop Guidebook forming and maintaining Active DSA Troops Date: 9/19/11 Inside: ♦ Starting a Dog Scout Troop ♦ Maintaining an Active Troop ♦ Becoming a Troop Leader, Scoutmaster, or Merit Badge Evaluator ♦ DSA Mission, Vision, and Values ♦ Policies and Procedures ♦ Fundraising, Managing Money, and Nonprofit Status and Rules DSA Troop Guidebook 9/19/2011 Page 2 Www.dogscouts.org Dog Scout Troop Guidebook Table of Contents Summary of Most Recent Updates ..... Page 4 Definitions ....... Page 8 Section 1: Understanding the DSA mission Mission, Values and Vision statement ...... Page 12 Dog Scout Laws ...... Page 13 The “Dog Scout Way” ...... Page 15 Section 2: Your role in DSA and how to start a troop Your role as a troop leader ...... Page 18 Goals of a troop ..... Page 19 Helping dogs with issues .. Page 20 Troop event rules ...... Page 24 Troop policies ..... Page 26 Junior troops ... Page 32 Optional non-profit status ..... Page 34 Getting started ... Page 36 Avoiding burnout ... Page 42 Frequently Asked Questions ... Page 46 Section 3: Understanding DSA programs What DSA offers and provides to its members and leaders .. Page 56 Educational Programs .. Page 58 Troop recognition program .. Page 62 Section 4: Understanding the merit badge system Is my dog a Dog Scout? .. Page 64 How to earn badges . Page 64 Dog Scouts of America www.dogscouts.org DSA Troop Guidebook 9/19/2011 Dog Scouts of America Page 3 The Purpose of This Guidebook The purpose of this guidebook is to introduce you to Dog Scouts of America, its mission, values, vision and its programs and help you understand how troops fit into the goals of DSA. -

Stillwater County 4-H & FFA Fair 2020-2021

Stillwater County 4-H & FFA Fair 2020-2021 Front Cover Designed By: Courtlyn Robertus – Park City Wranglers 4-H Club NOTES 2 Table of Contents Department 12 – Engineering & Technology .......................... 28 Fair Schedule .............................................................................. 4 Aerospace ............................................................................ 28 4-H General Rules .................................................................... 5-6 Bicycle .................................................................................. 28 Grievance Policy .......................................................................... 7 Electricity ............................................................................. 29 Live/Silent Auction ...................................................................... 8 Robotics ............................................................................... 29 General Livestock Rules ............................................................ 8-9 Small Engines ....................................................................... 29 Market Sale Policy..................................................................... 11 Welding ...........................................................................29-30 Carcass Policy ............................................................................ 12 Woodworking ...................................................................... 30 Department 1 – Market Animals .............................................. -

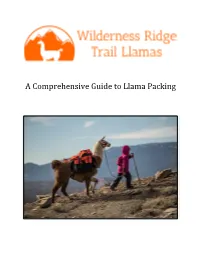

A Comprehensive Guide to Llama Packing

A Comprehensive Guide to Llama Packing 2 Contents Forward...................................................................................................................................................... 5 1. About the Llamas ................................................................................................................................... 7 2. Llama Handling ...................................................................................................................................... 9 3. Llama LNT ........................................................................................................................................... 13 4. Prior to Trailhead ................................................................................................................................. 14 5. At the Trailhead ................................................................................................................................... 19 6. On the Trail .......................................................................................................................................... 27 7. At Camp ............................................................................................................................................... 33 8. Llama 1st Aid ....................................................................................................................................... 37 9. Troubleshooting .................................................................................................................................. -

The Abcs of Living with Dogs

The ABCs of Living with Dogs Care and training resources About Best Friends Animal Society Best Friends Animal Society is a leading national animal welfare orga- nization dedicated to ending the killing of dogs and cats in America’s shelters. In addition to running lifesaving programs in partnership with more than 2,500 animal welfare groups across the country, Best Friends has regional centers in New York City, Los Angeles, Atlanta and Salt Lake City, and operates the nation’s largest no-kill sanctuary for companion animals. Founded in 1984, Best Friends is a pioneer in the no-kill movement and has helped reduce the number of animals killed in shelters na- tionwide from 17 million per year to about 800,000. That means there are still nearly 2,200 dogs and cats killed every day in shelters, just because they don’t have safe places to call home. We are determined to bring the country to no-kill by the year 2025. Working collabora- tively with shelters, rescue groups, other organizations and you, we will end the killing and Save Them All. For more information, visit bestfriends.org. About Sherry Woodard Sherry Woodard, Best Friends’ resident animal behavior consultant, wrote many of the resources in this manual. As an expert in animal train- ing, behavior and care, she develops resources, provides consulting ser- vices, leads workshops and speaks nationwide to promote animal welfare. Before joining Best Friends, Sherry, a nationally certified professional dog trainer, worked with dogs, cats, horses, and a variety of other ani- mals. She also worked in veterinary clinics, where she gained valuable experience in companion-animal medical care and dentistry.