Cybersecurity and Domestic Surveillance or Why Trusted Computing Shouldn’t Be: Agency, Trust and the Future of Computing

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

"Putting Trust Into Computing: Where Does It Fit?"

"Putting Trust Into Computing: Where Does it Fit?" Monday, February 14, 2005, 9:00 a.m. – 12:00 p.m. Copyright © 2005 Trusted Computing Group. Other names and brands are properties of their respective owners.
Agenda
09:00 a.m. Introduction: Jim Ward, IBM, TCG Board President / Chair
09:05 a.m. Trusted Network Connect Overview: Thomas Hardjono, VeriSign
09:45 a.m. Open Source Solutions: Dr. Dave Safford, IBM
10:25 a.m. Writing and Using Trusted Applications: Ralph Engers, Utimaco Safeware AG; George Kastrinakis, Wave Systems; William Whyte, NTRU Cryptosystems, Inc.
11:15 a.m. Customer Case Studies: Stacy Cannady, IBM; Manny Novoa, HP
11:50 a.m. Q&A: Mark Schiller, HP; Jim Ward, IBM; Brian Berger, Wave Systems
Introduction: Jim Ward is a Senior Technical Staff Member and security architect within the IBM software group division. Ward has been a core contributor in the security standards space and currently serves as the President and Board Chair of the Trusted Computing Group.
TCG Mission: Develop and promote open, vendor-neutral, industry standard specifications for trusted computing building blocks and software interfaces across multiple platforms.
TCG Organization. Board of Directors: Jim Ward, IBM, President and Chairman; Geoffrey Strongin, AMD; Mark Schiller, HP; David Riss, Intel; Steve Heil, Microsoft; Tom Tahan, Sun; Nicholas Szeto, Sony; Bob Thibadeau, Seagate; Thomas Hardjono, VeriSign. Marketing Workgroup: Brian Berger, Wave Systems. Technical Committee: Graeme Proudler, HP. Advisory Council: Invited Participants. Administration: VTM, Inc. -

Open Source TPM Support

Open Source TPM support
Open source application and support software for the TPM is available for several operating systems, such as Linux and Android, and in different programming languages. It supports the following scenarios:
- embedded systems
- servers
- mobile communication and portable devices (e.g. tablet computer or smartphone)
Open source implementations can also be ported to other platforms and processors and may act as a starting point for the development of new applications. Some open source projects in the following list are supported by Infineon, while other packages are developed separately by independent parties. The list of open source software using Trusted Computing and/or TPM software makes no claim to be complete and covers only a limited number of projects:
1. Linux TPM Driver ( http://www.kernel.org ): Linux device driver for Trusted Platform Modules (TPM) in the standard (vanilla) kernel.
2. I2C driver for TPM: The driver is available on Linux kernel.org ( https://lkml.org/lkml/2011/7/22/137 ).
3. Trusted GRUB ( http://sourceforge.net/projects/trustedgrub ): Trusted GRUB extends the GRUB bootloader for Linux platforms with TPM support. This makes it possible to provide a secure bootstrap architecture; the code is generally useful for initializing a Trusted Platform Module and executing integrity measurements based on Trusted Computing.
4. UBoot based on TPM with I2C ( http://git.chromium.org/gitweb/?p=chromiumos/third_party/u-boot.git;a=tree;f=drivers/tpm/slb9635_i2c;hb=chromeos-v2011.03 ): UBoot using a TPM over the I2C interface.
5. The TROUSERS project ( http://sourceforge.net/projects/trousers ): An open-source TCG Software Stack implementation created and released by IBM. -

Trusted System Concepts

Trusted System Concepts. Marshall D. Abrams, Ph.D., and Michael V. Joyce, The MITRE Corporation, 7525 Colshire Drive, McLean, VA 22102, 703-883-6938, [email protected] This is the first of three related papers exploring how contemporary computer architecture affects security. Key issues in this changing environment, such as distributed systems and the need to support multiple access control policies, necessitate a generalization of the Trusted Computing Base paradigm. This paper develops a conceptual framework with which to address the implications of the growing reliance on Policy-Enforcing Applications in distributed environments. 1 INTRODUCTION A significant evolution in computer software architecture has taken place over the last quarter century. The centralized time-sharing systems of the 1970s and early 1980s are rapidly being superseded by the distributed architectures of the 1990s. As an integral part of the architecture evolution, the composition of the system access control policy has changed. Instead of a single policy, the system access control policy is more likely to be a composite of several constituent policies implemented in applications that create objects and enforce their own unique access control policies. This paper first provides a survey that explains how the security community developed the accepted concepts and criteria that addressed the time-shared architectures. Second, the paper focuses on the changes currently ongoing, providing insight into the driving forces and probable directions. This paper presents contemporary thinking; it summarizes and generalizes vertical and horizontal extensions to the Trusted Computing Base (TCB) concept. While the paper attempts to be logical and rigorous, formalism is avoided. This paper was first published in Computers & Security, Vol. -

Multiple Stakeholder Model Revision 3.40

REFERENCE. Multiple Stakeholder Model. Published. Family “2.0”, Level 00, Revision 3.40, 2 May 2016. Contact: [email protected] TCG PUBLISHED. Copyright © TCG 2012-2016. Copyright ©2012-2016 Trusted Computing Group, Incorporated. Disclaimer THIS REFERENCE DOCUMENT IS PROVIDED "AS IS" WITH NO WARRANTIES WHATSOEVER, INCLUDING ANY WARRANTY OF MERCHANTABILITY, NONINFRINGEMENT, FITNESS FOR ANY PARTICULAR PURPOSE, OR ANY WARRANTY OTHERWISE ARISING OUT OF ANY PROPOSAL, WHITE PAPER, OR SAMPLE. Without limitation, TCG disclaims all liability, including liability for infringement of any proprietary rights, relating to use of information in this reference document, and TCG disclaims all liability for cost of procurement of substitute goods or services, lost profits, loss of use, loss of data or any incidental, consequential, direct, indirect, or special damages, whether under contract, tort, warranty or otherwise, arising in any way out of use or reliance upon this document or any information herein. No license, express or implied, by estoppel or otherwise, to any TCG or TCG member intellectual property rights is granted herein. Contact the Trusted Computing Group at www.trustedcomputinggroup.org for information on TCG licensing through membership agreements. Any marks and brands contained herein are the property of their respective owners. Acknowledgments The TCG wishes to thank all those who contributed to this reference document: Ronald Aigner (Microsoft), Bo Bjerrum (Intel Corporation), Alec Brusilovsky (InterDigital Communications, LLC), David Challener (Johns Hopkins University, Applied Physics Lab), Michael Chan (Samsung Semiconductor Inc.) -

Trusted Computing

Grazer Linux Tag 2006: Linux Powered Trusted Computing. DI Martin Pirker <[email protected]>, DI Thomas Winkler <[email protected]>. GLT 2006 - Trusted Live Linux - Martin Pirker + Thomas Winkler, IAIK TU Graz.
Overview ● Introduction and motivation ● Trusted Computing: history ● TPM chips: hardware, function and applications ● Chain of Trust ● Cryptographic keys and key management ● Identities ● Attestation ● Virtualization ● State of affairs under Linux: drivers, middleware, applications
Introduction ● the relevant media are full of reports about security problems ● new initiatives to solve these problems appear constantly ● one approach: Trusted Computing from the Trusted Computing Group (TCG) ● offers a way to determine which software is running on a computer ● is intended to increase trust in computers
Motivation (1/2) ● several million computers with TPMs shipped to date – who in the audience already has a TPM in their computer? – who knows what it can do and uses it?
Motivation (2/2) ● many unclear and partly one-sided media reports ● the next Microsoft operating system is supposed to support Trusted Computing ● what prospects are there in the open source area? ● Goals of the talk: – which technologies are behind Trusted Computing? – understanding the interactions and consequences – application(s) and benefits in the open source area
Trusted Computing - Organization ● originally: TCPA – Trusted Computing Platform Alliance ● now: TCG – Trusted Computing Group ● leadership positions (Promoters): – AMD, HP, IBM, Infineon, Intel, Lenovo, Microsoft, Sun ● graded levels of membership, down to academic observers (e.g. -

TRUSTED COMPUTING GROUP (TCG) TIMELINE February 2011

TRUSTED COMPUTING GROUP (TCG) TIMELINE, February 2011
2003: Trusted Computing Group is announced with membership of 14 companies, including Promoters and board members AMD, Hewlett-Packard, IBM, Intel Corporation, Microsoft, Sony Corporation and Sun Microsystems, Inc. TCG structure and vision are created to enable extension of trusted computing beyond the PC into the enterprise. TCG adopts existing specifications for the Trusted Platform Module (TPM) security chip for clients and publishes them as an open industry specification.
2004: TCG announces the Trusted Platform Module (TPM) specification 1.2. Manufacturers begin shipping a variety of enterprise desktop and notebook PCs equipped with TPMs and software to enable applications including data and file encryption, secure email, single sign-on, storage of certificates and passwords and other applications. Members begin participating in work groups to address security in mobile devices, storage, servers, peripherals and infrastructure requirements. TCG also announces the Trusted Network Connect (TNC) subgroup to work on an open specification for network access control and endpoint integrity and the addition of almost 30 new members to focus on the effort. Group starts to define requirements and use cases for an open specification. Membership expands to 98 companies by end of the year, with participation broadening beyond semiconductor and client companies to software developers, networking and storage companies. The group initiates an advisory council of leading IT, privacy, finance and security industry experts. The group also begins a liaison program with other industry standards groups and begins a mentoring program for those researchers and academic institutions doing research on trusted computing and related topics.
2005: The group expands its board of directors. -

Trusted Computer System Evaluation Criteria

DoD 5200.28-STD Supersedes CSC-STD-001-83, dtd 15 Aug 83 Library No. S225,711 DEPARTMENT OF DEFENSE STANDARD DEPARTMENT OF DEFENSE TRUSTED COMPUTER SYSTEM EVALUATION CRITERIA DECEMBER 1985 December 26, 1985 FOREWORD This publication, DoD 5200.28-STD, "Department of Defense Trusted Computer System Evaluation Criteria," is issued under the authority of and in accordance with DoD Directive 5200.28, "Security Requirements for Automatic Data Processing (ADP) Systems," and in furtherance of responsibilities assigned by DoD Directive 5215.1, "Computer Security Evaluation Center." Its purpose is to provide technical hardware/firmware/software security criteria and associated technical evaluation methodologies in support of the overall ADP system security policy, evaluation and approval/accreditation responsibilities promulgated by DoD Directive 5200.28. The provisions of this document apply to the Office of the Secretary of Defense (ASD), the Military Departments, the Organization of the Joint Chiefs of Staff, the Unified and Specified Commands, the Defense Agencies and activities administratively supported by OSD (hereafter called "DoD Components"). This publication is effective immediately and is mandatory for use by all DoD Components in carrying out ADP system technical security evaluation activities applicable to the processing and storage of classified and other sensitive DoD information and applications as set forth herein. Recommendations for revisions to this publication are encouraged and will be reviewed biannually by the National Computer Security Center through a formal review process. Address all proposals for revision through appropriate channels to: National Computer Security Center, Attention: Chief, Computer Security Standards. DoD Components may obtain copies of this publication through their own publications channels. -

Working Document on Trusted Computing Platforms and in Particular on the Work Done by the Trusted Computing Group (TCG Group)

ARTICLE 29 Data Protection Working Party 11816/03/EN WP 86 Working Document on Trusted Computing Platforms and in particular on the work done by the Trusted Computing Group (TCG group) Adopted on 23 January 2004 This Working Party was set up under Article 29 of Directive 95/46/EC. It is an independent European advisory body on data protection and privacy. Its tasks are described in Article 30 of Directive 95/46/EC and Article 14 of Directive 97/66/EC. The secretariat is provided by Directorate E (Services, Copyright, Industrial Property and Data Protection) of the European Commission, Internal Market Directorate-General, B-1049 Brussels, Belgium, Office No C100-6/136. Website: www.europa.eu.int/comm/privacy THE WORKING PARTY ON THE PROTECTION OF INDIVIDUALS WITH REGARD TO THE PROCESSING OF PERSONAL DATA set up by Directive 95/46/EC of the European Parliament and of the Council of 24 October 1995, having regard to Articles 29 and 30 paragraphs 1 (a) and 3 of that Directive, having regard to its Rules of Procedure and in particular to articles 12 and 14 thereof, has adopted the present Working Document: Background and future perspectives for trusted computing platforms The concept of trusted computing platforms originates from the computer industry’s observation that the current personal computer model is not conducive to guaranteeing security, as demonstrated by virus attacks, the possibility of spying on data being input, pirating of software and works of art, etc. -

TCG Guidance for Secure Update of Software and Firmware on Embedded Systems

REFERENCE. TCG Guidance for Secure Update of Software and Firmware on Embedded Systems. Version 1.0, Revision 72, February 10, 2020. Contact: [email protected] Published. © TCG 2020. DISCLAIMERS, NOTICES, AND LICENSE TERMS THIS DOCUMENT IS PROVIDED "AS IS" WITH NO WARRANTIES WHATSOEVER, INCLUDING ANY WARRANTY OF MERCHANTABILITY, NONINFRINGEMENT, FITNESS FOR ANY PARTICULAR PURPOSE, OR ANY WARRANTY OTHERWISE ARISING OUT OF ANY PROPOSAL, DOCUMENT OR SAMPLE. Without limitation, TCG disclaims all liability, including liability for infringement of any proprietary rights, relating to use of information in this document and to the implementation of this document, and TCG disclaims all liability for cost of procurement of substitute goods or services, lost profits, loss of use, loss of data or any incidental, consequential, direct, indirect, or special damages, whether under contract, tort, warranty or otherwise, arising in any way out of use or reliance upon this document or any information herein. This document is copyrighted by Trusted Computing Group (TCG), and no license, express or implied, is granted herein other than as follows: You may not copy or reproduce the document or distribute it to others without written permission from TCG, except that you may freely do so for the purposes of (a) examining or implementing TCG documents or (b) developing, testing, or promoting information technology standards and best practices, so long as you distribute the document with these disclaimers, notices, and license terms. -

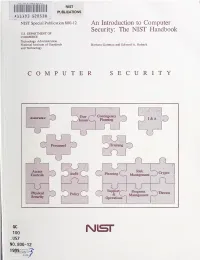

An Introduction to Computer Security: The NIST Handbook

NIST Special Publication 800-12 (1995). An Introduction to Computer Security: The NIST Handbook. Barbara Guttman and Edward A. Roback. U.S. DEPARTMENT OF COMMERCE, Technology Administration, National Institute of Standards and Technology. [Cover illustration titled COMPUTER SECURITY, with topic labels including Contingency Planning, Assurance, User Issues, I&A, Personnel, Training, Access Controls, Risk Management, Audit, Planning, Crypto, Support/Operations, Program Management, Physical Security, Threats and Policy] The National Institute of Standards and Technology was established in 1988 by Congress to "assist industry in the development of technology ... needed to improve product quality, to modernize manufacturing processes, to ensure product reliability ... and to facilitate rapid commercialization ... of products based on new scientific discoveries." NIST, originally founded as the National Bureau of Standards in 1901, works to strengthen U.S. industry's competitiveness; advance science and engineering; and improve public health, safety, and the environment. One of the agency's basic functions is to develop, maintain, and retain custody of the national standards of measurement, and provide the means and methods for comparing standards used in science, engineering, manufacturing, commerce, industry, and education with the standards adopted or recognized by the Federal Government. As an agency of the U.S. Commerce Department's Technology Administration, NIST conducts basic and applied research in the physical sciences and engineering, and develops measurement techniques, test methods, standards, and related services. The Institute does generic and precompetitive work on new and advanced technologies. NIST's research facilities are located at Gaithersburg, MD 20899, and at Boulder, CO 80303. -

Trusted Computing & Digital Rights Management – Theory & Effects

School of Mathematics and Systems Engineering. Reports from MSI - Rapporter från MSI. Trusted Computing & Digital Rights Management – Theory & Effects. Daniel Gustafsson, Tomas Stewén. Sep 2004, MSI Report 04086. Växjö University, SE-351 95 VÄXJÖ. ISSN 1650-2647, ISRN VXU/MSI/DA/E/--04086/--SE. Preface First we want to thank Pernilla Rönn, Lena Johansson and Jonas Stewén, who made it possible for us to carry out this master's thesis at AerotechTelub. Special thanks to Lena Johansson, our supervisor at AerotechTelub, for her help with ideas and opinions. Special thanks to Jonas Stewén, employee at AerotechTelub, for his help with material and his opinions on our work. We also want to thank Ola Flygt, our supervisor, and Mathias Hedenborg, our examiner at Växjö University. Växjö, June 2004 Abstract Trusted Computing Platform Alliance (TCPA), now known as Trusted Computing Group (TCG), is a trusted computing initiative created by IBM, Microsoft, HP, Compaq, Intel and several other smaller companies. Their goal is to create a secure trusted platform with the help of new hardware and software. TCG has developed a new chip, the Trusted Platform Module (TPM), which is attached to the motherboard and is the core of this initiative. The thesis analyzes the TCG’s specifications and summarizes the different parts and functionalities implemented by this group. Microsoft is in the development stage of an operating system that can make use of TCG’s TPM and other new hardware. This operating system initiative is called NGSCB (Next Generation Secure Computing Base), formerly known as Palladium. -

Expanding Malware Defense by Securing Software Installations*

Expanding Malware Defense by Securing Software Installations* Weiqing Sun (1), R. Sekar (1), Zhenkai Liang (2) and V.N. Venkatakrishnan (3). (1) Department of Computer Science, Stony Brook University; (2) Department of Computer Science, Carnegie Mellon University; (3) Department of Computer Science, University of Illinois, Chicago. Abstract. Software installation provides an attractive entry vector for malware: since installations are performed with administrator privileges, malware can easily get the enhanced level of access needed to install backdoors, spyware, rootkits, or “bot” software, and to hide these installations from users. Previous research has been focused mainly on securing the execution phase of untrusted software, while largely ignoring the safety of installations. Even security-enhanced operating systems such as SELinux and Vista don't usually impose restrictions during software installs, expecting the system administrator to “know what she is doing.” This paper addresses this “gap in armor” by securing software installations. Our technique can support a diversity of package managers and software installers. It is based on a framework that simplifies the development and enforcement of policies that govern safety of installations. We present a simple policy that can be used to prevent untrusted software from modifying any of the files used by benign software packages, thus blocking the most common mechanism used by malware to ensure that it is run automatically after each system reboot. While the scope of our technique is limited to the installation phase, it can be easily combined with approaches for secure execution, e.g., by ensuring that all future runs of an untrusted package will take place within an administrator-specified sandbox.