50 Ways to Leak Your Data: an Exploration of Apps' Circumvention of the Android Permissions System

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Protecting Sensitive and Personal Information from Ransomware-Caused Data Breaches

Protecting Sensitive and Personal Information from Ransomware-Caused Data Breaches

OVERVIEW

Over the past several years, the Cybersecurity and Infrastructure Security Agency (CISA) and our partners have responded to a significant number of ransomware incidents, including recent attacks against a U.S. pipeline company and a U.S. software company, which affected managed service providers (MSPs) and their downstream customers.

Ransomware is malware designed to encrypt files on a device, rendering files and the systems that rely on them unusable. Traditionally, malicious actors demand ransom in exchange for decryption. Over time, malicious actors have adjusted their ransomware tactics to be more destructive and impactful. Malicious actors increasingly exfiltrate data and then threaten to sell or leak it, including sensitive or personal information, if the ransom is not paid. These data breaches can cause financial loss to the victim organization and erode customer trust.

All organizations are at risk of falling victim to a ransomware incident and are responsible for protecting sensitive and personal data stored on their systems. This fact sheet provides information for all government and private sector organizations, including critical infrastructure organizations, on preventing and responding to ransomware-caused data breaches. CISA encourages organizations to adopt a heightened state of awareness and implement the recommendations below.

Ransomware is a serious and increasing threat to all government and private sector organizations, including critical infrastructure organizations. In response, the U.S. government launched StopRansomware.gov, a centralized, whole-of-government webpage providing ransomware resources, guidance, and alerts.

PREVENTING RANSOMWARE ATTACKS

1. Maintain offline, encrypted backups of data and regularly test your backups.

About the Sony Hack

All About the Sony Hack

Sony Pictures Entertainment was hacked in late November by a group called the Guardians of Peace. The hackers stole a significant amount of data off of Sony’s servers, including employee conversations through email and other documents, executive salaries, and copies of unreleased Sony movies. Sony’s network was down for a few days as administrators worked to assess the damage. According to the FBI, the hackers are believed to have ties with the North Korean government, which has denied any involvement with the hack and has even offered to help the United States discover the identities of the hackers. Various analysts and security experts have stated that it is unlikely that the North Korean government is involved, claiming that the government likely doesn’t have the infrastructure to succeed in a hack of this magnitude.

The hackers quickly turned their focus to an upcoming Sony film, “The Interview,” a comedy about two Americans who assassinate North Korean leader Kim Jong-un. The hackers contacted reporters on Dec. 16, threatening to commit acts of terrorism towards people going to see the movie, which was scheduled to be released on Dec. 25. Despite the lack of credible evidence that attacks would take place, Sony decided to postpone the movie’s release. On Dec. 19, President Obama went on record calling the movie’s cancelation a mistake.

January/February 2015. All About the Sony Hack: Learn how Sony was attacked and what the potential ramifications are. Securing Your Files in Cloud Storage: Storing files in the cloud is easy and convenient, but definitely not risk-free.

The 2014 Sony Hack and the Role of International Law

The 2014 Sony Hack and the Role of International Law

Clare Sullivan*

INTRODUCTION

2014 has been dubbed “the year of the hack” because of the number of hacks reported by the U.S. federal government and major U.S. corporations in businesses ranging from retail to banking and communications. According to one report there were 1,541 incidents resulting in the breach of 1,023,108,267 records, a 78 percent increase in the number of personal data records compromised compared to 2013.[1] However, the 2014 hack of Sony Pictures Entertainment Inc. (Sony) was unique in nature and in the way it was orchestrated and its effects. Based in Culver City, California, Sony is the movie making and entertainment unit of Sony Corporation of America,[2] the U.S. arm of Japanese electronics company Sony Corporation.[3] The hack, discovered in November 2014, did not follow the usual pattern of hackers attempting illicit activities against a business. It did not specifically target credit card and banking information, nor did the hackers appear to have the usual motive of personal financial gain. The nature of the wrong and the harm inflicted was more wide ranging and their motivation was apparently ideological. Identifying the source and nature of the wrong and harm is crucial for the allocation of legal consequences. Analysis of the wrong and the harm shows that the 2014 Sony hack[4] was more than a breach of privacy and a criminal act. If, as the United States maintains, the Democratic People’s Republic of Korea (hereinafter North Korea) was behind the Sony hack, the incident is governed by international law.

Attack on Sony 2014 Sammy Lui

Attack on Sony 2014
Sammy Lui

Index
• Overview
• Timeline
• Tools
• Wiper Malware
• Implications
• Need for physical security
• Employees - Accomplices?
• Dangers of Cyberterrorism
• Danger to Other Companies
• Damage and Repercussions
• Dangers of Malware
• Defense
• Reparations
• Aftermath
• Similar Attacks
• Sony Attack 2011
• Target Attack
• NotPetya
• Sources

Overview
• Attack led by the Guardians of Peace hacker group
• Stole huge amounts of data from Sony’s network and leaked it online on Wikileaks
• Data leaks spanned a few weeks
• Threatened Sony with a terrorist attack if it released The Interview

Timeline
• 11/24/14 - Employees find terabytes of data stolen from computers and threat messages
• 11/26/14 - Hackers post 5 Sony movies to file sharing networks
• 12/1/14 - Hackers leak emails and password protected files
• 12/3/14 - Hackers leak files with plaintext credentials and internal and external account credentials
• 12/5/14 - Hackers release invitation along with financial data from Sony
• 12/07/14 - Hackers threaten several employees to sign statement disassociating themselves from Sony
• 12/08/14 - Hackers threaten Sony to not release The Interview
• 12/16/14 - Hackers leak personal emails from employees. Last day of data leaks.
• 12/25/14 - Sony releases The Interview to select movie theaters and online
• 12/26/14 - No further messages from the hackers

Tools
• Targeted attack
• Inside attack
• Wikileaks to leak data
• The hackers used a Wiper malware to infiltrate and steal data from Sony employee

Account Protections a Google Perspective

Account Protections a Google Perspective
Elie Bursztein, Google, @elie, with the help of many Googlers. Updated March 2021. Security and Privacy Group. Slides available here: https://elie.net/account

4 in 10 US Internet users report having their online information compromised. Source: the United States of P@ssw0rd$, Harris / Google poll.

How do attackers compromise accounts?

Main sources of compromised accounts: data breach, phishing, keyloggers.

The black market is fueling the account compromise ecosystem. Accounts and hacking tools are readily available on the black market.

Volume of credentials stolen in 2016 (a lower bound): data breach 4.3B+, phishing 12M+, keyloggers 1M+. Source: Data Breaches, Phishing, or Malware? Understanding the Risks of Stolen Credentials, CCS’17.

[Figure: data breach, phishing, and keyloggers compared by stolen credentials volume and targeted attack risk.]

Stolen credential origin takeaways:
• The black market fuels account compromise
• Password reuse is the largest source of compromise
• Phishing and keyloggers pose a significant risk

How can we prevent account compromise?

Defense in depth leveraging many competing technologies.

Increasing security comes at the expense of additional friction including lock-out risk, monetary cost, and user education.

The Ultimate Guide to Vendor Data Breach Response

The Ultimate Guide to Vendor Data Breach Response

This paper was authored by Corvus’s VP of Smart Breach Response Lauren Winchester in collaboration with Dominic Paluzzi, Co-Chair of the Data Privacy and Cybersecurity practice at the law firm McDonald Hopkins. Commercial insurance reimagined.

Cyber insurance brokers help their clients plan for what happens when they experience a data breach or ransomware incident. Something often overlooked is how that plan may change when the breach is at a vendor and the investigation is outside of their control. Most organizations are in the midst of a decade-old shift to deeper integration with managed service providers, software-as-a-service tools, and other cloud-based software solutions. Having worked with thousands of brokers and policyholders, we’ve observed an unspoken assumption that these vendors, with their highly advanced products, are also paragons of cybersecurity. That’s a misplaced assumption for three reasons.

1. The bigger and more complex an organization, generally the harder it is to keep safe. Vendors may have excellent security teams and practices, but face a sisyphean task given their scale.
2. The adversarial question. These companies may be at greater risk because criminals see them as a rich target: if they can infiltrate the vendor, they can potentially extend their attack to hundreds or thousands of customer organizations.
3. Some providers have an air of invincibility about their exposure, likely for the same reason their customers instinctively trust them. A survey by Coveware shows a large disconnect between what MSPs believe to be the cost and consequences of an attack, and what they are in reality.

Transparent Data Protection for Smartphones and Tablets

Transparent data protection for smartphones and tablets.

Dell Data Protection | Mobile Edition

Today employees, partners and vendors alike work any time, anywhere, using any device to download and share files. Personally owned smartphones and tablets, like desktops and laptops, have become a standard work tool. Accordingly, most organizations are embracing the bring your own device movement, recognizing it helps to both reduce equipment costs and increase productivity.

But without proper encryption and password enforcement, data accessed on mobile devices, whether from a corporate server or a public cloud, is unprotected. If the device is lost or stolen, or if data is accessed through an open wireless hotspot, it could be hijacked, putting companies in jeopardy of a security breach and compliance violations. IT teams are struggling to strike a balance between protecting data and impeding worker productivity by restricting data access.

Dell Data Protection | Mobile Edition helps to put IT back in control of data security by enabling you to protect data accessed on smartphones and tablets running iOS or Android operating systems.

Secure iOS Devices

Dell Data Protection | Mobile Edition enables administrators to enforce policies at the user level and commands at the device level. Device management commands are sent to managed iPhone and iPad devices via the Apple Push Notification Service (APNS). Policies, restrictions and commands are implemented in iOS as a configuration profile, which is deployed over-the-air.

Secure Android Devices

Managed through the Microsoft Exchange ActiveSync (EAS) protocol, Android mobile device protection lets you set and enforce policies at the user level and commands at the device level. Device management commands are sent and enforced on Android devices via Exchange ActiveSync. Allow, block and remote wipe commands are deployed over-the-air.

iOS-Only Policies

Grace period before device locks; Force iTunes password; Reset passcode; Allow untrusted https certs; Remote lock; Allow iCloud backup.

The Department of Justice Versus Apple Inc. -- the Great Encryption Debate Between Privacy and National Security

Catholic University Journal of Law and Technology, Volume 27, Issue 2, Spring 2019, Article 3 (2019)

The Department of Justice Versus Apple Inc. -- The Great Encryption Debate Between Privacy and National Security

Julia P. Eckart

Follow this and additional works at: https://scholarship.law.edu/jlt

Part of the Communications Law Commons, Constitutional Law Commons, First Amendment Commons, Intellectual Property Law Commons, Internet Law Commons, Other Law Commons, Privacy Law Commons, and the Science and Technology Law Commons

Recommended Citation: Julia P. Eckart, The Department of Justice Versus Apple Inc. -- The Great Encryption Debate Between Privacy and National Security, 27 Cath. U. J. L. & Tech 1 (2019). Available at: https://scholarship.law.edu/jlt/vol27/iss2/3

This Article is brought to you for free and open access by CUA Law Scholarship Repository. It has been accepted for inclusion in Catholic University Journal of Law and Technology by an authorized editor of CUA Law Scholarship Repository. For more information, please contact [email protected].

THE DEPARTMENT OF JUSTICE VERSUS APPLE INC. -- THE GREAT ENCRYPTION DEBATE BETWEEN PRIVACY AND NATIONAL SECURITY

Julia P. Eckart*

I. THE FACTS UNDERLYING THE DOJ–APPLE DISPUTE
  A. Timeline of the Parties’ Court-Filed Documents
  B. Issues Presented in the DOJ–Apple Litigation
II. APPLE’S iOS9.0 SECURITY GUIDE
  A. Some of Apple’s Encryption and Non-Encryption Security Features
  B. Other Hardware and Software System Security Features
III. DOES THIS CASE PERTAIN TO A SINGLE IPHONE OR ALL IPHONES?
  A. DOJ’s Position: It is About One, Single iPhone
  B.

SONY Pictures Entertainment - Hack of the Century 1

SONY Pictures Entertainment - Hack of the Century

Submitted to the 2016 Arthur Page Society Case Study Competition

Table of Contents
Executive Summary
Hacking Overview
Guardians of Peace
The Interview
Sony Inc.
Sony Background
Sony Pictures Entertainment
Sony Pictures Entertainment Market
Market and Industry Analysis

Microsoft Data Breach: Risk, Regulation and Managing a Crisis

MICROSOFT DATA BREACH: RISK, REGULATION AND MANAGING A CRISIS

The emergency directive (ED) issued on Tuesday 2nd March by the US Cybersecurity and Infrastructure Security Agency (CISA), indicating that Microsoft's Exchange Server application had suffered a significant data breach, serves to highlight the importance of continued and vigilant cyber security systems within businesses.

In tandem with the ED, CISA also issued a Twitter alert urging "ALL organizations across ALL sectors to follow guidance to address the widespread domestic and international exploitation" of four vulnerabilities in Microsoft's Exchange email application. However, the fix has come too late, as reports have suggested that 30,000 Microsoft Exchange servers have already been compromised, although the precise number is unconfirmed.

The initial cyber-attack is believed to have been carried out by a Chinese State sponsored hacking group called Hafnium who initially exploited a zero-day vulnerability. The attacks included three steps. First, it would gain access to an Exchange Server either with stolen passwords or by using the previously undiscovered vulnerabilities to disguise itself as someone who should have access. Second, it would create what's called a web shell to control the compromised server remotely. Third, it would use that remote access, run from the U.S.-based private servers, to steal data from an organization's network that relied on the Exchange Server software.

FIVE STEPS TO TAKE NOW
1. Security measures must not only be 'adequate' but also checked and verified
2. Ensure vendors and partners maintain high data protection standards
3. Ensure Board and senior management engagement on Cyber risk
4. Conduct employee security awareness training
5. Ensure a robust crisis response plan - and test it

Symantec Intelligence Report MAY 2013 P

SYMANTEC INTELLIGENCE REPORT MAY 2013

Symantec Corporation

CONTENTS
Executive Summary
BIG NUMBERS
TIMELINE
  May Security Timeline
DATA BREACHES
  Data Breaches
  Timeline of Data Breaches, Jan 2012 – May 2013
  Top Causes of Data Breaches in 2013
  Top Ten Sectors by Number of Data Breaches
MOBILE
  Mobile
  Cumulative Mobile Android Malware
  Mobile Vulnerabilities Publicly Disclosed
SPAM
  Spam
  Global Spam Volume Per Day
  Top 10 Sources of Spam
  Average Spam Message Size*
  Spam by Category
  Spam URL Distribution Based on Top Level Domain Name*
  Top 5 Activity for Spam Destination by Geography
  Top 5 Activity for Spam Destination by Company Size
  Top 5 Activity for Spam Destination by Industry
MALWARE
  Malware
  Proportion of Email Traffic in Which Virus Was Detected
  Top 5 Activity for Malware Destination by Geographic Location
  Top 5 Activity for Malware Destination by Industry
  Top 5 Activity for Malware Destination by Company Size
  Top 10 Email Virus Sources
  Top 10 Most Frequently Blocked Malware
  Policy Based Filtering
Next Month
About Symantec
More Information

Executive Summary

Welcome to the May Symantec Intelligence report. This report includes many of the statistics that we have published on a monthly basis over the last few years, along with updates to material previously published in the annual Internet Security Threat Report. In it we will look at the threat landscape, digging deeper into the trends that appear over time.

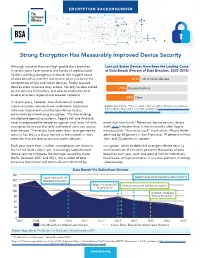

Strong Encryption Has Measurably Improved Device Security

ENCRYPTION BACKGROUNDER

Strong Encryption Has Measurably Improved Device Security

Although some of the most high-profile data breaches in recent years have come at the hands of sophisticated hackers wielding dangerous malware, the biggest cause of data breaches over the last several years has been the compromise of lost and stolen devices. Poorly secured devices allow attackers easy access, not only to data stored on the devices themselves, but also to credentials that enable attackers to penetrate broader networks.

In recent years, however, manufacturers of mobile communications devices have undertaken substantial measures to prevent unauthorized device access, particularly by introducing encryption. The two leading smartphone operating systems, Apple’s iOS and Android, have incorporated file-based encryption and, later, full-disk encryption to ensure that only authorized users can access their devices. The results have been clear: stronger device security has led to a sharp decline in the number of data breaches that are due to lost and stolen devices.

Each year, more than 1 million smartphones are stolen in the United States alone; yet, increasingly sophisticated device security mitigates the damage caused by these thefts.

[Chart: Lost and Stolen Devices Have Been the Leading Cause of Data Breach (Percent of Data Breaches, 2005–2015). Lost or stolen devices: 41%; Malware/hacking: 25%; Other: 34%. Source: Trend Micro, “Follow the Data: Dissecting Data Breaches and Debunking the Myths” (September 22, 2015), available at https://www.trendmicro.com/vinfo/us/security/news/cyber-attacks/follow-the-data.]

... more than two-thirds.[1] Moreover, device security deters theft: data indicates that, in the six months after Apple introduced its “Activation Lock” mechanism, iPhone thefts declined by 38 percent in San Francisco, 19 percent in New York, and 24 percent in London.[2]

Encryption, which enables the strongest device security mechanisms on the market, prevents thousands of data breaches each year, each one posing risks to individuals,