Apple 1013 (Part 4 of 4) U.S

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

George R. Lewycky 30 Regent St, Apt

George R. Lewycky 30 Regent St, Apt. # 709, Jersey City, NJ 07302 [email protected] (646) 252 8882 http://georgenet.net/resume SUMMARY Over three decades of IT experience in various industries and lines of business with assorted technologies, databases, languages and operating systems. COMPUTER EXPERIENCE AND SKILLS Databases: Oracle, SQL Server, ADABAS (relational), dBase III, Access, Filemaker Pro 5 Oracle: Oracle Financials 11.5.7, Utilities, PL/SQL, SQL*Plus, SQL*Loader, TOAD SQL Server: T-SQL, SQL Server 2008 R2/2010/2012, Microsoft SQL Server Management Studio, SourceSafe, SSRS Languages : SQL, VB.NET, COBOL, JCL, SAS/SPSS (Statistics), CICS, ADA/SQL Operating Systems : IBM MVS & z/OS, Windows, UNIX (SCO & Sun), Mac, DOS (PC's), X-Windows, PICK and Primos Mainframe : JCL, MVS Utilities (DFSORT), COBOL, VSAM NOTE: Supplemental PDF’s available for Oracle, SQL Server, Mainframe & ADABAS at http://georgenet.net/resume DATA PROCESSING SKILLS • Data Analysis; ETL Methodology (Extract, Transform and Load); Interfaces, Data Migration and Cleansing • Packaged (Oracle eBusiness, Real World) and Custom/Internal Applications • Developing algorithms, techniques to re-engineer improve the procedure & processing flow • User interaction on all levels including documentation and training • Experience in Multi-Platform environments; Adaptable to new languages, software packages and industries EMPLOYMENT State Government Agency, NY, NY November 1997 to Present Senior Computer Programmer/Analyst Internet Technologies: February 2010 to present Involved in a major team effort replacing a customized Filemaker Pro 5 database/application, used as a customer service tool into a custom intranet and internet application under Visual Studio 2008 and ASP.NET 3.5 with SQL Server 2008 R2 backend in order to expedite customer claims in a diverse customized and complex customer claim processing system within various departments. -

Operating-System Examples

OPERATING SYSTEM CONCEPTS Sixth Edition ABRAHAM SILBERSCHATZ Bell Laboratories PETER BAER GALVIN Corporate Technologies, Inc. GREG GAGNE Westminster College JOHN WILEY & SONS, INC, New York / Chichester / Weinheim / Brisbane / Singapore / Toronto ACQUISITIONS EDITOR Paul Crockett SENIOR MARKETING MANAGER Katherine Hepburn SENIOR PRODUCTION EDITOR Ken Santor COVER DESIGNER Madelyn Lesure COVER ART Susan E. Cyr SENIOR ILLUSTRATION COORDINATOR Anna Melhorn This book was set in Palatino by Abraham Silberschatz and printed and bound by Courier-Westford. The cover was printed by Phoenix Color Corporation. This book is printed on acid-free paper. The paper in this book was manufactured by a mill whose forest management programs include sustained yield harvesting of its timberlands. Sustained yield harvesting principles ensure that the numbers of trees cut each year does not exceed the amount of new growth. Copyright © 2002 John Wiley & Sons, Inc. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (508) 750-8400, fax (508) 750-4470. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 605 Third Avenue, New York, NY 10158-0012, (212) 850-6011, fax (212) 850- ISBN 0-471-41743-2 Printed in the United States of America PREFACE Operating systems are an essential part of any computer system. -

Ingeniería De Software

Bachelor's Degree (Licenciatura) in Software Engineering RVOE No.: 20210877 Language: Spanish Mode: Non-classroom (100% online) Duration: approx. 4 years RVOE agreement date: 04/08/2020 Web access: www.techtitute.com/informatica/licenciatura/licenciatura-ingenieria-software Contents 01 Introduction 02 Curriculum 03 Objectives and competencies 04 Why our program? 05 Excellence Pack 06 Career opportunities 07 Methodology 08 Entry requirements and admission process 09 Degree awarded 01 Introduction In a hyperconnected world, it seems almost impossible to imagine life without software that facilitates communications, science, and even everyday tasks. As these technologies advance, the market needs new professionals who can effectively meet the high demand for creating, developing, and maintaining new software. This university degree gives students the ability to enter a job market in high demand, with knowledge that lets them work efficiently across the entire program life cycle, from conception to project implementation. A unique opportunity to understand the mathematical, scientific, and technological foundations that allow the maximum optimization of ICT and software development through the management of human and computing resources to meet the needs of the economic sectors. This -

Oklahoma Economic and Job Growth

Oil and Natural Gas Stimulate Oklahoma Economic and Job Growth Oil and natural gas are driving the U.S. economy through a major energy boom and that boom is rippling through the economy of Oklahoma, supporting business activity across the state. This finding grows out of a new American Petroleum Institute survey of domestic oil and natural gas vendors,1 which offers a glimpse into the job and business creation engine that is the current oil and natural gas industry. The survey shows that at least 2,513 businesses, spread across all five of Oklahoma’s congressional districts, are part of the larger oil and natural gas supply chain. The survey’s snapshot of state-by-state activity reinforces the impressive level of industry success throughout the country that is documented in a recent PriceWaterhouseCoopers study conducted for the American Petroleum Institute.2 The study found that the oil and natural gas industry in Oklahoma supports some 364,300 jobs, which is 16.8 percent of the state’s total employment. The amount of Oklahoma labor income supported by the oil and natural gas industry comes to $23.3 billion annually. […] income, is in terms of salary.3 While the average annual salary in Oklahoma across all industries and sectors is $42,733, the average salary in the oil and gas industry (excluding gas stations) is very significantly higher: $93,992 annually. Overall the industry supports $39 billion of the Oklahoma economy. That’s 23.1 percent of the state’s total economic activity. Oklahoma ranks 5th in oil and 4th in natural gas production.4 That makes it one of the -

Test Results

PORTABILITY OF LARGE SAS(R) CODE ACROSS OS BATCH, CMS, VMS(TM) AND THE SAS(R) SYSTEM FOR PERSONAL COMPUTERS Larry McEvoy, Washington University ABSTRACT The SAS(R) Companion for the CMS Operating System (1986 Edition) states on page 1, "Generally speaking, SAS programs and their results are the same, regardless of the host operating system." The purpose of this paper is to determine the extent to which SAS Institute is generally speaking. In the Department of Psychiatry at Washington University, we have developed a large SAS program of over 1,600 statements which scores a standardized psychiatric interview and creates a data set of over 1,700 variables. Other institutions often wish to run this scoring program on machines other than an IBM mainframe. In addition to aiding such users of our own program, it is hoped that this exercise of running large SAS code under […] After our scoring program was initially developed, it was scheduled to be used in a large scale multi-centered research project. Each of the five centers in the project extensively reviewed and critiqued the code over the course of a year. After this review process was complete, the sentiment was to freeze the code. In this way other users could use the same exact program and compare their findings to those in this large scale project. Such standardization would greatly facilitate cross-study comparisons in a field plagued by inconsistent definitions and widely variant measures. It was only after SAS Institute greatly expanded the types of machines and operating systems under which SAS software could run that we had to think of how the program could be used in these different environments. -

Using C-Kermit 2Nd Edition

Chapter 1 Introduction An ever-increasing amount of communication is electronic and digital: computers talking to computers, whether directly, over the telephone system, or through networks. When you want two computers to communicate, it is usually for one of two reasons: to interact directly with another computer or to transfer data between the two computers. Kermit software gives you both these capabilities, and a lot more too. C-Kermit is a communications software program written in the C language. It is available for many different kinds of computers and operating systems, including literally hundreds of UNIX varieties (HP-UX, AIX, Solaris, IRIX, SCO, Linux, ...), Digital Equipment Corporation (Open)VMS, Microsoft Windows NT and 95, IBM OS/2, Stratus VOS, Data General AOS/VS, Microware OS-9, the Apple Macintosh, the Commodore Amiga, and the Atari ST. On all these platforms, C-Kermit's services include: • Connection establishment. This means making dialup modem connections or (in most cases) network connections, including TCP/IP Telnet or Rlogin, X.25, LAT, NETBIOS, or other types of networks. For dialup connections, C-Kermit supports a wide range of modems and an extremely sophisticated yet easy-to-use dialing directory. And C-Kermit accepts incoming connections from other computers too. • Terminal sessions. An interactive terminal connection can be made to another computer via modem or network. The Windows 95, Windows NT, and OS/2 versions of C-Kermit also emulate specific types of terminals, such as the Digital Equipment Corporation VT320, the Wyse 60, or the ANSI terminal types used for accessing BBSs or PC UNIX consoles, with lots of extras such as scrollback, key mapping, printer control, colors, and mouse shortcuts. -

The Linux Kernel

The Linux Kernel Copyright © 1996-1999 David A Rusling [email protected] REVIEW, Version 0.8-3 February 21, 2000 This book is for Linux enthusiasts who want to know how the Linux kernel works. It is not an internals manual. Rather it describes the principles and mechanisms that Linux uses; how and why the Linux kernel works the way that it does. Linux is a moving target; this book is based upon the current, stable, 2.0.33 sources as those are what most individuals and companies are now using. This book is freely distributable, you may copy and redistribute it under certain conditions. Please refer to the copyright and distribution statement. For Gill, Esther and Stephen Legal Notice UNIX is a trademark of Univel. Linux is a trademark of Linus Torvalds, and has no connection to UNIX™ or Univel. Copyright © 1996,1997,1998,1999 David A Rusling 3 Foxglove Close, Wokingham, Berkshire RG41 3NF, UK [email protected] This book ("The Linux Kernel") may be reproduced and distributed in whole or in part, without fee, subject to the following conditions: • The copyright notice above and this permission notice must be preserved complete on all complete or partial copies. • Any translation or derived work must be approved by the author in writing before distribution. • If you distribute this work in part, instructions for obtaining the complete version of this manual must be included, and a means for obtaining a complete version provided. • Small portions may be reproduced as illustrations for reviews or quotes in other works without this permission notice if proper citation is given. -

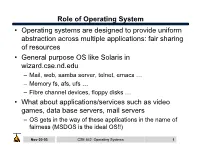

Role of Operating System

Role of Operating System • Operating systems are designed to provide uniform abstraction across multiple applications: fair sharing of resources • General purpose OS like Solaris in wizard.cse.nd.edu – Mail, web, samba server, telnet, emacs … – Memory fs, afs, ufs … – Fibre channel devices, floppy disks … • What about applications/services such as video games, database servers, mail servers? – OS gets in the way of these applications in the name of fairness (MSDOS is the ideal OS!!) Nov-20-03 CSE 542: Operating Systems What is the role of OS? • Create multiple virtual machines that each user can control all to themselves – IBM 360/370 … • Monolithic kernel: Linux – One kernel provides all services. – New paradigms are harder to implement – May not be optimal for any one application • Microkernel: Mach – Microkernel provides minimal service – Application servers provide OS functionality • Nanokernel: OS is implemented as application level libraries Case study: Multics • Goal: Develop a convenient, interactive, usable time-shared computer system that could support many users. – Bell Labs and GE in 1965 joined an effort underway at MIT (CTSS) on Multics (Multiplexed Information and Computing Service) mainframe timesharing system. • Multics was designed to be the Swiss Army knife of OSes • Multics achieved most of these goals, but it took a long time – One of the negative contributions was the development of simple yet powerful abstractions (UNIX) Multics: Designed to be the ultimate OS • “One of the overall design goals is to create a computing system which is capable of meeting almost all of the present and near-future requirements of a large computer utility. -

Mxload: Read Multics Backup Tapes on UNIX

mxload: Read Multics Backup Tapes on UNIX W. Olin Sibert Oxford Systems, Inc. 32 Oldham Road Arlington, Massachusetts U. S. A. 02174 ABSTRACT Oxford Systems’ mxload package is software for reading Multics backup_dump tapes on UNIX systems. It includes programs for reloading data, for making maps from dump tapes, and for conversion of special Multics data formats, such as archives, mailboxes, and Forum meetings. Wherever possible, Multics attributes (owner, timestamps, etc.) are converted to UNIX equivalents, and numerous options are available for controlling the reload. The product, its style of use, features, limitations, and customer experience are described. UNIX is a registered trademark of AT&T. Multics is a registered trademark of Bull HN Information Systems, Inc. AOS/VS is a registered trademark of Data General Corporation. DEC and Ultrix are registered trademarks of Digital Equipment Corporation. NP-1 is a trademark of Gould Corporation. IBM and VM/CMS are registered trademarks of International Business Machines Corporation. MS-DOS is a registered trademark of Microsoft Corporation. mxload is a trademark of Oxford Systems, Inc. Primos is a registered trademark of Prime Computers, Inc. Sun and SunOS are registered trademarks of Sun Microsystems, Inc. INTRODUCTION As Multics users integrate other systems into their Multics environments, and migrate Multics applications to those other systems, they are invariably faced with a difficult question: “How do I get my data over there, anyway?” Over the years, various ad-hoc techniques have evolved, but none are particularly satisfactory for large-scale transportation of Multics hierarchies to other systems. While individual files can be transferred fairly easily, it has been extremely difficult to transfer entire hierarchies while preserving directory structure, names, segment attributes, etc. -

CPL Is a First Aid Kit

CPL is a First Aid Kit by Dr AA Grainger University of Tasmania Computing Centre Abstract Do you realise how useful and powerful CPL programs can be? CPL is a sort of First Aid Kit for System Administrators. CPL lets you place a ‘real program’ into the PRIMOS environment, combine two or more system utilities into a new tool, or patch over nasty or treacherous holes in a program or a PRIMOS interface. A survey of more than 100 CPL programs written over several years shows both the good and bad sides of CPL in the PRIMOS environment and highlights the high rate of change in PRIMOS itself. What are some of the ‘tricks’ for creating powerful CPL programs? One of the most useful approaches is to have a more general CPL create another which is specifically tailored to do the task at hand. This paper explains a number of techniques which allow a CPL to examine the PRIMOS environment and generate tailored CPL commands based on what it finds. From our experience with CPLs, we suggest a number of ‘wish list’ improvements to the PRIMOS environment. Biography Dr Tony Grainger is the Systems Programmer at the University of Tasmania Computing Centre. He has been responsible for Prime 9955-II, 750 and 2755 machines since 1985. Prior to 1985, Dr Grainger was involved for several years with the development of the Centre’s data communications networks. He has held a number of other computing-related and research posts within the University, as well as acting as a consultant to industry. -

Primos User Manual General Information 1 General Information

User Manual Manufacturer and Contact SEH Computertechnik GmbH, Suedring 11, 33647 Bielefeld, Germany Phone: +49 (0)521 94226-29 Fax: +49 (0)521 94226-99 Support: +49 (0)521 94226-44 Email: [email protected] Web: http://www.seh.de Document Type: User Manual Title: primos Version: 2.0 Legal Notices SEH Computertechnik GmbH has endeavored to ensure that the information in this documentation is correct. If you detect any inaccuracies please inform us at the address indicated above. SEH Computertechnik GmbH will not accept any liability for any error or omission. The information in this manual is subject to change without notification. The product documentation gives you valuable information about your product. Keep the documentation for further reference during the life cycle of the product. All rights are reserved. Copying, other reproduction, or translation without the prior written consent from SEH Computertechnik GmbH is prohibited. © 2017 SEH Computertechnik GmbH iPad, iPhone, iPod, and iPod touch are trademarks of Apple Inc., registered in the U.S. and other countries. AirPrint and the AirPrint logo are trademarks of Apple Inc. All trademarks, registered trademarks, logos and product names are property of their respective owners. Contents 1 General Information 1.1 primos -

PRIMOS Commands Reference Guide

Prime Computer, Inc. FDR3108-190L PRIMOS Commands Reference Guide Revision 19.0 Sarah Lamb and Alice Landy Prime Computer, Inc. 500 Old Connecticut Path Framingham, Massachusetts 01701 COPYRIGHT INFORMATION The information in this document is subject to change without notice and should not be construed as a commitment by Prime Computer Corporation. Prime Computer Corporation assumes no responsibility for any errors that may appear in this document. The software described in this document is furnished under a license and may be used or copied only in accordance with the terms of such license. Copyright © 1982 by Prime Computer, Incorporated 500 Old Connecticut Path Framingham, Massachusetts 01701 PRIME and PRIMOS are registered trademarks of Prime Computer, Inc. PRIMENET, RINGNET, PRIME INFORMATION, and THE PROGRAMMER'S COMPANION are trademarks of Prime Computer, Inc. MEDUSA is a trademark of Cambridge Interactive Systems Limited, Cambridge, England. PRINTING HISTORY - PRIMOS Commands Reference Guide (Edition; Date; Number; Documents Rev) *First Edition; January 1979; FDR3108-101; 16.3 *Second Edition; May 1980; FDR3108-101; 17.2 *Third Edition; January 1981; FDR3108-101; 18.1 Fourth Edition; July 1982; FDR3108-190; 19.0 *These editions are out of print. HOW TO ORDER TECHNICAL DOCUMENTS U.S. Customers: Software Distribution, Prime Computer, Inc., 1 New York Ave., Framingham, MA 01701, (617) 879-2960 X2053, 2054 Prime Employees: Communications Services, MS 15-13, Prime Park, Natick, MA 01760, (617) 655-8000, X4837 Customers Outside U.S.: Contact your local Prime subsidiary or distributor. PRIME INFORMATION: Contact your PRIME INFORMATION dealer.