Bachelor's Thesis 2013

Recommended publications

Trident Development Framework

Trident Development Framework
Tom MacAdam, Jim Covill, Kathleen Svendsen
Martec Limited

Prepared By: Martec Limited, 1800 Brunswick Street, Suite 400, Halifax, Nova Scotia B3J 3J8, Canada
Contractor's Document Number: TR-14-85 (Control Number: 14.28008.1110)
Contract Project Manager: David Whitehouse, 902-425-5101
PWGSC Contract Number: W7707-145679/001/HAL
CSA: Malcolm Smith, Warship Performance, 902-426-3100 x383

The scientific or technical validity of this Contract Report is entirely the responsibility of the Contractor and the contents do not necessarily have the approval or endorsement of the Department of National Defence of Canada.

Contract Report DRDC-RDDC-2014-C328, December 2014

© Her Majesty the Queen in Right of Canada, as represented by the Minister of National Defence, 2014
© Sa Majesté la Reine (en droit du Canada), telle que représentée par le ministre de la Défense nationale, 2014

Working together for a safer world

Trident Development Framework
Martec Technical Report # TR-14-85, Control Number: 14.28008.1110, December 2014

Prepared for: DRDC Atlantic, 9 Grove Street, Dartmouth, Nova Scotia B2Y 3Z7

Martec Limited, 1888 Brunswick Street, Suite 400, Halifax, Nova Scotia B3J 3J8, Canada
tel. 902.425.5101, fax. 902.421.1923, email. [email protected], www.martec.com

REVISION CONTROL
Draft Release 0.1: 10 Nov 2014
Draft Release 0.2: 2 Dec 2014
Final Release: 10 Dec 2014

PROPRIETARY NOTICE
This report was prepared under Contract W7707-145679/001/HAL, Defence R&D Canada (DRDC) Atlantic and contains information proprietary to Martec Limited. The information contained herein may be used and/or further developed by DRDC Atlantic for their purposes only.

MSBridge: OpenSees Pushover and Earthquake Analysis of Multi-Span Bridges - User Manual

STRUCTURAL SYSTEMS RESEARCH PROJECT
Report No. SSRP–14/04

MSBRIDGE: OPENSEES PUSHOVER AND EARTHQUAKE ANALYSIS OF MULTI-SPAN BRIDGES - USER MANUAL
by AHMED ELGAMAL, JINCHI LU, KEVIN MACKIE

Final Report Submitted to the California Department of Transportation (Caltrans) under Contract No. 65A0445.

Department of Structural Engineering, University of California, San Diego, La Jolla, California 92093-0085
May 2014

University of California, San Diego
Department of Structural Engineering
Structural Systems Research Project
Report No. SSRP-14/03

MSBridge: OpenSees Pushover and Earthquake Analysis of Multi-span Bridges - User Manual
by
Ahmed Elgamal, Professor of Geotechnical Engineering
Jinchi Lu, Assistant Project Scientist
Kevin Mackie, Associate Professor of Structural Engineering at University of Central Florida

Final Report Submitted to the California Department of Transportation under Contract No. 65A0445

Department of Structural Engineering, University of California, San Diego, La Jolla, California 92093-0085
May 2014

Technical Report Documentation Page
1. Report No.:
2. Government Accession No.:
3. Recipient's Catalog No.:
4. Title and Subtitle: MSBridge: OpenSees Pushover and Earthquake Analysis of Multi-span Bridges - User Manual
5. Report Date: May 2014
6. Performing Organization Code:
7. Author(s): Ahmed Elgamal and Jinchi Lu
8. Performing Organization Report No.: UCSD / SSRP-14/04
9. Performing Organization Name and Address: Department of Structural Engineering, School of Engineering, University of California, San Diego, La Jolla, California 92093-0085
10. Work Unit No. (TRAIS):
11. Contract or Grant No.: 65A0445
12. Sponsoring Agency Name and Address: California Department of Transportation, Division of Engineering Services, 1801 30th St., MS-9-2/5i, Sacramento, California 95816
13. Type of Report and Period Covered: Final Report
14. Sponsoring Agency Code:

Pro OpenGL for C# Developers High-Performance 2D and 3D Graphics for Desktop, Web, iOS and Android

F. Ramos
Pro OpenGL for C# Developers
High-Performance 2D and 3D Graphics for Desktop, Web, iOS and Android

▶ Access the hugely popular OpenGL graphics API in C#, without the need to use C++.
▶ Build your own game engine with 2D and 3D support.
▶ Target multiple platforms with your next game.

OpenGL is widely considered the industry standard in high performance graphics for gaming, virtual reality and visualization. Unlike DirectX, OpenGL can be used on a wide range of platforms beyond Windows, from Linux to iOS and PlayStation Vita. Pro OpenGL for C# Developers shows you how to harness this powerful API from your language of choice, C#, and start creating professional-quality games and interactive graphics applications.

The book starts with an introduction to the OpenGL API and a guide to the process involved in rendering graphics, known as the graphics pipeline. You'll also meet OpenTK, the fully managed wrapper that makes it easy and painless to work with OpenGL in C# (or any other .NET language). Chapters 2 and 3 take you through the process of building your game engine, covering topics like architecture, object-oriented design and test-driven development in the context of game development. You'll begin to discover the power of OpenGL, build your first rendering demo, and learn techniques for rendering 2D in 3D, and 3D in 2D! (That is, a 2D world in a 3D game engine, and a 3D scene on a 2D display.) Further chapters dive deep into specific areas of graphic programming: shaders, particle systems, animation and path finding.

1st ed. 2014, 450 p. A product of Apress.
Printed book, softcover. ISBN 978-1-4842-0050-6
▶ 44,95 € | £35.50
▶ *48,10 € (D) | 49,45 € (A) | CHF 60.00

Protobuild Documentation Release Latest

Protobuild Documentation, Release latest
June Rhodes
Aug 24, 2017

General Information

1 User Documentation
1.1 Frequently Asked Questions
1.2 GitHub
1.3 Twitter
1.4 Contributing
1.5 Getting Started
1.6 Getting Started
1.7 Defining Application Projects
1.8 Defining Console Projects
1.9 Defining Library Projects
1.10 Defining Content Projects
1.11 Defining Include Projects
1.12 Defining External Projects
1.13 Migrating Existing C# Projects
1.14 Customizing Projects with Properties
1.15 Changing Module Properties
1.16 Including Submodules
1.17 Package Management with Protobuild
1.18 Package Management with NuGet
1.19 Creating Packages for Protobuild
1.20 Code Signing for iOS
1.21 Targeting the Web

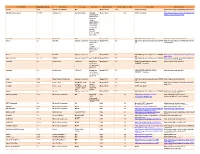

Third Party Version

| Third Party Name | Third Party Version | Manufacturer | License Type | Comments | Merge Product | Merge Product Versions | License details | Software source |
|---|---|---|---|---|---|---|---|---|
| autofac | 3.5.2 | Autofac Contributors | MIT | | Merge Cardio | 10.2 | SOUP repository | https://www.nuget.org/packages/Autofac/3.5.2 |
| Gibraltar Loupe Agent | 2.5.2.815 | eSymmetrix | Gibraltar EULA | Gibraltar Loupe Agent will be used within the Cardio Application to view events and metrics so you can resolve support issues quickly and easily. | Merge Cardio | 10.2 | SOUP repository | https://my.gibraltarsoftware.com/Support/Gibraltar_2_5_2_815_Download |
| Modernizr | 2.8.3 | Modernizr | MIT | | Merge Cardio | 6.0 | http://modernizr.com/license/ | http://modernizr.com/download/ |
| drools | 2.1 | Red Hat | Apache License 2.0 | It is a very old version of drools. Current version is 6.2 and license type is changed too. | Merge PACS | 7.0 | http://www.apache.org/licenses/LICENSE-2.0 | http://mvnrepository.com/artifact/drools/drools-spring/2.1 |
| drools | 6.3 | Red Hat | Apache License 2.0 | | Merge PACS | 7.1 | http://www.apache.org/licenses/LICENSE-2.0 | https://github.com/droolsjbpm/drools/releases/tag/6.3.0.Final |
| HornetQ | 2.2.13 v2.2..13 | JBOSS | Apache License 2.0 | Part of JBOSS | Merge PACS | 7.0 | http://www.apache.org/licenses/LICENSE-2.0 | http://mvnrepository.com/artifact/org.hornetq/hornetq-core/2.2.13.Final |
| jcalendar | 1.0 | toedter.com | LGPL v2.1 | MergePacs server uses v1, and viewer uses v1.3. | Merge PACS | 7.0 | GNU LESSER GENERAL PUBLIC LICENSE Version 2. | http://toedter.com/jcalendar/ |

Universidade Regional De Blumenau

UNIVERSIDADE REGIONAL DE BLUMENAU
CENTER FOR EXACT AND NATURAL SCIENCES
COMPUTER SCIENCE PROGRAM (BACHELOR'S DEGREE)

THREE-DIMENSIONAL ENVIRONMENT MODELING USING KINECT
DANIEL URIO MENDES
BLUMENAU 2012
2012/2-08

DANIEL URIO MENDES
THREE-DIMENSIONAL ENVIRONMENT MODELING USING KINECT
Final course project submitted to the Universidade Regional de Blumenau to obtain the credits for the course Final Course Project II of the Computer Science program (Bachelor's degree).
Prof. Aurélio Faustino Hoppe - Advisor
BLUMENAU 2012
2012/2-08

THREE-DIMENSIONAL ENVIRONMENT MODELING USING KINECT
By DANIEL URIO MENDES
Work approved to obtain the credits for the course Final Course Project II by the examining board formed by:
Chair: Prof. Aurélio Faustino Hoppe, M.Sc., Advisor, FURB
Member: Prof. Dalton Solano Dos Reis, M.Sc., FURB
Member: Prof. Mauro Marcelo Mattos, M.Sc., FURB
Blumenau, December 11, 2012

I dedicate this work to my family and all my friends, especially those who directly helped me carry it out.

ACKNOWLEDGMENTS
To my family, and especially to my parents, Daniel Mendes and Leonilda Fatima Mendes, for the love, understanding, trust and support that encouraged me to complete this further stage.
To my colleagues and friends, for the pushes, the demands, the support, the companionship and above all the understanding during this stage.
To all the other people who in one way or another contributed to my intellectual and personal development, and especially to Professor Aurélio Faustino Hoppe, for his wisdom and competence in supervising this work.

In a group, putting yourself above the others is foolish; seek instead to combine potentials.

Cross Platform Development Possibilities and Drawbacks of The

FH JOANNEUM University of Applied Sciences
Cross Platform Development
Possibilities and drawbacks of the Xamarin platform
DI (FH) Norbert Haberl
24 August 2015

Table of Contents
Figures
Tables
Abstract (German)
Abstract (English)
1 Introduction
2 Definitions
2.1.1 Application (App)
2.1.2 Application Programming Interface (API)
2.1.3 Smartphone

A Comparison of Visualisation Techniques for Complex Networks

DEGREE PROJECT IN COMPUTER SCIENCE AND ENGINEERING, SECOND CYCLE, 30 CREDITS
STOCKHOLM, SWEDEN 2016

A comparison of visualisation techniques for complex networks
VIKTOR GUMMESSON
KTH ROYAL INSTITUTE OF TECHNOLOGY, SCHOOL OF COMPUTER SCIENCE AND COMMUNICATION

A comparison of visualisation techniques for complex networks
Swedish title: En jämförelse av visualiseringsmetoder för komplexa nätverk
VIKTOR GUMMESSON, [email protected]
Master's Thesis in Computer Science, Royal Institute of Technology
Supervisor, KTH: Olov Engwall
Examiner: Olle Bälter
Project commissioned by: Scania
Supervisors at Scania: Magnus Kyllegård, Viktor Kaznov

Abstract
The need to visualise sets of data and networks within a company is well known. This thesis surveys techniques used to visualize complex networks in order to find out whether there is a generalized optimal technique for visualizing them. For this purpose an application was implemented containing three different views, which were selected from the research done on the subject. The results suggest that there is no generalized optimal technique that can be used by default to visualize complex networks in a satisfactory way. A definite conclusion could not be reached because not all visualization techniques could be evaluated within the time frame of this thesis.

Referat
The need to visualize data within companies is well known. This thesis used different techniques to investigate whether there exists a general optimal technique that can be applied when visualizing complex networks. In carrying this out, an application was implemented with three different views, which were selected based on research in the chosen area.

Graphics with OpenGL Documentation Release 0.1

Graphics with OpenGL Documentation, Release 0.1
Jose Salvatierra
Nov 13, 2017

Contents
1 Introduction and OpenGL
1.1 Introduction to this document
1.2 The OpenGL Pipeline
1.3 Development environment
1.4 Drawing
2 Transforms and 3D
2.1 Vectors
2.2 Transformations
2.3 Viewing
3 Lighting
3.1 Lighting
3.2 Shading
4 Texture and coloring
4.1 Texturing
4.2 Blending, Aliasing, and Fog
5 Complex objects
5.1 Importing 3D objects
5.2 Procedural Generation
6 Noise particles and normal mapping
6.1 Particles
6.2 Bump and Normal Maps
7 Shadow Casting
7.1 Simple Method
7.2 Shadow Z-buffer method
7.3 Fixed vs Dynamic Shadows
8 Geometry and Tessellation Shaders
8.1 Tessellation Shaders

Slides for My Mono for Game Development

Mono for Game Developers
Miguel de Icaza, [email protected], @migueldeicaza
Xamarin Inc

Agenda
- Mono in Games
- Using Mono for Games
- Performance
- Garbage Collection
- Co-routines, Asynchronous Programming

Mono for Game Developers – AltDevConf 2012, http://www.xamarin.com

MONO IN GAMES
C#, Java, JavaScript, Ruby, Python, Visual Basic, F#

Sims 3
- Mixed code:
  - C/C++ engine
  - C# scripting/AI
  - C# high-level
- Visual Studio + Mono
- X86, PS3, Xbox360
Credit: www.thesims3facts.webs.com

Bastion – on Google Chrome NaCl
- C# XNA codebase
- Originally on Xbox
- Ported to Native Client
  - Mono
  - MonoGame (XNA)
- Mac, Windows, Linux

Pure C# - SoulCraft
- DeltaEngine
  - Pure C# engine
  - Open source
  - Android, iOS, Mac, Win

Unity 3D
- Unity Engine
  - C/C++ game engine
  - Embedded Mono
- User code
  - C# or UnityScript
  - Extends Unity itself
Shadow Gun, built with Unity

SecondLife
- Mono on the server
- Powers LSL scripts
- Nice 200x perf boost
- Code Injection

Infinite Flight
- Subject of the second part of this session

WHY MONO?
Because Life is too Short
- To debug another memory leak
- To track another memory corruption bug
- Because you deserve better

The Quest for Productivity
System Languages, pros:
- Low-level
- Good control of hardware
- Typed
- Fast code
Scripting Languages, pros:
- High-level, good productivity
- Easy to write
- Safe

4D3 Computer Graphics OpenGL Project Report

4D3 Computer Graphics OpenGL Project Report
Mikhail Volkov <volkovm at tcd dot ie>
04400593
January 18, 2010
Department of Electronic & Electrical Engineering, Trinity College Dublin

Contents
I User Documentation
1 Introduction
2 Getting Started
2.1 Operating System Requirements
2.2 Setup
2.3 Controls
II Developer Documentation
1 Technical Overview
1.1 Why C#?
1.2 Why Tao?
1.3 Why FreeGlut?
2 Implementation Details
2.1 Navigation
2.1.1 Mouse Look
2.1.2 WASD Movement
2.2 Representation
2.3 Physics and Collision Detection
2.3.1 Collisions between Entities and Bullets
2.3.2 Collision with Walls
2.3.3 Bullet Management
2.4 Models and Animation
2.4.1 Hierarchical Spider Models
2.4.2 Animation
2.4.3 Animated Sky
2.5 Gameplay and AI
2.5.1 Spider Bite Handling
2.5.2 Spider AI
2.5.3 Spider Eggs
2.6 Stereo Display Mode
3 Sources
4 Final Remarks

Part I User Documentation

1 Introduction
Beware of the Spider was meant to be an introspective exploration of my irrational fear of spiders. Armed with a flak cannon, the protagonist navigates through a maze, slaughtering giant arachnids and leaving a trail of blood and carnage in his wake. The objective of the game is to find and destroy the (obviously evil) Gargantuan Spider Queen. But it is no easy feat! The labyrinth is infested with her progeny and the floors are littered with spider eggs.