Loadleveler: Using and Administering



Recommended publications


Featuring Independent Software Vendors

Software Series 32000 Catalog Featuring Independent Software Vendors. National Semiconductor Corporation. GENIX, Series 32000, ISE, SYS32, 32016 and 32032 are trademarks of National Semiconductor Corp. IBM is a trademark of International Business Machines Corp. VAX 11/730, 750, 780, PDP-11 Series, LSI-11 Series, RSX-11M, RSX-11M PLUS, DEC PRO, MICRO VAX, VMS, and RSTS are trademarks of Digital Equipment Corp. 8080, 8086, 8088, and 80186 are trademarks of Intel Corp. Z80, Z80A, Z8000, and Z80000 are trademarks of Zilog Corp. UNIX is a trademark of AT&T Bell Laboratories. MS-DOS, XENIX, and XENIX-32 are trademarks of Microsoft Corp. NOVA, ECLIPSE, and AOS are trademarks of Data General Corp. CP/M 2.2, CP/M-80, CP/M-86 are registered trademarks of Digital Research, Inc. Concurrent DOS is a trademark of Digital Research, Inc. PRIMOS is a trademark of Prime Computer Corp. UNITY is a trademark of Human Computing Resources Corp. Introduction. Welcome to the exciting world of software products for National's advanced 32-bit Microprocessor family, Series 32000. We have recently renamed our 32-bit microprocessor products from the NS16000 family to Series 32000. This program was effective immediately following the signing of Texas Instruments, Inc. as our second source for the Series 32000. As a result, the Independent Software Vendor (ISV) can provide solutions to customer software problems by offering the same software package independent of the particular Series 32000 CPU. This software catalog was created to organize, maintain, and disseminate Series 32000 software information. It includes the software vendor, contact information, and -

{PDF} MAC Facil Ebook, Epub

MAC FACIL PDF, EPUB, EBOOK Pivovarnick | none | 27 Feb 1995 | Prentice Hall (a Pearson Education Company) | 9789688804735 | English, Spanish | Hemel Hempstead, United Kingdom MAC Facil PDF Book It's nice to have the options for a floating window or menu bar icon. Your browser does not support the video tag. There are two main disk images we use to carry MacOS versions. Digitally embody awesome characters using just a webcam Get started now Watch it in action. Did you click the "Add People" button at the bottom of the People album? Are you saying to keep Photos open but not use it or should I shut down the app? This information is in the link that Idris provided, which you apparently did not read before responding. You don't need to keep Photos open. Dec 6, AM in response to Esquared In response to Esquared I beg to differ, having designed computers for many years. Otherwise, it would be considered non-secure. Enjoy this tip? While future Macs may have the added hardware required, they currently do not. I don't think you understand how seriously Apple takes security. Apple has added new features, improvements, and bug fixes to this version of MacOS. Bomb Dodge. No menus, no sliders—simply natural text to control your timer. Ask other users about this article Ask other users about this article. Then enter your Apple ID and password and click Deauthorize. People that you mark as favorites appear in large squares at the top of the window. The magical Faces feature is based on facial detection and recognition technologies. -

A Survey of Engineering of Formally Verified Software

QED at Large: A Survey of Engineering of Formally Verified Software. Talia Ringer (University of Washington), Karl Palmskog (University of Texas at Austin), Ilya Sergey (Yale-NUS College and National University of Singapore), Milos Gligoric (University of Texas at Austin), Zachary Tatlock (University of Washington). arXiv:2003.06458v1 [cs.LO] 13 Mar 2020. The version of record is available at: http://dx.doi.org/10.1561/2500000045 Errata for the present version of this paper may be found online at https://proofengineering.org/qed_errata.html Contents: 1 Introduction: 1.1 Challenges at Scale; 1.2 Scope: Domain and Literature; 1.3 Overview; 1.4 Reading Guide. 2 Proof Engineering by Example. 3 Why Proof Engineering Matters: 3.1 Proof Engineering for Program Verification; 3.2 Proof Engineering for Other Domains; 3.3 Practical Impact. 4 Foundations and Trusted Bases: 4.1 Proof Assistant Pre-History; 4.2 Proof Assistant Early History; 4.3 Proof Assistant Foundations; 4.4 Trusted Computing Bases of Proofs and Programs. 5 Between the Engineer and the Kernel: Languages and Automation: 5.1 Styles of Automation; 5.2 Automation in Practice. 6 Proof Organization and Scalability: 6.1 Property Specification and Encodings -

Top Functional Programming Languages Based on Sentiment Analysis 2021 11

TOP FUNCTIONAL PROGRAMMING LANGUAGES BASED ON SENTIMENT ANALYSIS 2021. Functional Programming helps companies build software that is scalable and less prone to bugs, which means that software is more reliable and future-proof. It gives developers the opportunity to write code that is clean, elegant, and powerful. Functional Programming is used in demanding industries like eCommerce or streaming services in companies such as Zalando, Netflix, or Airbnb. Developers that work with Functional Programming languages are among the highest paid in the business. I personally fell in love with Functional Programming in Scala, and that’s why Scalac was born. I wanted to encourage both companies and developers to expect more from their applications, and Scala was the perfect answer, especially for Big Data, Blockchain, and FinTech solutions. I’m glad that my marketing and tech team picked this topic, to prepare the report that is focused on sentiment, because that is what really drives people. All of us want to build effective applications that will help businesses succeed, but still we want to have some fun along the way, and I believe that the Functional Programming paradigm gives developers exactly that: fun, and a chance to clearly express themselves solving complex challenges in elegant code. Lukasz Kuczera, CEO at Scalac. Table of contents: Introduction; What Is Functional Programming?; Big Data and the WHY behind the idea of functional programming; Functional Programming Languages Ranking; Methodology; Brand24 -

Comparing UNIX with Other Systems

Comparing UNIX with other systems. Timothy DO' Chase, Corporate Computer Systems, Inc., 33 West Main Street, Holmdel, New Jersey. The original concept behind this article was to make a grand comparison between UNIX and several other well known systems. This was to be all encompassing and packed with vital information summarized in neat charts, tables and graphs. As the work began, the realization settled in that this was not only difficult to do, but would result in a work so boring as to be incomprehensible. The reader, faced with such a wealth of information, would be lost at best. Conclusions would be difficult to draw and, in short, the result would be worthless. After tearfully filling my waste basket with the initial efforts, I regrouped and began by asking myself why would anyone be interested in comparing UNIX with another operating system? There appear to be only two answers. First, one might hope to learn something about UNIX by analogy. If I understand the file system on MPEN and someone tells me that UNIX is like that except for such and so, then I might be more quickly able to understand UNIX. This I felt was an unlikely motivation. After all, there are much simpler ways to learn UNIX. Instead, the motivation for comparing UNIX to other systems must come from a need to evaluate UNIX. If we are aware of the features or shortcomings of other systems, then we can benefit by evaluating UNIX relative to those systems. Choosing an operating system or computer is a major decision which we can benefit from or be stuck with for a long time. -

David Colignon, Ulg

Introduction to Parallel Programming in OpenMP. David Colignon, ULg. CÉCI - Consortium des Équipements de Calcul Intensif http://www.ceci-hpc.be Main References: • “Parallel Programming with GCC”, Diego Novillo, Red Hat, Red Hat Summit, Nashville, May 2006 http://www.airs.com/dnovillo/Papers/rhs2006.pdf • "An Overview of OpenMP", Ruud van der Pas, Oracle, IWOMP 2010, Tsukuba, 14-16 June 2010 http://www.compunity.org/training/tutorials/3 Overview_OpenMP.pdf and http://openmp.org/wp/2010/07/iwomp-2010-material-available/ More References: Specification: OpenMP, The OpenMP API specification for parallel programming http://openmp.org/ Articles: Wikipedia (good summary) http://en.wikipedia.org/wiki/Openmp 32 OpenMP traps for C++ developers http://software.intel.com/en-us/articles/32-openmp-traps-for-c-developers/ Common Mistakes in OpenMP and How To Avoid Them http://www.michaelsuess.net/.../suess_leopold_common_mistakes_06.pdf IWOMP 2009, The 2009 International Workshop on OpenMP (Slides) http://openmp.org/wp/2009/06/iwomp2009/ IWOMP 2010, The 2010 International Workshop on OpenMP (Slides) http://openmp.org/wp/2010/07/iwomp-2010-material-available/ Avoiding and Identifying False Sharing Among Threads http://software.intel.com/en-us/articles/avoiding-and-identifying-false-sharing Tutorials: Parallel Programming with GCC, D. Novillo, Red Hat Summit, Nashville, May 2006 http://www.airs.com/dnovillo/Papers/rhs2006.pdf An Overview of OpenMP, IWOMP 2010, Ruud van der Pas, Oracle http://www.compunity.org/training/tutorials/3 Overview_OpenMP.pdf A "Hands-on" Introduction to OpenMP, SC08, Mattson and Meadows, Intel http://www.openmp.org/mp-documents/omp-hands-on-SC08.pdf OpenMP course (in French!) from IDRIS http://www.idris.fr/data/cours/parallel/openmp/ Using OpenMP, SC09, Hartman-Baker R., ORNL, NCCS http://www.greatlakesconsortium.org/events/scaling/files/openmp09.pdf OpenMP Tutorial, Barney B., LLNL https://computing.llnl.gov/tutorials/openMP/ Books: Using OpenMP - Portable Shared Memory Parallel Programming, by Chapman et al. -

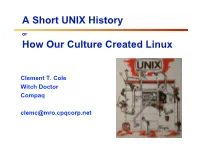

A Short UNIX History How Our Culture Created Linux

A Short UNIX History or How Our Culture Created Linux. Clement T. Cole, Witch Doctor, Compaq. [Figure: A UNIX Family History, a timeline chart spanning 1972 to 1993.] Themes: ◆ Something new is really something old. ◆ The Open Source Culture predates UNIX and Linux. ◆ Evolution is good. ◆ Fighting is not always bad, but learn when it’s good enough and stop fighting. Agenda: ◆ Technical History ◆ Legal History ◆ What it all means. A Word on Engineers: “Good programmers write good programs. Great programmers start and build upon other great programmer’s work.” Unknown origin, often attributed to Fred Brooks. Multics: ◆ MULTiplexed Information and Computing Service ❖ or “Many Unbelievably Large Tables In Core Simultaneously.” ❖ Actually very cool system, see: The Multics system; an Examination of its Structure, Elliott I. -

Installation Procedures for Clusters

Moreno Baricevic, CNR-IOM DEMOCRITOS, Trieste, ITALY. Installation Procedures for Clusters. PART 3 – Cluster Management Tools and Security. Agenda: Cluster Services; Overview on Installation Procedures; Configuration and Setup of a NETBOOT Environment; Troubleshooting; Cluster Management Tools; Notes on Security; Hands-on Laboratory Session. Cluster management tools. CLUSTER MANAGEMENT: Administration Tools. Requirements: ✔ cluster-wide command execution ✔ cluster-wide file distribution and gathering ✔ password-less environment ✔ must be simple, efficient, easy to use for CLI addicted. C3 tools (the Cluster Command and Control tool suite): allows configurable clusters and subsets of machines; concurrent execution of commands; supplies many utilities: cexec (parallel execution of standard commands on all cluster nodes), cexecs (as the above but serial execution, useful for troubleshooting and debugging), cpush (distributes files or directories to all cluster nodes), cget (retrieves files or directories from all cluster nodes), crm (cluster-wide remove), ... and many more. PDSH (Parallel Distributed SHell): same features as C3 tools, few utilities: pdsh, pdcp, rpdcp, dshbak. Cluster-Fork (NPACI Rocks): serial execution only. ClusterSSH: multiple xterm windows handled through one input grabber. Spawns an xterm for each node! DO NOT EVEN TRY IT ON A LARGE CLUSTER! -

Bare Metal Provisioning to Openstack Using Xcat

JOURNAL OF COMPUTERS, VOL. 8, NO. 7, JULY 2013 1691. Bare Metal Provisioning to OpenStack Using xCAT. Jun Xie, Network Information Center, BUPT, Beijing, China. Yujie Su, Zhaowen Lin, Yan Ma and Junxue Liang, Network Information Center, BUPT, Beijing, China, Email: {suyj, linzw, mayan, liangjx}@bupt.edu.cn Abstract: Cloud computing relies heavily on virtualization technologies. This also applies to OpenStack, which is currently the most popular IaaS platform; it mainly provides virtual machines to cloud end users. However, virtualization unavoidably brings some performance penalty, contrasted with bare metal provisioning. In this paper, we present our approach for extending OpenStack to support bare metal provisioning through xCAT (Extreme Cloud Administration Toolkit). As a result, the cloud platform could be deployed to provide both virtual machines and bare metal machines. This paper firstly introduces why bare metal machines are desirable in a cloud platform, then it describes OpenStack briefly and also the xCAT driver, which makes xCAT work with the rest of the OpenStack platform in order to provision bare metal machines to cloud end users. At the end, it presents a performance comparison between a virtualized and bare-metal environment. [...] mainly built on virtualization technologies (Xen, KVM, etc.). B. Motivation. The adoption of virtualization in cloud environment has resulted in great benefits; however, this has not been without attendant problems, such as unresponsive virtualized systems, crashed virtualized servers, mis-configured virtual hosting platforms, performance tuning and erratic performance metrics, among others. Consequently, we might want to run the compute jobs on "bare metal": without a virtualization layer. Consider the following cases: Case 1: Your OpenStack cloud environment contains boards with Tilera processors (non-X86), and Tilera does not currently support virtualization technologies like KVM. -

Xcat 2 Cookbook for Linux 8/7/2008 (Valid for Both Xcat 2.0.X and Pre-Release 2.1)

xCAT 2 Cookbook for Linux, 8/7/2008 (Valid for both xCAT 2.0.x and pre-release 2.1). Table of Contents: 1.0 Introduction; 1.1 Stateless and Stateful Choices; 1.2 Scenarios; 1.2.1 Simple Cluster of Rack-Mounted Servers – Stateful Nodes; 1.2.2 Simple Cluster of Rack-Mounted Servers – Stateless Nodes; 1.2.3 Simple BladeCenter Cluster – Stateful or Stateless Nodes; 1.2.4 Hierarchical Cluster - Stateless Nodes; 1.3 Other Documentation Available; 1.4 Cluster Naming Conventions Used in This Document; 2.0 Installing the Management Node; 2.1 Prepare the Management Node -

Torqueadminguide-5.1.0.Pdf

Welcome. Welcome to the TORQUE Administrator Guide, version 5.1.0. This guide is intended as a reference for both users and system administrators. For more information about this guide, see these topics: Overview; Introduction. Overview. This section contains some basic information about TORQUE, including how to install and configure it on your system. For details, see these topics: TORQUE Installation Overview; Initializing/Configuring TORQUE on the Server (pbs_server); Advanced Configuration; Manual Setup of Initial Server Configuration; Server Node File Configuration; Testing Server Configuration; TORQUE on NUMA Systems; TORQUE Multi-MOM. TORQUE Installation Overview. This section contains information about TORQUE architecture and explains how to install TORQUE. It also describes how to install TORQUE packages on compute nodes and how to enable TORQUE as a service. For details, see these topics: TORQUE Architecture; Installing TORQUE; Compute Nodes; Enabling TORQUE as a Service. Related Topics: Troubleshooting. TORQUE Architecture. A TORQUE cluster consists of one head node and many compute nodes. The head node runs the pbs_server daemon and the compute nodes run the pbs_mom daemon. Client commands for submitting and managing jobs can be installed on any host (including hosts not running pbs_server or pbs_mom). The head node also runs a scheduler daemon. -

Magellan Final Report

The Magellan Report on Cloud Computing for Science. U.S. Department of Energy, Office of Science, Office of Advanced Scientific Computing Research (ASCR). December, 2011. CSO 23179. Magellan Leads: Katherine Yelick, Susan Coghlan, Brent Draney, Richard Shane Canon. Magellan Staff: Lavanya Ramakrishnan, Adam Scovel, Iwona Sakrejda, Anping Liu, Scott Campbell, Piotr T. Zbiegiel, Tina Declerck, Paul Rich. Collaborators: Nicholas J. Wright, Jeff Broughton, Rollin Thomas, Brian Toonen, Richard Bradshaw, Michael A. Guantonio, Karan Bhatia, Alex Sim, Shreyas Cholia, Zacharia Fadikra, Henrik Nordberg, Kalyan Kumaran, Linda Winkler, Levi J. Lester, Wei Lu, Ananth Kalyanraman, John Shalf, Devarshi Ghoshal, Eric R. Pershey, Michael Kocher, Jared Wilkening, Ed Holohann, Vitali Morozov, Doug Olson, Harvey Wasserman, Elif Dede, Dennis Gannon, Jan Balewski, Narayan Desai, Tisha Stacey, CITRIS/University of California, Berkeley, STAR Collaboration, Krishna Muriki, Madhusudhan Govindaraju, Linda Vu, Victor Markowitz, Gabriel A. West, Greg Bell, Yushu Yao, Shucai Xiao, Daniel Gunter, Nicholas Dale Trebon, Margie Wylie, Keith Jackson, William E. Allcock, K. John Wu, John Hules, Nathan M. Mitchell, David Skinner, Brian Tierney, Jon Bashor. This research used resources of the Argonne Leadership Computing Facility at Argonne National Laboratory, which is supported by the Office of Science of the U.S. Department of Energy under contract DE-AC02-06CH11357, funded through the American Recovery and Reinvestment Act of 2009. Resources and research at NERSC at Lawrence Berkeley National Laboratory were funded by the Department of Energy from the American Recovery and Reinvestment Act of 2009 under contract number DE-AC02-05CH11231.