Electronic Hamiltonians - Part II

Total Pages: 16

File Type: pdf, Size: 1020 Kb


Recommended publications


First Principles Investigation Into the Atom in Jellium Model System

First Principles Investigation into the Atom in Jellium Model System. Andrew Ian Duff, H. H. Wills Physics Laboratory, University of Bristol. A thesis submitted to the University of Bristol in accordance with the requirements of the degree of Ph.D. in the Faculty of Science, Department of Physics, March 2007. Word Count: 34,000. Abstract: The system of an atom immersed in jellium is solved using density functional theory (DFT), in both the local density (LDA) and self-interaction correction (SIC) approximations, Hartree-Fock (HF) and variational quantum Monte Carlo (VQMC). The main aim of the thesis is to establish the quality of the LDA, SIC and HF approximations by comparing the results obtained using these methods with the VQMC results, which we regard as a benchmark. The second aim of the thesis is to establish the suitability of an atom in jellium as a building block for constructing a theory of the full periodic solid. A hydrogen atom immersed in a finite jellium sphere is solved using the methods listed above. The immersion energy is plotted against the positive background density of the jellium, and from this curve we see that DFT over-binds the electrons as compared to VQMC. This is consistent with the general over-binding one tends to see in DFT calculations. Also, for low values of the positive background density, the SIC immersion energy gets closer to the VQMC immersion energy than does the LDA immersion energy. This is consistent with the fact that the electrons to which the SIC is applied are becoming more localised at these low background densities and therefore the SIC theory is expected to out-perform the LDA here.


arXiv:2006.09236v4 [quant-ph] 27 May 2021

The Free Electron Gas in Cavity Quantum Electrodynamics. Vasil Rokaj,1,∗ Michael Ruggenthaler,1,† Florian G. Eich,1 and Angel Rubio1,2,‡ 1Max Planck Institute for the Structure and Dynamics of Matter, Center for Free Electron Laser Science, 22761 Hamburg, Germany. 2Center for Computational Quantum Physics (CCQ), Flatiron Institute, 162 Fifth Avenue, New York NY 10010. (Dated: May 31, 2021) Cavity modification of material properties and phenomena is a novel research field largely motivated by the advances in strong light-matter interactions. Despite this progress, exact solutions for extended systems strongly coupled to the photon field are not available, and both theory and experiments rely mainly on finite-system models. Therefore a paradigmatic example of an exactly solvable extended system in a cavity becomes highly desirable. To fill this gap we revisit Sommerfeld's theory of the free electron gas in cavity quantum electrodynamics (QED). We solve this system analytically in the long-wavelength limit for an arbitrary number of non-interacting electrons, and we demonstrate that the electron-photon ground state is a Fermi liquid which contains virtual photons. In contrast to models of finite systems, no ground state exists if the diamagnetic A² term is omitted. Further, by performing linear response we show that the cavity field induces plasmon-polariton excitations and modifies the optical and the DC conductivity of the electron gas. Our exact solution allows us to consider the thermodynamic limit for both electrons and photons by constructing an effective quantum field theory. The continuum of modes leads to a many-body renormalization of the electron mass, which modifies the fermionic quasiparticle excitations of the Fermi liquid and the Wigner-Seitz radius of the interacting electron gas.

Singular Fermi Liquids, at Least for the Present Case Where the Singularities Are Q-Dependent

Singular Fermi Liquids. C. M. Varma (a,b), Z. Nussinov (b), and Wim van Saarloos (b). (a) Bell Laboratories, Lucent Technologies, Murray Hill, NJ 07974, U.S.A. (b) Instituut–Lorentz, Universiteit Leiden, Postbus 9506, 2300 RA Leiden, The Netherlands. arXiv:cond-mat/0103393v1 [cond-mat.str-el] 19 Mar 2001. Abstract: An introductory survey of the theoretical ideas and calculations and the experimental results which depart from Landau Fermi-liquids is presented. Common themes and possible routes to the singularities leading to the breakdown of Landau Fermi liquids are categorized following an elementary discussion of the theory. Soluble examples of Singular Fermi liquids include models of impurities in metals with special symmetries and one-dimensional interacting fermions. A review of these is followed by a discussion of Singular Fermi liquids in a wide variety of experimental situations and theoretical models. These include the effects of low-energy collective fluctuations, gauge fields due either to symmetries in the hamiltonian or possible dynamically generated symmetries, fluctuations around quantum critical points, the normal state of high temperature superconductors and the two-dimensional metallic state. For the last three systems, the principal experimental results are summarized and the outstanding theoretical issues highlighted. Contents: 1 Introduction (1.1 Aim and scope of this paper; 1.2 Outline of the paper); 2 Landau’s Fermi-liquid (2.1 Essentials of Landau Fermi-liquids; 2.2 Landau Fermi-liquid and the wave function renormalization Z)

The Uniform Electron Gas! We Have No Simpler Paradigm for the Study of Large Numbers Of

The uniform electron gas. Pierre-François Loos1,a) and Peter M. W. Gill1,b) Research School of Chemistry, Australian National University, Canberra ACT 2601, Australia. arXiv:1601.03544v2 [physics.chem-ph] 19 Feb 2016. The uniform electron gas or UEG (also known as jellium) is one of the most fundamental models in condensed-matter physics and the cornerstone of the most popular approximation — the local-density approximation — within density-functional theory. In this article, we provide a detailed review on the energetics of the UEG at high, intermediate and low densities, and in one, two and three dimensions. We also report the best quantum Monte Carlo and symmetry-broken Hartree-Fock calculations available in the literature for the UEG and discuss the phase diagrams of jellium. Keywords: uniform electron gas; Wigner crystal; quantum Monte Carlo; phase diagram; density-functional theory. a)Electronic mail: [email protected]; Corresponding author b)Electronic mail: [email protected] I. INTRODUCTION The final decades of the twentieth century witnessed a major revolution in solid-state and molecular physics, as the introduction of sophisticated exchange-correlation models [1] propelled density-functional theory (DFT) from qualitative to quantitative usefulness. The apotheosis of this development was probably the award of the 1998 Nobel Prize for Chemistry to Walter Kohn [2] and John Pople [3] but its origins can be traced to the prescient efforts by Thomas, Fermi and Dirac, more than 70 years earlier, to understand the behavior of ensembles

Electron-Electron Interaction and the Fermi-Liquid Theory

PHZ 7427 SOLID STATE II: Electron-electron interaction and the Fermi-liquid theory. D. L. Maslov, Department of Physics, University of Florida (Dated: February 21, 2014). CONTENTS: I. Notations; II. Electrostatic screening (A. Thomas-Fermi model; B. Effective strength of the electron-electron interaction. Parameter rs; C. Full solution (Lindhard function); D. Lindhard function: 1. A discourse: properties of Fourier transforms, 2. End of discourse; E. Friedel oscillations: 1. Enhancement of the backscattering probability due to Friedel oscillations; F. Hamiltonian of the jellium model; G. Effective mass near the Fermi level: 1. Effective mass in the Hartree-Fock approximation, 2. Beyond the Hartree-Fock approximation); III. Stoner model of ferromagnetism in itinerant systems; IV. Wigner crystal; V. Fermi-liquid theory (A. General concepts: 1. Motivation; B. Scattering rate in an interacting Fermi system; C. Quasi-particles: 1. Interaction of quasi-particles; D. General strategy of the Fermi-liquid theory; E. Effective mass; F. Spin susceptibility: 1. Free electrons, 2. Fermi liquid; G. Zero sound); VI. Non-Fermi-liquid behaviors (A. Dirty Fermi liquids: 1. Scattering rate, 2. T-dependence of the resistivity); A. Born approximation for the Landau function; References. I. NOTATIONS • kB = 1 (replace T by kBT in the final results) • ħ = 1 (momenta and wave numbers have the same units, so do frequency and energy) • ν(ε) ≡ density of states. II. ELECTROSTATIC SCREENING A. Thomas-Fermi model: For Thomas-Fermi model, see AM, Ch. 17. B. Effective strength of the electron-electron interaction.

Chapter 3. Insulators, Metals, and Semiconductors

Chapter 3. Insulators, Metals, and Semiconductors Canal Robert Wittig "A scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die and a new generation grows up that is familiar with it." Max Planck. Contents: 3.1. Introduction (Photons and Phonons; Small Phonon Wave Vectors; Wave Vector Conservation; Raman Scattering); 3.2. TO and LO Branches: Wave Vector Conservation (Maxwell's Equations); 3.3. Plasmas in Metals and Semiconductors (Tradition; Electron Density and Free Particle Character; Screening; Electron-Electron Scattering; Back to Screening; Thomas-Fermi; Chemical Potential; Maxwell-Boltzmann; Back to the Chemical Potential; Injecting an Electron; Back to Thomas-Fermi; Lindhard Model; Quasiparticles to the Rescue; Analogy; Superconductivity; Electron Fluid; Debye-Hückel Model; Metals and Doped Semiconductors). "One of the most decisive influences on my scientific outlook came from Professor Paul Ehrenfest. He had been born and brought up in Vienna and taught at the University of Leiden in Holland. In 1929, when Born had his stroke, Ehrenfest came to Göttingen to teach for a year, and I was lucky enough to meet him. Ehrenfest took a special interest in me because I came from Vienna, and we spent a lot of time together. As a theoretical physicist, he also shunned long mathematical derivations, a trait that aroused my enthusiastic approval.

Exchange Interactions and Itinerant Ferromagnetism in Ultracold Fermi Gases

PHYSICAL REVIEW A 98, 023635 (2018). Exchange interactions and itinerant ferromagnetism in ultracold Fermi gases. Enya Vermeyen*, TQC, Universiteit Antwerpen, B-2610 Antwerpen, Belgium; Carlos A. R. Sá de Melo, School of Physics, Georgia Institute of Technology, Atlanta, Georgia 30332, USA; Jacques Tempere, TQC, Universiteit Antwerpen, B-2610 Antwerpen, Belgium and Lyman Laboratory of Physics, Harvard University, Cambridge, Massachusetts 02138, USA. (Received 8 December 2017; revised manuscript received 10 July 2018; published 31 August 2018) In the 1930s, two main paradigms for the theoretical description of ferromagnetism were developed: Heisenberg ferromagnetism of localized fermions (e.g., in a lattice), and Bloch or Stoner ferromagnetism of nonlocalized fermions (i.e., in a gas), also called itinerant ferromagnetism. Despite many theoretical predictions, itinerant ferromagnetism has remained elusive in experiments. This ferromagnetic state is predicted to occur for strong repulsive interactions, corresponding to a regime that is very challenging to describe theoretically because there are multiple competing physical effects, including superfluid pairing. In this paper, we point out that the problem of itinerant ferromagnetism for atomic Fermi gases is different from that of electron gases in metals due to the short-range nature of the interatomic interactions. We also show that the standard saddle point used to describe itinerant ferromagnetism of the electron gas in metals does not apply, because in the short-range limit of this approximation the Pauli exclusion principle is violated. As a remedy, we introduce a modified interaction pseudopotential for ultracold gases which includes both local (Hartree) and nonlocal (Fock) terms while preserving the Pauli exclusion principle in the short-range regime.

Theory of Adsorption on Metal Substrates

CHAPTER 5 Theory of Adsorption on Metal Substrates. M. Scheffler and C. Stampfl, Fritz-Haber-Institut der Max-Planck-Gesellschaft, Faradayweg 4-6, D-14195 Berlin, Germany. Handbook of Surface Science, Volume 2, edited by K. Horn and M. Scheffler. arXiv:cond-mat/0011217v1 [cond-mat.mtrl-sci] 13 Nov 2000. Contents: 5.1 Introduction (5.1.1 The nature of the surface chemical bond: indeterminate concepts, yet useful ...; 5.1.2 What will be discussed and why, and what is missing); 5.2 Concepts and definitions (5.2.1 Density of states; 5.2.2 Energies; 5.2.3 Binding energy at kink sites; 5.2.4 The surface energy barrier); 5.3 The tight-binding picture of bonding (5.3.1 Adsorbate-substrate interaction; 5.3.2 Adsorbate band structure); 5.4 Adsorption of isolated adatoms (5.4.1 Geometry; 5.4.2 Density of states ΔN(ε); 5.4.3 Electron density: n(r), Δn(r), and n^Δ(r); 5.4.4 Surface dipole moments); 5.5 Alkali-metal adsorption: the traditional picture of on-surface adsorption (5.5.1 The Langmuir-Gurney picture; 5.5.2 Coverage dependence of the work function; 5.5.3 Ionization of the adsorbate and screening by the substrate electrons; 5.5.4 Surface band structure); 5.6 Substitutional adsorption and formation of surface alloys (5.6.1 Na on Al(001); 5.6.2 Na on Al(111); 5.6.3 Co on Cu(001)); 5.7 Adsorption of CO on transition-metal surfaces – a model system for a simple molecular adsorbate; 5.8 Co-adsorption [the example CO plus O on Ru(0001)]; 5.9 Chemical reactions at metal surfaces (5.9.1 The problems with “the” transition state; 5.9.2 Dissociative adsorption and associative desorption of H2 at transition metals: 5.9.2.1 The potential-energy surface of H2 at transition-metal surfaces, 5.9.2.2 The dynamics of H2 dissociation at transition-metal surfaces); 5.10 The catalytic oxidation of CO; 5.11 Summary outline of main points; References. 5.1 Introduction: The theory of adsorption has reached a level where it is possible to calculate free energies, as well as the electronic and atomic structure, of medium-sized systems with predictive accuracy.

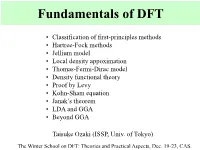

1-DFT-Ozaki.Pdf

Fundamentals of DFT • Classification of first-principles methods • Hartree-Fock methods • Jellium model • Local density approximation • Thomas-Fermi-Dirac model • Density functional theory • Proof by Levy • Kohn-Sham equation • Janak’s theorem • LDA and GGA • Beyond GGA. Taisuke Ozaki (ISSP, Univ. of Tokyo), The Winter School on DFT: Theories and Practical Aspects, Dec. 19-23, CAS. Challenges in computational materials science: 1. To understand physical and chemical properties of molecules and solids by solving the Dirac equation as accurately as possible. 2. To design novel materials having desired properties from atomistic level theoretically, before actual experiments. 3. To propose possible ways of synthesis for the designed materials theoretically. Schrödinger equation and wave functions (terms: kinetic, external, e-e). Conditions that wave functions must satisfy: (1) indistinctiveness (2) anticommutation (Pauli’s exclusion principle) (3) orthonormalization. An expression that satisfies the above conditions: Classification of electronic structure methods (method: computational complexity; features). Wave function theory, e.g., configuration interaction (CI) method: O(e^N); high accuracy, high cost. Density functional theory: O(N^3); medium accuracy, low cost. Quantum Monte Carlo method: O(N^3~); high accuracy, high cost, easy to parallel. Many body Green’s function method: O(N^3~); medium accuracy, excited states. Hartree-Fock (HF) method: Slater determinantal function, a form of many electron wave function satisfying indistinctiveness and anticommutation. One-electron integral; HF energy; Coulomb integral; Exchange integral. The variation of E w.r.t. ψ leads to HF equation: Results by the HF method, e.g., H2O (HF vs. experiment): bond(O-H) 0.940 vs. 0.958 Å; angle(H-O-H) 106.1 vs. 104.5 deg.; ν1 4070 vs. 3657 cm^-1; ν2 1826 vs. 1595 cm^-1. Correlation energy: Ecorr = Eexact - EHF, e.g.

Introduction to Theoretical Surface Science

Introduction to Theoretical Surface Science. Axel Groß, Abteilung Theoretische Chemie, Universität Ulm, Albert-Einstein-Allee 11, D-89069 Ulm, GERMANY. email: [email protected] Abstract: Recent years have seen tremendous progress in the microscopic theoretical treatment of surfaces and processes on surfaces. While some decades ago a phenomenological thermodynamic approach was dominant, a variety of surface properties can now be described from first principles, i.e. without invoking any empirical parameters. Consequently, the field of theoretical surface science is no longer limited to explanatory purposes only. It has reached such a level of sophistication and accuracy that reliable predictions for certain surface science problems have become possible. Hence both experiment and theory can contribute on an equal footing to the scientific progress. In this lecture, the theoretical concepts and computational tools necessary and relevant for theoretical surface science will be introduced. A microscopic approach towards the theoretical description of surface science will be presented. Based on the fundamental theoretical entity, the Hamiltonian, a hierarchy of theoretical methods will be introduced in order to describe surface structures and processes at different length and time scales. But even for the largest time and length scales, all necessary parameters will be derived from microscopic properties. 1 Introduction: It is the aim of theoretical surface science to contribute significantly to the fundamental understanding of the underlying principles that govern the geometric and electronic structure of surfaces and the processes occurring on these surfaces such as growth of surface layers, gas-surface scattering, friction or reactions at surfaces [1].

Landau's Fermi Liquid Concept to the Extreme

Landau's Fermi Liquid Concept to the Extreme: the Physics of Heavy Fermions. Prof. Thomas Pruschke, XVI Training Course in the Physics of Strongly Correlated Systems, Salerno, Fall 2011. Contents: 1 The homogeneous electron gas (1.1 Basic concepts; 1.2 Ground state properties; 1.3 Evaluation of k-sums – Density of States; 1.4 Excited states of the electron gas; 1.5 Finite temperatures); 2 Fermi liquid theory (2.1 Beyond the independent electron approximation; 2.2 Landau's Fermi liquid theory); 3 Heavy Fermions (3.1 Introductory remarks; 3.2 Connection of Heavy Fermions and Kondo effect; 3.3 Some fundamental results; 3.4 The local Fermi liquid; 3.5 Heavy Fermions - a first attempt; 3.6 DMFT treatment of the periodic Kondo model). Introductory remarks: Landau's Fermi liquid is the fundamental concept in modern solid state physics and sets the language and notion used to communicate and interpret physical observations in solids. Within the Fermi liquid theory, electrons in solids are described as non-interacting quasi-particles, with some of their properties changed as compared to the electrons in free space. Most of you will know the concept of the effective mass, which often is sufficient to explain a whole bunch of different experimental findings for a given material. In addition, new "types" of particles, so-called holes, appear, and both are needed for a proper understanding of the electronic properties of solids.

Finite Jellium Models. I. Restricted Hartree–Fock Calculations

THE JOURNAL OF CHEMICAL PHYSICS 122, 154108 (2005). Finite jellium models. I. Restricted Hartree–Fock calculations. Sankha Ghosh, School of Chemistry, University of Nottingham, Nottingham NG7 2RD, United Kingdom. Peter M. W. Gill a), Research School of Chemistry, Australian National University, Canberra, Australian Capital Territory 0200, Australia. (Received 26 April 2004; accepted 26 January 2005; published online 20 April 2005) Restricted Hartree–Fock calculations have been performed on the Fermi configurations of n electrons confined within a cube. The self-consistent-field orbitals have been expanded in a basis of N particle-in-a-box wave functions. The difficult one- and two-electron integrals have been reduced to a small set of canonical integrals that are calculated accurately using quadrature. The total energy and exchange energy per particle converge smoothly toward their limiting values as n increases; the highest occupied molecular orbital–lowest unoccupied molecular orbital gap and Dirac coefficient converge erratically. However, the convergence in all cases is slow. © 2005 American Institute of Physics. [DOI: 10.1063/1.1873552] I. INTRODUCTION Jellium is the most popular electronic structure model in solid-state physics and also constitutes the starting point for many of the density functional theories that are now widely used in computational chemistry [1]. Its success stems largely from its surpassing simplicity: an infinite uniform gas of electrons characterized by a single number, its electron density […] finite space. Ellert et al., for example, have rationalized [17] the optical absorption spectra of small, open-shell sodium clusters by considering n electrons in a spheroidal cavity, Koskinen, Lipas, and Manninen have studied n electrons in a relaxed background charge density [18], Holas et al. have examined [19] n electrons in a magnetic field, and Alavi’s group has recently published studies of n=2 highly correlated electrons in a cube [20] and a ball [21].