Robust Maximum Association Estimators

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Multivariate Statistical Functions in R

Multivariate statistical functions in R. Michail T. Tsagris, [email protected]. College of engineering and technology, American university of the middle east, Egaila, Kuwait. Version 6.1. Athens, Nottingham and Abu Halifa (Kuwait), 31 October 2014.

Contents

- 1 Mean vectors
  - 1.1 Hotelling’s one-sample T² test
  - 1.2 Hotelling’s two-sample T² test
  - 1.3 Two two-sample tests without assuming equality of the covariance matrices
  - 1.4 MANOVA without assuming equality of the covariance matrices
- 2 Covariance matrices
  - 2.1 One sample covariance test
  - 2.2 Multi-sample covariance matrices
    - 2.2.1 Log-likelihood ratio test
    - 2.2.2 Box’s M test
- 3 Regression, correlation and discriminant analysis
  - 3.1 Correlation
    - 3.1.1 Correlation coefficient confidence intervals and hypothesis testing using Fisher’s transformation
    - 3.1.2 Non-parametric bootstrap hypothesis testing for a zero correlation coefficient
    - 3.1.3 Hypothesis testing for two correlation coefficients
  - 3.2 Regression
    - 3.2.1 Classical multivariate regression
    - 3.2.2 k-NN regression
    - 3.2.3 Kernel regression
    - 3.2.4 Choosing the bandwidth in kernel regression in a very simple way
    - 3.2.5 Principal components regression
    - 3.2.6 Choosing the number of components in principal component regression
    - 3.2.7 The spatial median and spatial median regression
    - 3.2.8 Multivariate ridge regression
  - 3.3 Discriminant analysis
    - 3.3.1 Fisher’s linear discriminant function

Probabilistic Inferences for the Sample Pearson Product Moment Correlation Jeffrey R

Journal of Modern Applied Statistical Methods, Volume 10, Issue 2, Article 8, 11-1-2011. Probabilistic Inferences for the Sample Pearson Product Moment Correlation. Jeffrey R. Harring, University of Maryland, [email protected]; John A. Wasko, University of Maryland, [email protected]. Recommended Citation: Harring, Jeffrey R. and Wasko, John A. (2011) "Probabilistic Inferences for the Sample Pearson Product Moment Correlation," Journal of Modern Applied Statistical Methods: Vol. 10: Iss. 2, Article 8. DOI: 10.22237/jmasm/1320120420. Available at: http://digitalcommons.wayne.edu/jmasm/vol10/iss2/8. Copyright © 2011 JMASM, Inc. November 2011, Vol. 10, No. 2, 476-493. 1538 – 9472/11/$95.00. University of Maryland, College Park, MD. Fisher’s correlation transformation is commonly used to draw inferences regarding the reliability of tests comprised of dichotomous or polytomous items. It is illustrated theoretically and empirically that omitting test length and difficulty results in inflated Type I error. An empirically unbiased correction is introduced within the transformation that is applicable under any test conditions. Key words: Correlation coefficients, measurement, test characteristics, reliability, parallel forms, test equivalency. -

Point‐Biserial Correlation: Interval Estimation, Hypothesis Testing, Meta

UC Santa Cruz Previously Published Works. Title: Point-biserial correlation: Interval estimation, hypothesis testing, meta-analysis, and sample size determination. Permalink: https://escholarship.org/uc/item/3h82b18b. Journal: The British journal of mathematical and statistical psychology, 73 Suppl 1(S1). ISSN: 0007-1102. Author: Bonett, Douglas G. Publication Date: 2020-11-01. DOI: 10.1111/bmsp.12189. Peer reviewed. British Journal of Mathematical and Statistical Psychology (2019), © 2019 The British Psychological Society. Douglas G. Bonett*, Department of Psychology, University of California, Santa Cruz, California, USA. The point-biserial correlation is a commonly used measure of effect size in two-group designs. New estimators of point-biserial correlation are derived from different forms of a standardized mean difference. Point-biserial correlations are defined for designs with either fixed or random group sample sizes and can accommodate unequal variances. Confidence intervals and standard errors for the point-biserial correlation estimators are derived from the sampling distributions for pooled-variance and separate-variance versions of a standardized mean difference. The proposed point-biserial confidence intervals can be used to conduct directional two-sided tests, equivalence tests, directional non-equivalence tests, and non-inferiority tests. A confidence interval for an average point-biserial correlation in meta-analysis applications performs substantially better than the currently used methods. Sample size formulas for estimating a point-biserial correlation with desired precision and testing a point-biserial correlation with desired power are proposed. -

A New Parametrization of Correlation Matrices∗

A New Parametrization of Correlation Matrices∗. Ilya Archakov (University of Vienna) and Peter Reinhard Hansen† (University of North Carolina & Copenhagen Business School). January 14, 2019.

Abstract: For the modeling of covariance matrices, the literature has proposed a variety of methods to enforce the positive (semi) definiteness. In this paper, we propose a method that is based on a novel parametrization of the correlation matrix, specifically the off-diagonal elements of the matrix logarithmic transformed correlations. This parametrization has many attractive properties, a wide range of applications, and may be viewed as a multivariate generalization of Fisher’s Z-transformation of a single correlation. Keywords: Covariance Modeling, Covariance Regularization, Fisher Transformation, Multivariate GARCH, Stochastic Volatility. JEL Classification: C10; C22; C58.

∗ We are grateful for many valuable comments made by Immanuel Bomze, Bo Honoré, Ulrich Müller, Georg Pflug, Werner Ploberger, Rogier Quaedvlieg, and Christopher Sims, as well as many conference and seminar participants. † Address: University of North Carolina, Department of Economics, 107 Gardner Hall, Chapel Hill, NC 27599-3305.

1 Introduction. In the modeling of covariance matrices, it is often advantageous to parametrize the model so that the parameters are unrestricted. The literature has proposed several methods to this end, and most of these methods impose additional restrictions beyond the positivity requirement. Only a handful of methods ensure positive definiteness without imposing additional restrictions on the covariance matrix, see Pinheiro and Bates (1996) for a discussion of five parameterizations of this kind. In this paper, we propose a new way to parametrize the covariance matrix that ensures positive definiteness without imposing additional restrictions, and we show that this parametrization has many attractive properties. -
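The link to Fisher's Z-transformation mentioned in this abstract can be checked by hand in the simplest case. The sketch below is not taken from the paper; it assumes a 2×2 correlation matrix C = [[1, ρ], [ρ, 1]], whose eigenvalues are 1 + ρ and 1 − ρ with eigenvectors (1, 1)/√2 and (1, −1)/√2, so the off-diagonal element of log C has a closed form. The function names are hypothetical and chosen only for illustration.

```python
import math


def log_corr_offdiag_2x2(rho: float) -> float:
    """Off-diagonal element of log(C) for C = [[1, rho], [rho, 1]].

    Derived from the spectral decomposition of C:
    log(C)[0][1] = (log(1 + rho) - log(1 - rho)) / 2.
    """
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    return 0.5 * (math.log(1.0 + rho) - math.log(1.0 - rho))


def rho_from_gamma(gamma: float) -> float:
    """Map an unrestricted real number back to a valid correlation (2x2 case)."""
    return math.tanh(gamma)


for rho in (-0.8, -0.2, 0.0, 0.5, 0.9):
    gamma = log_corr_offdiag_2x2(rho)
    # In the 2x2 case gamma coincides with Fisher's z = atanh(rho).
    print(f"rho={rho:+.2f}  gamma={gamma:+.6f}  atanh(rho)={math.atanh(rho):+.6f}")
```

In this special case any real value of gamma maps back to a correlation strictly inside (−1, 1), which illustrates why an unrestricted parameter is convenient. The general n×n construction is the subject of the paper and is not reproduced here.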

Connectivity.Pdf

FMRI Connectivity Analysis in AFNI. Gang Chen, SSCC/NIMH/NIH, Nov. 12, 2009.

Structure of this lecture

- Overview
- Correlation analysis
  - Simple correlation
  - Context-dependent correlation (PPI)
- Structural equation modeling (SEM)
  - Model validation
  - Model search
- Granger causality (GC)
  - Bivariate: exploratory - ROI search
  - Multivariate: validating – path strength among pre-selected ROIs

Overview: FMRI connectivity analysis

- All about FMRI
  - Not for DTI
  - Some methodologies may work for MEG, EEG-ERP
- Information we have
  - Anatomical structures
    - Exploratory: A seed region in a network, or
    - Validating: A network with all relevant regions known
  - Brain output (BOLD signal): regional time series
- What can we say about inter-regional communications?
  - Inverse problem: make inference about intra-cerebral neural processes from extra-cerebral/vascular signal
  - Based on response similarity (and sequence)

Approach I: seed-based; ROI search

- Regions involved in a network are unknown
  - Bi-regional/seed vs. whole brain (3d*): brain volume as input
  - Mainly for ROI search
  - Popular name: functional connectivity
  - Basic, coarse, exploratory with weak assumptions
  - Methodologies: simple correlation, PPI, bivariate GC
  - Weak in interpretation: may or may not indicate directionality/causality

Approach II: ROI-based

- Regions in a network are known
  - Multi-regional (1d*): ROI data as input
  - Model validation, connectivity strength testing
  - Popular name: effective or structural connectivity
  - Strong assumptions:

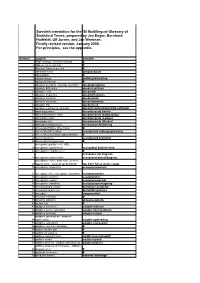

Swedish Translation for the ISI Multilingual Glossary of Statistical Terms, Prepared by Jan Enger, Bernhard Huitfeldt, Ulf Jorner, and Jan Wretman

Swedish translation for the ISI Multilingual Glossary of Statistical Terms, prepared by Jan Enger, Bernhard Huitfeldt, Ulf Jorner, and Jan Wretman. Finally revised version, January 2008. For principles, see the appendix. -

Copyrighted Material

INDEX. addition rule, 106, 126; adjacent values, 76; adjusted rate, 13, 15; agreement, 112; AIC, see Akaike Information Criterion; Akaike Information Criterion, 418; alpha, 198; analysis of variance (ANOVA), 253, 273; analysis of variance (ANOVA) table, 254, 276, 310; antibody response, 316; antilog, see exponentiation; area under the density curve, 117, 124; average, see mean; bar chart, 7; baseline hazard, 451, 456, 460; Bayesian Information Criterion, 418; Bayes’ theorem, 111; Bernoulli distribution, 354 (mean, 359; variance, 360); better treatment trials, 505; BIC, see Bayesian Information Criterion; binary characteristic, 2; binary data, see variable, binary; binomial distribution, 126, 132 (mean, 128; variance, 128); binomial probability, 126; bioassay, 330; blinded study (double, 496; triple, 496); block, 273, 280 (complete, 281; fixed, 281; random, 284); blocking factor, see block; Bonferroni’s type I error adjustment, 258; box plot, 76; case–control study, 2, 130, 199, 358, 439, 494 (matched, 518; pair matched, 464; unmatched, 516, 520); censoring, 442; censoring indicator, 443; central limit theorem, 115, 125, 146, 153, 182, 198, 236; chance, 103, 116; change rate, 10; chi‐square distribution, 125.

Introductory Biostatistics, Second Edition. Chap T. Le and Lynn E. Eberly. © 2016 John Wiley & Sons, Inc. Published 2016 by John Wiley & Sons, Inc. Companion website: www.wiley.com/go/Le/Biostatistics

INDEX. chi‐square test, 212, 458, 470, 471; contingency table, 22, 197, 211; difference in proportions, 203; contingency table, -

Confidence Intervals for Comparison of the Squared Multiple Correlation Coefficients of Non-Nested Models

Western University, Scholarship@Western, Electronic Thesis and Dissertation Repository, 2-14-2012 12:00 AM. Confidence Intervals for Comparison of the Squared Multiple Correlation Coefficients of Non-nested Models. Li Tan Jr., The University of Western Ontario. Supervisor: Dr. G.Y. Zou, The University of Western Ontario. Joint Supervisor: Dr. John Koval, The University of Western Ontario. Graduate Program in Biostatistics. A thesis submitted in partial fulfillment of the requirements for the degree in Master of Science. © Li Tan Jr. 2012. Recommended Citation: Tan, Li Jr., "Confidence Intervals for Comparison of the Squared Multiple Correlation Coefficients of Non-nested Models" (2012). Electronic Thesis and Dissertation Repository. 384. https://ir.lib.uwo.ca/etd/384. (Spine Title: CIs for Comparison of the Squared Multiple Correlations of Non-nested Models) (Thesis Format: Monograph) by Li Tan, MSc. Graduate Program in Epidemiology & Biostatistics. Submitted in partial fulfillment of the requirements for the degree of Master of Science. School of Graduate and Postdoctoral Studies, The University of Western Ontario, London, Ontario, February 2012. © Li Tan, 2012. THE UNIVERSITY OF WESTERN ONTARIO SCHOOL OF GRADUATE AND POSTDOCTORAL STUDIES CERTIFICATE OF EXAMINATION Joint Supervisor Examiners Dr. -

Modified T Tests and Confidence Intervals for Asymmetrical Populations Author(S): Norman J

Modified t Tests and Confidence Intervals for Asymmetrical Populations. Author(s): Norman J. Johnson. Source: Journal of the American Statistical Association, Vol. 73, No. 363 (Sep., 1978), pp. 536-544. Published by: American Statistical Association. Stable URL: http://www.jstor.org/stable/2286597.

Modified t Tests and Confidence Intervals for Asymmetrical Populations. NORMAN J. JOHNSON*

This article considers a procedure that reduces the effect of population skewness on the distribution of the t variable so that tests about the mean can be more correctly computed. A modification of the t variable is obtained that is useful for distributions with skewness as severe as that of the exponential distribution. The procedure is ...

... versa. These authors studied the use of |t| in forming confidence intervals for μ in order to reduce the skewness effect. Their results showed that the use of |t| was valid only in the case of slight population skewness. -

Multiple Faults Diagnosis for Sensors in Air Handling Unit Using Fisher Discriminant Analysis

Energy Conversion and Management 49 (2008) 3654–3665. Journal homepage: www.elsevier.com/locate/enconman. Multiple faults diagnosis for sensors in air handling unit using Fisher discriminant analysis. Zhimin Du *, Xinqiao Jin. School of Mechanical Engineering, Shanghai Jiao Tong University, 800, Dongchuan Road, Shanghai 200240, China.

Article history: Received 26 November 2007; accepted 29 June 2008; available online 15 August 2008.

Keywords: Multiple faults; Principal component analysis; Fisher discriminant analysis; Air handling unit; Detection and diagnosis.

Abstract: This paper presents a data-driven method based on principal component analysis and Fisher discriminant analysis to detect and diagnose multiple faults including fixed bias, drifting bias, complete failure of sensors, air damper stuck and water valve stuck occurred in the air handling units. Multi-level strategies are developed to improve the diagnosis efficiency. Firstly, system-level PCA model I based on energy balance is used to detect the abnormity in view of system. Then the local-level PCA model A and B based on supply air temperature and outdoor air flow rate control loops are used to further detect the occurrence of faults and pre-diagnose them into various locations. As a linear dimensionality reduction technique, moreover, Fisher discriminant analysis is presented to diagnose the fault source after pre-diagnosis. With Fisher transformation, all of the data classes including normal and faulty operation can be re-arrayed in a transformed data space and as a result separated. Comparing the Mahalanobis distances (MDs) of all the candidates, the least one can be identified as the fault source. -

Lecture 3: Statistical Sampling Uncertainty

Lecture 3: Statistical sampling uncertainty. © Christopher S. Bretherton, Winter 2015.

3.1 Central limit theorem (CLT)

Let $X_1, \ldots, X_N$ be a sequence of $N$ independent identically-distributed (IID) random variables each with mean $\mu$ and standard deviation $\sigma$. Then

$$Z_N = \frac{X_1 + X_2 + \cdots + X_N - N\mu}{\sigma\sqrt{N}} \to n(0, 1) \quad \text{as } N \to \infty$$

To be precise, the arrow means that the CDF of the left hand side converges pointwise to the CDF of the 'standard normal' distribution on the right hand side. An alternative statement of the CLT is that

$$\frac{X_1 + X_2 + \cdots + X_N}{N} \sim n\!\left(\mu, \sigma/\sqrt{N}\right) \quad \text{as } N \to \infty \qquad (3.1.1)$$

where $\sim$ denotes asymptotic convergence; that is the ratio of the CDF of the left hand side to the normal distribution on the right hand side tends to 1 for large $N$. That is, regardless of the distribution of the $X_k$, given enough samples, their sample mean is approximately normally distributed with mean $\mu$ and standard deviation $\sigma_m = \sigma/\sqrt{N}$. For instance, for large $N$, the mean of $N$ Bernoulli random variables has an approximately normally-distributed CDF with mean $p$ and standard deviation $\sqrt{p(1 - p)/N}$. More generally, other quantities such as variances, trends, etc., also tend to have normal distributions even if the underlying data are not normally-distributed. This explains why Gaussian statistics work surprisingly well for many purposes.

Corollary: The product of $N$ IID RVs will asymptote to a lognormal distribution as $N \to \infty$.

The Central Limit Theorem Matlab example on the class web page shows the results of 100 trials of averaging $N = 20$ Bernoulli RVs with $p = 0.3$ (note that a Bernoulli RV is highly non-Gaussian!). -
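The averaging experiment described in this excerpt (100 trials, each the mean of N = 20 Bernoulli variables with p = 0.3) can be approximated with a short simulation. The following is a minimal Python sketch, not the class's Matlab example; the function names, the fixed seed, and the use of the standard-library `random` module are assumptions made for illustration.

```python
import math
import random
import statistics


def bernoulli_sample_means(n_trials=100, n=20, p=0.3, seed=0):
    """Return n_trials sample means, each averaging n Bernoulli(p) draws."""
    rng = random.Random(seed)  # fixed seed (an assumption) for repeatability
    return [sum(rng.random() < p for _ in range(n)) / n for _ in range(n_trials)]


def clt_prediction(n=20, p=0.3):
    """Mean and standard deviation of the sample mean predicted by the CLT."""
    return p, math.sqrt(p * (1.0 - p) / n)


means = bernoulli_sample_means()
mu, sigma_m = clt_prediction()
print(f"CLT prediction: mean {mu:.3f}, std {sigma_m:.3f}")
print(f"Simulation:     mean {statistics.mean(means):.3f}, "
      f"std {statistics.stdev(means):.3f}")
```

With only 100 trials the simulated mean and spread will scatter around the predicted values rather than match them exactly; increasing `n_trials` tightens the agreement.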

Comparison of Correlation, Partial Correlation, and Conditional Mutual

University of Arkansas, Fayetteville, ScholarWorks@UARK, Theses and Dissertations, 8-2018. Comparison of Correlation, Partial Correlation, and Conditional Mutual Information for Interaction Effects Screening in Generalized Linear Models. Ji Li, University of Arkansas, Fayetteville. Recommended Citation: Li, Ji, "Comparison of Correlation, Partial Correlation, and Conditional Mutual Information for Interaction Effects Screening in Generalized Linear Models" (2018). Theses and Dissertations. 2860. http://scholarworks.uark.edu/etd/2860. A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Statistics and Analytics by Ji Li, East China University of Science and Technology, Bachelor of Science in Food Quality and Safety, 2008. August 2018, University of Arkansas. This thesis is approved for recommendation to the Graduate Council. Qingyang Zhang, PhD, Thesis Director; Jyotishka Datta, PhD, Committee Member; Avishek Chakraborty, PhD, Committee Member. ABSTRACT: Numerous screening techniques have been developed in recent years for genome-wide association studies (GWASs) (Moore et al., 2010). In this thesis, a novel model-free screening method was developed and validated by an extensive simulation study.
Many screening methods were mainly focused on main effects, while very few studies considered the models containing both main effects and interaction effects.