Downloading the Video to Their Device (See Figure 3-63)

Recommended publications

Rns Over the Long Term and Critical to Enabling This Is Continued Investment in Our Technology and People, a Capital Allocation Priority

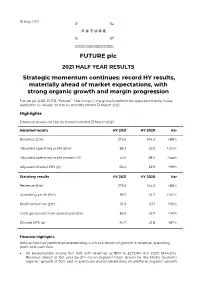

19 May 2021

FUTURE plc 2021 HALF YEAR RESULTS

Strategic momentum continues: record HY results, materially ahead of market expectations, with strong organic growth and margin progression

Future plc (LSE: FUTR, “Future”, “the Group”), the global platform for specialist media, today publishes its results for the six months ended 31 March 2021.

Highlights

Financial results for the six months ended 31 March 2021

| Adjusted results | HY 2021 | HY 2020 | Var |
| --- | --- | --- | --- |
| Revenue (£m) | 272.6 | 144.3 | +89% |
| Adjusted operating profit (£m)¹ | 89.2 | 39.9 | +124% |
| Adjusted operating profit margin (%) | 33% | 28% | +5ppt |
| Adjusted diluted EPS (p) | 65.4 | 32.9 | +99% |

| Statutory results | HY 2021 | HY 2020 | Var |
| --- | --- | --- | --- |
| Revenue (£m) | 272.6 | 144.3 | +89% |
| Operating profit (£m) | 59.7 | 24.7 | +142% |
| Profit before tax (£m) | 56.9 | 27.1 | +110% |
| Cash generated from operations (£m) | 85.9 | 35.7 | +141% |
| Diluted EPS (p) | 40.7 | 21.8 | +87% |

Financial highlights

Robust first-half performance extending our track record of growth in revenue, operating profit and cash flow:

An exceptionally strong first half, with revenue up 89% to £272.6m (HY 2020: £144.3m).

Revenue ahead of last year by 21% on an organic² basis, driven by the Media division’s organic² growth of 30% and in particular digital advertising on-platform organic² growth of 30% and eCommerce affiliates’ organic² growth of 56%.

US achieved revenue growth of 31% on an organic² basis and UK revenues grew by 5% organically (UK has a higher mix of events and magazines revenues which were impacted more materially by the pandemic).
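The year-on-year variances quoted in the results summary can be checked with simple arithmetic. The sketch below is only an illustration: the dictionary keys and helper names (`pct_change`, `margin_pct`) are made up for this example, and it assumes the "Var" column is the percentage change rounded to the nearest whole percent.

```python
# Half-year figures as quoted in the summary: (HY 2021, HY 2020).
FIGURES = {
    "revenue": (272.6, 144.3),
    "adjusted_operating_profit": (89.2, 39.9),
    "adjusted_diluted_eps": (65.4, 32.9),
    "operating_profit": (59.7, 24.7),
    "profit_before_tax": (56.9, 27.1),
    "cash_from_operations": (85.9, 35.7),
    "diluted_eps": (40.7, 21.8),
}


def pct_change(current: float, prior: float) -> int:
    """Year-on-year change, rounded to the nearest whole percent."""
    return round((current / prior - 1) * 100)


def margin_pct(profit: float, revenue: float) -> int:
    """Profit as a share of revenue, rounded to the nearest whole percent."""
    return round(profit / revenue * 100)


# Recompute every variance from the two half-year values.
variances = {name: pct_change(cur, prev) for name, (cur, prev) in FIGURES.items()}

# Adjusted operating margin for each half year, and the change in points.
margin_2021 = margin_pct(89.2, 272.6)
margin_2020 = margin_pct(39.9, 144.3)
margin_change_ppt = margin_2021 - margin_2020

for name, var in variances.items():
    print(f"{name}: +{var}%")
print(f"adjusted operating margin: {margin_2021}% vs {margin_2020}% (+{margin_change_ppt}ppt)")
```

Under that rounding assumption, the recomputed values agree with the quoted variances, including the 5 percentage point margin improvement.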

Audio Middleware the Essential Link from Studio to Game Design

AUDIONEXT
By Alexander Brandon

Audio Middleware: The Essential Link From Studio to Game Design

When I first played games such as Pac Man and Asteroids in the early ’80s, I was fascinated. While others saw a cute, beeping box, I saw something to be torn open and explored. How could these games create sounds I’d never heard before? Back then, it was transistors, followed by simple, solid-state sound generators programmed with individual memory registers, machine code and dumb terminals. Now, things are more complex. We’re no longer at the mercy of 8-bit, or handing a sound to a programmer, and saying, “Put it in.” Today, game audio engineers have just as much power to create an exciting soundscape as anyone at Skywalker Ranch. (Well, okay, maybe not Randy Thom, but close, right?)

But just as a single-channel strip on a Neve or SSL once baffled me, sound-bank manipulation can baffle your average recording engineer.

[...] GameCODA. The same is true of Renderware native audio tools. One caveat: Criterion is now owned by Electronic Arts. The Renderware site was last updated in 2005, and many developers are scrambling to Unreal 3 due to uncertainty of Renderware’s future. Pity, it’s a pretty good engine.

Streaming is supported, though it is not revealed how it is supported on next-gen consoles. What is nice is you can specify whether you want a sound streamed or not within CAGE Producer. GameCODA also provides the ability to create ducking/mixing groups within CAGE. In code, this can also be taken advantage of using virtual voice channels.

Other than SoundMAX (an older audio engine by Analog Devices and Staccato), GameCODA was the first audio engine I’ve seen that uses matrix technology to

Professional Work

www.marcsoskin.com [email protected]

Professional Work

The Outer Worlds
Obsidian Entertainment
Area Designer, March 2018 – April 2020

The Outer Worlds is a FPS RPG with a focus on player choice and reactivity. As designers, we had a mandate to design scenarios where the player could complete quests through combat, stealth or dialogue, while making difficult narrative choices along the way.

Responsibilities

• Writer:
  ○ Provided additional writing, including dialogue in the intro area, Space-Crime Continuum and all the dialogue and text associated with the quest "Why Call Them Back from Retirement?"
  ○ As part of the narrative team, won the 2019 Nebula Award for Best Game Writing
• Strike Team Lead:
  ○ g, along with the rest of the game'
  ○ After alpha, I was responsible for a team of artists, designers and writers whose goal was to revise and polish two planets – Monarch and Tartarus – to shippable quality. In addition to management and design work, I reviewed dialogue, assisted with optimization and eventually assumed responsibility for all design bugs as designers moved on to other assignments
• Area Designer:
  ○ Primary designer for the quest "Slaughterhouse Clive" and its associated level. I drove a demo of this level at E3 2019, where it won numerous awards including the Game Critics Award for Best Original Game
  ○ Primary designer for the 6 Monarch faction quests. Helped design the intro/tutorial area and SubLight Salvage quest line and took them to an alpha state. Initial designer on 3 side quests in Byzantium, from documentation through alpha. Helped design the game’s final level (Tartarus) and bring it from alpha to ship.

THIEF PS4 Manual

See important health and safety warnings in the system Settings menu.

GETTING STARTED

PlayStation®4 system

Starting a game: Before use, carefully read the instructions supplied with the PS4™ computer entertainment system. The documentation contains information on setting up and using your system as well as important safety information. Touch the (power) button of the PS4™ system to turn the system on. The power indicator blinks in blue, and then lights up in white. Insert the Thief disc with the label facing up into the disc slot. The game appears in the content area of the home screen. Select the software title in the PS4™ system’s home screen, and then press the S button. Refer to this manual for information on using the software.

Quitting a game: Press and hold the p button, and then select [Close Application] on the screen that is displayed.

Returning to the home screen from a game: To return to the home screen without quitting a game, press the p button. To resume playing the game, select it from the content area.

Removing a disc: Touch the (eject) button after quitting the game.

Trophies: Earn, compare and share trophies that you earn by making specific in-game accomplishments. Trophies access requires a Sony Entertainment Network account.

Default controls:

• Xxxxxx xxxxxx: left stick
• Xxxxxx xxxxxx: R button
• Xxxxxx xxxxxx: E button
• Xxxxxx xxxxxx: W button
• Xxxxxx xxxxxx: K button
• Xxxxxx xxxxxx: right stick
• Xxxxxx xxxxxx: N button
• Xxxxxx xxxxxx (Xxxxxx xxxxxxx): H button
• Xxxxxx xxxxxx (Xxxxxx xxxxxxx): J button
• Xxxxxx xxxxxx (Xxxxxx xxxxxxx): Q button
• Xxxxxx xxxxxx (Xxxxxx xxxxxxx): Click touchpad

MORE INFORMATION to Use a Safe Boot Option, Follow These Steps

MORE INFORMATION

To use a Safe Boot option, follow these steps:

1. Restart your computer and start pressing the F8 key on your keyboard. On a computer that is configured for booting to multiple operating systems, you can press the F8 key when the Boot Menu appears.
2. Select an option when the Windows Advanced Options menu appears, and then press ENTER.
3. When the Boot menu appears again, and the words "Safe Mode" appear in blue at the bottom, select the installation that you want to start, and then press ENTER.

Description of Safe Boot options

• Safe Mode (SAFEBOOT_OPTION=Minimal): This option uses a minimal set of device drivers and services to start Windows.
• Safe Mode with Networking (SAFEBOOT_OPTION=Network): This option uses a minimal set of device drivers and services to start Windows together with the drivers that you must have to load networking.
• Safe Mode with Command Prompt (SAFEBOOT_OPTION=Minimal(AlternateShell)): This option is the same as Safe mode, except that Cmd.exe starts instead of Windows Explorer.
• Enable VGA Mode: This option starts Windows in 640 x 480 mode by using the current video driver (not Vga.sys). This mode is useful if the display is configured for a setting that the monitor cannot display. Note: Safe mode and Safe mode with Networking load the Vga.sys driver instead.
• Last Known Good Configuration: This option starts Windows by using the previous good configuration.
• Directory Service Restore Mode: This mode is valid only for Windows-based domain controllers. This mode performs a directory service repair.
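As a rough illustration of how a script might tell these modes apart, the sketch below maps the `SAFEBOOT_OPTION` values listed above to their menu names. It assumes the value is exposed to processes as an environment variable called `SAFEBOOT_OPTION`, which the excerpt's notation suggests but does not state outright. The function name and the "normal boot" message are hypothetical, and the exact string reported for Safe Mode with Command Prompt may differ from the one shown in the list.

```python
import os
from typing import Mapping, Optional

# Values copied from the option list above; the menu names are the labels
# used in that list.
SAFE_BOOT_MODES = {
    "Minimal": "Safe Mode",
    "Network": "Safe Mode with Networking",
    "Minimal(AlternateShell)": "Safe Mode with Command Prompt",
}


def describe_safe_boot(env: Optional[Mapping[str, str]] = None) -> str:
    """Return a human-readable name for the current boot mode.

    `env` defaults to the process environment; passing a plain dict makes
    the function easy to exercise on any platform.
    """
    env = os.environ if env is None else env
    value = env.get("SAFEBOOT_OPTION")
    if value is None:
        # Assumption: the variable is simply absent on a normal boot.
        return "Normal boot (SAFEBOOT_OPTION not set)"
    return SAFE_BOOT_MODES.get(value, f"Unrecognized SAFEBOOT_OPTION: {value}")


if __name__ == "__main__":
    print(describe_safe_boot())
```

Calling `describe_safe_boot({"SAFEBOOT_OPTION": "Network"})` returns the networking label, which makes the lookup easy to try without actually rebooting into Safe Mode.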

Sony Corporation Announces Signing of a Definitive Agreement for the Acquisition of Equity Interest in Insomniac Games, Inc

August 19, 2019
Sony Corporation

Sony Corporation Announces Signing of a Definitive Agreement for the Acquisition of Equity Interest in Insomniac Games, Inc. by its Wholly-owned Subsidiary

August 19, 2019 (Pacific Standard Time) – Sony Interactive Entertainment LLC (“SIE”), a wholly-owned subsidiary of Sony Corporation (“Sony”), today announced that SIE has entered into definitive agreements to acquire the entire equity interest in Insomniac Games, Inc. Completion of the acquisition is subject to regulatory approvals and certain other closing conditions. Financial terms of this transaction are not disclosed due to contractual commitments. For further details, please refer to the attached press release.

This transaction is not anticipated to have a material impact on Sony's forecast for its consolidated financial results for the fiscal year ending March 31, 2020.

SONY INTERACTIVE ENTERTAINMENT TO ACQUIRE INSOMNIAC GAMES, DEVELOPER OF PLAYSTATION®4 TOP-SELLING MARVEL’S SPIDER-MAN, RATCHET & CLANK

~Highly-acclaimed Game Creators to Join Sony Interactive Entertainment’s Worldwide Studios to Deliver Extraordinary Gaming Experiences~

SAN MATEO, Calif., August 19, 2019 - Sony Interactive Entertainment (“SIE”) announced today that SIE has entered into definitive agreements to acquire Insomniac Games, Inc. (“Insomniac Games”), a leading game developer and long-time partner of SIE, in its entirety. Insomniac Games is the developer of PlayStation®4’s (PS4™) top-selling Marvel’s Spider-Man and the hugely popular PlayStation® Ratchet & Clank franchise. Upon completion of the acquisition, Insomniac Games will join the global development operation of Sony Interactive Entertainment Worldwide Studios (“SIE WWS”). Insomniac Games is the 14th studio to join the SIE WWS family.

Sector Magazin 11/2012

GAMING MAGAZINE 11/2012

FAR CRY 3, COD: BLACK OPS 2, HITMAN ABSOLUTION, HALO 4

EDITORIAL

Before we knew it, December is here, and with it a slowing of the pace. The November harvest has thundered past, its earnings overshadowing the opening weeks of blockbuster films. What usually remains afterwards is wind in the wallets and scattered posters of small titles, trampled by millions of players who prefer big names. Far Cry 3 is not one of those small titles, but it arrived late, after all the premieres, and still brought us to our knees. It is probably the last game this year to take the maximum possible score of 10/10. Ubisoft managed to build on the legacy of the first installment, bring freedom back to the exotic island, and put the reins in players' hands when making decisions in an open world full of opportunities. It is interesting from another angle too: while it is freezing here, there you can hunt sharks in crystal-clear water with palm trees at your back. Far Cry 3 was not the definitive end of the gaming year: Telltale Games concluded the excellent episodic series The Walking Dead, and Nintendo managed to bring the new Wii U console to market. But more on that next time.

Pavol Buday

PUBLISHED BY: SECTOR s.r.o.
EDITORIAL STAFF: Peter Dragula, Branislav Kohút, Matúš Štrba, Vladimír Pribila, Jaroslav Otčenáš, Michal Korec, Juraj Malíček, Ján Kordoš
LAYOUT: Peter Dragula
EDITOR-IN-CHIEF: Pavol Buday
Articles can be found at www.sector.sk

CONTENTS

IMPRESSIONS, REVIEWS, TECH, USERS: NextGen Expo, Far Cry 3, Wii mini unveiled, Project Cars, Hawken, Halo 4, Surface Pro, Exhumed, War Z, Call of Duty Black Ops 2, Playstation 3 12GB, Hitman Absolution, NFS Most Wanted, Euro Truck Simulator 2, Playstation AllStars, Walking Dead, Air Conflict Pacific Carriers, LittleBigPlanet Karting, Wonderbook, Assassins Creed 3 Liberation, 007 Legends, Dance Central 3 vs Just Dance 4

FILMS: Cloud Atlas, Seven Psychopaths

NEXTGEN EXPO 2012

THOSE WHO CAME SAW AND EXPERIENCED IT; THOSE WHO DID NOT CAN ONLY REGRET IT.

Metadefender Core V4.12.2

MetaDefender Core v4.12.2

© 2018 OPSWAT, Inc. All rights reserved. OPSWAT®, Metadefender™ and the OPSWAT logo are trademarks of OPSWAT, Inc. All other trademarks, trade names, service marks, service names, and images mentioned and/or used herein belong to their respective owners.

Table of Contents

About This Guide
Key Features of Metadefender Core
1. Quick Start with Metadefender Core
1.1. Installation
Operating system invariant initial steps
Basic setup
1.1.1. Configuration wizard
1.2. License Activation
1.3. Scan Files with Metadefender Core
2. Installing or Upgrading Metadefender Core
2.1. Recommended System Requirements
System Requirements For Server
Browser Requirements for the Metadefender Core Management Console
2.2. Installing Metadefender
Installation
Installation notes
2.2.1. Installing Metadefender Core using command line
2.2.2. Installing Metadefender Core using the Install Wizard
2.3. Upgrading MetaDefender Core
Upgrading from MetaDefender Core 3.x
Upgrading from MetaDefender Core 4.x
2.4. Metadefender Core Licensing
2.4.1. Activating Metadefender Licenses
2.4.2. Checking Your Metadefender Core License
2.5. Performance and Load Estimation
What to know before reading the results: Some factors that affect performance
How test results are calculated
Test Reports
Performance Report - Multi-Scanning On Linux
Performance Report - Multi-Scanning On Windows
2.6. Special installation options
Use RAMDISK for the temp directory
3. Configuring Metadefender Core
3.1. Management Console
3.2.

The Cross Over Talk

Programming Composers and Composing Programmers
Victoria Dorn – Sony Interactive Entertainment

About Me
• Berklee College of Music (2013) – Sound Design/Composition
• Oregon State University (2018) – Computer Science
• Audio Engineering Intern -> Audio Engineer -> Software Engineer
• Associate Software Engineer in Research and Development at PlayStation
• 3D Audio for PS4 (PlayStation VR, Platinum Wireless Headset)
• Testing, general research, recording, and developer support

Agenda
• Programming tips/tricks for the audio person
• Audio and sound tips/tricks for the programming person
• Creating a dialog and establishing vocabulary
• Raise the level of common understanding between sound people and programmers
• Q&A

Media Files Used in This Presentation
• Can be found here
• https://drive.google.com/drive/folders/1FdHR4e3R4p59t7ZxAU7pyMkCdaxPqbKl?usp=sharing

Programming Tips for the ?!?!?! Audio Folks
"Binary Code" by Cncplayer is licensed under CC BY-SA 3.0

Music/Audio Programming
• DAWs = Programming Language(s)
• Musical Motives = Programming Logic
• Instruments = APIs or Libraries

Where to Start??
• Learning the Language
• Pseudocode
• Scripting

Learning the Language
• Programming Fundamentals
• Variables (a value with a name)
  soundVolume = 10
• Loops (works just like looping a sound actually)
  for (loopCount = 0; while loopCount < 10; increase loopCount by 1){ play audio file one time }
• If/else logic (if this is happening do this, else do something different)
  if (the sky is blue){ play bird sounds } else{ play rain sounds
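The three fundamentals in the slide pseudocode (a variable, a loop, and if/else logic) could be written in real code roughly as follows. This is only a sketch in Python, not code from the talk: `play` is a hypothetical stand-in that reports what would be played instead of driving an audio device, and `ambience` is an invented name for the sky check.

```python
def play(sound: str) -> str:
    """Hypothetical stand-in for real playback: report what would play."""
    message = f"playing {sound}"
    print(message)
    return message


# Variable: a value with a name.
sound_volume = 10

# Loop: repeat the same action ten times, like looping a sound.
played = []
for loop_count in range(10):
    played.append(play("audio file"))


# If/else: choose between two actions based on a condition.
def ambience(sky_is_blue: bool) -> str:
    if sky_is_blue:
        return play("bird sounds")
    else:
        return play("rain sounds")


ambience(sky_is_blue=True)
```

`range(10)` counts from 0 to 9, which plays the role of the slide's "start at 0, continue while below 10, increase by 1" loop header.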

How to Get Away with Copyright Infringement: Music Sampling As Fair Use

THIS VERSION MAY CONTAIN INACCURATE OR INCOMPLETE PAGE NUMBERS. PLEASE CONSULT THE PRINT OR ONLINE DATABASE VERSIONS FOR THE PROPER CITATION INFORMATION.

NOTE

HOW TO GET AWAY WITH COPYRIGHT INFRINGEMENT: MUSIC SAMPLING AS FAIR USE

SAM CLAFLIN¹

CONTENTS

I. INTRODUCTION
II. BACKGROUND
A. History
i. Foundations of Copyright Law with Respect to Music
ii. Music Sampling and Copyright
B. The Fair Use Doctrine
i. Fair Use and Appropriation Art
III. A CONTEMPORARY PERSPECTIVE ON MUSIC SAMPLING AND FAIR USE: ESTATE OF SMITH V. CASH MONEY RECORDS, INC.
A. Background
B. Analysis – An In-Depth Look at the Court’s Fair Use Approach in Estate of Smith
i. Factor One
ii. Factor Two
iii. Factor Three

Products of Interest

Products of Interest

Gibber JavaScript Live-Coding Environment

Gibber is a live-coding environment for Google Chrome. It is written completely in JavaScript and can be used within any Web page. It uses the audioLib.js library, which was created by Finnish musician and programmer Jussi Kalliokoski. Gibber extends this library to add extra effects, sequencing, and automated audio graph management. The environment provides the user with a simple syntax, sample accurate timings for synthesis and sequencing, and a simple model for networked use, and it supports music notes and chords through the teoria.js library.

Six types of oscillators are avail- [...] background, auditioning it through headphones and pushing it to the master computer when they wish to. A number of video demonstrations of Gibber being used in performance is available on the developer’s Web site.

Gibber is available as a free download. Contact: Charlie Roberts, electronic mail charlie@charlie-roberts.com; Web www.charlie-roberts.com/gibber/ and github.com/charlieroberts/Gibber/.

Overtone Live-Coding Audio Environment

Overtone is an open source audio environment designed for live coding. [...]

Overtone is available as a free download. Contact: Sam Aaron, University of Cambridge Computer Laboratory, William Gates Building, 15 JJ Thomson Avenue, Cambridge CB3 0FD, UK; electronic mail [email protected]; Web overtone.github.io/.

TIAALS Analysis Tools for Electroacoustic Music

Tools for the Interactive Aural Analysis (TIAALS) of Electroacoustic music is the result of a research project between the University of Huddersfield and Durham University in the UK. It is a set of tools for analysis and in-

Adaptive Audio in INSIDE and 140

Adaptive Audio in INSIDE and 140
SpilBar 41
Jakob Schmid
slides available at https://www.schmid.dk/talks/

Who Am I?
Jakob Schmid
● INSIDE, Playdead, audio programmer
● 140, Carlsen Games, music and sound design
● Co-founded new game studio in 2017 ?

Awards
● IGF award 2013: Excellence in Audio; Honorable mention: Technical Excellence
● Spilprisen 2014: Sound of the Year
● Nordic Game Award 2014: Artistic Achievement
● Game Developers Choice Awards 2016: Best Audio, Best Visual Art
● Game Critics Awards 2016: Best Independent Game
● The Game Awards 2016: Best Art Direction, Best Independent Game
● DICE Awards 2016: Spirit Award, Art Direction, Game Direction
● 13th British Academy Games Awards: Artistic Achievement, Game Design, Narrative, Original Property
● The Edge Awards 2016: Best Audio Design

Adaptive Audio in INSIDE

INSIDE Audio Team
● Martin Stig Andersen: audio director, sound designer, composer
● Andreas Frostholm: sound designer
● Søs Gunver Ryberg: composer, sound designer
● Jakob Schmid: audio programmer
Audio Engine: Audiokinetic Wwise

INSIDE Video

Animation Events
● Associated with a specific animation
● Occur at a specific animation frame
● Can trigger sounds or visual effects
Example event: footstep

Context-Sensitive Events
● previous action: idle, current action: jog: play sound 'takeoff_mf' ...

Context-Sensitive Events
● If previous action was 'sneak', a different sound is played
● previous action: sneak, current action: jog: play sound 'walk'

Wet Animation Events
● Shoes can get wet
● Adds wet sound on top of footstep
● Wetness value is used to set volume
  ○ Is set high when in water or on a wet surface
  ○ Dries out over time

Elbow Brush Sounds
brush brush

Voice Sequencer

Continuous Voice Sequencing
● Recorded breath sounds have varying durations
● 'Stitching' recorded sounds together results in natural, uneven breathing pattern
switches br.
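The context-sensitive events and the wetness value described on these slides can be sketched in a few lines. What follows is a minimal illustration, not the game's implementation (the slides name Audiokinetic Wwise as the actual audio engine). The lookup table, the `Shoes` class, the default sound name and the drying rate are all assumptions made for this example; only the two transitions and their sound names ('takeoff_mf', 'walk') come from the slides.

```python
from dataclasses import dataclass

# (previous action, current action) -> sound, taken from the two slide examples.
TRANSITION_SOUNDS = {
    ("idle", "jog"): "takeoff_mf",
    ("sneak", "jog"): "walk",
}


def footstep_sound(previous_action: str, current_action: str,
                   default: str = "footstep") -> str:
    """Pick a sound for an animation event based on the action transition.

    The fallback name 'footstep' is an assumption for this sketch.
    """
    return TRANSITION_SOUNDS.get((previous_action, current_action), default)


@dataclass
class Shoes:
    wetness: float = 0.0   # 0.0 = dry, 1.0 = soaked
    dry_rate: float = 0.1  # assumed wetness lost per second

    def update(self, dt: float, on_wet_surface: bool) -> None:
        """Set wetness high on wet ground, otherwise dry out over time."""
        if on_wet_surface:
            self.wetness = 1.0
        else:
            self.wetness = max(0.0, self.wetness - self.dry_rate * dt)

    def wet_layer_volume(self) -> float:
        """Volume of the wet sound layered on top of the footstep."""
        return self.wetness


shoes = Shoes()
shoes.update(dt=0.5, on_wet_surface=True)   # step into water
shoes.update(dt=5.0, on_wet_surface=False)  # five seconds on dry ground
print(footstep_sound("idle", "jog"), shoes.wet_layer_volume())
```

Keeping the transition table separate from the wetness state mirrors the slides' split between which sound an event triggers and how loud the extra wet layer is.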