Understanding the U.S. News Law School Rankings

Recommended publications

PATHWAY to LAW Building a Diversity Pipeline Into the Law

PATHWAY TO LAW: Building a Diversity Pipeline into the Law

A PARTNERSHIP OF: The State Bar of California; The California Lawyers Association; The California Department of Education; The California Community College Chancellor’s Office; Loyola Marymount University and Loyola Law School; The University of California Undergraduate and Law School @ Davis, Berkeley, UCLA, and Irvine; Santa Clara University and School of Law; University of San Francisco and School of Law; University of Southern California and School of Law; Pepperdine University and School of Law.

State Bar of California 2004 Long Term Strategic Plan

Values Statement: The State Bar of California believes in Diversity and Broad Participation in Bar Membership and Leadership.

Mission: The State Bar of California's mission is to protect the public and includes the primary functions of licensing, regulation and discipline of attorneys; the advancement of the ethical and competent practice of law; and support of efforts for greater access to, and inclusion in, the legal system.

Assembly Bill 3249: Signed by Governor Brown, September 2018. The bill requires the State Bar to develop and implement a plan to meet certain goals relating to access, fairness, and diversity in the legal profession and the elimination of bias in the practice of law. The bill would require the State Bar to prepare and submit a report on the plan and its implementation to the Legislature, by March 15, 2019, and every 2 years thereafter. The first Diversity Report to the Legislature was submitted by the State Bar of California in March 2019.

COUNCIL ON ACCESS AND FAIRNESS: Created in 2007. Think Tank for diversity in the legal profession. Supported creation of law academies in 2010 as the first “Boots on the Ground” diversity program. Launched the Pathway to Law Initiative in 2014.

The California Lawyers Association: The California Lawyers Association, formed in 2018, included Diversity and Inclusion in their Mission Statement.

Loyola Lawyer Law School Publications

Loyola Lawyer, Law School Publications, Fall 9-1-1990. Loyola Lawyer. Loyola Law School - Los Angeles. Follow this and additional works at: https://digitalcommons.lmu.edu/loyola_lawyer

Repository Citation: Loyola Law School - Los Angeles, "Loyola Lawyer" (1990). Loyola Lawyer. 24. https://digitalcommons.lmu.edu/loyola_lawyer/24

This Magazine is brought to you for free and open access by the Law School Publications at Digital Commons @ Loyola Marymount University and Loyola Law School. It has been accepted for inclusion in Loyola Lawyer by an authorized administrator of Digital Commons@Loyola Marymount University and Loyola Law School. For more information, please contact [email protected].

ALUMNI DINNER SET FOR NOVEMBER 15

The Loyola Law School Alumni Dinner honoring distinguished alumnus Professor William Coskran '59 and The Class of 1940 will be held Thursday, November 15, 1990 at the Sheraton Grande Hotel in downtown Los Angeles. The Distinguished Service Award will be presented to Coskran by the Loyola Law School Alumni Association for dedicated and humanitarian service to his school, profession and community. Past recipients of the Loyola Law School

Friedlander; John J. Karmelich, Charles H. Kent, James H. Kindel, Harry V. Leppek, Stanley P. Makay, Angus D. McDonald, John R. Morris, Hon. Thomas C. Murphy, P.E. Rau, George R. Stene, Steven Wixon and Jack E. Woods. Tickets for the dinner are $75 each or $750 for a table of ten. For reservations and ticket information, contact the Loyola Alumni Office at (213) 736-1096. Cocktails will be served at 6:00 p.m., and dinner at 7:30 p.m., in the Sheraton's Grande Ballroom.

Sande Lynn Buhai

SANDE LYNN BUHAI Loyola Law School 213 – 736 1156 919 Albany Street [email protected] Los Angeles, CA 90041 EDUCATION: Loyola Law School, Los Angeles, CA, J.D. 1982, cum laude (top 6%) Loyola International Law Journal, Note and Comment Editor Loyola Reporter, Staff Western State Scholarship Recipient Mabel Wilson Richards Scholarship Recipient St. Thomas More Law Honor Society Scott Moot Court Kings College of Law, University of London, UK, Summer 1981 University of California at Los Angeles, CA, B.A., English 1979 Daily Bruin, Staff PROFESSIONAL EXPERIENCE Loyola Law School, Los Angeles, CA Clinical Professor, 1994-present Visiting Professor, 1989-1994 Director, Public Interest Law Department, 1994-present Coordinator, Appellate Advocacy Program, 2000-2016 Chair, Admissions Committee, 2013-15 Member, Admissions Committee, Clinics and Externships Committee, Advocacy Committee Courses taught: Ethical Lawyering, Administrative Law, Trial Advocacy, Appellate Advocacy, Disability Rights, Legal Drafting, Law Practice Management, Public Interest Seminar, Animal Law, Introduction to Negotiation, Torts, and Legal Writing Southwestern University School of Law, Los Angeles, CA Visiting Professor, Sum 2005, 2006, 2007, Fall 2007, Sum 2008, Fall 2010 Courses taught: Disability Law, Animal Law University of International Business and Economics, Beijing, China Visiting Professor, Sum 1991 Course taught: U.S. Corporations 2019 – Sande Buhai CV Page 1 Western Law Center for Disability Rights, Los Angeles, CA Executive Director, 1989-1994 Responsible for managing the organization, supervising staff and students, and serving as lead attorney on complex disability rights litigation. Increased staff and budget by 100% over five years. Deputy Attorney General, State of California, 1984-1989 Represented the California Department of Consumer Affairs in civil and administrative actions. -

September 11, 2017 VIA EMAIL and PERSONAL DELIVERY the Honorable Tani G. Canti

September 11, 2017

VIA EMAIL AND PERSONAL DELIVERY

The Honorable Tani G. Cantil-Sakauye, Chief Justice and Associate Justices, SUPREME COURT OF CALIFORNIA, 350 McAllister Street, Room 1295, San Francisco, California 94902-4797

RE: The California Bar Exam – Adjustment to the Minimum Passing Score

Dear Chief Justice and Associate Justices:

The undersigned Deans of the California Accredited Law Schools (CALS) request leave to file this Letter Brief to ask the Court to exercise its inherent power to admit persons to practice law in California and to adjust the minimum passing score (cut score) of the California bar exam.[1] Following comprehensive study and analysis of minimum competence, the CALS join with many other stakeholders and experts, including the State Bar of California, in supporting a change in the minimum passing score of the California Bar Exam to 1390, as the one score that represents the intersection of research data, norms, current practice, and policy.

The CALS previously petitioned the Court on March 2, 2017 to request an adjustment to the minimum passing score from 1440 to 1350.[2] In response, the Court expressed its concern that it “lacks a fully developed analysis with supporting evidence from which to conclude that 1440 or another cut score would be most appropriate for admission to the bar in California.”[3] The Court directed the State Bar of California (State Bar) to conduct “a thorough and expedited investigation” that includes “a meaningful analysis of the current pass rate and information sufficient to determine whether protection of potential clients and the public is served by maintaining the current cut score.”[4]

[1] California Rules of Court, Rules 9.3(a) and 9.6(a), as amended and effective on January 1, 2018.

New Legal Realism at Ten Years and Beyond Bryant Garth

UC Irvine Law Review, Volume 6, Issue 2 (The New Legal Realism at Ten Years), Article 3, 6-2016. Introduction: New Legal Realism at Ten Years and Beyond. Bryant Garth, Elizabeth Mertz. Follow this and additional works at: https://scholarship.law.uci.edu/ucilr Part of the Law and Philosophy Commons.

Recommended Citation: Bryant Garth & Elizabeth Mertz, Introduction: New Legal Realism at Ten Years and Beyond, 6 U.C. Irvine L. Rev. 121 (2016). Available at: https://scholarship.law.uci.edu/ucilr/vol6/iss2/3

This Foreword is brought to you for free and open access by UCI Law Scholarly Commons. It has been accepted for inclusion in UC Irvine Law Review by an authorized editor of UCI Law Scholarly Commons.

Introduction: New Legal Realism at Ten Years and Beyond

Bryant Garth* and Elizabeth Mertz**

I. Celebrating Ten Years of New Legal Realism
II. A Developing Tradition
III. Current Realist Directions: The Symposium Articles
Conclusion: Moving Forward

I. CELEBRATING TEN YEARS OF NEW LEGAL REALISM

This symposium commemorates the tenth year that a body of research has formally flown under the banner of New Legal Realism (NLR).[1] We are very pleased

* Chancellor’s Professor of Law, University of California, Irvine School of Law; American Bar Foundation, Director Emeritus.

** Research Faculty, American Bar Foundation; John and Rylla Bosshard Professor, University of Wisconsin Law School. Many thanks are owed to Frances Tung for her help in overseeing part of the original Tenth Anniversary NLR conference, as well as in putting together some aspects of this Symposium.

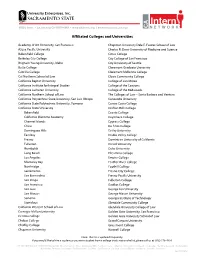

Affiliated Colleges and Universities

Affiliated Colleges and Universities Academy of Art University, San Francisco Chapman University Dale E. Fowler School of Law Azusa Pacific University Charles R. Drew University of Medicine and Science Bakersfield College Citrus College Berkeley City College City College of San Francisco Brigham Young University, Idaho City University of Seattle Butte College Claremont Graduate University Cabrillo College Claremont McKenna College Cal Northern School of Law Clovis Community College California Baptist University College of San Mateo California Institute for Integral Studies College of the Canyons California Lutheran University College of the Redwoods California Northern School of Law The Colleges of Law – Santa Barbara and Ventura California Polytechnic State University, San Luis Obispo Concordia University California State Polytechnic University, Pomona Contra Costa College California State University Crafton Hills College Bakersfield Cuesta College California Maritime Academy Cuyamaca College Channel Islands Cypress College Chico De Anza College Dominguez Hills DeVry University East Bay Diablo Valley College Fresno Dominican University of California Fullerton Drexel University Humboldt Duke University Long Beach El Camino College Los Angeles Empire College Monterey Bay Feather River College Northridge Foothill College Sacramento Fresno City College San Bernardino Fresno Pacific University San Diego Fullerton College San Francisco Gavilan College San Jose George Fox University San Marcos George Mason University Sonoma Georgia Institute of Technology Stanislaus Glendale Community College California Western School of Law Glendale University College of Law Carnegie Mellon University Golden Gate University, San Francisco Cerritos College Golden Gate University School of Law Chabot College Grand Canyon University Chaffey College Grossmont College Chapman University Hartnell College Note: This list is updated frequently. -

Distinguishing Science from Philosophy: a Critical Assessment of Thomas Nagel's Recommendation for Public Education Melissa Lammey

Florida State University Libraries Electronic Theses, Treatises and Dissertations The Graduate School 2012 Distinguishing Science from Philosophy: A Critical Assessment of Thomas Nagel's Recommendation for Public Education Melissa Lammey Follow this and additional works at the FSU Digital Library. For more information, please contact [email protected] THE FLORIDA STATE UNIVERSITY COLLEGE OF ARTS & SCIENCES DISTINGUISHING SCIENCE FROM PHILOSOPHY: A CRITICAL ASSESSMENT OF THOMAS NAGEL’S RECOMMENDATION FOR PUBLIC EDUCATION By MELISSA LAMMEY A Dissertation submitted to the Department of Philosophy in partial fulfillment of the requirements for the degree of Doctor of Philosophy Degree Awarded: Spring Semester, 2012 Melissa Lammey defended this dissertation on February 10, 2012. The members of the supervisory committee were: Michael Ruse Professor Directing Dissertation Sherry Southerland University Representative Justin Leiber Committee Member Piers Rawling Committee Member The Graduate School has verified and approved the above-named committee members, and certifies that the dissertation has been approved in accordance with university requirements. ii For Warren & Irene Wilson iii ACKNOWLEDGEMENTS It is my pleasure to acknowledge the contributions of Michael Ruse to my academic development. Without his direction, this dissertation would not have been possible and I am indebted to him for his patience, persistence, and guidance. I would also like to acknowledge the efforts of Sherry Southerland in helping me to learn more about science and science education and for her guidance throughout this project. In addition, I am grateful to Piers Rawling and Justin Leiber for their service on my committee. I would like to thank Stephen Konscol for his vital and continuing support. -

Q&A with Baylor Law Judges

SPRING ‘15 SCHOOL OF LAW One Bear Place #97288 Waco, TX 76798-7288

Baylor Law is committed to being one of the smallest law schools in the nation. With a total student body of 383 (fall 2014), we are able to offer more personalized attention to each student.

Established in 1857, Baylor Law School is ranked third in the nation for advocacy by U.S.News & World Report. Every year, Baylor Law students achieve one of the highest bar passage rates in the country and enjoy an excellent career placement rate.

TOTAL STUDENT BODY, FALL 2014: Total students 383; 58% men, 42% women.

PROFILE OF ENTERING CLASS, FALL 2014: Entering students 83; 75th/25th GPA - 3.71/3.38 (Median 3.55); 75th/25th LSAT - 163/158 (Median 160).

Q&A WITH BAYLOR LAW JUDGES + BAYLOR LAW JUDGES ACROSS THE NATION

VOLUME 127 | SPRING ‘15 BAYLOR UNIVERSITY SCHOOL OF LAW MAGAZINE

Dean’s Letter; Alumni Notes; Student Notes; Commencement Photos; Faculty Notes; Adjunct Faculty Profiles; Obituaries; Out & Abouts; Back in Time

© Baylor University School of Law. All Rights Reserved.

A Message from Dean Toben

Docket Call is published by the Baylor University School of Law for its alumni, faculty, staff, students, supporters, and friends.

Applicants to Accredited Law Schools

Applicants to Accredited Law Schools, 2012-2013

| | WFU Seniors | WFU All | National Seniors | National All |
|---|---|---|---|---|
| Number of Applicants | 47 | 107 | 19,576 | 59,384 |
| Average LSAT Score | 156.4 | 157.9 | 153.8 | 153.1 |
| Percentile | 67th | 71st | 56th | 56th |
| Undergraduate GPA | 3.34 | 3.22 | 3.37 | 3.26 |
| Admitted to ABA Law School(s): Number | 42 | 94 | 16,769 | 45,700 |
| Admitted to ABA Law School(s): Percent | 89% | 88% | 86% | 77% |
| Enrolled at a Law School: Number | 36 | 83 | 14,672 | 37,936 |
| Enrolled at a Law School: Percent | 77% | 78% | 75% | 64% |
| Admissions per Applicant | 3.85 | 3.58 | 3.48 | 2.82 |

Law Schools Admitting Wake Forest Applicants, 2012-2013

An asterisk (*) indicates that a student from Wake Forest enrolled at the school.

Albany Law School of Union University*; Michigan State University College of Law; University of Chicago Law School; American University Washington College of Law*; Mississippi College of Law; University of Cincinnati College of Law; Appalachian School of Law; New England Law – Boston; University of Connecticut School of Law*; Atlanta’s John Marshall Law School*; New York Law School; University of Denver School of Law; Baylor University School of Law; New York University School of Law; University of the District of Columbia; Benjamin N. Cardozo School of Law*; North Carolina Central University*; University of Florida*; Boston College Law School; Northeastern University School of Law; University of Georgia School of Law; Boston University School of Law*; Northern Kentucky University; University of Houston Law Center; Brooklyn Law School*; Northwestern University School of Law; University of Idaho School of Law*; Campbell University*; Notre Dame Law School; University of Illinois College of Law; Charleston

Feb 2018 Cal Bar Exam

GENERAL STATISTICS REPORT, FEBRUARY 2018 CALIFORNIA BAR EXAMINATION [1]

OVERALL STATISTICS FOR CATEGORIES WITH MORE THAN 11 APPLICANTS WHO COMPLETED THE EXAMINATION

| Applicant Group | First-Timers (Took / Pass / %Pass) | Repeaters (Took / Pass / %Pass) | All Takers (Took / Pass / %Pass) |
|---|---|---|---|
| General Bar Examination | 1267 / 498 / 39.3 | 3434 / 784 / 22.8 | 4701 / 1282 / 27.3 |
| Attorneys’ Examination | 391 / 211 / 54.0 | 211 / 50 / 23.7 | 602 / 261 / 43.4 |
| Total | 1658 / 709 / 42.8 | 3645 / 834 / 22.9 | 5303 / 1543 / 29.1 |

DISCIPLINED ATTORNEYS EXAMINATION STATISTICS

| | Took | Pass | % Pass |
|---|---|---|---|
| CA Disciplined Attorneys | 25 | 0 | 0 |

GENERAL BAR EXAMINATION STATISTICS

| Law School Type | First-Timers (Took / Pass / %Pass) | Repeaters (Took / Pass / %Pass) | All Takers (Took / Pass / %Pass) |
|---|---|---|---|
| CA ABA Approved | 316 / 143 / 45.3 | 1423 / 445 / 31.3 | 1739 / 588 / 33.8 |
| Out-of-State ABA | 164 / 58 / 35.4 | 538 / 144 / 26.8 | 702 / 202 / 28.8 |
| CA Accredited | 122 / 28 / 23.0 | 570 / 52 / 9.1 | 692 / 80 / 11.6 |
| CA Unaccredited | 75 / 16 / 21.3 | 244 / 18 / 7.4 | 319 / 34 / 10.7 |
| Law Office/Judges’ Chambers | * | * | * |
| Foreign Educated/JD Equivalent + One Year US Education | 68 / 7 / 10.3 | 157 / 17 / 10.8 | 225 / 24 / 10.7 |
| US Attorneys Taking the General Bar Exam [2] | 310 / 204 / 65.8 | 140 / 64 / 45.7 | 450 / 268 / 59.6 |
| Foreign Attorneys Taking the General Bar Exam [3] | 198 / 38 / 19.2 | 312 / 44 / 14.1 | 510 / 82 / 16.1 |
| 4-Year Qualification [4] | * | 19 / 0 / 0 | 26 / 0 / 0 |
| Schools No Longer in Operation | * | 29 / 0 / 0 | 33 / 1 / 3.0 |

\* Fewer than 11 Applicants

[1] These statistics were compiled using data available as of the date results from the examination were released.

Realism v Equilibrism about Philosophy* Daniel Stoljar, ANU

Realism v Equilibrism about Philosophy* Daniel Stoljar, ANU Abstract: According to the realist about philosophy, the goal of philosophy is to come to know the truth about philosophical questions; according to what Helen Beebee calls equilibrism, by contrast, the goal is rather to place one’s commitments in a coherent system. In this paper, I present a critique of equilibrism in the form Beebee defends it, paying particular attention to her suggestion that various meta-philosophical remarks made by David Lewis may be recruited to defend equilibrism. At the end of the paper, I point out that a realist about philosophy may also be a pluralist about philosophical culture, thus undermining one main motivation for equilibrism. 1. Realism about Philosophy What is the goal of philosophy? According to the realist, the goal is to come to know the truth about philosophical questions. Do we have free will? Are morality and rationality objective? Is consciousness a fundamental feature of the world? From a realist point of view, there are truths that answer these questions, and what we are trying to do in philosophy is to come to know these truths. Of course, nobody thinks it’s easy, or at least nobody should. Looking over the history of philosophy, some people (I won’t mention any names) seem to succumb to a kind of triumphalism. Perhaps a bit of logic or physics or psychology, or perhaps just a bit of clear thinking, is all you need to solve once and for all the problems philosophers are interested in. Whether those who apparently hold such views really do is a difficult question. -

Contributors' Notes

Contributors' Notes

Frances E. Dolan is Assistant Professor of English at Miami University, Ohio. Her essays have appeared or are forthcoming in Medieval and Renaissance Drama, PMLA, and SEL. She is currently completing a book, Dangerous Familiars: Popular Accounts of Domestic Crime in England, 1550-1700, of which the present essay is a part.

Barbara Taback Schneider is a 1990 graduate of Harvard Law School. She is currently an attorney in Portland, Maine, with the firm of Murray, Plumb & Murray. The paper on which her article was based received the Irving Oberman Memorial Award from the Law School for the best essay by a graduating law student on a current legal topic. In 1990 that topic was legal history.

Brian Leiter is a graduate student in philosophy at the University of Michigan in Ann Arbor, where he is writing a dissertation on Nietzsche's critique of morality. A graduate of Princeton, he also holds a law degree from the University of Michigan and is a member of the New York Bar.

John Martin Fischer received his Ph.D. in philosophy from Cornell University in 1982. He taught for seven years in the philosophy department at Yale University as an assistant and associate professor. He is currently Professor of Philosophy at the University of California, Riverside. He has written on various topics in moral philosophy and metaphysics, especially free will and moral responsibility. Recently, he has published a book jointly edited with Mark Ravizza entitled Ethics: Problems and Principles (Harcourt Brace Jovanovich, 1991).

Mark Tushnet, Professor of Law, Georgetown University Law Center, has written on constitutional law and legal history.