Defending Against Malicious Peripherals with Cinch Sebastian Angel, the University of Texas at Austin and New York University; Riad S

Recommended publications

Linux Hardware Compatibility HOWTO

Linux Hardware Compatibility HOWTO Steven Pritchard Southern Illinois Linux Users Group [email protected] 3.1.5 Copyright © 2001−2002 by Steven Pritchard Copyright © 1997−1999 by Patrick Reijnen 2002−03−28 This document attempts to list most of the hardware known to be either supported or unsupported under Linux. Table of Contents: 1. Introduction; 1.1. Notes on binary−only drivers; 1.2. Notes on commercial drivers; 1.3. System architectures; 1.4. Related sources of information; 1.5. Known problems with this document; 1.6. New versions of this document; 1.7. Feedback and corrections; 1.8. Acknowledgments

Apollo Twin USB Hardware Manual

Apollo Twin USB Hardware Manual Manual Version 210429 www.uaudio.com A Letter from Bill Putnam Jr. Thank you for deciding to make the Apollo Twin High-Resolution Interface part of your music making experience. We know that any new piece of gear requires an investment of time and money — and our goal is to make your investment pay off. The fact that we get to play a part in your creative process is what makes our efforts meaningful, and we thank you for this. In many ways, the Apollo family of audio interface products represent the best examples of what Universal Audio has stood for over its long history; from UA’s original founding in the 1950s by my father, through our current vision of delivering the best of both analog and digital audio technologies. Starting with its high-quality analog I/O, Apollo Twin’s superior sonic performance serves as its foundation. This is just the beginning however, as Apollo Twin is the only desktop audio interface that allows you to run UAD plug-ins in real time. Want to monitor yourself through a Neve® console channel strip while tracking bass through a classic Fairchild or LA-2A compressor? Or how about tracking vocals through a Studer® tape machine with some added Lexicon® reverb?* With our growing library of more than 90 UAD plug-ins, the choices are limitless. At UA, we are dedicated to the idea that this powerful technology should ultimately serve the creative process — not be a barrier. These are the very ideals my father embodied as he invented audio equipment to solve problems in the studio. -

TECHNICAL MANUAL of Intel H110 Express Chipset Based Mini-ITX

TECHNICAL MANUAL Of Intel H110 Express Chipset Based Mini-ITX M/B. NO. G03-NF693-F. Revision: 3.0. Release date: July 19, 2017. Trademark: * Specifications and Information contained in this documentation are furnished for information use only, and are subject to change at any time without notice, and should not be construed as a commitment by manufacturer. Environmental Protection Announcement: Do not dispose this electronic device into the trash while discarding. To minimize pollution and ensure environment protection of mother earth, please recycle. TABLE OF CONTENT: ENVIRONMENTAL SAFETY INSTRUCTION; USER’S NOTICE; MANUAL REVISION INFORMATION; ITEM CHECKLIST; CHAPTER 1 INTRODUCTION OF THE MOTHERBOARD: 1-1 FEATURE OF MOTHERBOARD, 1-2 SPECIFICATION, 1-3 LAYOUT DIAGRAM; CHAPTER 2 HARDWARE INSTALLATION: 2-1 JUMPER SETTING

Embedded Android

Praise for Embedded Android “This is the definitive book for anyone wanting to create a system based on Android. If you don’t work for Google and you are working with the low-level Android interfaces, you need this book.” —Greg Kroah-Hartman, Core Linux Kernel Developer “If you or your team works on creating custom Android images, devices, or ROM mods, you want this book! Other than the source code itself, this is the only place where you’ll find an explanation of how Android works, how the Android build system works, and an overall view of how Android is put together. I especially like the chapters on the build system and frameworks (4, 6, and 7), where there are many nuggets of information from the AOSP source that are hard to reverse-engineer. This book will save you and your team a lot of time. I wish we had it back when our teams were starting on the Frozen Yogurt version of Android two years ago. This book is likely to become required reading for new team members working on Intel Android stacks for the Intel reference phones.” —Mark Gross, Android/Linux Kernel Architect, Platform System Integration/Mobile Communications Group/Intel Corporation “Karim methodically knocks out the many mysteries Android poses to embedded system developers. This book is a practical treatment of working with the open source software project on all classes of devices, beyond just consumer phones and tablets. I’m personally pleased to see so many examples provided on affordable hardware, namely BeagleBone, not just on emulators.” —Jason Kridner, Sitara Software Architecture Manager at Texas Instruments and cofounder of BeagleBoard.org “This book contains information that previously took hundreds of hours for my engineers to discover.

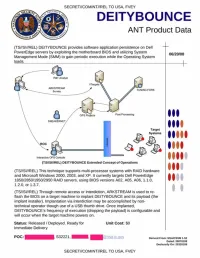

NSA ANT Catalog PDF from EFF.Org

SECRET//COMINT//REL TO USA, FVEY. DEITYBOUNCE ANT Product Data. (TS//SI//REL) DEITYBOUNCE provides software application persistence on Dell PowerEdge servers by exploiting the motherboard BIOS and utilizing System Management Mode (SMM) to gain periodic execution while the Operating System loads. [Figure: DEITYBOUNCE Extended Concept of Operations; legible label: Target Systems] (TS//SI//REL) This technique supports multi-processor systems with RAID hardware and Microsoft Windows 2000, 2003, and XP. It currently targets Dell PowerEdge 1850/2850/1950/2950 RAID servers, using BIOS versions A02, A05, A06, 1.1.0, 1.2.0, or 1.3.7. (TS//SI//REL) Through remote access or interdiction, ARKSTREAM is used to reflash the BIOS on a target machine to implant DEITYBOUNCE and its payload (the implant installer). Implantation via interdiction may be accomplished by a non-technical operator through use of a USB thumb drive. Once implanted, DEITYBOUNCE's frequency of execution (dropping the payload) is configurable and will occur when the target machine powers on. Status: Released / Deployed. Ready for Immediate Delivery. Unit Cost: $0. POC: S32221. Derived From: NSA/CSSM 1-52. Dated: 20070108. Declassify On: 20320108.
TOP SECRET//COMINT//REL TO USA, FVEY. IRONCHEF ANT Product Data, 07/14/08. (TS//SI//REL) IRONCHEF provides access persistence to target systems by exploiting the motherboard BIOS and utilizing System Management Mode (SMM) to communicate with a hardware implant that provides two-way RF communication. [Figure: CRUMPET Covert Closed Network (CCN) diagram showing a CCN server, CCN computers, and STRAITBIZARRE nodes; remaining labels illegible] (TS//SI//REL) IRONCHEF Extended Concept of Operations. (TS//SI/REL) This technique supports the HP Proliant 380DL G5 server, onto which a hardware implant has been installed that communicates over the I2C Interface (WAGONBED).

Configuring the Information Environment of Microcomputers with the Microsoft Windows 10 Operating System

Configuring the Information Environment of Microcomputers with the Microsoft Windows 10 Operating System. Felix Kasparinsky [ORCID 0000-0002-1048-9212], MASTER-MULTIMEDIA Ltd, Entuziastov Shosse 98-3-274, Moscow 111531, Russia, [email protected] Abstract. Since 2015, microcomputers have appeared in the information environment, which are a compact system unit with minimal functionality without peripherals. The article published the results of the analysis of the use of 6 different microcomputers in various fields of activity. The purpose of the study is to determine the limiting factors affecting the efficiency of the targeted use of microcomputers. It has been established that for scientific and educational presentations, office and trading activities, it is currently advisable to use fanless microcomputers with a perforated case and an internal WiFi antenna, at least 4 GB of operational and 64 GB of permanent memory, a microSD (TF) memory card slot (at least 128 GB, NTFS file system), Intel HD Graphics, and USB3.0 and HDMI interfaces. Based on comparative experiments, methodological recommendations were created on optimizing the configuration of the hardware-software environment of microcomputers in stationary and mobile conditions. The problems of major updates to Windows 10, as well as the compatibility of Microsoft Store software and third-party manufacturers, are analyzed. It is recommended to specialize individual microcomputers for working with 32-bit applications; accounting and cryptographic programs; as well as conducting presentations with their video. Options for optimal configuration of the Start menu of the Windows 10 desktop are suggested. It is concluded that specialization in the hardware-software configuration of modern microcomputers allows you to increase the efficiency of using single devices and their paired systems in accordance with BYOD (Bring Your Own Device).

Linux Hardware Compatibility HOWTO

Linux Hardware Compatibility HOWTO Steven Pritchard Southern Illinois Linux Users Group / K&S Pritchard Enterprises, Inc. <[email protected]> 3.2.4 Copyright © 2001−2007 Steven Pritchard Copyright © 1997−1999 Patrick Reijnen 2007−05−22 This document attempts to list most of the hardware known to be either supported or unsupported under Linux. Copyright This HOWTO is free documentation; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 2 of the license, or (at your option) any later version. Table of Contents: 1. Introduction; 1.1. Notes on binary−only drivers; 1.2. Notes on proprietary drivers; 1.3. System architectures; 1.4. Related sources of information; 1.5. Known problems with this document; 1.6. New versions of this document

USB3 Debug Class Specification

USB 3.1 Debug Class 7/14/2015. USB 3.1 Device Class Specification for Debug Devices, Revision 1.0, July 14, 2015. INTELLECTUAL PROPERTY DISCLAIMER: THIS SPECIFICATION IS PROVIDED TO YOU “AS IS” WITH NO WARRANTIES WHATSOEVER, INCLUDING ANY WARRANTY OF MERCHANTABILITY, NON-INFRINGEMENT, OR FITNESS FOR ANY PARTICULAR PURPOSE. THE AUTHORS OF THIS SPECIFICATION DISCLAIM ALL LIABILITY, INCLUDING LIABILITY FOR INFRINGEMENT OF ANY PROPRIETARY RIGHTS, RELATING TO USE OR IMPLEMENTATION OF INFORMATION IN THIS SPECIFICATION. THE PROVISION OF THIS SPECIFICATION TO YOU DOES NOT PROVIDE YOU WITH ANY LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS. Please send comments via electronic mail to [email protected]. For industry information, refer to the USB Implementers Forum web page at http://www.usb.org. All product names are trademarks, registered trademarks, or service marks of their respective owners. Copyright © 2010-2015 Hewlett-Packard Company, Intel Corporation, Microsoft Corporation, Renesas, STMicroelectronics, and Texas Instruments. All rights reserved. Contributors: Intel (chair): Rolf Kühnis; Intel: Sri Ranganathan; Intel: Sankaran Menon; Intel (chair): John Zurawski; ST-Ericsson: Andrew Ellis; ST-Ericsson: Tomi Junnila; ST-Ericsson: Rowan Naylor; ST-Ericsson: Graham Wells; ST Microelectronics: Jean-Francis Duret; Texas Instruments: Gary Cooper; Texas Instruments: Jason Peck; Texas Instruments: Gary Swoboda; AMD: Will Harris; Lauterbach: Stephan Lauterbach; Lauterbach: Ingo Rohloff; Nokia: Henning Carlsen; Nokia: Eugene Gryazin; Nokia: Leon Jørgensen; Qualcomm: Miguel Barasch; Qualcomm: Terry Remple; Qualcomm: Yoram Rimoni. TABLE OF CONTENTS: 1 TERMS AND ABBREVIATIONS

Huawei E1550 Data Card FAQ (Document classification: Internal)

Huawei E1550 Data Card FAQ (Document classification: Internal). 2009-6-5. Huawei confidential; do not distribute without permission.
Q: Which operating systems does the E1550 support?
A: The E1550 currently supports Windows 2000, Windows XP, Windows Vista, and Mac OS.
Q: When the E1550 is connected to a PC, how can I confirm that it has been identified?
A: Open Device Manager as follows: 1. Open Control Panel and select Performance and Maintenance. 2. Select System. 3. Open the Hardware tab and select Device Manager. 4. Expand ‘Modem’ and ‘ports’ and confirm that 3G Modem and 3G PC UI Interface are listed. These devices show whether the E1550 Data Card has been identified.
Q: The PC cannot find any new hardware when the E1550 is connected to it. What should I do?
A: 1. Try another USB port. 2. If the problem still exists, contact your local operator or agent to replace your E1550.
Q: When the E1550 is connected to a PC, the system identifies the virtual CD-ROM and installs the dashboard, but the computer cannot find any new hardware. What should I do?
A: 1. First check whether Device Manager has identified USB Mass Storage without any other Modem device. 2. Use the devsetup.exe file, which you can find in the Drivers folder, to install the data card driver.
Q: When the E1550 is connected to a PC, the system identifies the virtual CD-ROM and installs the dashboard, then the PC finds the new hardware.

Tethering an Android Smartphone to USB Devices

Tethering an Android Smartphone to USB Devices. March 2011. [email protected] Veteran Owned Small Business. DUNS: 003083420. CAGE: 4SX48. http://www.securecommconsulting.com 1.1 Purpose: This paper presents a brief background of Universal Serial Bus (USB) and the architecture of USB drivers in the Linux operating system. The purpose of this paper is to provide a brief explanation of the technical issues involved in making an Android smart phone operate as a USB Host, and properly connect and interoperate with USB devices. 1.2 General Background: The Universal Serial Bus (USB) architecture includes a USB Host and a USB Device. The Host is the master and controls the communication between itself and all of its connected USB Devices. Normally, smart phones are considered USB devices, and are connected to personal computers (PCs) or laptops. However, a popular topic of late is the tethering of an Android smart phone to a USB device (i.e. the smart phone becomes the USB host). This is due to increased demand for smart phone applications that utilize external USB devices (such as mass storage devices, keyboards, and even external networking devices like radios). As a USB Device, a smart phone cannot connect to other USB devices; therefore, to enable smart phones to connect to either a USB device or a USB host, smart phone USB drivers must be modified to support USB OTG (On-The-Go) as depicted in Figure 1. USB OTG allows a component to take on the role of either a USB Host or a USB Device, whichever is best suited for the currently connected gadgets.

Writing Your Own Gadget

02 November 2018. Writing Your Own Gadget. Andrei Emeltchenko.
Contents: Introduction; General USB; Using Ethernet over USB; Using 802.15.4 over USB; Other USB usages; Experimental USB features: WebUSB; Debugging without the board; Summary; References.
Introduction: Problem
▪ Devices around (sensors, switches, etc.)
▪ Problem connecting to devices with non-standard PC interfaces
○ SPI, I2C, etc.
[Figure: access to complex hardware]
Introduction: Solution
▪ Use embedded board with special interfaces powered by Zephyr OS
▪ Zephyr board connects to Host via USB
[Figure: Zephyr gadget, Interface, USB, Complex Hardware]
Introduction: Zephyr
▪ Open Source RTOS for connected resource constrained devices https://www.zephyrproject.org/what-is-zephyr/
▪ Hosted by Linux Foundation
▪ Supports more than 100 boards: https://docs.zephyrproject.org/latest/boards/boards.html
▪ Zephyr Project Documentation https://docs.zephyrproject.org/latest/index.html
▪ License: Apache 2.0
▪ Source: https://github.com/zephyrproject-rtos/zephyr
Hello world in Zephyr
▪ Set up a development system https://docs.zephyrproject.org/latest/getting_started/getting_started.html#set-up-a-development-system
▪ Set up build environment
$ source zephyr-env.sh
▪ Build hello_world sample for Qemu
$ cd samples/hello_world/
$ mkdir build
$ cd build
# Use cmake to configure a Make-based build
$ cmake -DBOARD=qemu_x86 ..
# Now run make on the generated build system:
$ make
▪ Run hello_world in Qemu
$ make run
To exit from QEMU enter: 'CTRL+a, x'
[QEMU] CPU: qemu32,+nx,+pae
***** Booting Zephyr OS zephyr-v1.13.0 *****
Hello World! qemu_x86
USB: General overview
▪ One Host connected to many devices
▪ Device identifies itself through Descriptors
▪ Descriptors are binary data describing USB capabilities
○ Device Class
○ Product ID / Vendor ID ..
[Figure: Device Descriptor, Endpoint Descriptor 1 ... Endpoint Descriptor N, Interface Descriptor 1]

USB Complete the Developer's Guide 4Th Ed.Pdf

Lakeview Research LLC, Madison, WI 53704. USB Complete: The Developer’s Guide, Fourth Edition, by Jan Axelson. Copyright 1999-2009 by Janet L. Axelson. All rights reserved. No part of the contents of this book, except the program code, may be reproduced or transmitted in any form or by any means without the written permission of the publisher. The program code may be stored and executed in a computer system and may be incorporated into computer programs developed by the reader. The information, computer programs, schematic diagrams, documentation, and other material in this book are provided “as is,” without warranty of any kind, expressed or implied, including without limitation any warranty concerning the accuracy, adequacy, or completeness of the material or the results obtained from using the material. Neither the publisher nor the author shall be responsible for any claims attributable to errors, omissions, or other inaccuracies in the material in this book. In no event shall the publisher or author be liable for direct, indirect, special, incidental, or consequential damages in connection with, or arising out of, the construction, performance, or other use of the materials contained herein. Many of the products and company names mentioned herein are the trademarks of their respective holders. PIC and MPLAB are registered trademarks of Microchip Technology Inc. in the U.S.A. and other countries. PICBASIC PRO is a trademark of Microchip Technology Inc. in the U.S.A. and other countries. Published by Lakeview Research LLC, 5310 Chinook Ln., Madison WI 53704, www.Lvr.com. Distributed by Independent Publishers Group (ipgbook.com).