A Cultural and Political Economy of Web 2.0

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Brick-and-Mortar Retailers' Survival Strategies Amid the COVID-19 Crisis

Mitsui & Co. Global Strategic Studies Institute Monthly Report June 2020 BRICK-AND-MORTAR RETAILERS’ SURVIVAL STRATEGIES AMID THE COVID-19 CRISIS Katsuhide Takashima Industrial Research Dept. III, Industrial Studies Div. Mitsui & Co. Global Strategic Studies Institute SUMMARY As the shift in consumer demand towards e-commerce (EC) has taken root amid the COVID-19 crisis, brick-and-mortar retailers will need stronger survival strategies. The first strategy is to respond to consumer needs for infection prevention, such as by adopting cashierless checkout systems and implementing measures to reduce the amount of time customers spend in stores. The second strategy is to enhance the sophistication of EC initiatives by leveraging the advantage of speediness in product delivery that only brick-and-mortar retailers can provide. The third strategy is to expand and monetize the showroom function. Business models providing insight to this end are beginning to emerge. The spread of COVID-19 infections has divided the retail industry, placing companies in stark contrast with each other depending on business format. Most specialty retailers, including department stores, shopping malls, and apparel shops, saw their earnings decline because they closed their stores to prevent infections, or otherwise suffered from operating restrictions. Meanwhile, other retail formats permitted to stay open in order to supply consumers with daily necessities marked earnings growth. They include supermarkets, which captured demand from restaurants that had either closed or were avoided by consumers, and drugstores, which saw growth in demand for infection prevention products, e.g., masks and disinfectants (Figure 1). In addition, e-commerce (EC) transactions are increasing sharply, reflecting rapidly expanded usage by consumers who are refraining from going out. -

New Insights on Retail E-Commerce (July 26, 2017)

U.S. Department of Commerce, Economics and Statistics Administration, Office of the Chief Economist. New Insights on Retail E-Commerce. By Jessica R. Nicholson. ESA Issue Brief #04-17, July 26, 2017. Executive Summary The U.S. Census Bureau has been collecting data on retail sales since the 1950s and data on e-commerce retail sales since 1998. As the Internet has become ubiquitous, many retailers have created websites and even entire divisions devoted to fulfilling online orders. Many consumers have turned to e-commerce as a matter of convenience or to increase the variety of goods available to them. Whatever the reason, retail e-commerce sales have skyrocketed and the Internet will undoubtedly continue to influence how consumers shop, underscoring the need for good data to track this increasingly important economic activity. In June 2017, the Census Bureau released a new supplemental data table on retail e-commerce by type of retailer. The Census Bureau developed these estimates by re-categorizing e-commerce sales data from its existing “electronic shopping” sales data according to the primary business type of the retailer, such as clothing stores, food stores, or electronics stores. This report examines how the new estimates enhance our understanding of where consumers are shopping online and also provides an overview of trends in retail and e-commerce sales. Findings from this report include: E-commerce sales accounted for 7.2 percent of all retail sales in 2015, up dramatically from 0.2 percent in 1998. E-commerce sales have been growing nine times faster than traditional in-store sales since 1998. -

Trademarks, Metatags, and Initial Interest Confusion: A Look to the Past to Re-Conceptualize the Future

TRADEMARKS, METATAGS, AND INITIAL INTEREST CONFUSION: A LOOK TO THE PAST TO RE-CONCEPTUALIZE THE FUTURE CHAD J. DOELLINGER* INTRODUCTION Web sites, through domain names and metatags, have created a new set of problems for trademark owners. A prominent problem is the use of one’s trademarks in the metatags of a competitor’s web site. The initial interest confusion doctrine has been used to combat this problem.1 Initial interest confusion involves infringement based on confusion that creates initial customer interest, even though no transaction takes place.2 Several important questions have currently received little attention: How should initial interest confusion be defined? How should initial interest confusion be conceptualized? How much confusion is enough to justify a remedy? Who needs to be confused, when, and for how long? How should courts determine when initial interest confusion is sufficient to support a finding of trademark infringement? These issues have been glossed over in the current debate by both courts and scholars alike. While the two seminal opinions involving the initial interest confusion doctrine, Brookfield Commun., Inc. v. West Coast Ent. Corp.3 and * B.A., B.S., University of Iowa (1998); J.D., Yale Law School (2001). Mr. Doellinger is an associate with Pattishall, McAuliffe, Newbury, Hilliard & Geraldson, 311 S. Wacker Drive, Chicago, Illinois 60606. The author would like to thank Uli Widmaier for his assistance and insights. The views and opinions in this article are solely those of the author and do not necessarily reflect those of Pattishall, McAuliffe, Newbury, Hilliard & Geraldson. 1 See J. Thomas McCarthy, McCarthy on Trademarks and Unfair Competition, vol. -

Proceedings of the 46th Annual Meeting of the Association for Computational Linguistics on Human Language Technologies, Pages 9–12

Coling 2010 23rd International Conference on Computational Linguistics Proceedings of the 2nd Workshop on The People’s Web Meets NLP: Collaboratively Constructed Semantic Resources Produced by Chinese Information Processing Society of China All rights reserved for Coling 2010 CD production. To order the CD of Coling 2010 and its Workshop Proceedings, please contact: Chinese Information Processing Society of China No.4, Southern Fourth Street Haidian District, Beijing, 100190 China Tel: +86-010-62562916 Fax: +86-010-62562916 [email protected] ii Introduction This volume contains papers accepted for presentation at the 2nd Workshop on Collaboratively Constructed Semantic Resources that took place on August 28, 2010, as part of the Coling 2010 conference in Beijing. Being the second workshop on this topic, we were able to build on the success of the previous workshop on this topic held as part of ACL-IJCNLP 2009. In many works, collaboratively constructed semantic resources have been used to overcome the knowledge acquisition bottleneck and coverage problems pertinent to conventional lexical semantic resources. The greatest popularity in this respect can so far certainly be attributed to Wikipedia. However, other resources, such as folksonomies or the multilingual collaboratively constructed dictionary Wiktionary, have also shown great potential. Thus, the scope of the workshop deliberately includes any collaboratively constructed resource, not only Wikipedia. Effective deployment of such resources to enhance Natural Language Processing introduces a pressing need to address a set of fundamental challenges, e.g. the interoperability with existing resources, or the quality of the extracted lexical semantic knowledge. Interoperability between resources is crucial as no single resource provides perfect coverage. -

Pdf Recommendations to Leverage E

Trade and COVID-19 Guidance Note RECOMMENDATIONS TO LEVERAGE E-COMMERCE DURING THE COVID-19 CRISIS Christoph Ungerer, Alberto Portugal, Martin Molinuevo and Natasha Rovo1 May 12, 2020 Public Disclosure Authorized KEY MESSAGES • In the fight against COVID-19, economic activities that require close physical contact have been severely restricted. In this context, e-commerce – defined broadly as the sale of goods or services online - is emerging as a major pillar in the COVID-19 crisis. E-commerce can help further reduce the risk of new infections by minimizing face to face interactions. It can help preserve jobs during the crisis. And it can help increase the acceptance of prolonged physical distancing measures among the population. • Public policy can only play an enabling role, tackling market failures and creating an environment in which digital entrepreneurship can thrive. This guidance note highlights 13 key measures that governments can take in the short term to support e-commerce during the ongoing crisis. The first group of measures aims to help more businesses and households to connect to the digital economy during the crisis. The second group of measures aims to ensure that e-commerce can continue to serve the public in a way that is safe, even during the COVID-19 lockdown. The third group of measures aims to ensure that the government’s e-commerce strategy during the crisis is clearly communicated, implemented, and coordinated with other policy measures. • The crisis may have a permanent impact on the private sector landscape, consumer preferences, and shopping patterns. Many brick-and-mortar shops have been forced to move online. -

Using Wikipedia to Strengthen Research and Critical Thinking Skills

From Audience to Authorship to Authority: Using Wikipedia to Strengthen Research and Critical Thinking Skills Michele Van Hoeck and Debra Hoffmann This paper looks at Wikipedia’s effectiveness as a pedagogical tool to encourage students to think critically about notions of audience, authorship and authority, and also Wikipedia’s impact on student research persistence. Two case studies are presented in which Wikipedia was used as the platform for assignments in 1) a two-unit freshman information literacy course at California State University (CSU), Maritime (Cal Maritime), and 2) in two three-unit, cross-listed upper-division courses at CSU Channel Islands (CI). At Cal Maritime, the course culminated with students significantly expanding a Wikipedia article. At CI, one course used Wikipedia to explore issues of authority and expertise; the other course used Wikipedia to think critically about notions of authorship and audience. Cal Maritime data on student attitudes, research practices such as interlibrary loan borrowing, and student citations suggest the Wikipedia platform had, overall, a positive impact on research persistence. Finally, the authors discuss lessons learned from initial use of Wikipedia in the classroom and opportunities for instruction librarians to incorporate Wikipedia into information literacy instruction outside of a credit-bearing course. Introduction Information Literacy instruction often focuses on cautioning students regarding the use of Wikipedia as a credible source for their research, as authors of Wikipedia articles are often anonymous, and editing Wikipedia does not require proof of expertise. [...] and critical thinking skills as editors and creators of content. We take a case study approach in presenting experiences on two California State University campuses in which Wikipedia was used as the platform for assignments in credit-bearing information literacy -

Defining the Digital Services Landscape for the Middle East

Defining the Digital Services landscape for the Middle East Contents: Defining the Digital Services landscape for the Middle East; The Digital Services landscape; Consumer needs landscape; Digital Services landscape; Digital ecosystem; Digital capital; Digital Services Maturity Cycle: Middle East; Investing in Digital Services in the Middle East. Defining the Digital Services landscape for the Middle East The Middle East is one of the fastest growing emerging markets in the world. As the region becomes more digitally connected, demand for Digital Services and technologies is also becoming more prominent. With the digital economy still in its infancy, it is unclear which global advances in Digital Services and technologies will be adopted by the Middle East and which require local development. In this context, identifying how, where and with whom to work in this market can be very challenging. In our effort to broaden the discussion, we have prepared this report to define the Digital Services landscape for the Middle East, to help the region’s digital community in understanding and navigating through this complex and ever-changing space. Eng. Ayman Al Bannaw, Chairman & CEO, Noortel. Today, we are witnessing an unprecedented change in the technology, media, and telecommunications industries. These changes, driven mainly by consumers, are taking place at a pace that is causing confusion, disruption and forcing convergence. This has created massive opportunities for Digital Services in the region, which has in turn led to certain industry players entering the space in an incoherent manner, for fear of losing their market share or missing the opportunities at hand. -

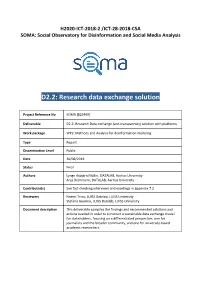

D2.2: Research Data Exchange Solution

H2020-ICT-2018-2 / ICT-28-2018-CSA SOMA: Social Observatory for Disinformation and Social Media Analysis. D2.2: Research data exchange solution. Project Reference No: SOMA [825469]. Deliverable: D2.2: Research Data exchange (and transparency) solution with platforms. Work package: WP2: Methods and Analysis for disinformation modeling. Type: Report. Dissemination Level: Public. Date: 30/08/2019. Status: Final. Authors: Lynge Asbjørn Møller, DATALAB, Aarhus University; Anja Bechmann, DATALAB, Aarhus University. Contributor(s): See fact-checking interviews and meetings in appendix 7.2. Reviewers: Noemi Trino, LUISS Datalab, LUISS University; Stefano Guarino, LUISS Datalab, LUISS University. Document description: This deliverable compiles the findings and recommended solutions and actions needed in order to construct a sustainable data exchange model for stakeholders, focusing on a differentiated perspective, one for journalists and the broader community, and one for university-based academic researchers. Document Revision History (version, date, modification, modified by): v0.1, 28/08/2019, Consolidation of first draft, DATALAB, Aarhus University; v0.2, 29/08/2019, Review, LUISS Datalab, LUISS University; v0.3, 30/08/2019, Proofread, DATALAB, Aarhus University; v1.0, 30/08/2019, Final version, DATALAB, Aarhus University. Executive Summary This report provides an evaluation of current solutions for data transparency and exchange with social media platforms, an account of the historic obstacles and developments within the subject and a prioritized list of future scenarios and solutions for data access with social media platforms. The evaluation of current solutions and the historic accounts are based primarily on a systematic review of academic literature on the subject, expanded by an account on the most recent developments and solutions. -

Initiativen Roger Cloes Infobrief

Research Services (Wissenschaftliche Dienste), Deutscher Bundestag. Infobrief: Development and significance of knowledge resources posted on the Internet by volunteers, with particular regard to the wiki initiatives. Roger Cloes. WD 10 - 3010 - 074/11. Authors: Dr. Roger Cloes / Tim Moritz Hector (intern). Reference number: WD 10 - 3010 - 074/11. Work completed: 1 July 2011. Division: WD 10: Culture, Media and Sport. Papers and other information products of the Research Services do not reflect the views of the German Bundestag, any of its bodies, or the Bundestag administration. Rather, they are the professional responsibility of their authors and of the division's management. The German Bundestag reserves the rights of publication and distribution. Both require the approval of the head of Department W, Platz der Republik 1, 11011 Berlin. Summary Knowledge posted on the Internet by volunteers plays an increasingly prominent role in the age of the so-called participatory web ("Mitmachweb"). Wikis above all, and Wikipedia in particular, have become an indispensable element of the Internet. No other comparable knowledge resources on the Internet, even when they are freely accessible and financed by taxes, fees, or advertising revenue, or given away as free samples within business models, reach the access figures of Wikipedia. This is a phenomenon whose scale receives hardly any attention against the background of the copyright debate and the justification of state protection as a prerequisite for the creation of intellectual goods. Relatively low distribution costs on the Internet and low or no capital investment requirements favor this development. -

Letter, If Not the Spirit, of One Or the Other Definition

Producing Open Source Software: How to Run a Successful Free Software Project by Karl Fogel. Copyright © 2005-2021 Karl Fogel, under the Creative Commons Attribution-ShareAlike (4.0) license. Version: 2.3214 Home site: https://producingoss.com/ Dedication This book is dedicated to two dear friends without whom it would not have been possible: Karen Underhill and Jim Blandy. Table of Contents: Preface; Why Write This Book?; Who Should Read This Book?; Sources; Acknowledgements; For the first edition (2005); For the second edition (2021); Disclaimer; 1. Introduction -

Supporting Sensemaking by the Masses for the Public Good

Supporting Sensemaking by the Masses for the Public Good Derek L. Hansen Assistant Professor, Maryland’s iSchool Director of CASCI (http://casci.umd.edu) Introduction One of the marvels of our time is the unprecedented use and development of technologies that support social interaction. Recent decades have repeatedly demonstrated human ingenuity as individuals and collectives have adopted and adapted these technologies to engender new ways of working, playing, and creating meaning. Although we already take for granted the ubiquity of social-mediating technologies such as email, Facebook, Twitter, and wikis, their potential for improving the human condition has hardly been tapped. There is a great need for researchers to 1) develop theories that better explain how and why technology-mediated collaborations succeed and fail, and 2) develop novel socio-technical strategies to address national priorities such as healthcare, education, government transparency, and environmental sustainability. In this paper, I discuss the need to understand and develop technologies and social structures that support large-scale, collaborative sensemaking. Sensemaking Humans can’t help but try to make sense of the world around them. When faced with an unfamiliar problem or situation we instinctively gather, organize, and interpret information, constructing meanings that enable us to move forward (Dervin, 1998; Lee & Abrams 2008; Russell, et al., 2008.). Individuals engage in sensemaking activities when we create a presentation, come to grips with a life-threatening illness, decide who to vote for, or produce an intelligence report. As collectives we engage in collaborative sensemaking activities at many levels of social aggregation ranging from small groups (e.g., co-authors of a paper) to hundreds (e.g., the scientific community developing a cure for cancer) to millions (e.g., fans of ABC’s Lost making sense of the complex show). -

Reliability of Wikipedia

The reliability of Wikipedia (primarily of the English language version), compared to other encyclopedias and more specialized sources, is assessed in many ways, including statistically, through comparative review, analysis of the historical patterns, and strengths and weaknesses inherent in the editing process unique to Wikipedia.[1] Because Wikipedia is open to anonymous and collaborative editing, assessments of its reliability usually include examinations of how quickly false or misleading information is removed. (Figure caption: Vandalism of a Wikipedia article.) An early study conducted by IBM researchers in 2003, two years following Wikipedia's establishment, found that "vandalism is usually repaired extremely quickly - so quickly that most users will never see its effects"[2] and concluded that Wikipedia had "surprisingly effective self-healing capabilities".[3] A 2007 peer-reviewed study stated that "42% of damage is repaired almost immediately... Nonetheless, there are still hundreds of millions of damaged views."[4] Several studies have been done to assess the reliability of Wikipedia. A notable early study in the journal Nature suggested that in 2005, Wikipedia scientific articles came close to the level of accuracy in Encyclopædia Britannica and had a similar rate of "serious errors".[5] This study was disputed by Encyclopædia Britannica.[6] By 2010 reviewers in medical and scientific fields such as toxicology, cancer research and drug information reviewing Wikipedia against professional and peer-reviewed sources found that Wikipedia's depth and coverage were of a very high standard, often comparable in coverage to physician databases and considerably better than well known reputable national media outlets.