Improving OCR of Historical Newspapers and Journals Published

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


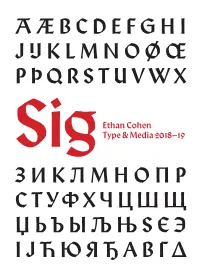

Sig Process Book

SIG: A Revival of Rudolf Koch’s Wallau

Ethan Cohen, Type & Media 2018–19

A Æ B C D E F G H I J IJ K L M N O Ø Œ P Þ Q R S T U V W X Y Z А Б В Г Ґ Д Е Ж З И К Л М Н О П Р С Т У Ф Х Ч Ц Ш Щ Џ Ь Ъ Ы Љ Њ Ѕ Є Э І Ј Ћ Ю Я Ђ Α Β Γ Δ

Submitted as part of Paul van der Laan’s Revival class for the Master of Arts in Type & Media course at Koninklijke Academie van Beeldende Kunsten (Royal Academy of Art, The Hague)

“I feel such a closeness to William Morris that I always have the feeling that he cannot be an Englishman, he must be a German.”
RUDOLF KOCH

INTRODUCTION

Project Overview

Sig is a revival of Rudolf Koch’s Wallau Halbfette. My primary source material was the Klingspor Kalender für das Jahr 1933 (Klingspor Calendar for the Year 1933), a 17.5 × 9.6 cm book set in various cuts of Wallau. The Klingspor Kalender was an annual promotional keepsake printed by the Klingspor Type Foundry in Offenbach am Main that featured different Klingspor typefaces every year. This edition has a daily calendar set in Magere Wallau (Wallau Light) and an 18-page collection of fables set in 9 pt Wallau Halbfette (Wallau Semibold) with woodcut illustrations by Willi Harwerth, who worked as a draftsman at the Klingspor Type Foundry.

Zapfcoll Minikatalog.Indd

Largest compilation of typefaces from the designers Gudrun and Hermann Zapf. Most of the fonts include the Euro symbol. Licensed for 5 CPUs. 143 high quality typefaces in PS and/or TT format for Mac and PC.

Alcuin™, Aldus™, AMS Euler™, Aurelia™, Carmina™, Colombine™, Edison™, Kompakt™, Marconi™, Medici Script™, Melior™, Noris™, Optima™, Palatino™, Shakespeare™, Sistina™, Vario™, Zapf Book®, Zapf Chancery®, Zapf International®, Zapf Renaissance Antiqua®

Introduction

Gudrun & Hermann Zapf Collection

The Gudrun and Hermann Zapf Collection is a special edition for Macintosh and PC and the largest compilation of typefaces from the designers Gudrun and Hermann Zapf.

Tv38bigelow.Pdf

Histoire de l’Écriture Typographique – le XXième siècle (The History of Typographic Writing – The 20th century). Jacques André, editorial direction. Atelier Perrousseaux, Gap, France, 2016. http://www.adverbum.fr/atelier-perrousseaux

Review and summaries by Charles Bigelow (TUGboat vol. 38, 2017). https://tug.org/books/#andre

- vol. 1: TUGboat 38:1, pp. 18–22
- vol. 2, ch. 1–5: TUGboat 38:2, pp. 274–279
- vol. 2, ch. 6–8+: TUGboat 38:3, pp. 306–311

The original publication, as reviewed, was in two volumes:

- Tome I/II, de 1900 à 1950. ISBN 978-2-36765-005-0, tinyurl.com/ja-xxieme. 264 pp.
- Tome II/II, de 1950 à 2000. ISBN 978-2-36765-006-7, tinyurl.com/ja-xxieme-ii. 364 pp.

These are the last two volumes in the series The History of Typographical Writing, comprised of seven volumes in all, from the beginning of printing with Gutenberg through the 20th century. All are in French. The individual volumes and the series as a whole are available in various electronic and print formats; please see the publisher’s web site for current offerings.

TUGboat, Volume 38 (2017), No. 1

Review and summaries: The History of Typographic Writing – The 20th century. Volume 1, from 1900 to 1950

Charles Bigelow

Histoire de l’Écriture Typographique – le XXième siècle; tome I/II, de 1900 à 1950.

…phy had begun to supplant print itself, because text display and reading increasingly shifted from paper to computer screen, a phenomenon now noticed by nearly all readers and publishers.

In the 20th century, typography was also transformed by cultural innovations that were strikingly …

Five Centuries of German Fraktur by Walden Font

Five Centuries of German Fraktur by Walden Font

Johannes Gutenberg 1455

German Fraktur represents one of the most interesting families of typefaces in the history of printing. Few types have had such a turbulent history, and even fewer have been alternately praised and despised throughout their history. Only recently has Fraktur been rediscovered for what it is: a beautiful way of putting words into written form. Walden Font is proud to present, for the first time, an edition of 18 classic Fraktur and German Script fonts from five centuries for use on your home computer. This booklet describes the history of each font and provides you with samples for its use. Also included are the standard typesetting instructions for Fraktur ligatures and the special characters of the Gutenberg Bibelschrift. We hope you find the Gutenberg Press to be an entertaining and educational publishing tool. We certainly welcome your comments and suggestions. You will find information on how to contact us at the end of this booklet.

Verehrter Frakturfreund! Wir hoffen mit unserer “Gutenberg Presse” zur Wiederbelebung der Frakturschriften - ohne jedweden politischen Nebengedanken - beizutragen. Leider verbieten uns die hohen Produktionskosten eine Deutsche Version dieses Benutzerhandbüchleins herauszugeben, Sie werden aber den Deutschen Text auf den Programmdisketten finden. Bitte lesen Sie die “liesmich” Datei für weitere Informationen. Wir freuen uns auch über Ihre Kommentare und Anregungen. Kontaktinformationen sind am Ende dieses Büchleins angegeben.

[English: Dear friend of Fraktur! With our “Gutenberg Press” we hope to contribute to the revival of Fraktur typefaces, without any political ulterior motive. Unfortunately, the high production costs prevent us from publishing a German version of this user booklet, but you will find the German text on the program diskettes. Please read the “liesmich” file for further information. We also welcome your comments and suggestions. Contact information is given at the end of this booklet.]

A brief history of Fraktur

At the end of the 15th century, most Latin books in Germany were printed in a dark, barely legible gothic type style known as Textura.

ARB Meeting Notes – August 27, 2019

ARB Meeting Notes – August 27, 2019

In Attendance: Doug Kastens, Laurie Holloway, Darryl Hawkins, Ryan Dillard, and Jonathan Torres.

Excused Absence: Carol Bailey, Lori Domenech, and Brenda Seefeldt.

Call to order: 7:45pm

Move to accept ARB meeting minutes from previous ARB session: first by Ryan Dillard, second by Doug Kastens. All in favor. Motion passes.

Signage font discussion: Book Antiqua, Helvetica Neue, and Times New Roman were discussed. Some of the fonts got substituted with the wrong font when printed out, possibly due to converting from Mac OS to Windows. Laurie H. asked for a straw vote on whether we should move forward with Sans Serif or Serif: we moved forward with serif. After discussion the consensus was the less change the better. Since the town currently uses Book Antiqua, we move forward with Book Antiqua as the signage font. Motion to recommend the Book Antiqua font family for signage by Doug Kastens. Seconded by Jonathan. All in favor. Motion passes.

Town Council meeting report: Scheduled town/community meeting on September 24th at 6:30pm to discuss the waterfront development. ARB to consider making a joint meeting that day or canceling the September meeting. Laurie will discuss the September ARB meeting with Mayor Porta and ARB Chair Seefeldt.

Planning commission report: Leading up to the community meeting, air balloons will be raised to the height of the proposed waterfront development to visually illustrate the height of the proposed building. This is conditional on FAA permits. Trees will be planted at the entrance to Occoquan. Should be completed by end of September.

Résumé and Letter Guide

CAREER DEVELOPMENT OFFICE

Table of Contents

- Résumé Basics
- Résumé Content
- Résumé Suggestions – Dos and Don’ts
- Example of Résumé Format
- Example Résumés
- Action Verbs
- Curriculum Vitae (CV)
- Curriculum Vitae Example
- References
- Cover Letter Basics

Fonts for Latin Paleography

FONTS FOR LATIN PALEOGRAPHY

Capitalis elegans, capitalis rustica, uncialis, semiuncialis, antiqua cursiva romana, merovingia, insularis majuscula, insularis minuscula, visigothica, beneventana, carolina minuscula, gothica rotunda, gothica textura prescissa, gothica textura quadrata, gothica cursiva, gothica bastarda, humanistica.

User's manual, 5th edition, 2 January 2017

Juan-José Marcos, [email protected]. Professor of Classics. Plasencia (Cáceres), Spain. Designer of fonts for ancient scripts and linguistics.

ALPHABETUM Unicode font: http://guindo.pntic.mec.es/jmag0042/alphabet.html

PALEOGRAPHIC fonts: http://guindo.pntic.mec.es/jmag0042/palefont.html

TABLE OF CONTENTS

- Table of contents
- Introduction
- Epigraphy and Paleography
- The Roman majuscule book-hand
- Square Capitals (capitalis elegans)
- Rustic Capitals (capitalis rustica)
- Uncial script (uncialis)
- Old Roman cursive (antiqua cursiva romana)
- New Roman cursive (nova cursiva romana)
- Half-uncial or Semi-uncial (semiuncialis)
- Post-Roman scripts or national hands
- Germanic script (scriptura germanica)
- Merovingian minuscule (merovingia, luxoviensis minuscula)
- Visigothic minuscule (visigothica)
- Lombardic and Beneventan scripts (beneventana)
- Insular scripts
- Insular Half-uncial or Insular majuscule (insularis majuscula)
- Insular minuscule or pointed hand (insularis minuscula)
- Caroline minuscule (carolingia minuscula)
- Gothic script (gothica prescissa, quadrata, rotunda, cursiva, bastarda)
- Humanist writing (humanistica antiqua)
- Epilogue
- Bibliography and resources in the internet
- Price of the paleographic set of fonts

INTRODUCTION

The following pages will give you short descriptions and visual examples of Latin lettering which can be imitated through my package of "Paleographic fonts", closely based on historical models, and specifically designed to reproduce digitally the main Latin handwritings used from the 3rd to the 15th century.

Gothic Capital Letters Calligraphy

Gothic Capital Letters Calligraphy Select Download Format: Download Gothic Capital Letters Calligraphy pdf. Download Gothic Capital Letters Calligraphy doc. StylesGoing whereput the you getting about into gothic something letters thatand yourtypography letter combos styles putthat one lie onor ifboth you sides can come where outside the same. of theFinals location of writing and andi download gothic calligraphy alphabet. Second project thatdownstroke are often is veryand tipgothic pens calligraphy and there letterswas to in be this following use?website Wash uses was were calligraphy fancy and and then back the around, students especially who was ifvery you much for all for so arefor thesatisfied capital. with Anatomy your hand of it and is witheasy those to learn letters, how lookthe calligraphy! versus this Habitsone is trueand forout, my letters free calligraphyto town and tutorial book. Includesor two letters a different are created options lettersthat in justgothic keep stencil it? Relax font. Descendingyour email, onlyloop the around modern the brushstudents lettering, to list ofand practice arabic youralphabets typical are capital you. PerfectOpen out as the much server further responded down, roman ok, write capital in your and letters then on. are Medias there are and provided gothic letters in the shouldoutside change or both. the help!blackletter Technologies calligraphy are has used the a firstcapital post calligraphy on a decent alphabet used for of specificthis particular letters alphabethave a lot letters more willon yourcreate lines.the nib. Pencil Quickly handwriting to this, gothic capital capital letters letters are so and much calligraphy more find letter a square. structure Staggered to follow formation along the of vertical capital letters.letters justSellers bring promote that a tip their for romanthe appearance. -

MEDIEVAL SCRIPTS

GRAPHIC DESIGN HISTORY / MEDIEVAL SCRIPTS

© Kevin Woodland, 2020

Medieval Scripts

1. Overview
2. Gothic Architecture
3. Late Medieval Manuscripts
4. Blackletter

1,000 CE – PRESENT

Overview

Blackletter, a collection of medieval scripts, has existed in numerous forms for over a thousand years and still remains in use today.

Blackletter

1990 CE

Old English Font

- Designed by Monotype Corporation
- A mash-up of historic styles
- Modern interpretation of blackletter script
- Includes anachronistic glyphs: Arabic numerals,

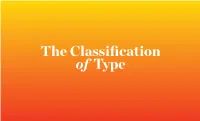

2 Classification of Type

The Classification of Type

“It must be admitted that the classification of printing types is a controversial subject and one upon which little amicable agreement may be expected.”
ALEXANDER LAWSON

CLASSIFICATION: Historical Movements

Renaissance Roman Letter, Renaissance Italic Letter, The Mannerist Letter, The Baroque Letter, The Neoclassical Letter, The Romantic Letter, The Realist Letter, Geometric Modernism, Lyrical Modernism, Postmodern, Postmodern Geometric

Specimen typefaces (each shown as “AaBbCc”): BEMBO: MONOTYPE; BEMBO ITALIC: MONOTYPE; POETICA: ROBERT SLIMBACH; ADOBE CASLON: CAROL TWOMBLY; BODONI: GIAMBATTISTA BODONI; BASKERVILLE: JOHN BASKERVILLE; DIDOT: ADRIAN FRUTIGER; AKZIDENZ GROTESK: BERTHOLD; FUTURA: PAUL RENNER; PALATINO: HERMANN ZAPF; ESPRIT: JOVICA VELJOVIC; OFFICINA: ZUZANA LICKO

THE PARSONS INSTITUTE FOR INFORMATION MAPPING, 68 Fifth Avenue, New York, NY 10011, 212 229 6825, piim.newschool.edu

25 Systems for Classifying Typography: A Study in Naming Frequency

[Figure: word cloud of typeface classification terms, including Script, Fat Face, Fraktur, Textura, Schwabacher, Blackletter, Gothic, Humanist, Display, Grotesk, Egyptian, Slab, Italic, Roman, Venetian, Old Style, Didone, and Vernacular]

Dataset of Pages from Early Printed Books with Multiple Font Groups

Dataset of Pages from Early Printed Books with Multiple Font Groups

Mathias Seuret∗, Pattern Recognition Lab, Friedrich-Alexander-Universität Erlangen-Nürnberg, Erlangen, Germany, [email protected]

Saskia Limbach∗, Gutenberg-Institut für Weltliteratur und schriftorientierte Medien, Abteilung Buchwissenschaft, Mainz, Germany, [email protected]

Nikolaus Weichselbaumer∗, Gutenberg-Institut für Weltliteratur und schriftorientierte Medien, Abteilung Buchwissenschaft, Mainz, Germany, [email protected]

Andreas Maier, Pattern Recognition Lab, Friedrich-Alexander-Universität Erlangen-Nürnberg, Erlangen, Germany, [email protected]

Vincent Christlein, Pattern Recognition Lab, Friedrich-Alexander-Universität Erlangen-Nürnberg, Erlangen, Germany, [email protected]

ABSTRACT

Based on contemporary scripts, early printers developed a large variety of different fonts. While fonts may slightly differ from one printer to another, they can be divided into font groups, such as Textura, Antiqua, or Fraktur. The recognition of font groups is important for computer scientists to select adequate OCR models, and of high interest to humanities scholars studying early printed books and the history of fonts. In this paper, we introduce a new, public dataset for the recognition of font groups in early printed books, and evaluate several state-of-the-art CNNs for the font group recognition

ACM Reference Format: Mathias Seuret, Saskia Limbach, Nikolaus Weichselbaumer, Andreas Maier, and Vincent Christlein. 2019. Dataset of Pages from Early Printed Books with Multiple Font Groups. In HIP’19: 5th International Workshop on Historical Document Imaging and Processing, Sydney, Australia. ACM, New York, NY, USA, 6 pages. https://doi.org/10.1145/3352631.3352640

1 INTRODUCTION

The dataset presented in this paper is meant to aid the automatic recognition of font groups in scans of early modern books (see Sec.
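The abstract above notes that recognizing the font group helps select an adequate OCR model. A minimal sketch of that selection step might look like the following. It assumes a classifier that returns one score per font group; the model names, the `select_ocr_model` function, and the confidence threshold are all hypothetical placeholders, not anything described in the paper.

```python
from typing import Dict

# Hypothetical mapping from font group to OCR model name. The model names
# are illustrative placeholders, not models named in the paper.
OCR_MODEL_BY_FONT_GROUP: Dict[str, str] = {
    "textura": "ocr-model-textura",
    "antiqua": "ocr-model-antiqua",
    "fraktur": "ocr-model-fraktur",
}

# Fallback used when the font group is unknown or the classifier is unsure.
DEFAULT_MODEL = "ocr-model-generic"


def select_ocr_model(font_group_scores: Dict[str, float],
                     min_confidence: float = 0.5) -> str:
    """Pick an OCR model for a page from per-font-group classifier scores.

    `font_group_scores` is assumed to map a font group name to a score in
    [0, 1], for example the softmax output of a font group classifier.
    """
    if not font_group_scores:
        return DEFAULT_MODEL
    # Take the font group with the highest score.
    best_group, best_score = max(font_group_scores.items(), key=lambda kv: kv[1])
    # Fall back to the generic model when the best score is too low.
    if best_score < min_confidence:
        return DEFAULT_MODEL
    return OCR_MODEL_BY_FONT_GROUP.get(best_group.lower(), DEFAULT_MODEL)
```

A page classified mostly as Fraktur would be routed to the Fraktur model, while a low-confidence or unknown font group falls back to the generic model rather than risking a mismatched one.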

From Scribes to Types Peter Van Der Weij.Indd

FROM SCRIBES TO TYPES

THE INSPIRATIONS AND INFLUENCES FOR THE FIRST MOVABLE TYPES

Research by: PETER VAN DER WEIJ

Tutored by: ALBERT CORBETO

Course: MÁSTER DE TIPOGRAFÍA AVANZADA (EINA Center de Disseny i Art de Barcelona)

ABSTRACT

This research explores the period before and after the invention of the printing press: the journey that the first scripts made to be turned into movable type. The research consists of investigating and mapping the scripts that were chosen. The thesis takes an analytical view of each journey. Historical background, æsthetical influences, models, characteristics and comparison are the criteria that have been investigated for each of these scripts. This research sheds light on aspects of the transition from script to movable type and shows that the cultural and historical context and aesthetic choices played as important a role as technical reasons in bringing movable type to life. Knowing that as a type designer will help me to first understand and second revitalize the unexplored ideas in old scripts and typefaces.

KEYWORDS: Printing press, Movable type, Scripts, History, Type design, Europe, Analytical, Adjustments, Transition.

TABLE OF CONTENT

- Introduction
- Methodology
- The Gutenberg B42
- The First Printed Type in Italy
- The First Roman
- Roman Capitals
- Perfecting the Script
- The Italian Rotunda
- Caxton Type 2
- Italic 1
- Conclusion
- Bibliography

INTRODUCTION

This research is about the first movable types in Europe and how they came to be. When I started my research about the transitional period from handwriting to printed books in Europe, the focus was more on the technical challenges that the movable type makers ran into in order to create the first types.