Resource-Oriented Architecture Patterns for Webs of Data (Synthesis Lectures on the Semantic Web: Theory and Technology)

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

ÜberConf Westin Westminster June 19 - 22, 2012

ÜberConf Westin Westminster June 19 - 22, 2012

Rooms: Westminster I, Westminster II, Standley I, Standley II, Lake House, Cotton Creek, Meadowbrook I, Meadowbrook II, Windsor

Tue, Jun. 19, 2012
8:00 - 9:00 AM: EARLY REGISTRATION: PRE-CONFERENCE WORKSHOP ATTENDEES ONLY - WESTMINSTER BALLROOM FOYER
9:00 - 6:00 PM: Pre-conference workshops
  Programming with HTML 5 (Venkat Subramaniam)
  iOS Workshop (Christopher Judd)
  Android Workshop (James Harmon)
  Web Application Security Workshop (Ken Sipe)
  Domain-Driven Design (DDD) - An All-Day Workshop (Paul Rayner)
  Git Bootcamp Workshop (Matthew McCullough)
5:00 - 6:30 PM: MAIN UBERCONF REGISTRATION - WESTMINSTER BALLROOM FOYER
6:30 - 8:30 PM: DINNER/KEYNOTE - WESTMINSTER BALLROOM 3/4
7:30 - 8:30 PM: Keynote by Tim Berglund
8:30 - 10:30 PM: OPENING NIGHT OUTDOOR RECEPTION - SOUTH COURTYARD

Wed, Jun. 20, 2012
7:00 - 8:00 AM: 5K FUN RUN & POWER WALK - MEET IN LOBBY
7:30 - 8:30 AM: BREAKFAST & LATE REGISTRATION - WESTMINSTER BALLROOM 3/4
8:30 - 10:00 AM: Sessions
  Succeeding with the Apache SOA stack (Johan Edstrom)
  Scala for the Intrigued (Venkat Subramaniam)
  Professional Javascript development for the Java developer (Peter Bell)
  Effective Spring Workshop (Craig Walls)
  NoSQL Smackdown 2012 (Tim Berglund)
  Build Lifecycle Craftsmanship Tools (Matthew McCullough)
  Developer guide to the cloud (Pratik Patel)
  OOP Principles (Ken Sipe)
  The Who and What of Agile - Personas and Story Maps (Nathaniel Schutta)
10:00 - 10:30 AM: MORNING BREAK
10:30 - ...

Resource-Oriented Computing w/ NetKernel

Resource-Oriented Computing w/ NetKernel: Software for the 21st Century
Brian Sletten, Zepheira, LLC

Speaker Qualifications
Over 14 years of software development experience
Has own software consulting company for design, mentoring, training and development
Currently working in Semantic Web, AOP, Grid Computing, P2P and security consulting
Pinky Committer

Agenda
What the heck is NetKernel?
Why We Care
NetKernel Overview
NetKernel Languages
Advanced Concepts
Pinky

What the heck is NetKernel?
A micro-kernel based resource ecosystem built around the principles of REST, Unix Pipes and SOA

Why We Care: The Simple Answer
Most enterprise systems use XML and are built in Java and this is still too much of a pain
Complicated
Different Languages for different technologies
Predicting scalability

Why We Care: The Complicated Answer
This is hard because of a general mismatch at the intersection of languages, data model, processing abstractions, architectural tiers, etc.

Larger Trends
Data formatted, Code written (1970s, 1980s, 1990s, 2000s)

NetKernel
A Ground-Up Resource-oriented computing environment
Modern, microkernel architecture
Takes the best of REST, Unix Pipes, SOA
Open Source w/ Dual License for non-open source uses

Resource-Oriented Computing
Everything is a Resource
Everything is URI-Addressable (logical reference)
“Computation” is the turning of logical resource references into physical representation
Lossless conversion between representations

REST
Representational State Transfer
Architectural style described in Roy Fielding’s ...

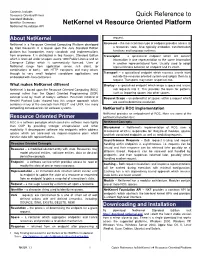

Quick Reference to NetKernel v4 Resource Oriented Platform

NetKernel v4 Resource Oriented Platform

Contents Include: Resource Oriented Primer, Quick Reference to Standard Modules, Identifier Grammars, NetKernel Foundation API

About NetKernel
NetKernel is a Resource Oriented Computing Platform developed by 1060 Research. It is based upon the Java Standard Edition platform but incorporates many standards and implementations from elsewhere. It is distributed in two flavours: a Standard Edition, which is licensed under the open source 1060 Public License, and an Enterprise Edition, which is commercially licensed. Uses of NetKernel range from application server, rich client (or combinations of both), with HTTP transports and many others, through to very small footprint standalone applications and embedded with Java containers.

What makes NetKernel different
NetKernel is based upon the Resource Oriented Computing (ROC) concept rather than the Object Oriented Programming (OOP) concept used by much of today's software. Research initiated at Hewlett Packard Labs showed how this unique approach which ...

... request.
Accessor - the most common type of endpoint; provides access to a resource's state. Also typically embodies transformation functions and language runtimes.
Transreptor - a specialised endpoint which will convert information in one representation to the same information in another representational form. Usually used to adapt representations between an endpoint and its client.
Transport - a specialised endpoint which receives events from outside the resource oriented system and adapts them to a request. Transports may return responses too.
Overlay - a specialised endpoint which wraps a space and issues sub-requests into it. This provides the basis for patterns such as importing spaces into other spaces.
Request Scope - an ordered list of spaces within a request which are used to determine resolution.

Document Schema Definition Languages (DSDL)

ISO/IEC PDTR 19757-10

Contents
Foreword
Introduction
1 Scope
2 Normative references
3 Terms and definitions
4 Using Pipelining for Validation Management
4.1 Validation Management using DPML
4.1.1 Example of Linear DPML
4.1.2 Example of Asynchronous DPML
4.2 Validation Management using Cocoon

Space Details

Key: GROOVY
Name: Groovy
Description: Documentation and web site of the Groovy scripting language for the JVM.
Creator (Creation Date): bob (Apr 15, 2004)
Last Modifier (Mod. Date): glaforge (Apr 12, 2005)
Document generated by Confluence on Dec 07, 2007 12:38

Available Pages
• Home
• Advanced Usage Guide
• Ant Task Troubleshooting
• BuilderSupport
• Compiling Groovy
• Compiling With Maven2
• Design Patterns with Groovy
• Abstract Factory Pattern
• Adapter Pattern
• Bouncer Pattern
• Chain of Responsibility Pattern
• Composite Pattern
• Decorator Pattern
• Delegation Pattern
• Flyweight Pattern
• Iterator Pattern
• Loan my Resource Pattern
• Null Object Pattern
• Pimp my Library Pattern
• Proxy Pattern
• Singleton Pattern
• State Pattern
• Strategy Pattern
• Template Method Pattern
• Visitor Pattern
• Dynamic language beans in Spring
• Embedding Groovy
• Influencing class loading at runtime
• Make a builder
• Mixed Java and Groovy Applications
• Optimising Groovy bytecodes with Soot
• Refactoring with Groovy
• Introduce Assertion
• Replace Inheritance with Delegation
• Security
• Writing Domain-Specific Languages
• Articles
• Community and Support
• Contributing
• Mailing Lists
• Related Projects
• User Groups
• Cookbook Examples
• Accessing SQLServer using groovy
• Alternate Spring-Groovy-Integration
• Batch Image Manipulation
• command line groovy doc or methods lookup
• Compute distance from Google Earth Path (in .kml file)
• Convert SQL Result To XML
• Embedded Derby DB examples
• Embedding a Groovy Console in ...

PragPub #066, December 2014

The Pragmatic Bookshelf
PragPub: The Second Iteration
Issue #66, December 2014

IN THIS ISSUE
* Rothman and Lester on mentoring
* Marcus Blankenship on managing programmers
* Ron Hitchens on Resource Oriented Computing vs Object Oriented Computing
* Brian Sletten on Resource Oriented Computing vs Microservices
* Tom Geudens on Resource Oriented Computing
* Dan Wohlbruck on the C language
* Antonio Cangiano on new books for programmers

Contents

FEATURES

Your Object Model Sucks, by Ron Hitchens
The big idea in Object Oriented Programming was always messaging, not objects or classes. Resource Oriented Programming gets that right.

Microservices Is not the Answer, by Brian Sletten
Microservices has the right idea. It just doesn’t take it far enough.

Introducing Resource Oriented Computing, by Tom Geudens
There’s a program that’s been running for 25 years, that scales like nobody’s business, and that’s based on open protocols. Can your code measure up to that?

The Evolution of C, by Dan Wohlbruck
Dan continues his series on the history of programming languages with more on C.

DEPARTMENTS

On Tap ...

Resource-Oriented Computing® and NetKernel® 5

1060 Research Limited - NetKernel and Resource-Oriented Computing

1060 Research Limited Collection of Articles
Resource-Oriented Computing® and NetKernel® 5, v 1.1.1

1060, NetKernel, Resource Oriented Computing, ROC are respectively registered trademark and trademarks of 1060 Research Limited
2001-2013 © 1060 Research Limited

Simplicity is the ultimate sophistication.
Leonardo da Vinci

Most software today is very much like an Egyptian pyramid with millions of bricks piled on top of each other, with no structural integrity, but just done by brute force and thousands of slaves.
Dr. Alan Kay (father of OO programming)

This software transformation, whatever it is, is coming. It must come; because we simply cannot keep piling complexity upon complexity. We need some new organizing principle that revamps the very foundations of the way we think about software and opens up vistas that will take us into the 22nd century.
Robert Cecil Martin, aka “Uncle Bob”

In order to handle large systems over time, there needs to be a high level description of information...a language which allows the uniform representation of information. Algorithms is one type of information that has to do with computations, but there are other types. ...Computations need not be arranged in programs; they must be information, standing on their own, allowing the user to mix them at will, just like with any other type of information. ...

CS 162 Nachos Tutorial and Source Code

Operating System

Operating System Concepts and Techniques
M. Naghibzadeh, Professor of Computer Engineering
iUniverse, Inc. New York Lincoln Shanghai

Operating System Concepts and Techniques
Copyright © 2005 by Mahmoud Naghibzadeh

All rights reserved. No part of this book may be used or reproduced by any means, graphic, electronic, or mechanical, including photocopying, recording, taping or by any information storage retrieval system without the written permission of the publisher except in the case of brief quotations embodied in critical articles and reviews.

iUniverse books may be ordered through booksellers or by contacting:
iUniverse, 2021 Pine Lake Road, Suite 100, Lincoln, NE 68512
www.iuniverse.com
1-800-Authors (1-800-288-4677)

ISBN-13: 978-0-595-37597-4 (pbk)
ISBN-13: 978-0-595-81992-8 (ebk)
ISBN-10: 0-595-37597-9 (pbk)
ISBN-10: 0-595-81992-3 (ebk)

Printed in the United States of America

Contents
Preface
Chapter 1 Computer Hardware Organization
1.1 The Fetch-Execute Cycle
1.2 Computer Hardware Organization
1.3 Summary
1.4 Problems
Recommended References

Technical Paper, ROCAS v1.2 © 1060 Research Ltd

Technical Paper, ROCAS v1.2 © 1060 Research Ltd

Resource Oriented Computing for Adaptive Systems
Peter J. Rodgers, PhD
May 2015

Abstract
Today's software systems have a much shorter life span than other engineering artifacts. Code is brittle and system architectures are rigid and not robust to change. With the Web an alternative model of computing has taken shape, a model that allows for change on a small scale inside of a complex and large-scale system. The core abstraction behind the Web can be applied to the design of software systems. The abstraction in Resource Oriented Computing generalizes the Web abstraction to enable the most adaptable software systems and architectures that evolve with change.

1 Challenge
The second Law of Thermodynamics states that entropy will always increase. The challenge faced by long-lived resource adaptive systems is to fight entropy with the least possible expenditure of energy (physical, computational, financial).

2 Definitions
It is helpful to start by stating some definitions so that we may clarify concepts such as "stability" and "evolvability" as applied to adaptive computation systems. It will be shown that we can obtain a useful working understanding of stability and evolvability by considering computational state.[1] We require to maintain a conceptual distinction between a given logical abstract potential state and an instantaneous physical representation of the state.

Margin quote: "Myriad computational systems, both hardware and software, are organized as state-transition systems. Such a system evolves over time (or, computes) by continually changing state in response to one or more discrete stimuli (typically ...