Sparks Across the Gap: Essays by Megan E. Mericle, B.A.

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Songs by Artist

Reil Entertainment Songs by Artist Karaoke by Artist Title Title &, Caitlin Will 12 Gauge Address In The Stars Dunkie Butt 10 Cc 12 Stones Donna We Are One Dreadlock Holiday 19 Somethin' Im Mandy Fly Me Mark Wills I'm Not In Love 1910 Fruitgum Co Rubber Bullets 1, 2, 3 Redlight Things We Do For Love Simon Says Wall Street Shuffle 1910 Fruitgum Co. 10 Years 1,2,3 Redlight Through The Iris Simon Says Wasteland 1975 10, 000 Maniacs Chocolate These Are The Days City 10,000 Maniacs Love Me Because Of The Night Sex... Because The Night Sex.... More Than This Sound These Are The Days The Sound Trouble Me UGH! 10,000 Maniacs Wvocal 1975, The Because The Night Chocolate 100 Proof Aged In Soul Sex Somebody's Been Sleeping The City 10Cc 1Barenaked Ladies Dreadlock Holiday Be My Yoko Ono I'm Not In Love Brian Wilson (2000 Version) We Do For Love Call And Answer 11) Enid OS Get In Line (Duet Version) 112 Get In Line (Solo Version) Come See Me It's All Been Done Cupid Jane Dance With Me Never Is Enough It's Over Now Old Apartment, The Only You One Week Peaches & Cream Shoe Box Peaches And Cream Straw Hat U Already Know What A Good Boy Song List Generator® Printed 11/21/2017 Page 1 of 486 Licensed to Greg Reil Reil Entertainment Songs by Artist Karaoke by Artist Title Title 1Barenaked Ladies 20 Fingers When I Fall Short Dick Man 1Beatles, The 2AM Club Come Together Not Your Boyfriend Day Tripper 2Pac Good Day Sunshine California Love (Original Version) Help! 3 Degrees I Saw Her Standing There When Will I See You Again Love Me Do Woman In Love Nowhere Man 3 Dog Night P.S. -

G by Don Mueller

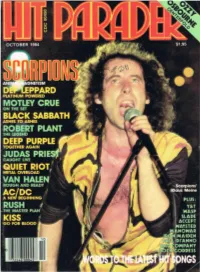

Grace Under Pressure Proves Canadian Trio Remain Masters Of Eclectic Metal. by Don Mueller Geddy Lee sat quietly in Rush's backstage dressing room transfixed by the tiny images on the screen before him. "Hey, it's almost time to go on stage," guitarist Alex Lifeson said, trying to rouse Geddy from his TV obsession. "Not now, not now," Lee shot back in annoyance. "It's the bottom of the ninth, and the Expos are down by one - do you really expect me to leave at a time like this?" Few things can draw the members of Rush away from playing their music, but in the case of Lee, a good baseball game is one of them. "We're so conservative it's sickening," said the hawk-nosed bassist/vocalist. "Most rock and roll bands are into drugs and groupies - we're into sports. There's something about a good baseball game that's very special. Baseball is a lot like the music we play. There's an entertainment value to it, but underneath everything there's a great deal of thought and planning that goes into what's going on. On stage we're like a team, we each have our positions and our specific responsibilities - I guess you could think of us as the Rush All-Stars." With the success of their latest album, Grace Under Pressure, Lee, Lifeson and drummer Neil Peart have again displayed the league-leading musical skills that, over the last decade, have continually made them candidates for the title of rock's Most Valuable Players. -

(Anesthesia) Pulling Teeth Metallica

(Anesthesia) Pulling Teeth Metallica (How Sweet It Is) To Be Loved By You Marvin Gaye (Legend of the) Brown Mountain Light Country Gentlemen (Marie's the Name Of) His Latest Flame Elvis Presley (Now and Then There's) A Fool Such As I Elvis Presley (You Drive Me) Crazy Britney Spears (You're My) Soul and Inspiration Righteous Brothers (You've Got) The Magic Touch Platters 1, 2 Step Ciara and Missy Elliott 1, 2, 3 Gloria Estefan 10,000 Angels Mindy McCready 100 Years Five for Fighting 100% Pure Love Crystal Waters 100% Pure Love (Club Mix) Crystal Waters 1‐2‐3 Len Barry 1234 Coolio 157 Riverside Avenue REO Speedwagon 16 Candles Crests 18 and Life Skid Row 1812 Overture Tchaikovsky 19 Paul Hardcastle 1979 Smashing Pumpkins 1985 Bowling for Soup 1999 Prince 19th Nervous Breakdown Rolling Stones 1B Yo‐Yo Ma 2 Become 1 Spice Girls 2 Minutes to Midnight Iron Maiden 2001 Melissa Etheridge 2001 Space Odyssey Vangelis 2012 (It Ain't the End) Jay Sean 21 Guns Green Day 2112 Rush 21st Century Breakdown Green Day 21st Century Digital Boy Bad Religion 21st Century Kid Jamie Cullum 21st Century Schizoid Man April Wine 22 Acacia Avenue Iron Maiden 24‐7 Kevon Edmonds 25 or 6 to 4 Chicago 26 Miles (Santa Catalina) Four Preps 29 Palms Robert Plant 30 Days in the Hole Humble Pie 33 Smashing Pumpkins 33 (acoustic) Smashing Pumpkins 3am Matchbox 20 3am Eternal The KLF 3x5 John Mayer 4 in the Morning Gwen Stefani 4 Minutes to Save the World Madonna w/ Justin Timberlake 4 Seasons of Loneliness Boyz II Men 40 Hour Week Alabama 409 Beach Boys 5 Shots of Whiskey -

Song List - by Song - Mr K Entertainment

Main Song List - By Song - Mr K Entertainment Title Artist Disc Track 02:00:00 AM Iron Maiden 1700 5 3 AM Matchbox 20 236 8 4:00 AM Our Lady Peace 1085 11 5:15 Who, The 167 9 3 Spears, Britney 1400 2 7 Prince & The New Power Generation 1166 11 11 Pope, Cassadee 1657 4 17 Cross Canadian Ragweed 803 12 22 Allen, Lily 1413 3 22 Swift, Taylor 1646 15 23 Mike Will Made It & Miley Cyrus 1667 16 33 Smashing Pumpkins 1662 11 45 Shinedown 1190 19 98.6 Keith 1096 4 99 Toto 1150 20 409 Beach Boys, The 989 7 911 Jean, Wyclef & Mary J. Blige 725 4 1215 Strokes 1685 12 1234 Feist 1125 12 1929 Deana Carter 1636 15 1959 Anderson, John 1416 7 1963 New Order 1313 1 1969 Stegall, Keith 1004 13 1973 Blunt, James 1294 16 1979 Smashing Pumpkins 820 4 1982 Estefan, Gloria 153 1 1982 Travis, Randy 367 5 1983 Neon Trees 1522 14 1984 Bowie, David 1455 14 1985 Bowling For Soup 670 5 1994 Aldean, Jason 1647 15 1999 Prince 182 9 Dec-43 Montgomery, John Michael 1715 4 1-2-3 Berry, Len 46 13 # Dream Lennon, John 1154 3 #1 Crush Garbage 215 12 #Selfie Chainsmokers 1666 6 Check Us Out Online at: www.AustinKaraoke.com (I Know) I'm Losing You Temptations 1199 5 (Love Is Like A) Heatwave Reeves, Martha And The Vandellas 1199 6 (The Angels Wanna Wear My) Red Shoes Costello, Elvis 1209 4 (Your Love Keeps Lifting Me) Higher And Higher Wilson, Jackie 1199 8 (You're My) Soul & Inspiration Righteous Brothers 963 7 (You're) Adorable Martin, Dean 1375 11 1 2 3 4 Feist 939 14 1 Luv E40 & Leviti 1499 9 1, 2 Step Ciara & Missy Elliott 746 5 1, 2, 3 Redlight 1910 Fruitgum Co. -

Robot Competition, Design of Labyrinth and RobotDemo. Supervisor: Juha Räty. Term and Year of Completion: Spring 2013. Pages: 85 + 8 Appendices

Alfonso Antonio Reyes Jaramillo ROBOT COMPETITION, DESIGN OF LABYRINTH AND ROBOTDEMO Alfonso Antonio Reyes Jaramillo Bachelor’s Thesis Spring 2013 Degree Programme in Information Technology Oulun seudun ammattikorkeakoulu ABSTRACT Oulun seudun ammattikorkeakoulu Degree Programme in Information Technology, Author: Alfonso Antonio Reyes Jaramillo Title of thesis: Robot Competition, Design of Labyrinth and RobotDemo Supervisor: Juha Räty Term and year of completion: Spring 2013 Pages: 85 + 8 appendices This thesis originated from a proposal by part-time teacher Juha Räty. The proposed theme was a robot competition, the design of a labyrinth track for the competition, and a robot that was named RobotDemo. The labyrinth model was based on the structure of a palace in an ancient pre-Columbian city in Peru. This structure is the source of the palace's L-shape, the U-shape of the courtyard, the rectangular shape of the second courtyard, and the labyrinth's pyramid. These architectural elements were divided into three areas. Specific rules were drawn up for the competition. These rules included instructions for teams and organizers. The rules also contained technical specifications for two robot categories. The regulations for awarding points were defined as well. Points were awarded to the robots that solved the labyrinth fastest. In addition, robots received a certain number of points for each task they completed. This system made it possible for a robot to win the competition even if it was not the fastest of all. The scoring system -

Expansions on the Book of Ezekiel Introduction This

Expansions on the Book of Ezekiel Introduction This document follows the same pattern as other “expansion” texts posted on the Lectio Divina Homepage relative to books of the Bible. That is to say, it presents the Book of Ezekiel to be read in accord with the practice of lectio divina whose single purpose is to dispose the reader to being in God’s presence. The word “expansion” means that the text is fleshed out with a certain liberty while at the same time remaining consistent with the original Hebrew, a practice not unlike what you’d find in a modern day yeshiva. There’s no attempt to provide information about the text or its author; that can be garnered elsewhere from reliable sources. Besides, inserting such information can be a distraction from reading Ezekiel in the spirit of lectio divina. One requirement for lectio divina as applied to the Book of Ezekiel is demanded, if you will. That consists in taking as much time as possible to ponder and to linger over a single word, phrase or sentence. In other words, the whole idea of a time limit or covering material is to be dismissed. Obviously this runs counter to the conventional way we read and process information. Adopting this slow-motion practice isn’t as easy as it seems, but even a small exposure to it makes you sensitive to the Book of Ezekiel as an aid to prayer. Apart from this approach, the text at hand has nothing to offer, really. As for reading it, anyone will discover quickly that it’s rather chopped up, not coherent as one would read a conventional text. -

Unit 12 Americans in Indochina

UNIT 12 AMERICANS IN INDOCHINA
CHAPTER 1 THE FRENCH IN INDOCHINA
CHAPTER 2 COMMUNISM, GUERRILLAS AND FALLING DOMINOES
CHAPTER 3 'SINK OR SWIM, WITH NGO DINH DIEM'
CHAPTER 4 FIGHTING AGAINST GUERRILLAS: THE STRATEGIC HAMLET PROGRAM
CHAPTER 5 EXIT NGO DINH DIEM AND THE GULF OF TONKIN INCIDENT
CHAPTER 6 FIGHTING A GUERRILLA WAR
CHAPTER 7 HOW MY LAI WAS PACIFIED
CHAPTER 8 THE TET OFFENSIVE
CHAPTER 9 VIETNAMIZATION
CHAPTER 10 THE WAR IS FINISHED
CHAPTER 11 WAS THE 'WHOLE THING A LIE' OR A 'NOBLE CRUSADE'
by Thomas Ladenburg, copyright, 1974, 1998, 2001, 2007 100 Brantwood Road, Arlington, MA 02476 781-646-4577 [email protected] Page -

Science & Technology Trends 2020-2040

Science & Technology Trends 2020-2040 Exploring the S&T Edge NATO Science & Technology Organization DISCLAIMER The research and analysis underlying this report and its conclusions were conducted by the NATO S&T Organization (STO) drawing upon the support of the Alliance’s defence S&T community, NATO Allied Command Transformation (ACT) and the NATO Communications and Information Agency (NCIA). This report does not represent the official opinion or position of NATO or individual governments, but provides considered advice to NATO and Nations’ leadership on significant S&T issues. D.F. Reding J. Eaton NATO Science & Technology Organization Office of the Chief Scientist NATO Headquarters B-1110 Brussels Belgium http:\www.sto.nato.int Distributed free of charge for informational purposes; hard copies may be obtained on request, subject to availability from the NATO Office of the Chief Scientist. The sale and reproduction of this report for commercial purposes is prohibited. Extracts may be used for bona fide educational and informational purposes subject to attribution to the NATO S&T Organization. Unless otherwise credited all non-original graphics are used under Creative Commons licensing (for original sources see https://commons.wikimedia.org and https://www.pxfuel.com/). All icon-based graphics are derived from Microsoft® Office and are used royalty-free. Copyright © NATO Science & Technology Organization, 2020 First published, March 2020 Foreword As the world changes, so does our Alliance. NATO adapts. We continue to work together as a community of like-minded nations, seeking to ... Science & Technology Trends: 2020-2040 provides an assessment of the impact of S&T advances over the next 20 years on the Alliance. -

A Tribute to Rush's Incomparable Drum Icon

A TRIBUTE TO RUSH’S INCOMPARABLE DRUM ICON THE WORLD’S #1 DRUM RESOURCE MAY 2020 ©2020 Drum Workshop, Inc. All Rights Reserved. From each and every one of us at DW, we’d simply like to say thank you. Thank you for the artistry. Thank you for the boundless inspiration. And most of all, thank you for the friendship. You will forever be in our hearts. Volume 44 • Number 5 CONTENTS Cover photo by Sayre Berman ON THE COVER 34 NEIL PEART MD pays tribute to the man who gave us inspiration, joy, pride, direction, and so very much more. 36 NEIL ON RECORD 44 THE EVOLUTION OF A LIVE RIG 48 NEIL PEART, WRITER 52 REMEMBERING NEIL 70 STYLE AND ANALYSIS: THE DEEP CUTS 74 FIRST PERSON: NEIL ON “MALIGNANT NARCISSISM” 26 UP AND COMING: JOSHUA HUMLIE OF WE THREE The drummer’s musical skills are outweighed only by his ambitions, which include exploring a real-time multi-instrumental approach. by Mike Haid 28 WHAT DO YOU KNOW ABOUT…CERRONE? Since the 1970s he’s sold more than 30 million records, and his playing and recording techniques influenced numerous dance and electronic music artists. by Martin Patmos LESSONS DEPARTMENTS 76 BASICS 4 AN EDITOR’S OVERVIEW “Rhythm Basics” Expanded, Part 3 by Andy Shoniker In His Image by Adam Budofsky 78 ROCK ’N’ JAZZ CLINIC 6 READERS’ PLATFORM Percussion Playing for Drummers, Part 2 by Damon Grant and Marcos Torres A Life Changed Forever 8 OUT NOW EQUIPMENT Patrick Hallahan on Vanessa Carlton’s Love Is an Art 12 PRODUCT CLOSE-UP 10 ON TOUR WFLIII Three-Piece Drumset and 20 IN THE STUDIO Peter Anderson with the Ocean Blue Matching Snare Drummer/Producer Elton Charles Doc Sweeney Classic Collection 84 CRITIQUE Snares 80 NEW AND NOTABLE Sabian AAX Brilliant Thin Crashes 88 BACK THROUGH THE STACK and Ride and 14" Medium Hi-Hats Billy Cobham, August–September 1979 Gibraltar GSSVR Stealth Side V Rack AN EDITOR’S OVERVIEW In His Image Founder Ronald Spagnardi 1943–2003 Role models are a tricky thing. -

How to Build a Combat Robot V1.2.Pdf

1 Contents page 1. Cover 2. Contents page 3. Introduction, disclaimer, safety and weight categories 4. Competition rules, advice to teachers and useful websites Designing 5. Standard robot designs 6. General design advice and recommended CAD software Building – Electronics 7. General wiring advice and ESCs 8. Removable links and remote control 9. Wiring diagrams 10. Relays and fuses 11. LiPo batteries and safety 12. LiPo batteries, safety and alternative battery types Building – Mechanical hardware 13. Drive and weapon motors 14. Power transfer methods Building – Chassis & armour 15. Robot layout and suitable build materials Misc 16. Competitions and troubleshooting 17. Shopping list 18. Glossary 2 Introduction This guide is designed to give someone interested in building a combat robot a good foundation of knowledge to safely take part in this hobby. The information is suitable for an enthusiastic builder but has been written for teachers looking to run a combat robotics club in a school context; some of the information may therefore not be required by the individual builder. Combat robotics is an engaging hobby that stretches competitors’ abilities in problem solving, engineering, design, CAD, fabrication, electronics and much more. As a vehicle for classroom engagement the learning opportunities are vast: maths, physics, engineering, materials science, teamwork and time management are all skills required to successfully build a combat robot. This guide is not exhaustive; it covers the key information needed to safely design and build a robot, but additional research will usually be required throughout a build. Hopefully with this guide, and a few basic workshop tools, you and your students will have the satisfaction of designing and building your own combat robot. -

1 “I Grew up Loving Cars and the Southern California Car Culture. My Dad Was a Parts Manager at a Chevrolet Dealership, So V

“I grew up loving cars and the Southern California car culture. My dad was a parts manager at a Chevrolet dealership, so ‘Cars’ was very personal to me — the characters, the small town, their love and support for each other and their way of life. I couldn’t stop thinking about them. I wanted to take another road trip to new places around the world, and I thought a way into that world could be another passion of mine, the spy movie genre. I just couldn’t shake that idea of marrying the two distinctly different worlds of Radiator Springs and international intrigue. And here we are.” — John Lasseter, Director ABOUT THE PRODUCTION Pixar Animation Studios and Walt Disney Studios are off to the races in “Cars 2” as star racecar Lightning McQueen (voice of Owen Wilson) and his best friend, the incomparable tow truck Mater (voice of Larry the Cable Guy), jump-start a new adventure to exotic new lands stretching across the globe. The duo are joined by a hometown pit crew from Radiator Springs when they head overseas to support Lightning as he competes in the first-ever World Grand Prix, a race created to determine the world’s fastest car. But the road to the finish line is filled with plenty of potholes, detours and bombshells when Mater is mistakenly ensnared in an intriguing escapade of his own: international espionage. Mater finds himself torn between assisting Lightning McQueen in the high-profile race and “towing” the line in a top-secret mission orchestrated by master British spy Finn McMissile (voice of Michael Caine) and the stunning rookie field spy Holley Shiftwell (voice of Emily Mortimer). -

A Brief History of Robotics

A Brief History of Robotics ~ 350 B.C. The brilliant Greek mathematician, Archytas ('ahr 'ky tuhs') of Tarentum builds a mechanical bird dubbed "the Pigeon" that is propelled by steam. It serves as one of history’s earliest studies of flight, not to mention probably the first model airplane. ~ 322 B.C. The Greek philosopher Aristotle writes... “If every tool, when ordered, or even of its own accord, could do the work that befits it... then there would be no need either of apprentices for the master workers or of slaves for the lords.” ...hinting how nice it would be to have a few robots around. ~ 200 B.C. The Greek inventor and physicist Ctesibius ('ti sib ee uhs') of Alexandria designs water clocks that have movable figures on them. Water clocks are a big breakthrough for timepieces. Up until then the Greeks used hourglasses that had to be turned over after all the sand ran through. Ctesibius's invention changed this because it measured time as a result of the force of water falling through it at a constant rate. In general, the Greeks were fascinated with automata of all kinds, often using them in theater productions and religious ceremonies. 1495 Leonardo da Vinci designs a mechanical device that looks like an armored knight. The mechanisms inside "Leonardo's robot" are designed to make the knight move as if there were a real person inside. Inventors in medieval times often built machines like "Leonardo's robot" to amuse royalty. 1738 Jacques de Vaucanson begins building automata in Grenoble, France. He builds three in all.