The Eighteenth-Century Playbill at Scale


Recommended publications

-

John Dryden and the Late 17th Century Dramatic Experience Lecture 16 (C) by Asher Ashkar Gohar 1 Credit Hr

JOHN DRYDEN AND THE LATE 17TH CENTURY DRAMATIC EXPERIENCE LECTURE 16 (C) BY ASHER ASHKAR GOHAR 1 CREDIT HR. JOHN DRYDEN (1631 – 1700) HIS LIFE: John Dryden was an English poet, literary critic, translator, and playwright who was made England's first Poet Laureate in 1668. He is seen as dominating the literary life of Restoration England to such a point that the period came to be known in literary circles as the “Age of Dryden”. The son of a country gentleman, Dryden grew up in the country. When he was 11 years old the Civil War broke out. Both his father’s and mother’s families sided with Parliament against the king, but Dryden’s own sympathies in his youth are unknown. About 1644 Dryden was admitted to Westminster School, where he received a predominantly classical education under the celebrated Richard Busby. His easy and lifelong familiarity with classical literature begun at Westminster later resulted in idiomatic English translations. In 1650 he entered Trinity College, Cambridge, where he took his B.A. degree in 1654. What Dryden did between leaving the university in 1654 and the Restoration of Charles II in 1660 is not known with certainty. In 1659 his contribution to a memorial volume for Oliver Cromwell marked him as a poet worth watching. His “heroic stanzas” were mature, considered, sonorous, and sprinkled with those classical and scientific allusions that characterized his later verse. This kind of public poetry was always one of the things Dryden did best. On December 1, 1663, he married Elizabeth Howard, the youngest daughter of Thomas Howard, 1st earl of Berkshire. -

Aphra Behn and the Roundheads Author(s): Kimberly Latta Source: Journal for Early Modern Cultural Studies, Spring/Summer 2004, Vol

Aphra Behn and the Roundheads Author(s): Kimberly Latta Source: Journal for Early Modern Cultural Studies, Spring/Summer 2004, Vol. 4, No. 1, Women Writers of the Eighteenth Century (Spring/Summer 2004), pp. 1-36 Published by: University of Pennsylvania Press Stable URL: https://www.jstor.org/stable/27793776 JEMCS 4.1 (Spring/Summer 2004) Aphra Behn and the Roundheads Kimberly Latta In a secret life I was a Roundhead general.1 The unacknowledged fact is that Aphra Behn frequently identified herself as a prophet. In the dedicatory epistle to The Roundheads (1682), for example, she begged the privileges of the "Prophets ... of old," to predict the future and admonish the populace. To the newly ascended James II she boasted, "Long with Prophetick Fire, Resolved and Bold,/ Your Glorious FATE and FORTUNE I foretold."2 When the Whigs drove James from power and installed William of Orange in his place, she represented herself standing mournfully, "like the Excluded Prophet" on the "Forsaken Barren Shore."3 In these and other instances, Behn clearly and consciously drew upon a long-standing tradition in English letters of associating poets with prophets. -

Katherine Philips and the Discourse of Virtue

https://theses.gla.ac.uk/ Theses Digitisation: https://www.gla.ac.uk/myglasgow/research/enlighten/theses/digitisation/ This is a digitised version of the original print thesis. Copyright and moral rights for this work are retained by the author A copy can be downloaded for personal non-commercial research or study, without prior permission or charge This work cannot be reproduced or quoted extensively from without first obtaining permission in writing from the author The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the author When referring to this work, full bibliographic details including the author, title, awarding institution and date of the thesis must be given Enlighten: Theses https://theses.gla.ac.uk/ [email protected] Katherine Philips and the Discourse of Virtue Tracy J. Byrne Thesis submitted for the degree of Ph.D. University of Glasgow Department of English Literature March 2002 This copy of the thesis has been supplied on condition that anyone who consults it is understood to recognise that its copyright rests with the author and that no quotation from the thesis, nor any information derived therefrom, may be published without the author's prior written consent. ProQuest Number: 10647853 All rights reserved INFORMATION TO ALL USERS The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. -

OROONOKO; OR THE ROYAL SLAVE. By Aphra Behn (1688)

1 OROONOKO; OR THE ROYAL SLAVE. By Aphra Behn (1688) INTRODUCTION. THE tale of Oroonoko, the Royal Slave is indisputedly Mrs. Behn’s masterpiece in prose. Its originality and power have singled it out for a permanence and popularity none of her other works attained. It is vivid, realistic, pregnant with pathos, beauty, and truth, and not only has it so impressed itself upon the readers of more than two centuries, but further, it surely struck a new note in English literature and one which was re-echoed far and wide. It has been said that ‘Oroonoko is the first emancipation novel’, and there is no little acumen in this remark. Certainly we may absolve Mrs. Behn from having directly written with a purpose such as animated Mrs. Harriet Beecher Stowe’s Uncle Tom’s Cabin; but none the less her sympathy with the oppressed blacks, her deep emotions of pity for outraged humanity, her anger at the cruelties of the slave-driver aye ready with knout or knife, are manifest in every line. Beyond the intense interest of the pure narrative we have passages of a rhythm that is lyric, exquisitely descriptive of the picturesque tropical scenery and exotic vegetations, fragrant and luxuriant; there are intimate accounts of adventuring and primitive life; there are personal touches which lend a colour only personal touches can, as Aphara tells her prose-epic of her Superman, Cæsar the slave, Oroonoko the prince. EPISTLE DEDICATORY. 2 TO THE RIGHT HONOURABLE THE LORD MAITLAND. This Epistle Dedicatory was printed as an Appendix My Lord, Since the World is grown so Nice and Critical upon Dedications, and will Needs be Judging the Book by the Wit of the Patron; we ought, with a great deal of Circumspection to chuse a Person against whom there can be no Exception; and whose Wit and Worth truly Merits all that one is capable of saying upon that Occasion. -

Jane Milling

ORE Open Research Exeter TITLE ‘“For Without Vanity I’m Better Known”: Restoration Actors and Metatheatre on the London Stage.’ AUTHORS Milling, Jane JOURNAL Theatre Survey DEPOSITED IN ORE 18 March 2013 This version available at http://hdl.handle.net/10036/4491 COPYRIGHT AND REUSE Open Research Exeter makes this work available in accordance with publisher policies. A NOTE ON VERSIONS The version presented here may differ from the published version. If citing, you are advised to consult the published version for pagination, volume/issue and date of publication Theatre Survey 52:1 (May 2011) © American Society for Theatre Research 2011 doi:10.1017/S0040557411000068 Jane Milling “FOR WITHOUT VANITY, I’M BETTER KNOWN”: RESTORATION ACTORS AND METATHEATRE ON THE LONDON STAGE Prologue, To the Duke of Lerma, Spoken by Mrs. Ellen[Nell], and Mrs. Nepp. NEPP: How, Mrs. Ellen, not dress’d yet, and all the Play ready to begin? EL[LEN]: Not so near ready to begin as you think for. NEPP: Why, what’s the matter? ELLEN: The Poet, and the Company are wrangling within. NEPP: About what? ELLEN: A prologue. NEPP: Why, Is’t an ill one? NELL[ELLEN]: Two to one, but it had been so if he had writ any; but the Conscious Poet with much modesty, and very Civilly and Sillily—has writ none.... NEPP: What shall we do then? ’Slife let’s be bold, And speak a Prologue— NELL[ELLEN]: —No, no let us Scold.1 When Samuel Pepys heard Nell Gwyn2 and Elizabeth Knipp3 deliver the prologue to Robert Howard’s The Duke of Lerma, he recorded the experience in his diary: “Knepp and Nell spoke the prologue most excellently, especially Knepp, who spoke beyond any creature I ever heard.”4 By 20 February 1668, when Pepys noted his thoughts, he had known Knipp personally for two years, much to the chagrin of his wife. -

BLACK LONDON Life Before Emancipation

BLACK LONDON Life before Emancipation GRETCHEN HOLBROOK GERZINA BLACK LONDON Other books by the author Carrington: A Life BLACK LONDON Life before Emancipation Gretchen Gerzina dartmouth college library Hanover Dartmouth College Library https://www.dartmouth.edu/~library/digital/publishing/ © 1995 Gretchen Holbrook Gerzina All rights reserved First published in the United States in 1995 by Rutgers University Press, New Brunswick, New Jersey First published in Great Britain in 1995 by John Murray (Publishers) Ltd. The Library of Congress cataloged the paperback edition as: Gerzina, Gretchen. Black London: life before emancipation / Gretchen Holbrook Gerzina p. cm. Includes bibliographical references and index ISBN 0-8135-2259-5 (alk. paper) 1. Blacks—England—London—History—18th century. 2. Africans— England—London—History—18th century. 3. London (England)— History—18th century. I. title. DA676.9.B55G47 1995 305.896´0421´09033—dc20 95-33060 CIP To Pat Kaufman and John Stathatos Contents Illustrations ix Acknowledgements xi 1. Paupers and Princes: Repainting the Picture of Eighteenth-Century England 1 2. High Life below Stairs 29 3. What about Women? 68 4. Sharp and Mansfield: Slavery in the Courts 90 5. The Black Poor 133 6. The End of English Slavery 165 Notes 205 Bibliography 227 Index Illustrations (between pages 116 and 111) 1. 'Heyday! is this my daughter Anne'. S.H. Grimm, del. Published 14 June 1771 in Drolleries, p. 6. Courtesy of the Print Collection, Lewis Walpole Library, Yale University. 2. -

The Transatlantic Slave Trade and the Creation of the English Weltanschauung, 1685-1710

The Transatlantic Slave Trade and the Creation of the English Weltanschauung, 1685-1710 James Buckwalter James Buckwalter, a member of Phi Alpha Theta, is a senior majoring in History with a Secondary Education Teaching Certificate from Tinley Park, Illinois. He wrote this paper for an independent study course with Dr. Key during the fall of 2008. At the turn of the eighteenth century, the English public was confronted with numerous and conflicting interpretations of Africans, slavery, and the slave trade. On the one hand, there were texts that glorified the institution of slavery. Gabriel de Brémond’s The Happy Slave, which was translated and published in London in 1686, tells of a Roman, Count Alexander, who is captured off the coast of Tunis by “barbarians,” but is soon enlightened to the positive aspects of slavery, such as, being “lodged in a handsome apartment, where the Baffa’s Chyrurgions searched his Wounds: And…he soon found himself better.”58 On the other hand, Bartolomé de las Casas’ Popery truly display'd in its bloody colours (written in 1552, but was still being published in London in 1689), displays slavery in the most negative light. De las Casas chastises the Spaniards’ “bloody slaughter and destruction of men,” condemning how they “violently forced away Women and Children to make them slaves, and ill-treated them, consuming and wasting their food.”59 Moreover, Thomas Southerne’s adaptation of Aphra Behn’s Oroonoko in 1699 displays slavery in a contradictory light. Southerne condemns Oroonoko’s capture as a “tragedy,” but like Behn’s version, Oroonoko’s royalty complicates the matter, eventually causing the author to show sympathy for the enslaved African prince. -

Spectacular Disappearances: Celebrity and Privacy, 1696-1801

Revised Pages Spectacular Disappearances Celebrity and Privacy, 1696–1801 Julia H. Fawcett University of Michigan Press Ann Arbor Copyright © 2016 by Julia H. Fawcett All rights reserved This book may not be reproduced, in whole or in part, including illustrations, in any form (beyond that copying permitted by Sections 107 and 108 of the U.S. Copyright Law and except by reviewers for the public press), without written permission from the publisher. Published in the United States of America by the University of Michigan Press Manufactured in the United States of America Printed on acid-free paper 2019 2018 2017 2016 4 3 2 1 A CIP catalog record for this book is available from the British Library. ISBN 978-0-472-11980-6 (hardcover : alk. paper) ISBN 978-0-472-12180-9 (e-book) Acknowledgments I have often wondered if my interest in authors who wrote themselves in order to obscure themselves stems from my own anxieties about the permanence of the printed word—my own longing (that I imagine everyone shares?) for words that linger on the page for a moment only and then—miraculously, mercifully—disappear before their inadequacies can be exposed. I think I will always harbor this anxiety, but I have been blessed with mentors, colleagues, friends, and family members who have known how to couch their criticism in kindness and without whom I could never have summoned the courage to keep this work up or to set these words down. The germs for this book’s ideas began many years ago, when, as an undergraduate at Harvard, I stumbled somewhat accidentally (to fulfill a requirement) into Lynn Festa’s course on “Sex and Sensibility during the Enlightenment.” Thank goodness for requirements. -

Successful Pirates and Capitalist Fantasies: Charting Fictional Representations of Eighteenth- and Early Nineteenth-Century English Fortune Hunters

Louisiana State University LSU Digital Commons LSU Historical Dissertations and Theses Graduate School 2000 Successful Pirates and Capitalist Fantasies: Charting Fictional Representations of Eighteenth- And Early Nineteenth -Century English Fortune Hunters. Robert Gordon Dryden Louisiana State University and Agricultural & Mechanical College Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_disstheses Recommended Citation Dryden, Robert Gordon, "Successful Pirates and Capitalist Fantasies: Charting Fictional Representations of Eighteenth- And Early Nineteenth -Century English Fortune Hunters." (2000). LSU Historical Dissertations and Theses. 7191. https://digitalcommons.lsu.edu/gradschool_disstheses/7191 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Historical Dissertations and Theses by an authorized administrator of LSU Digital Commons. For more information, please contact [email protected]. INFORMATION TO USERS This manuscript has been reproduced from the microfilm master. UMI films the text directly from the original or copy submitted. Thus, some thesis and dissertation copies are in typewriter face, while others may be from any type of computer printer. The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print colored or poor quality illustrations and photographs, print bleedthrough, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send UMI a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. 
Oversize materials (e.g., maps, drawings, charts) are reproduced by sectioning the original, beginning at the upper left-hand corner and continuing from left to right in equal sections with small overlaps. -

Visible Passion and an Invisible Woman: Theatrical Oroonoko

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Kitami Institute of Technology Repository Visible Passion and an Invisible Woman: Theatrical Oroonoko Author: Fukushi Wataru Journal or publication title: 人間科学研究 Volume: 8 Page range: 1-23 Year: 2012-03 URL: http://id.nii.ac.jp/1450/00007528/ (Article) Visible Passion and an Invisible Woman: Theatrical Oroonoko Wataru Fukushi * Abstract This paper aims to explain the dynamics behind the whitening of Imoinda in Thomas Southerne’s Oroonoko. We could argue that a particular mindset which involved the whitening of Imoinda can be detected by observing the theatricality of the play. The text of Oroonoko aims to visualize the inner passions of players through their motions, based on the acting theory of that time. Those passions were the key factor in bringing the audience and the players together. Through the interaction among the theatre company and the theatregoers, a white female body was required to share a ‘universal’ passion. When the dramatic adaptation of Oroonoko by Thomas Southerne was premiered at the Drury Lane Theatre in November 1695, one significant change was carried out: the heroine, Imoinda, was no longer a black African woman, but a white woman. Southerne’s Oroonoko achieved great popularity among contemporary audiences and it survived as a repertoire for almost the entire eighteenth century. As we will see later, critics have tried to answer a simple but difficult question, ‘why was Imoinda made into a white?’. This question also lies at the center of this paper. We can see a passing reference to Southerne’s Oroonoko in a short satire called ‘The Tryal of Skill: or, A New Session of the Poets. -

GARRICKS JUBILEE.Pdf

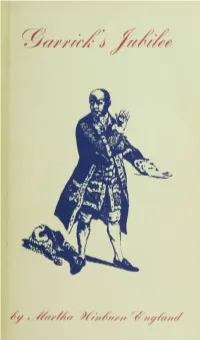

A comprehensive—and occasionally hilarious—study of the three-day festival staged by David Garrick, the brilliant actor and manager of the Drury Lane Theatre in London, at Stratford-upon-Avon, in September, 1769. This was the first Shakespeare festival to engage national interest, and although its critics vilified it as a fiasco and a monumental example of bad taste, its defenders thought it a glorious occasion. James Boswell, a member of the latter group, described it as being "not a piece of farce . . . but an elegant and truly classical celebration of the memory of Shakespeare." Reproduced on the stage in varying moods of glorification and satire, the Jubilee ultimately became entangled in the very threads of English life. The combination of Garrick and Stratford produced a catalyst (Continued on back flap) Garrick's Jubilee Garrick's Jubilee By Martha Winburn England Ohio State University Press Copyright © 1964 by the Ohio State University Press All Rights Reserved Library of Congress Catalogue Card Number: 64-17109 Chapters II and V and portions of Chapters I and III are reprinted from the author's Garrick and Stratford, © 1962, 1964 by The New York Public Library. They are used here by permission of the publisher. A portion of Chapter VI first appeared in Bulletin of the New York Public Library, LXIII, No. 3 (March, 1959); and a portion of Chapter VIII in Shakespeare Survey, No. 9 (1956). They are reprinted here by permission of the publishers. To Harry Levin Preface THE LIBRARIES of Harvard University, Yale University, and Queens College, the Folger Shakespeare Library, the New York Public Library, and the archives of the Shakespeare Birthplace Trust have made my work possible. -

Cast-Mistresses: the Widow Figure in Oroonoko

Chapter 4 Cast-Mistresses: The Widow Figure in Oroonoko Kristina Bross and Kathryn Rummell Aphra Behn's prose narrative Oroonoko seems bent on confusion. A mix of settings, times, and narrative strategies, the story begins with a brief description of Surinam, the primary setting, moves to West Africa to detail the background of Oroonoko as a young, noble warrior in Coramantien, and then returns to its New World setting. Fictional, autobiographical, and historic elements mingle in a tale that contains both heroic and bathetic sentiments, recounted memoir-style by an older narrator and through as-told-to stories of the tale's main character. By contrast, Thomas Southerne's 1696 revision of Behn's prose narrative into a five-act drama (also titled Oroonoko) is a much more orderly retelling of the story. Its only major complication, a split plot, resolves cleanly into clearly tragic and clearly comic conclusions. Southerne's neatly-staged play avoids Behn's eclecticism, in part by confining the West African scenes to the background and eliminating any staging of Coramantien altogether. Many of Southerne's changes have been well documented in recent scholarship - his transformation of Behn's narrator into the cross-dressing Charlotte Welldon and the 'whitening' of Imoinda in particular have been the focus of excellent commentary.1 Surprisingly little attention has been paid, however, to an equally striking departure from his source: Southerne's introduction of a stock character from Restoration drama, the rich, man-hungry widow.2 For readers familiar only with Behn's original of Oroonoko - and even for some who know both works - Southerne's Widow Lackitt may come as a surprise; there seems to be no parallel character in Behn's work to this conventional stage widow.