Dissertation Submitted in Partial Fulfillment of the Requirements for the Degree Of


Recommended publications


X3DOM – Declarative (X)3D in HTML5

X3DOM – Declarative (X)3D in HTML5: Introduction and Tutorial. Yvonne Jung, Fraunhofer IGD, Darmstadt, Germany. www.igd.fraunhofer.de/vcst © Fraunhofer IGD. 3D Information inside the Web • Websites (have) become Web applications • Increasing interest in 3D for: product presentation; visualization of abstract information (e.g. time lines); enriching the experience of Cultural Heritage data; enhancing user experience with more sophisticated visualizations (example, Coform3D: line-up of scanned historic 3D objects) • Today: Adobe Flash-based site with videos • Tomorrow: immersive 3D inside browsers. OpenGL and GLSL in the Web: WebGL • JavaScript binding for OpenGL ES 2.0 in the Web browser → Firefox, Chrome, Safari, Opera • Only GLSL shader based, no fixed-function pipeline any more • No variables from GL state • No matrix stack, etc. • HTML5 <canvas> element provides 3D rendering context • gl = canvas.getContext('webgl'); • API calls via GL object • X3D via X3DOM framework • http://www.x3dom.org. X3DOM – Declarative (X)3D in HTML5 • Allows utilizing well-known JavaScript and DOM infrastructure for 3D • Brings together both declarative content design as known from web design and "old-school" imperative approaches known from game engine development <html> <body> <h1>Hello X3DOM World</h1> <x3d> <scene> <shape> <box></box> </shape> </scene> </x3d> </body> </html> • X3DOM := X3D + DOM • DOM-based integration framework for declarative 3D graphics

Understanding Javascript Event-Based Interactions

Understanding JavaScript Event-Based Interactions. Saba Alimadadi, Sheldon Sequeira, Ali Mesbah, Karthik Pattabiraman. Motivation • JavaScript: event driven, dynamic, asynchronous • Difficult to understand the dynamic behavior and the control flow: lower-level events and their interactions. Challenge 1: Event Propagation [Figure: DOM tree (html, head, body, div, table, ...) in which a user click triggers handlers on several elements.] Challenge 2: Asynchronous Events [Figure: timeline of interleaved events: timeout for page expiry, server request and response for login, user logs in, view gallery, view slideshow, further server requests and responses, timeout for next image, timeout callbacks.] Challenge 3: DOM State function submissionHandler(e) { $('#regMsg').html("Submitted!"); var email = $('#email').val(); if (isEmailValid(email)) { informServer(email); $('#submitBtn').attr("disabled", true); } } function informServer(email)
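The event propagation named in Challenge 1 can be illustrated with a small model. The following is a minimal sketch, not the authors' tool and not a browser API: `makeNode`, `dispatch`, and the three-node tree are hypothetical names chosen for illustration, and only the bubbling phase (target up to root) is modelled.

```javascript
// Minimal model of DOM-style event bubbling over a tree of plain objects.
// makeNode() and dispatch() are hypothetical helpers, not browser APIs.
function makeNode(name, parent = null) {
  return { name, parent, handlers: [] };
}

function dispatch(target, type, log) {
  // Walk from the target up to the root, running each node's handlers.
  for (let node = target; node !== null; node = node.parent) {
    for (const handler of node.handlers) {
      handler({ type, target, currentTarget: node }, log);
    }
  }
  return log;
}

// Hypothetical tree: body > div > button.
const body = makeNode('body');
const div = makeNode('div', body);
const button = makeNode('button', div);

// One shared handler that records which node it ran on.
const record = (event, log) =>
  log.push(`${event.currentTarget.name} saw ${event.type} on ${event.target.name}`);
body.handlers.push(record);
div.handlers.push(record);
button.handlers.push(record);

const trace = dispatch(button, 'click', []);
console.log(trace.join('\n'));
```

A single click on the innermost node runs three handlers, which is one reason the control flow is hard to follow by reading the handlers in isolation.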

Practical Initialization Race Detection for Javascript Web Applications

Practical Initialization Race Detection for JavaScript Web Applications. CHRISTOFFER QUIST ADAMSEN, Aarhus University, Denmark; ANDERS MØLLER, Aarhus University, Denmark; FRANK TIP, Northeastern University, USA. Event races are a common source of subtle errors in JavaScript web applications. Several automated tools for detecting event races have been developed, but experiments show that their accuracy is generally quite low. We present a new approach that focuses on three categories of event race errors that often appear during the initialization phase of web applications: form-input-overwritten errors, late-event-handler-registration errors, and access-before-definition errors. The approach is based on a dynamic analysis that uses a combination of adverse and approximate execution. Among the strengths of the approach are that it does not require browser modifications, expensive model checking, or static analysis. In an evaluation on 100 widely used websites, our tool InitRacer reports 1,085 initialization races, while providing informative explanations of their causes and effects. A manual study of 218 of these reports shows that 111 of them lead to uncaught exceptions and at least 47 indicate errors that affect the functionality of the websites. CCS Concepts: • Software and its engineering → Software testing and debugging; Additional Key Words and Phrases: event race detection, JavaScript, dynamic analysis. ACM Reference Format: Christoffer Quist Adamsen, Anders Møller, and Frank Tip. 2017. Practical Initialization Race Detection for JavaScript Web Applications. Proc. ACM Program. Lang. 1, OOPSLA, Article 66 (October 2017), 22 pages. https://doi.org/10.1145/3133890 1 INTRODUCTION It is well known that event races are the cause of many errors in JavaScript web applications [Steen 2009].
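To make the late-event-handler-registration category concrete, here is a minimal sketch using the standard `EventTarget` and `Event` interfaces. It is not InitRacer's analysis; it only reproduces the symptom under the assumption that a `load` event fires before the handler is attached. The names `resource`, `seen`, and `missedFirstLoad` are illustrative.

```javascript
// Sketch of a late-event-handler-registration race with the standard EventTarget.
// "resource" stands in for any object that fires events during initialization.
const resource = new EventTarget();
const seen = [];

// The "load" event fires before any listener exists, so nothing observes it.
resource.dispatchEvent(new Event('load'));

// The handler is registered too late to see the first event.
resource.addEventListener('load', () => seen.push('load handled'));
const missedFirstLoad = seen.length === 0;

// A later dispatch is observed as expected.
resource.dispatchEvent(new Event('load'));
console.log({ missedFirstLoad, seen });
```

In a real page the ordering depends on network and parsing timing, so the same code can work on one load and fail on the next.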

Web Components in Action MEAP

MEAP Edition Manning Early Access Program Web Components in Action Version 2 Copyright 2018 Manning Publications For more information on this and other Manning titles go to www.manning.com ©Manning Publications Co. We welcome reader comments about anything in the manuscript - other than typos and other simple mistakes. These will be cleaned up during production of the book by copyeditors and proofreaders. https://forums.manning.com/forums/web-components-in-action Welcome. Thank you very much for purchasing the MEAP for Web Components in Action. I’ve been speaking and blogging for over a decade now, and the common thread throughout has been that there hasn’t really been a common thread. I get excited about new technologies and techniques, but ultimately move to the next new thing. Web Components have been a bit different for me. I spoke at a few conferences and wrote some blog posts about them, and I did move on to other new and exciting things, but the difference here is that I never stopped building with Web Components. They’ve been a staple of my web development workflow for four years now. Like many web developers, I too have cycled through many frameworks and libraries that help with application development. Most were really good! It’s easy to laugh at a three or four year old framework years later, but it’s been very interesting to see how we as web developers progress as we try to make better and better applications with better and better tools. I’ve also been fortunate enough to use many other programming languages and platforms as well.

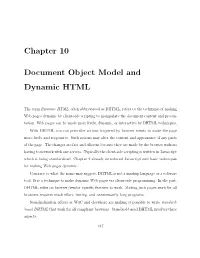

Chapter 10 Document Object Model and Dynamic HTML

Chapter 10 Document Object Model and Dynamic HTML. The term Dynamic HTML, often abbreviated as DHTML, refers to the technique of making Web pages dynamic by client-side scripting to manipulate the document content and presentation. Web pages can be made more lively, dynamic, or interactive by DHTML techniques. With DHTML you can prescribe actions triggered by browser events to make the page more lively and responsive. Such actions may alter the content and appearance of any parts of the page. The changes are fast and efficient because they are made by the browser without having to network with any servers. Typically the client-side scripting is written in JavaScript, which is being standardized. Chapter 9 already introduced JavaScript and basic techniques for making Web pages dynamic. Contrary to what the name may suggest, DHTML is not a markup language or a software tool. It is a technique to make dynamic Web pages via client-side programming. In the past, DHTML relied on browser/vendor-specific features to work. Making such pages work for all browsers requires much effort, testing, and unnecessarily long programs. Standardization efforts at W3C and elsewhere are making it possible to write standard-based DHTML that works for all compliant browsers. Standard-based DHTML involves three aspects: 1. JavaScript, for cross-browser scripting (Chapter 9) 2. Cascading Style Sheets (CSS), for style and presentation control (Chapter 6) 3. Document Object Model (DOM), for a uniform programming interface to access and manipulate the Web page as a document. [Figure 10.1: DOM Compliant Browser (Browser, JavaScript, DOM API, XHTML Document)] When these three aspects are combined, you get the ability to program changes in Web pages in reaction to user or browser generated events, and therefore to make HTML pages more dynamic.

A Trusted Infrastructure for Symbolic Analysis of Event-Driven Web

A Trusted Infrastructure for Symbolic Analysis of Event-Driven Web Applications. Gabriela Sampaio, Imperial College London, UK; José Fragoso Santos, INESC-ID/Instituto Superior Técnico, Universidade de Lisboa, Portugal, and Imperial College London, UK; Petar Maksimović, Imperial College London, UK; Philippa Gardner, Imperial College London, UK. Abstract: We introduce a trusted infrastructure for the symbolic analysis of modern event-driven Web applications. This infrastructure consists of reference implementations of the DOM Core Level 1, DOM UI Events, JavaScript Promises and the JavaScript async/await APIs, all underpinned by a simple Core Event Semantics which is sufficiently expressive to describe the event models underlying these APIs. Our reference implementations are trustworthy in that three follow the appropriate standards line-by-line and all are thoroughly tested against the official test-suites, passing all the applicable tests. Using the Core Event Semantics and the reference implementations, we develop JaVerT.Click, a symbolic execution tool for JavaScript that, for the first time, supports reasoning about JavaScript programs that use multiple event-related APIs. We demonstrate the viability of JaVerT.Click by proving both the presence and absence of bugs in real-world JavaScript code. 2012 ACM Subject Classification: Software and its engineering → Formal software verification; Software and its engineering → Software testing and debugging. Keywords and phrases: Events, DOM, JavaScript, promises, symbolic execution, bug-finding. Digital Object Identifier: 10.4230/LIPIcs.ECOOP.2020.28. Acknowledgements: Fragoso Santos, Gardner, and Maksimović were partially supported by the EPSRC Programme Grant ‘REMS: Rigorous Engineering for Mainstream Systems’ (EP/K008528/1) and the EPSRC Fellowship ‘VetSpec: Verified Trustworthy Software Specification’ (EP/R034567/1).

Web Browser Access to Cryptographic Hardware

Universidade do Minho, Escola de Engenharia. Leonel João Fernandes Braga. Web Browser Access to Cryptographic Hardware. Master's Thesis, Master's in Informatics Engineering. Work carried out under the supervision of Doctor Vítor Francisco Fonte; company supervision by Engineer Renato Portela. October 2012. Acknowledgments: I could not conclude this work without acknowledging all the support, time, and understanding of all the people who have been around me during this phase and during my journey of life. I am sure that without them everything would be much more difficult, and the success would be harder to achieve. First of all, I want to thank my supervisor Professor Victor Fonte for being so helpful and supportive. His guidance certainly improved my work and my knowledge as well. I also want to thank Engenheiro Renato Portela from MULTICERT for enlightening me when I was more doubtful. A special thanks to MULTICERT for letting me enrol in this project: it made me grow professionally and enhanced my knowledge. I also want to thank the Firebreath community for clarifying all the doubts I had. Congratulations for your great work as well. In this context, there is one person to whom I could not be more grateful: Pedro, thank you for your help and patience, even when I had lots of questions. I am also grateful for the discussions I had with Pedro and Ulisses: they gave me lots of ideas of how I could improve my work.

Benefits and Pitfalls of Using HTML5 APIs for Online Experiments and Simulations

SPECIAL FOCUS PAPER. Benefits and Pitfalls of Using HTML5 APIs for Online Experiments and Simulations. http://dx.doi.org/10.3991/ijoe.v8iS3.22541 P. Garaizar, M.A. Vadillo and D. López-de-Ipiña, University of Deusto, Bilbao, Spain. Abstract: The most recent advances in the architecture of the Web allow using it as an excellent platform to deliver experiments and simulations over the Internet. However, there are still some challenges related to the animations’ accuracy, to user input collection or to real-time communications that have to be accomplished to properly port native application-based experiments and simulations to the Web. The limitations of the standards preceding HTML5 have forced web developers to embed non-HTML objects using a wide range of non-standard plugins and causing an extremely fragmented execution environment where features must be implemented several times in different programming languages to guarantee full compliance with every user-agent. As HTML5 provides a [...] benefits and pitfalls of using them regarding online experimentation and simulations. II. ANIMATIONS There are several technologies and APIs related to the HTML5 standard that can be used to generate animations. Some of them are part of the HTML5 specification (e.g., Canvas 2D API [5]), and some others are defined in different specifications (e.g., WebGL [6]). Although this distinction is clear, many web browser vendors (i.e., Microsoft, Apple, Google, Mozilla, Opera) usually refer to all of them as HTML5-related technologies, and so we do in the rest of this paper.

SMIL and SVG in Teaching

SMIL and SVG in teaching. Horst Eidenberger, Vienna University of Technology, Institute of Software Technology and Interactive Systems, Favoritenstrasse 9-11, 1040 Vienna, Austria. ABSTRACT This paper describes how the web standards Synchronized Multimedia Integration Language (SMIL) and Scalable Vector Graphics (SVG) are used in teaching at the Vienna University of Technology. SMIL and SVG are used in courses on multimedia authoring. Didactically, the goal is to teach students how to use media objects and timing concepts to build interactive media applications. Additionally, SMIL is applied to generate multimedia content from a database using a content management system. The paper gives background information on the SMIL and SVG standards and sketches how teaching multimedia is organised at the Vienna University of Technology. Courses from the summer term 2003 are described and illustrated in two case studies. General design problems of SMIL-based presentations are modelled as patterns. Additionally, suggestions for improvement in the standards are given and shortcomings of existing user agents are summarised. Our conclusion is that SMIL and SVG are very well suited for teaching multimedia. Currently, the main problem is that all existing SMIL players lack some properties desired for teaching applications (stability, correctness, etc.). Keywords: Multimedia authoring, Synchronized Multimedia Integration Language, SMIL, Scalable Vector Graphics, SVG, content management, CMS, education, case study, personalisation, CC/PP 1. INTRODUCTION In recent years universities all over the world, and especially in the German-speaking countries, that offer computer engineering studies have established courses on multimedia and temporal media processing. So has the Vienna University of Technology: since the year 2000 it has offered a very popular media informatics curriculum (about 350 beginners per year).

The Hypertext Transfer Protocol

Introduction to JavaScript – Part III. Hamid Zarrabi-Zadeh, Web Programming – Fall 2013. Outline • Document Object Model (DOM) • The Browser Object Model • Event Handling • Exception Handling. Document Object Model • The Document Object Model (DOM) defines a standard for accessing documents through an object model • DOM is platform and language independent • It allows programs and scripts to dynamically access and update the content, structure, and style of a document. The W3C DOM • The W3C DOM standard is separated into three different parts: Core DOM (standard model for all document types), XML DOM (standard model for XML documents), HTML DOM (standard model for HTML documents). The HTML DOM • The HTML DOM defines: the HTML elements as objects; the properties of all HTML elements; the methods to access all HTML elements; the events for all HTML elements. The HTML DOM Tree • The HTML DOM represents an HTML document as a tree of objects. Finding Elements • Using get methods: getElementById, getElementsByName, getElementsByTagName, getElementsByClassName • Using document object collections: anchors, forms, images, links. var x = document.getElementById('main'); var y = x.getElementsByTagName('p'); var form = document.forms[0]; Getting Information • For each element, information can be obtained from: nodeValue (the text of a text node), nodeName (the tag name), nodeType (the type of node, a number), innerHTML (the inner HTML content). var x = document.getElementById('main'); alert(x.nodeName); Updating Elements • Change HTML content

A Web-Based Collaborative Multimedia Presentation Document System

ABSTRACT With the distributed and rapidly increasing volume of data and expeditious development of modern web browsers, web browsers have become a possible legitimate vehicle for remote interactive multimedia presentation and collaboration, especially for geographically dispersed teams. To our knowledge, although there are a large number of applications developed for these purposes, there are some drawbacks in prior work including the lack of interactive controls of presentation flows, general-purpose collaboration support on multimedia, and efficient and precise replay of presentations. To fill the research gaps in prior work, in this dissertation, we propose a web-based multimedia collaborative presentation document system, which models a presentation as media resources together with a stream of media events, attached to associated media objects. It represents presentation flows and collaboration actions in events, implements temporal and spatial scheduling on multimedia objects, and supports real-time interactive control of the predefined schedules. As all events are represented by simple messages with an object-prioritized approach, our platform can also support fine-grained precise replay of presentations. Hundreds of kilobytes could be enough to store the events in a collaborative presentation session for accurate replays, compared with hundreds of megabytes in screen recording tools with a pixel-based replay mechanism. A WEB-BASED COLLABORATIVE MULTIMEDIA PRESENTATION DOCUMENT SYSTEM by Chunxu Tang, B.S., Xiamen University, 2013; M.S., Syracuse University, 2015. Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Electrical and Computer Engineering. Syracuse University, June 2019. Copyright © Chunxu Tang 2019. All Rights Reserved. To my parents.
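The idea of modelling a presentation as a stream of small event messages and replaying it can be sketched as follows. This is an illustration under stated assumptions, not the dissertation's system: the event shapes (`show`, `move`, `hide`), the `objectId` and `time` fields, and the `applyEvent` and `replay` functions are all hypothetical.

```javascript
// Event-log replay sketch: presentation state is rebuilt by folding over events.
// All event shapes and field names here are hypothetical.
function applyEvent(state, event) {
  switch (event.type) {
    case 'show':
      return { ...state, [event.objectId]: { visible: true, x: 0, y: 0 } };
    case 'move':
      return { ...state, [event.objectId]: { ...state[event.objectId], x: event.x, y: event.y } };
    case 'hide':
      return { ...state, [event.objectId]: { ...state[event.objectId], visible: false } };
    default:
      return state; // Unknown events leave the state unchanged.
  }
}

// Rebuild the state as of a given time by applying events in timestamp order.
function replay(events, untilTime = Infinity) {
  return events
    .filter((e) => e.time <= untilTime)
    .sort((a, b) => a.time - b.time)
    .reduce((state, e) => applyEvent(state, e), {});
}

const events = [
  { time: 0, type: 'show', objectId: 'slide1' },
  { time: 5, type: 'move', objectId: 'slide1', x: 10, y: 20 },
  { time: 9, type: 'hide', objectId: 'slide1' },
];

const midway = replay(events, 5);
const final = replay(events);
console.log(midway, final);
```

Because each event is a short message rather than a frame of pixels, the log stays small, and seeking to any point in time only requires replaying the prefix of the log up to that timestamp.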

Document Object Model (DOM) Level 3 Load and Save Specification

Document Object Model (DOM) Level 3 Load and Save Specification, Version 1.0. W3C Recommendation 07 April 2004. This version: http://www.w3.org/TR/2004/REC-DOM-Level-3-LS-20040407 Latest version: http://www.w3.org/TR/DOM-Level-3-LS Previous version: http://www.w3.org/TR/2004/PR-DOM-Level-3-LS-20040205/ Editors: Johnny Stenback, Netscape; Andy Heninger, IBM (until March 2001). Please refer to the errata for this document, which may include some normative corrections. This document is also available in these non-normative formats: XML file, plain text, PostScript file, PDF file, single HTML file, and ZIP file. See also translations of this document. Copyright ©2004 W3C ® (MIT, ERCIM, Keio), All Rights Reserved. W3C liability, trademark, document use and software licensing rules apply. Abstract: This specification defines the Document Object Model Load and Save Level 3, a platform- and language-neutral interface that allows programs and scripts to dynamically load the content of an XML document into a DOM document and serialize a DOM document into an XML document; DOM documents being defined in [DOM Level 2 Core] or newer, and XML documents being defined in [XML 1.0] or newer. It also allows filtering of content at load time and at serialization time. Status of this document: This section describes the status of this document at the time of its publication. Other documents may supersede this document. A list of current W3C publications and the latest revision of this technical report can be found in the W3C technical reports index at http://www.w3.org/TR/.