Functional Analysis


Recommended publications


Sobolev Spaces, Theory and Applications

Sobolev spaces, theory and applications. Piotr Hajłasz. Introduction. These are the notes that I prepared for the participants of the Summer School in Mathematics in Jyväskylä, August 1998. I thank Pekka Koskela for his kind invitation. This is the second summer course that I have delivered in Finland. Last August I delivered a similar course, entitled Sobolev spaces and calculus of variations, in Helsinki. The subject was similar, so it was not possible to avoid overlap. However, the overlap is small; I estimate it at 25%. While preparing the notes I partially used the notes that I prepared for the previous course. Moreover, Lectures 9 and 10 are based on the text of my joint work with Pekka Koskela [33]. The notes probably will not cover all the material presented during the course, and at the same time not all the material written here will be presented during the School. This is, however, not so bad: if some of the results presented in the lectures go beyond the notes, then there will be some reason to listen to the course, and if some of the results are explained in more detail in the notes, then it might be worth looking at them. The notes were prepared in a hurry, so there are many bugs and they are not complete. Some of the sections and theorems are unfinished. At the end of the notes I have enclosed some references together with comments. This section was also prepared in a hurry, so probably many of the authors who contributed to the subject are not mentioned.

On Quasi Norm Attaining Operators Between Banach Spaces

ON QUASI NORM ATTAINING OPERATORS BETWEEN BANACH SPACES. GEUNSU CHOI, YUN SUNG CHOI, MINGU JUNG, AND MIGUEL MARTÍN. Abstract. We provide a characterization of the Radon-Nikodým property in terms of the denseness of bounded linear operators which attain their norm in a weak sense, which complements the one given by Bourgain and Huff in the 1970's. To this end, we introduce the following notion: an operator $T \colon X \longrightarrow Y$ between the Banach spaces $X$ and $Y$ is quasi norm attaining if there is a sequence $(x_n)$ of norm one elements in $X$ such that $(Tx_n)$ converges to some $u \in Y$ with $\|u\| = \|T\|$. Norm attaining operators in the usual (or strong) sense (i.e. operators for which there is a point in the unit ball where the norm of its image equals the norm of the operator) and also compact operators satisfy this definition. We prove that strong Radon-Nikodým operators can be approximated by quasi norm attaining operators, a result which does not hold for norm attaining operators in the strong sense. This shows that this new notion of quasi norm attainment allows one to characterize the Radon-Nikodým property in terms of denseness of quasi norm attaining operators for both domain and range spaces, thus completing a characterization by Bourgain and Huff in terms of norm attaining operators which is only valid for domain spaces and is actually false for range spaces (due to a celebrated example by Gowers of 1990). A number of other related results are also included in the paper: we give some positive results on the denseness of norm attaining Lipschitz maps, norm attaining multilinear maps and norm attaining polynomials, characterize both finite dimensionality and reflexivity in terms of quasi norm attaining operators, discuss conditions to obtain that quasi norm attaining operators are actually norm attaining, study the relationship with the norm attainment of the adjoint operator and, finally, present some stability results.
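
As a hedged illustration of the definition in the abstract above, the following sketch shows a bounded operator that is not quasi norm attaining. The example is not taken from the paper: the diagonal operator $T$ and the canonical basis $(e_n)$ of $\ell_2$ are assumptions chosen for simplicity.

```latex
% Illustrative example only; not taken from the paper.
Let $T \colon \ell_2 \to \ell_2$ be the diagonal operator
$T e_n = \bigl(1 - \tfrac{1}{n}\bigr) e_n$, so that
\[
  \|T\| = \sup_{n} \bigl(1 - \tfrac{1}{n}\bigr) = 1 .
\]
Suppose $\|x_k\| = 1$ and $T x_k \to u$ with $\|u\| = \|T\| = 1$.
Fix $N$ and put $c_N = 1 - \bigl(1 - \tfrac{1}{N}\bigr)^2 > 0$.
Since $1 - \bigl(1 - \tfrac{1}{n}\bigr)^2 \ge c_N$ for all $n \le N$,
\[
  \|T x_k\|^2
  = \sum_{n} \bigl(1 - \tfrac{1}{n}\bigr)^2 |x_k(n)|^2
  \le 1 - c_N \sum_{n \le N} |x_k(n)|^2 .
\]
Because $\|T x_k\| \to \|u\| = 1$, this forces
$\sum_{n \le N} |x_k(n)|^2 \to 0$ as $k \to \infty$ for every $N$.
Hence every coordinate of $x_k$, and so every coordinate of $T x_k$,
tends to $0$. Norm convergence implies coordinatewise convergence,
so $u = 0$, contradicting $\|u\| = 1$.
Thus $T$ is not quasi norm attaining (and, in particular, not compact).
```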

Compact Operators on Hilbert Spaces S

INTERNATIONAL JOURNAL OF MATHEMATICS AND SCIENTIFIC COMPUTING (ISSN: 2231-5330), VOL. 4, NO. 2, 2014, p. 101. Compact Operators on Hilbert Spaces. S. Nozari. Abstract: In this paper, we obtain some results on compact operators. In particular, we prove that if T is a unitary operator on a Hilbert space H, then it is compact if and only if H has finite dimension. As the main theorem we prove that if T is a hypercyclic operator on a Hilbert space, then $T^n$ ($n \in \mathbb{N}$) is noncompact. Index Terms: Compact operator, Linear Projections, Heine-Borel Property. MSC 2010 Codes: 46B50. I. INTRODUCTION. Surely, operator theory is the heart of functional analysis. This means that if one wishes to work on functional analysis, he/she must study operator theory. In operator theory, we study operators and connection between it with other. Proof: Let T be invertible. It is well known and easy to show that the composition of two operators, at least one of which is compact, is also compact ([4], Th. 11.5). Therefore $TT^{-1} = I$ is compact, which contradicts Lemma I.3. Corollary II.2. If T is an invertible operator on an infinite-dimensional Hilbert space, then it is not compact. Corollary II.3. Let T be a bounded operator with finite rank on an infinite-dimensional Hilbert space H. Then T is not invertible. Proof: By Theorem I.4, T is compact. Now the proof is completed by Theorem II.1. Corollary II.4. Let P be a linear projection on H with finite rank.

Fundamentals of Functional Analysis Kluwer Texts in the Mathematical Sciences

Fundamentals of Functional Analysis. Kluwer Texts in the Mathematical Sciences, Volume 12. A Graduate-Level Book Series. The titles published in this series are listed at the end of this volume. Fundamentals of Functional Analysis, by S. S. Kutateladze, Sobolev Institute of Mathematics, Siberian Branch of the Russian Academy of Sciences, Novosibirsk, Russia. Springer-Science+Business Media, B.V. A C.I.P. Catalogue record for this book is available from the Library of Congress. ISBN 978-90-481-4661-1, ISBN 978-94-015-8755-6 (eBook), DOI 10.1007/978-94-015-8755-6. Translated from Основы функционального анализа, Изд. 2, дополненное, Sobolev Institute of Mathematics, Novosibirsk, © 1995 S. S. Kutateladze. Printed on acid-free paper. All Rights Reserved. © 1996 Springer Science+Business Media Dordrecht. Originally published by Kluwer Academic Publishers in 1996. Softcover reprint of the hardcover 1st edition 1996. No part of the material protected by this copyright notice may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying, recording or by any information storage and retrieval system, without written permission from the copyright owner. Contents: Preface to the English Translation; Preface to the First Russian Edition; Preface to the Second Russian Edition. Chapter 1. An Excursion into Set Theory: 1.1. Correspondences; 1.2. Ordered Sets; 1.3. Filters; Exercises. Chapter 2. Vector Spaces: 2.1. Spaces and Subspaces; 2.2. Linear Operators; 2.3. Equations in Operators; Exercises. Chapter 3. Convex Analysis: 3.1.

Quantitative Hahn-Banach Theorems and Isometric Extensions for Wavelet and Other Banach Spaces

Axioms 2013, 2, 224-270; doi:10.3390/axioms2020224. Open Access. Axioms, ISSN 2075-1680, www.mdpi.com/journal/axioms. Article: Quantitative Hahn-Banach Theorems and Isometric Extensions for Wavelet and Other Banach Spaces. Sergey Ajiev, School of Mathematical Sciences, Faculty of Science, University of Technology-Sydney, P.O. Box 123, Broadway, NSW 2007, Australia. Received: 4 March 2013; in revised form: 12 May 2013 / Accepted: 14 May 2013 / Published: 23 May 2013. Abstract: We introduce and study Clarkson, Dol'nikov-Pichugov, Jacobi and mutual diameter constants reflecting the geometry of a Banach space and Clarkson, Jacobi and Pichugov classes of Banach spaces and their relations with James, self-Jung, Kottman and Schäffer constants in order to establish quantitative versions of the Hahn-Banach separability theorem and to characterise the isometric extendability of Hölder-Lipschitz mappings. Abstract results are further applied to the spaces and pairs from the wide classes IG and IG+ and non-commutative $L_p$-spaces. The intimate relation between the subspaces and quotients of the IG-spaces on one side and various types of anisotropic Besov, Lizorkin-Triebel and Sobolev spaces of functions on open subsets of a Euclidean space defined in terms of differences, local polynomial approximations, wavelet decompositions and other means (as well as the duals and the $l_p$-sums of all these spaces) on the other side, allows us to present the algorithm of extending the main results of the article to the latter spaces and pairs. Special attention is paid to the matter of sharpness. Our approach is quasi-Euclidean in its nature because it relies on the extrapolation of properties of Hilbert spaces and the study of 1-complemented subspaces of the spaces under consideration.

Sequential Completeness of Inductive Limits

Internat. J. Math. & Math. Sci. Vol. 24, No. 6 (2000) 419–421, S0161171200003744, © Hindawi Publishing Corp. SEQUENTIAL COMPLETENESS OF INDUCTIVE LIMITS. CLAUDIA GÓMEZ and JAN KUČERA (Received 28 July 1999). Abstract. A regular inductive limit of sequentially complete spaces is sequentially complete. For the converse of this theorem we have a weaker result: if $\operatorname{ind} E_n$ is a sequentially complete inductive limit, and each constituent space $E_n$ is closed in $\operatorname{ind} E_n$, then $\operatorname{ind} E_n$ is α-regular. Keywords and phrases. Regularity, α-regularity, sequential completeness of locally convex inductive limits. 2000 Mathematics Subject Classification. Primary 46A13; Secondary 46A30. 1. Introduction. In [2] Kučera proves that an LF-space is regular if and only if it is sequentially complete. In [1] Bosch and Kučera prove the equivalence of regularity and sequential completeness for bornivorously webbed spaces. It is natural to ask: can this result be extended to arbitrary sequentially complete spaces? We give a partial answer to this question in this paper. 2. Definitions. Throughout the paper $\{(E_n, \tau_n)\}_{n \in \mathbb{N}}$ is an increasing sequence of Hausdorff locally convex spaces with continuous identity maps $\mathrm{id} \colon E_n \to E_{n+1}$ and $E = \operatorname{ind} E_n$ its inductive limit. A topological vector space $E$ is sequentially complete if every Cauchy sequence $\{x_n\} \subset E$ is convergent to an element $x \in E$. An inductive limit $\operatorname{ind} E_n$ is regular, resp. α-regular, if each of its bounded sets is bounded, resp. contained, in some constituent space $E_n$. 3. Main results. Theorem 3.1. Let each space $(E_n, \tau_n)$ be sequentially complete and $\operatorname{ind} E_n$ regular. Then $\operatorname{ind} E_n$ is sequentially complete. Proof. Let $\{x_k\}$ be a Cauchy sequence in $\operatorname{ind} E_n$.

View of This, the Following Observation Should Be of Some Interest to Vector Space Pathologists

Can. J. Math., Vol. XXV, No. 3, 1973, pp. 511-524. INCLUSION THEOREMS FOR K-SPACES. G. BENNETT AND N. J. KALTON. 1. Notation and preliminary ideas. A sequence space is a vector subspace of the space $\omega$ of all real (or complex) sequences. A sequence space $E$ with a locally convex topology $\tau$ is called a K-space if the inclusion map $E \to \omega$ is continuous, when $\omega$ is endowed with the product topology ($\omega = \prod_{i=1}^{\infty} (\mathbb{R})_i$). A K-space $E$ with a Fréchet (i.e., complete, metrizable and locally convex) topology is called an FK-space; if the topology is a Banach topology, then $E$ is called a BK-space. The following familiar BK-spaces will be important in the sequel: $m$, the space of all bounded sequences; $c$, the space of all convergent sequences; $c_0$, the space of all null sequences; $l^p$, $1 \le p < \infty$, the space of all absolutely $p$-summable sequences. We shall also consider the space $\varphi$ of all finite sequences and the linear span, $m_0$, of all sequences of zeros and ones; these provide examples of spaces having no FK-topology. A sequence space is solid (respectively monotone) provided that $xy \in E$ whenever $x \in m$ (respectively $m_0$) and $y \in E$. For $x \in \omega$, we denote by $P_n(x)$ the sequence $(x_1, x_2, \ldots, x_n, 0, \ldots)$; if a K-space $(E, \tau)$ has the property that $P_n(x) \to x$ in $\tau$ for each $x \in E$, then we say that $(E, \tau)$ is an AK-space. We also write $W_E = \{x \in E : P_n(x) \to x \text{ weakly}\}$ and $S_E = \{x \in E : P_n(x) \to x \text{ in } \tau\}$.

Contents Part I: Topological Vector Spaces 4 1. General Topological

Contents. Part I: Topological vector spaces. 1. General topological vector spaces: 1.1. Vector space topologies; 1.2. Neighbourhoods and filters; 1.3. Finite dimensional topological vector spaces. 2. Locally convex spaces: 2.1. Seminorms and semiballs; 2.2. Continuous linear mappings in locally convex spaces. 3. Generalizations of theorems known from normed spaces: 3.1. Separation theorems; Hahn and Banach theorem; Important consequences; Hahn-Banach separation theorem. 4. Metrizability and completeness (Fréchet spaces): 4.1. Baire's category theorem; 4.2. Complete topological vector spaces; 4.3. Metrizable locally convex spaces; 4.4. Fréchet spaces; 4.5. Corollaries of Baire. 5. Constructions of spaces from each other: 5.1. Introduction; 5.2. Subspaces; 5.3. Factor spaces; 5.4. Product spaces; 5.5. Direct sums; 5.6. The completion; 5.7. Projective limits; 5.8. Inductive locally convex limits; 5.9. Direct inductive limits. 6. Bounded sets: 6.1. Bounded sets; 6.2. Bounded linear mappings. 7. Duals, dual pairs and dual topologies: 7.1. Duals; 7.2. Dual pairs; 7.3. Weak topologies in dualities; 7.4. Polars; 7.5. Compatible topologies; 7.6. Polar topologies. Part II: Distributions. 1. The idea of distributions: 1.1. Schwartz test functions and distributions. 2. Schwartz's test function space: 2.1. The spaces $C^\infty(\Omega)$ and $\mathcal{D}_K$; 2.2.

Functional Analysis Lecture Notes Chapter 2. Operators on Hilbert Spaces

FUNCTIONAL ANALYSIS LECTURE NOTES. CHAPTER 2. OPERATORS ON HILBERT SPACES. CHRISTOPHER HEIL. 1. Elementary Properties and Examples. First recall the basic definitions regarding operators. Definition 1.1 (Continuous and Bounded Operators). Let $X$, $Y$ be normed linear spaces, and let $L \colon X \to Y$ be a linear operator. (a) $L$ is continuous at a point $f \in X$ if $f_n \to f$ in $X$ implies $Lf_n \to Lf$ in $Y$. (b) $L$ is continuous if it is continuous at every point, i.e., if $f_n \to f$ in $X$ implies $Lf_n \to Lf$ in $Y$ for every $f$. (c) $L$ is bounded if there exists a finite $K \ge 0$ such that $\forall f \in X$, $\|Lf\| \le K \|f\|$. Note that $\|Lf\|$ is the norm of $Lf$ in $Y$, while $\|f\|$ is the norm of $f$ in $X$. (d) The operator norm of $L$ is $\|L\| = \sup_{\|f\| = 1} \|Lf\|$. (e) We let $\mathcal{B}(X, Y)$ denote the set of all bounded linear operators mapping $X$ into $Y$, i.e., $\mathcal{B}(X, Y) = \{L \colon X \to Y : L \text{ is bounded and linear}\}$. If $X = Y$ then we write $\mathcal{B}(X) = \mathcal{B}(X, X)$. (f) If $Y = \mathbb{F}$ then we say that $L$ is a functional. The set of all bounded linear functionals on $X$ is the dual space of $X$, and is denoted $X' = \mathcal{B}(X, \mathbb{F}) = \{L \colon X \to \mathbb{F} : L \text{ is bounded and linear}\}$. We saw in Chapter 1 that, for a linear operator, boundedness and continuity are equivalent.
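
The operator norm in part (d) of the definition above can be made concrete in finite dimensions. The following is a minimal sketch, not taken from the lecture notes: it assumes $X = Y = \mathbb{R}^2$ with the Euclidean norm, a hypothetical 2×2 matrix `L`, and approximates the supremum over unit vectors by sampling the unit circle. The helper names `apply`, `norm`, and `operator_norm_2d` are illustrative only.

```python
import math


def apply(matrix, vec):
    """Return the matrix-vector product as a list."""
    return [sum(a * x for a, x in zip(row, vec)) for row in matrix]


def norm(vec):
    """Euclidean norm of a vector."""
    return math.sqrt(sum(x * x for x in vec))


def operator_norm_2d(matrix, samples=3600):
    """Approximate sup of ||L f|| over unit vectors f in R^2 by sampling."""
    best = 0.0
    for k in range(samples):
        t = 2 * math.pi * k / samples
        f = [math.cos(t), math.sin(t)]  # a unit vector on the circle
        best = max(best, norm(apply(matrix, f)))
    return best


# Hypothetical example operator: maps (x, y) to (3x, 4x).
L = [[3.0, 0.0], [4.0, 0.0]]
op_norm = operator_norm_2d(L)

# Boundedness from part (c) with K = ||L||, checked on one arbitrary vector g.
g = [2.0, -7.0]
bound_holds = norm(apply(L, g)) <= op_norm * norm(g) + 1e-12
```

For this particular matrix the supremum is attained at $f = (1, 0)$, where $\|Lf\| = \|(3, 4)\| = 5$. Sampling is used only to keep the sketch dependency-free; in practice the Euclidean operator norm of a matrix is its largest singular value.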

On Domain of Nörlund Matrix

Mathematics. Article: On Domain of Nörlund Matrix. Kuddusi Kayaduman (1) and Fevzi Yaşar (2). (1) Faculty of Arts and Sciences, Department of Mathematics, Gaziantep University, Gaziantep 27310, Turkey. (2) Şehitler Mah. Cambazlar Sok. No:9, Kilis 79000, Turkey. Received: 24 October 2018; Accepted: 16 November 2018; Published: 20 November 2018. Abstract: In 1978, the domain of the Nörlund matrix on the classical sequence spaces $\ell_p$ and $\ell_\infty$ was introduced by Wang, where $1 \le p < \infty$. Tuğ and Başar studied the matrix domain of the Nörlund mean on the sequence spaces $f_0$ and $f$ in 2016. Additionally, Tuğ defined and investigated a new sequence space as the domain of the Nörlund matrix on the space of bounded variation sequences in 2017. In this article, we defined the new spaces bs(Nt) and cs(Nt) and examined the domain of the Nörlund mean on bs and cs, which are the spaces of bounded and convergent series, respectively. We also examined their inclusion relations. We defined norms over them and investigated whether these new spaces satisfy the conditions of a Banach space. Finally, we determined their α-, β-, γ-duals, and characterized their matrix transformations on this space and into this space. Keywords: Nörlund mean; Nörlund transforms; difference matrix; α-, β-, γ-duals; matrix transformations. 1. Introduction. 1.1. Background. In studies on sequence spaces, creating a new sequence space and researching its properties have been important. Some researchers examined the algebraic properties of the sequence space while others investigated its place among other known spaces and its duals, and characterized the matrix transformations on this space.

Linear Operators and Adjoints

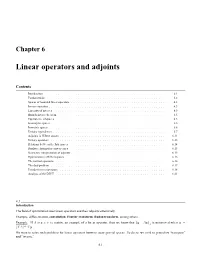

Chapter 6. Linear operators and adjoints. Contents: Introduction; Fundamentals; Spaces of bounded linear operators; Inverse operators; Linearity of inverses; Banach inverse theorem; Equivalence of spaces; Isomorphic spaces; Isometric spaces; Unitary equivalence; Adjoints in Hilbert spaces; Unitary operators; Relations between the four spaces; Duality relations for convex cones; Geometric interpretation of adjoints; Optimization in Hilbert spaces; The normal equations; The dual problem; Pseudo-inverse operators; Analysis of the DTFT. 6.1 Introduction. The field of optimization uses linear operators and their adjoints extensively. Example: differentiation, convolution, Fourier transform,

Introduction to Sobolev Spaces

Introduction to Sobolev Spaces. Lecture Notes MM692 2018-2. Joa Weber, UNICAMP. December 23, 2018. Contents. 1 Introduction: 1.1 Notation and conventions. 2 $L^p$-spaces: 2.1 Borel and Lebesgue measure space on $\mathbb{R}^n$; 2.2 Definition; 2.3 Basic properties. 3 Convolution: 3.1 Convolution of functions; 3.2 Convolution of equivalence classes; 3.3 Local Mollification; 3.3.1 Locally integrable functions; 3.3.2 Continuous functions; 3.4 Applications. 4 Sobolev spaces: 4.1 Weak derivatives of locally integrable functions; 4.1.1 The mother of all Sobolev spaces $L^1_{\mathrm{loc}}$; 4.1.2 Examples; 4.1.3 ACL characterization; 4.1.4 Weak and partial derivatives; 4.1.5 Approximation characterization; 4.1.6 Bounded weakly differentiable means Lipschitz; 4.1.7 Leibniz or product rule; 4.1.8 Chain rule and change of coordinates; 4.1.9 Equivalence classes of locally integrable functions; 4.2 Definition and basic properties; 4.2.1 The Sobolev spaces $W^{k,p}$; 4.2.2 Difference quotient characterization of $W^{1,p}$; 4.2.3 The compact support Sobolev spaces $W_0^{k,p}$; 4.2.4 The local Sobolev spaces $W^{k,p}_{\mathrm{loc}}$; 4.2.5 How the spaces relate; 4.2.6 Basic properties – products and coordinate change. 5 Approximation and extension: 5.1 Approximation; 5.1.1 Local approximation – any domain; 5.1.2 Global approximation on bounded domains