Reconstructions in Limited Angle X-Ray Tomography: Characterization of Classical Reconstructions and Adapted Curvelet Sparse Regularization



Recommended publications


Image Reconstruction and Imaging Configuration Optimization with A

Southern Illinois University Carbondale, OpenSIUC. Dissertations, Theses and Dissertations, 8-1-2012. Image reconstruction and imaging configuration optimization with a novel nanotechnology enabled breast tomosynthesis multi-beam X-ray system. Weihua Zhou, Southern Illinois University Carbondale, [email protected]. Follow this and additional works at: http://opensiuc.lib.siu.edu/dissertations. Recommended Citation: Zhou, Weihua, "Image reconstruction and imaging configuration optimization with a novel nanotechnology enabled breast tomosynthesis multi-beam X-ray system" (2012). Dissertations. Paper 540. This Open Access Dissertation is brought to you for free and open access by the Theses and Dissertations at OpenSIUC. It has been accepted for inclusion in Dissertations by an authorized administrator of OpenSIUC. For more information, please contact [email protected]. A Dissertation Submitted in Partial Fulfillment of the Requirements for the Doctor of Philosophy, Department of Electrical and Computer Engineering in the Graduate School, Southern Illinois University Carbondale, August 2012. DISSERTATION APPROVAL. Approved by: Ying Chen, Chair; Nazeih Botros; Gupta Lalit; Ramanarayanan Viswanathan; Mengxia Zhu. Graduate School, Southern Illinois University Carbondale, March 8th, 2012. AN ABSTRACT OF THE DISSERTATION OF Weihua Zhou, for the Doctor of Philosophy degree in ELECTRICAL AND COMPUTER ENGINEERING, presented on March 8th, 2012, at Southern Illinois University Carbondale.

Oblique Reconstructions in Tomosynthesis. I. Linear Systems Theory Raymond J

Oblique reconstructions in tomosynthesis. I. Linear systems theory. Raymond J. Acciavatti and Andrew D. A. Maidment, Department of Radiology, Perelman School of Medicine at the University of Pennsylvania, Philadelphia, Pennsylvania 19104-4206. Citation: Medical Physics 40, 111911 (2013); doi: 10.1118/1.4819941. Published by the American Association of Physicists in Medicine. (Received 18 May 2013; revised 4 August 2013; accepted for publication 12 August 2013; published 11 October 2013) Purpose: By convention, slices in a tomosynthesis reconstruction are created on planes parallel to the detector. It has not yet been demonstrated that slices can be generated along oblique directions through the same volume, analogous to multiplanar reconstructions in computed tomography (CT).

The Radon Transform: First Steps F

The Radon Transform: First Steps. N.M. Temme. In this note we discuss some aspects of the Radon transform mentioned in the review of Helgason's book in this Newsletter. In short, the Radon transform of a function f(x,y) of two variables is the set of line integrals, with obvious generalizations to higher dimensions. It plays a fundamental role in a large class of applications which fall under the heading of tomography. In a narrow sense, tomography is the problem of reconstructing the interior of an object by passing radiation through it and recording the resulting intensity over a range of directions. It is the problem of finding f from the above mentioned line integrals and is related to the inversion of the Radon transform. Before discussing a simple example of how to compute the Radon transform, we will tell more about the background of the applications. In mathematical physics there is a notorious class of difficult problems: the ill-posed problems. The notion of a well-posed problem is due to Hadamard: a solution must exist, be unique, and depend continuously on the data. In ill-posed problems the last condition may be violated, and then important difficulties may arise, especially when the data are not complete or not accurate. Tomography falls in this class of ill-posed problems. Probably the most widely known applications of tomography are in medicine. Computer assisted tomography (CAT-scan) uses X-rays directed from a range of directions to reconstruct the density in a thin slice of the body (the Greek word τόμος means section).
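The "set of line integrals" in this excerpt can be made concrete with a small numerical sketch. It is not the example from Temme's note, which is not reproduced here. It assumes the common parametrization of a line by an angle `theta` and a signed distance `s` from the origin, and the function names `line_integral` and `gaussian` are hypothetical. For the Gaussian exp(-(x² + y²)) the line integral is known in closed form, sqrt(π)·exp(-s²), which gives something to compare against.

```python
import math


def line_integral(f, theta, s, half_length=6.0, n=4000):
    """Approximate the integral of f along the line
    x*cos(theta) + y*sin(theta) = s using the trapezoidal rule.

    The line is parametrized by t as
        (x, y) = (s*cos(theta) - t*sin(theta), s*sin(theta) + t*cos(theta)),
    and t is truncated to [-half_length, half_length] (an assumption that
    is only reasonable when f decays quickly).
    """
    dt = 2.0 * half_length / n
    c, sn = math.cos(theta), math.sin(theta)
    total = 0.0
    for k in range(n + 1):
        t = -half_length + k * dt
        x = s * c - t * sn
        y = s * sn + t * c
        weight = 0.5 if k in (0, n) else 1.0  # trapezoid end-point weights
        total += weight * f(x, y)
    return total * dt


def gaussian(x, y):
    """Rotationally symmetric test function exp(-(x^2 + y^2))."""
    return math.exp(-(x * x + y * y))


if __name__ == "__main__":
    s = 0.5
    numeric = line_integral(gaussian, theta=0.7, s=s)
    exact = math.sqrt(math.pi) * math.exp(-s * s)
    print(numeric, exact)
```

Because the test function is rotationally symmetric, the result should not depend on `theta`. A real tomography code would evaluate such integrals for many `(theta, s)` pairs to build a sinogram.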


arXiv:1712.06453v2 [math.SG]

RADON TRANSFORM FOR SHEAVES HONGHAO GAO Abstract. We define the Radon transform functor for sheaves and prove that it is an equivalence after suitable microlocal localizations. As a result, the sheaf category associated to a Legendrian is invariant under the Radon transform. We also manage to place the Radon transform and other transforms in microlocal sheaf theory altogether in a diagram. 1. Introduction The goal of the paper is to define the Radon transform for microlocal sheaf categories and study its properties. The term “microlocal” refers to the consideration of the cotangent bundle when we study sheaves over a smooth manifold. This method was introduced in [KS1] and has been systematically developed ever since [KS2]. The geometric nature of cotangent bundles makes microlocal sheaf theory a handy tool for problems in symplectic and contact geometry, such as those related to Lagrangian and Legendrian invariants [GKS, Ta], the Fukaya category [Na, NZ], Legendrian knots [STZ, NRSSZ], and more. We define a sheaf version of the classical Radon transform (Definition 3.1). The classical Radon transform is an integral functional which takes a rapidly decreasing function on Euclidean space to a function on the space of hyperplanes in the Euclidean space. These analytic concepts have sheaf counterparts, where the sectional integration is captured by taking compactly supported sections. The Radon transform functor is expected to be an equivalence of categories, which corresponds to the reconstruction property of the classical Radon functional. In other words, the Radon transform should admit an inverse. In the first main theorem, we prove such an equivalence holds after some microlocal localizations. -

Radon Transform on Spaces of Constant Curvature 1

PROCEEDINGS OF THE AMERICAN MATHEMATICAL SOCIETY, Volume 125, Number 2, February 1997, Pages 455–461, S 0002-9939(97)03570-3. RADON TRANSFORM ON SPACES OF CONSTANT CURVATURE. CARLOS A. BERENSTEIN, ENRICO CASADIO TARABUSI, AND ÁRPÁD KURUSA (Communicated by Peter Li). Abstract. A correspondence among the totally geodesic Radon transforms—as well as among their duals—on the constant curvature spaces is established, and is used here to obtain various range characterizations. 1. Introduction. The totally geodesic Radon transform on spaces of constant curvature has been widely studied (see [Hg1], [Hg2], [BC1], [Ku1], to quote only a few). Yet many of the known results, in spite of their similarities, were obtained on each such space independently. The idea of relating these transforms by projecting each space to the Euclidean one appeared independently in [BC2] (for negative curvature) and [Ku4] (for curvature of any sign), and was used to obtain, respectively, range characterizations of the Radon transform on the hyperbolic space and support theorems on all constant curvature spaces. In this paper we extend and exploit further this correspondence, establishing an explicit formula relating the dual Radon transforms on the different constant curvature spaces, and proving various range characterizations for the Radon transform and its dual on the Euclidean and elliptic space. The third author wishes to thank the Soros Foundation and MIT for supporting his visit at MIT, during which this paper was conceived. 2. Preliminaries. Let $M_\kappa^n$ be an $n$-dimensional simply connected complete Riemannian manifold of constant curvature $\kappa$. Normalizing the metric so that $\kappa$ equals $-1$, $0$, or $+1$, we get, respectively, the hyperbolic space $H^n$, the Euclidean space $R^n$, and the sphere $S^n$—or its two-to-one quotient, the projective space $P^n$.

The Radon Transform on Euclidean Spaces, Compact Two-Point Homogeneous Spaces and Grassmann Manifolds

THE RADON TRANSFORM ON EUCLIDEAN SPACES, COMPACT TWO-POINT HOMOGENEOUS SPACES AND GRASSMANN MANIFOLDS BY SIGURDUR HELGASON, The Institute for Advanced Study, Princeton, N. J., U.S.A.(1) §1. Introduction. As proved by Radon [16] and John [13], a differentiable function $f$ of compact support on a Euclidean space $R^n$ can be determined explicitly by means of its integrals over the hyperplanes in the space. Let $J(\omega, p)$ denote the integral of $f$ over the hyperplane $\langle x, \omega\rangle = p$ where $\omega$ is a unit vector and $\langle\,,\rangle$ the inner product in $R^n$. If $\Delta$ denotes the Laplacian on $R^n$, $d\omega$ the area element on the unit sphere $S^{n-1}$ then (John [14], p. 13)

$$f(x) = \tfrac{1}{2}(2\pi i)^{1-n}\,(\Delta_x)^{\frac{1}{2}(n-1)} \int_{S^{n-1}} J(\omega, \langle \omega, x\rangle)\, d\omega, \quad (n \text{ odd}); \tag{1}$$

$$f(x) = (2\pi i)^{-n}\,(\Delta_x)^{\frac{1}{2}(n-2)} \int_{S^{n-1}} d\omega \int_{-\infty}^{\infty} \frac{dJ(\omega, p)}{p - \langle \omega, x\rangle}, \quad (n \text{ even}), \tag{2}$$

where, in the last formula, the Cauchy principal value is taken. Considering now the simpler formula (1) we observe that it contains two dual integrations: the first over the set of points in a given hyperplane, the second over the set of hyperplanes passing through a given point. Generalizing this situation we consider the following setup: (i) Let $X$ be a manifold and $G$ a transitive Lie transformation group of $X$. Let $\Xi$ be a family of subsets of $X$ permuted transitively by the action of $G$ on $X$, whence $\Xi$ acquires a $G$-invariant differentiable structure. Here $\Xi$ will be called the dual space of $X$. (ii) Given $x \in X$, let $\check{x}$ denote the set of $\xi \in \Xi$ passing through $x$.

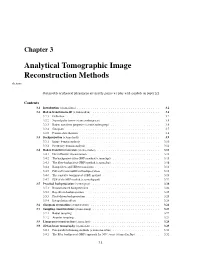

Analytical Tomographic Image Reconstruction Methods Ch,Tomo

Chapter 3. Analytical Tomographic Image Reconstruction Methods. "Our models of physical phenomena are merely games we play with symbols on paper" [1]. Contents:
3.1 Introduction
3.2 Radon transform in 2D: 3.2.1 Definition; 3.2.2 Signed polar forms; 3.2.3 Radon transform properties; 3.2.4 Sinogram; 3.2.5 Fourier-slice theorem
3.3 Backprojection: 3.3.1 Image-domain analysis; 3.3.2 Frequency-domain analysis
3.4 Radon transform inversion: 3.4.1 Direct Fourier reconstruction; 3.4.2 The backproject-filter (BPF) method; 3.4.3 The filter-backproject (FBP) method; 3.4.4 Ramp filters and Hilbert transforms; 3.4.5 Filtered versus unfiltered backprojection; 3.4.6 The convolve-backproject (CBP) method; 3.4.7 PSF of the FBP method
3.5 Practical backprojection: 3.5.1 Rotation-based backprojection; 3.5.2 Ray-driven backprojection; 3.5.3 Pixel-driven backprojection; 3.5.4 Interpolation effects
3.6 Sinogram restoration
3.7 Sampling considerations: 3.7.1 Radial sampling; 3.7.2 Angular sampling
3.8 Linogram reconstruction

Restricted Radon Transforms and Unions of Hyperplanes

RESTRICTED RADON TRANSFORMS AND UNIONS OF HYPERPLANES. Daniel M. Oberlin, Department of Mathematics, Florida State University, October 2004. §1 Introduction. If $\Sigma^{(n-1)}$ is the unit sphere in $\mathbb{R}^n$, the Radon transform $Rf$ of a suitable function $f$ on $\mathbb{R}^n$ is defined by

$$Rf(\sigma, t) = \int_{\sigma^\perp} f(p + t\sigma)\, dm_{n-1}(p), \qquad \sigma \in \Sigma^{(n-1)},\ t \in \mathbb{R},$$

where the integral is with respect to $(n-1)$-dimensional Lebesgue measure on the hyperplane $\sigma^\perp$. We also define, for $0 < \delta < 1$,

$$R_\delta f(\sigma, t) = \delta^{-1} \int_{[\sigma^\perp \cap B(0,1)] + B(0,\delta)} f(x + t\sigma)\, dm_n(x).$$

The paper [5] is concerned with the mapping properties of $R$ from $L^p(\mathbb{R}^n)$ into mixed norm spaces defined by the norms

$$\left( \int_{\Sigma^{(n-1)}} \left[ \int_{-\infty}^{\infty} |g(\sigma, t)|^r\, dt \right]^{q/r} d\sigma \right)^{1/q}.$$

Here $d\sigma$ denotes Lebesgue measure on $\Sigma^{(n-1)}$. The purpose of this paper is mainly to study the possibility of analogous mixed norm estimates when $d\sigma$ is replaced by measures $d\mu(\sigma)$ supported on compact subsets $S \subseteq \Sigma^{(n-1)}$ having dimension $< n-1$. We are usually interested in the case $r = \infty$ and will mostly settle for estimates of restricted weak type in the indices $p$ and $q$ and those only for $f$ supported in a ball. The following theorem, which we regard as an estimate for a restricted Radon transform, is typical of our results here: Theorem 1. Fix $\alpha \in (1, n-1)$. Suppose $\mu$ is a nonnegative and finite Borel measure on $\Sigma^{(n-1)}$ satisfying the Frostman condition

$$\int_{\Sigma^{(n-1)}} \int_{\Sigma^{(n-1)}} \frac{d\mu(\sigma_1)\, d\mu(\sigma_2)}{|\sigma_1 - \sigma_2|^{\alpha}} < \infty.$$

GCC and FBCC for Linear Tomosynthesis Jérôme Lesaint, Simon Rit, Rolf Clackdoyle, Laurent Desbat

GCC and FBCC for linear tomosynthesis. Jérôme Lesaint, Simon Rit, Rolf Clackdoyle, Laurent Desbat. Abstract—Grangeat-based Consistency Conditions (GCC) and Fan-beam Consistency Conditions (FBCC) are two ways to describe consistency (or redundancy) between cone-beam projections. Here we consider cone-beam projections that are collected in the linear tomosynthesis geometry. We propose a theoretical comparison of these two sets of consistency conditions and illustrate the comparison with numerical simulations of a thorax Forbild phantom. Index Terms—Cone-beam computed tomography (CBCT), data consistency conditions (DCCs), tomosynthesis. I. INTRODUCTION. In Computed Tomography (CT), the 3D density map of a patient (or an object) is reconstructed from a set of 2D radiographs. [...] correction in x-ray CT [9]. For FBCC, a circular cone-beam micro CT application appeared recently [10]. In this work, we focus on GCC and FBCC and propose a theoretical comparison of these two sets of DCC. We carry this work in the specific context of tomosynthesis, with an X-ray source moving along a line. It is proved that the FBCC are stronger than the GCC, in the sense that if the FBCC are satisfied, then so are the GCC, but not conversely. We also show that if all the projections are complete (non-truncated) then the FBCC and GCC are equivalent. Furthermore the hypothesis of complete projections is essential; we show that under particular circumstances (with truncated projections), the FBCC are more restrictive, i.e., the FBCC can fail even when the GCC are satisfied. We finally prove that neither of these two sets of


arXiv:1705.03609v4 [math.NA] 6 Dec 2018

DIMENSIONAL SPLITTING OF HYPERBOLIC PARTIAL DIFFERENTIAL EQUATIONS USING THE RADON TRANSFORM. DONSUB RIM. Abstract. We introduce a dimensional splitting method based on the intertwining property of the Radon transform, with a particular focus on its applications related to hyperbolic partial differential equations (PDEs). This dimensional splitting has remarkable properties that make it useful in a variety of contexts, including multi-dimensional extension of large time-step (LTS) methods, absorbing boundary conditions, displacement interpolation, and multi-dimensional generalization of transport reversal [34]. 1. Introduction. Dimensional splitting provides the simplest approach to obtaining a multi-dimensional method from a one-dimensional method [28, 37, 14, 25]. Although extremely powerful, existing splitting methods do not preserve a special feature that is easily obtained for 1D methods. For 1D hyperbolic partial differential equations (PDEs) of the type (1.1) $q_t + A q_x = 0$, where $A$ is a constant diagonalizable matrix with real and distinct eigenvalues, one can devise large time-step (LTS) methods that allow the solution to be solved up to any time without incurring excessive numerical diffusion [21, 23, 22]. Previous splitting methods do not lead to such LTS methods in multi-dimensions. In this paper, we introduce a dimensional splitting method that allows multi-dimensional linear constant coefficient hyperbolic problems to be solved up to desired time. The method relies on the intertwining property of Radon transforms [18, 29], thereby transforming a multi-dimensional problem into a family of one-dimensional ones. Simply by applying a 1D LTS method on each of these one-dimensional problems, one obtains a multi-dimensional LTS method.
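The intertwining property this excerpt relies on can be written out for the two-dimensional case. This is a sketch under assumed notation, not a quotation from the paper: the symbols $\mathcal{R}$, $\omega = (\omega_1, \omega_2)$, $s$, $B$ and $\hat q$ are introduced here for illustration only, and the system $q_t + A q_x + B q_y = 0$ is an assumed two-dimensional analogue of (1.1).

```latex
% Radon transform of f along the line x . omega = s (omega a unit vector)
\mathcal{R}f(\omega, s) = \int_{x \cdot \omega = s} f(x)\, dm(x)

% Intertwining property: a derivative in x_i becomes a derivative in s
\mathcal{R}\!\left[ \frac{\partial f}{\partial x_i} \right](\omega, s)
  = \omega_i \, \frac{\partial}{\partial s}\, \mathcal{R}f(\omega, s)

% Applying R to q_t + A q_x + B q_y = 0, with \hat q = \mathcal{R} q:
\hat q_t + (\omega_1 A + \omega_2 B)\, \hat q_s = 0
```

For each fixed direction $\omega$ the last equation is one-dimensional in $s$ and has the form of (1.1), provided $\omega_1 A + \omega_2 B$ is diagonalizable with real eigenvalues. That is the sense in which a multi-dimensional problem becomes a family of one-dimensional ones.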

Radon Transform Second Edition Contents

Sigurdur Helgason. Radon Transform, Second Edition. Contents:
Preface to the Second Edition
Preface to the First Edition
CHAPTER I. The Radon Transform on $R^n$: §1 Introduction; §2 The Radon Transform. The Support Theorem; §3 The Inversion Formula; §4 The Plancherel Formula; §5 Radon Transform of Distributions; §6 Integration over d-planes. X-ray Transforms; §7 Applications (A. Partial differential equations; B. X-ray Reconstruction); Bibliographical Notes
CHAPTER II. A Duality in Integral Geometry: §1 Homogeneous Spaces in Duality; §2 The Radon Transform for the Double Fibration; §3 Orbital Integrals; §4 Examples of Radon Transforms for Homogeneous Spaces in Duality (A. The Funk Transform; B. The X-ray Transform in $H^2$; C. The Horocycles in $H^2$; D. The Poisson Integral as a Radon Transform; E. The d-plane Transform; F. Grassmann Manifolds; G. Half-lines in a Half-plane; H. Theta Series and Cusp Forms); Bibliographical Notes
CHAPTER III. The Radon Transform on Two-point Homogeneous Spaces: §1 Spaces of Constant Curvature. Inversion and Support Theorems (A. The Hyperbolic Space; B. The Spheres and the Elliptic Spaces; C. The Spherical Slice Transform); §2 Compact Two-point Homogeneous Spaces. Applications; §3 Noncompact Two-point Homogeneous Spaces; §4 The X-ray Transform on a Symmetric Space; §5 Maximal Tori and Minimal Spheres in Compact Symmetric Spaces; Bibliographical Notes
CHAPTER IV. Orbital Integrals: §1 Isotropic Spaces

The Radon Transform Joshua Benjamin

THE RADON TRANSFORM. JOSHUA BENJAMIN III, CLASS OF 2020, [email protected]. Acknowledgments: I would like to thank Fabian Haiden for allowing me to create an exposition and for offering advice as to what topic I should explore. I would also like to thank Kevin Yang and Reuben Stern for aiding me in checking for errors and offering advice on what information was superfluous. To My Haters. Contents:
1 Introduction
2 The Radon Transform: 2.1 Two Dimensions ($R^2$); 2.2 Higher Dimensions ($R^n$); 2.3 Dual Transform; 2.4 Gaussian distribution
3 Properties: 3.1 Homogeneity and scaling; 3.2 Linearity; 3.3 Transform of a linear transformation; 3.4 Shifting
4 Inversion: 4.1 Inversion formulas; 4.2 Unification
5 Relation to the Fourier Transform: 5.1 Fourier and Radon; 5.2 Another Inversion
6 Application
Chapter 1. Introduction. The reconstruction problem has long been of interest to mathematicians, and with the advancements in experimental science, applied fields also needed a solution to this issue. The reconstruction problem concerns distributions and profiles. Given the projected distribution (or profile), what can be known about the actual distribution? In 1917, Johann Radon of Austria presented a solution to the reconstruction problem (he was not attempting to solve this problem) with the Radon transform and its inversion formula. He developed this solution after building on the work of Hermann Minkowski and Paul Funk while maintaining private conversation with Gustav Herglotz.