(A Thousand Points Of) Light on Biased Language

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Culture Wars' Reloaded: Trump, Anti-Political Correctness and the Right's 'Free Speech' Hypocrisy

The 'Culture Wars' Reloaded: Trump, Anti-Political Correctness and the Right's 'Free Speech' Hypocrisy Dr. Valerie Scatamburlo-D'Annibale University of Windsor, Windsor, Ontario, Canada Abstract This article explores how Donald Trump capitalized on the right's decades-long, carefully choreographed and well-financed campaign against political correctness in relation to the broader strategy of 'cultural conservatism.' It provides an historical overview of various iterations of this campaign, discusses the mainstream media's complicity in promulgating conservative talking points about higher education at the height of the 1990s 'culture wars,' examines the reconfigured anti-PC/pro-free speech crusade of recent years, its contemporary currency in the Trump era and the implications for academia and educational policy. Keywords: political correctness, culture wars, free speech, cultural conservatism, critical pedagogy Introduction More than two years after Donald Trump's ascendancy to the White House, post-mortems of the 2016 American election continue to explore the factors that propelled him to office. Some have pointed to the spread of right-wing populism in the aftermath of the 2008 global financial crisis that culminated in Brexit in Europe and Trump's victory (Kagarlitsky, 2017; Tufts & Thomas, 2017) while Fuchs (2018) lays bare the deleterious role of social media in facilitating the rise of authoritarianism in the U.S. and elsewhere. Other explanations refer to deep-rooted misogyny that worked against Hillary Clinton (Wilz, 2016), a backlash against Barack Obama, sedimented racism and the demonization of diversity as a public good (Major, Blodorn and Blascovich, 2016; Shafer, 2017). -

Online Media and the 2016 US Presidential Election

Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters. Citation: Faris, Robert M., Hal Roberts, Bruce Etling, Nikki Bourassa, Ethan Zuckerman, and Yochai Benkler. 2017. Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election. Berkman Klein Center for Internet & Society Research Paper. Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:33759251 Terms of Use: This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA AUGUST 2017. PARTISANSHIP, PROPAGANDA, & DISINFORMATION: Online Media & the 2016 U.S. Presidential Election. Robert Faris, Hal Roberts, Bruce Etling, Nikki Bourassa, Ethan Zuckerman, Yochai Benkler. ACKNOWLEDGMENTS This paper is the result of months of effort and has only come to be as a result of the generous input of many people from the Berkman Klein Center and beyond. Jonas Kaiser and Paola Villarreal expanded our thinking around methods and interpretation. Brendan Roach provided excellent research assistance. Rebekah Heacock Jones helped get this research off the ground, and Justin Clark helped bring it home. We are grateful to Gretchen Weber, David Talbot, and Daniel Dennis Jones for their assistance in the production and publication of this study. This paper has also benefited from contributions of many outside the Berkman Klein community. The entire Media Cloud team at the Center for Civic Media at MIT’s Media Lab has been essential to this research. -

Fact Or Fiction?

The Ins and Outs of Media Literacy 1 Part 1: Fact or Fiction? Fake News, Alternative Facts, and other False Information By Jeff Rand La Crosse Public Library 2 Goals To give you the knowledge and tools to be a better evaluator of information Make you an agent in the fight against falsehood 3 Ground rules Our focus is knowledge and tools, not individuals You may see words and images that disturb you or do not agree with your point of view No political arguments Agree 4 Historical Context “No one in this world . has ever lost money by underestimating the intelligence of the great masses of plain people.” (H. L. Mencken, September 19, 1926) 5 What is happening now and why 6 Shift from “Old” to “New” Media Business/Professional Individual/Social Newspapers Facebook Magazines Twitter Television Websites/blogs Radio 7 News Platforms 8 Who is your news source? Professional? Personal? Educated Trained Experienced Supervised With a code of ethics https://www.spj.org/ethicscode.asp 9 Social Media & News 10 Facebook & Fake News 11 Veles, Macedonia 12 Filtering Based on: Creates filter bubbles Your location Previous searches Previous clicks Previous purchases Overall popularity 13 Echo chamber effect 14 Repetition theory Coke is the real thing. Coke is the real thing. Coke is the real thing. Coke is the real thing. Coke is the real thing. 15 Our tendencies Filter bubbles: not going outside of your own beliefs Echo chambers: repeating whatever you agree with to the exclusion of everything else Information avoidance: just picking what you agree with and ignoring everything else Satisficing: stopping when the first result agrees with your thinking and not researching further Instant gratification: clicking “Like” and “Share” without thinking (Dr. -

Won't Make Hundreds of Millions of Dollars While Their Employees Are Below the Poverty Line

won't make hundreds of millions of dollars while their employees are below the poverty line. Hillary said "We are going to take things away from you for the common good." Goddess bless her. We need stations like WTPG to show the masses what we liberals plan to do to help this country. Jeff Coryell 44118 The progressive community in Columbus is vibrant and growing. More and more Ohioans are seeing through the empty right wing spin and coming around to the common-sense progressive agenda. They need a radio station that respects their point of view! ~oan Fluharty 43068 Sara Richman 01940 Progressive Talk Radio serves an important for the public to hear another side of the issues. Talk radio is "lopsidedly" one sided and as we know, this is extremely dangerous in a democracy. Boston lost their Progressive Talk Radio and I feel as if I lost a friend. Out of habit, when I turn to the station in the car or in the kitchen, I get annoying rumba music. I wish a petition could be started to have another Boston station broadcast Progressive Talk. I thank you, Sara Richman Karl lKav 143232 Progressive radio is important in Central Ohio because someone has to tell They are more than willing to patronize any advertisers to the channel. arilyn eerman Franken and Schultz have reg Iavis 1230 since it started in Columbus in September 2004. On average, we e listened to AM1230 for eight to ten hours a day (my wife listens to it a 1230, as have many of our friends and acquaintances. -

(Pdf) Download

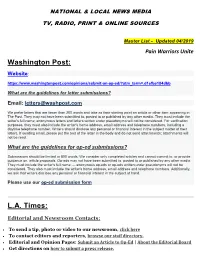

NATIONAL & LOCAL NEWS MEDIA TV, RADIO, PRINT & ONLINE SOURCES Master List - Updated 04/2019 Pain Warriors Unite Washington Post: Website: https://www.washingtonpost.com/opinions/submit-an-op-ed/?utm_term=.d1efbe184dbb What are the guidelines for letter submissions? Email: [email protected] We prefer letters that are fewer than 200 words and take as their starting point an article or other item appearing in The Post. They may not have been submitted to, posted to or published by any other media. They must include the writer's full name; anonymous letters and letters written under pseudonyms will not be considered. For verification purposes, they must also include the writer's home address, email address and telephone numbers, including a daytime telephone number. Writers should disclose any personal or financial interest in the subject matter of their letters. If sending email, please put the text of the letter in the body and do not send attachments; attachments will not be read. What are the guidelines for op-ed submissions? Submissions should be limited to 800 words. We consider only completed articles and cannot commit to, or provide guidance on, article proposals. Op-eds may not have been submitted to, posted to or published by any other media. They must include the writer's full name — anonymous op-eds or op-eds written under pseudonyms will not be considered. They also must include the writer's home address, email address and telephone numbers. Additionally, we ask that writers disclose any personal or financial interest in the subject at hand. Please use our op-ed submission form L.A. -

The Rise of Talk Radio and Its Impact on Politics and Public Policy

Mount Rushmore: The Rise of Talk Radio and Its Impact on Politics and Public Policy Brian Asher Rosenwald Wynnewood, PA Master of Arts, University of Virginia, 2009 Bachelor of Arts, University of Pennsylvania, 2006 A Dissertation presented to the Graduate Faculty of the University of Virginia in Candidacy for the Degree of Doctor of Philosophy Department of History University of Virginia August, 2015 © Copyright 2015 by Brian Asher Rosenwald All Rights Reserved August 2015 Acknowledgements I am deeply indebted to the many people without whom this project would not have been possible. First, a huge thank you to the more than two hundred and twenty five people from the radio and political worlds who graciously took time from their busy schedules to answer my questions. Some of them put up with repeated follow ups and nagging emails as I tried to develop an understanding of the business and its political implications. They allowed me to keep most things on the record, and provided me with an understanding that simply would not have been possible without their participation. When I began this project, I never imagined that I would interview anywhere near this many people, but now, almost five years later, I cannot imagine the project without the information gleaned from these invaluable interviews. I have been fortunate enough to receive fellowships from the Fox Leadership Program at the University of Pennsylvania and the Corcoran Department of History at the University of Virginia, which made it far easier to complete this dissertation. I am grateful to be a part of the Fox family, both because of the great work that the program does, but also because of the terrific people who work at Fox. -

Billionaires Tea Party

1 THE BILLIONAIRES’ TEA PARTY How Corporate America is Faking a Grassroots Revolution [transcript] Barack Obama: This is our moment. This is our time. To reclaim the American Dream and reaffirm that fundamental truth that where we are many, we are one; that while we breathe, we hope; and where we are met with cynicism and doubt and those who tell us we can’t, we will respond with that timeless creed that sums up the spirit of a people: Yes we can. Man on Stage: They’re listening to us. They are taking us seriously, and the message is: It’s our county, and they can have it when they pry it from our cold dead fingers. They work for me! NARRATOR: Where did it all go wrong for Barack Obama and the democrats? After sweeping to power with a promise of hope and change, a citizens uprising called the tea party movement emerged. Their message was “no” to big government spending, “no” to healthcare and climate change legislation, and “no” to Obama himself. Woman: Obama is a communist. He says that he doesn't believe in the constitution. NARRATOR: Then, two years into Obama’s presidency, tea party endorsed candidates emerged to sweep the republicans to victory in the House of Representatives. Male News Reader: 32% of the candidates that were elected last night across this country are affiliated with the Tea Party movement. Rand Paul: There's a Tea Party tidal wave, and we're sending a message to 'em. Female Reporter: And they see it as a repudiation of the President and his policies. -

Business Associations, Conservative Networks, and the Ongoing Republican War Over Medicaid Expansion

Journal of Health Politics, Policy and Law Report on Health Reform Implementation Business Associations, Conservative Networks, and the Ongoing Republican War over Medicaid Expansion Alexander Hertel-Fernandez Theda Skocpol Daniel Lynch Harvard University Editor’s Note: JHPPL has started an ACA Scholar-Practitioner Network (ASPN). The ASPN assembles people of different backgrounds (practitioners, stakeholders, and researchers) involved in state-level health reform implementation across the United States. The newly developed ASPN website documents ACA implementation research projects to assist policy makers, researchers, and journalists in identifying and integrating scholarly work on state-level implementation of the ACA. If you would like your work included on the ASPN website, please contact web coordinator Phillip Singer at [email protected]. You can visit the site at http://ssascholars.uchicago.edu/jhppl/. JHPPL seeks to bring this important and timely work to the fore in Report on Health Reform Implementation, a recurring special section. The journal will publish essays in this section based on findings that emerge from network participants. Thanks to funding from the Robert Wood Johnson Foundation, all essays in the section are published open access. —Colleen M. Grogan Abstract A major component of the Affordable Care Act involves the expansion of state Medicaid programs to cover the uninsured poor. In the wake of the 2012 Supreme Court decision upholding and modifying reform legislation, states can decide whether to expand Medicaid—and twenty states are still not proceeding as of August 2015. What explains state choices about participation in expansion, including governors’ decisions to endorse expansion or not as well as final state decisions?
We tackle this For comments, inspiration, and information helpful to our research, we especially thank Katherine Swartz, Jacob Steinberg-Otter, and Christopher Howard and his associates in Virginia. -

Anti-Reflexivity: the American Conservative Movement's Success

See discussions, stats, and author profiles for this publication at: https://www.researchgate.net/publication/249726287 Anti-reflexivity: The American Conservative Movement's Success in Undermining Climate Science and Policy Article in Theory Culture & Society · May 2010 DOI: 10.1177/0263276409356001 CITATIONS READS 360 1,842 2 authors, including: Riley E. Dunlap Oklahoma State University - Stillwater 369 PUBLICATIONS 25,266 CITATIONS SEE PROFILE Some of the authors of this publication are also working on these related projects: Climate change: political polarization and organized denial View project Tracking changes in environmental worldview over time View project All content following this page was uploaded by Riley E. Dunlap on 30 March 2014. The user has requested enhancement of the downloaded file. Theory, Culture & Society http://tcs.sagepub.com Anti-reflexivity: The American Conservative Movement’s Success in Undermining Climate Science and Policy Aaron M. McCright and Riley E. Dunlap Theory Culture Society 2010; 27; 100 DOI: 10.1177/0263276409356001 The online version of this article can be found at: http://tcs.sagepub.com/cgi/content/abstract/27/2-3/100 Published by: http://www.sagepublications.com Additional services and information for Theory, Culture & Society can be found at: Email Alerts: http://tcs.sagepub.com/cgi/alerts Subscriptions: http://tcs.sagepub.com/subscriptions Reprints: http://www.sagepub.com/journalsReprints.nav Permissions: http://www.sagepub.co.uk/journalsPermissions.nav Citations http://tcs.sagepub.com/cgi/content/refs/27/2-3/100 Downloaded from http://tcs.sagepub.com at OKLAHOMA STATE UNIV on May 25, 2010 100-133 TCS356001 McCright_Article 156 x 234mm 29/03/2010 16:06 Page 100 Anti-reflexivity The American Conservative Movement’s Success in Undermining Climate Science and Policy Aaron M. -

Newsbusters • 2007

MEDIA RESEARCH CENTER 2007 ANNUAL REPORT 20 YEARS OF EXCELLENCE CONTENTS A Message from L. Brent Bozell III 1 News Analysis Division 2 Business & Media Institute 4 Culture and Media Institute 6 TimesWatch 8 CNSNews.com 9 Farewell to David Thibault 11 MRCAction.org 12 FightMediaBias.org 12 NewsBusters.org 13 Youth Education & Internship Program 14 MRC’s Web sites 15 Publications 16 Whitewash 17 Impact: TV, Radio, Print & Web 18 MRC Through the Years Intro: 20 1987-1988 22 1989-1990 23 1991-1992 24 1993-1994 25 1995-1996 26 1997-1998 27 1999-2000 28 2001-2002 29 2003-2004 30 2005-2006 31 The MRC’s 20th Anniversary Gala 32 MRC Galas Through the Years 34 MRC Leadership and Board of Trustees 36 MRC Associates 37 Honor Roll of Major Benefactors 40 Inside the MRC 2007 41 2007 Financial Report 42 Letter to L. Brent Bozell III from William F. Buckley Jr. 44 ABOUT THE COVER: At the MRC’s 20th Anniversary Gala, Herman Cain, Chairman of the MRC’s Business & Media Institute, and MRC Board of Trustees Chairman Dick Eckburg surprised Brent Bozell with this painting they commissioned by renowned artist Steve Penley. Penley is best known for his bold and vibrant paintings of historical and popular icons, including Ronald Reagan, George Washington and Winston Churchill. The massive 7’ x 9’ painting now hangs outside Mr. Bozell’s office suite at the MRC’s headquarters in Alexandria, Virginia. A Message from L. Brent Bozell III In 2007, the liberal media proved, once again, that they are the most powerful arm of the Left. -

© Copyright 2020 Yunkang Yang

© Copyright 2020 Yunkang Yang The Political Logic of the Radical Right Media Sphere in the United States Yunkang Yang A dissertation submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy University of Washington 2020 Reading Committee: W. Lance Bennett, Chair Matthew J. Powers Kirsten A. Foot Adrienne Russell Program Authorized to Offer Degree: Communication University of Washington Abstract The Political Logic of the Radical Right Media Sphere in the United States Yunkang Yang Chair of the Supervisory Committee: W. Lance Bennett Department of Communication Democracy in America is threatened by an increased level of false information circulating through online media networks. Previous research has found that radical right media such as Fox News and Breitbart are the principal incubators and distributors of online disinformation. In this dissertation, I draw attention to their political mobilizing logic and propose a new theoretical framework to analyze major radical right media in the U.S. Contrasted with the old partisan media literature that regarded radical right media as partisan news organizations, I argue that media outlets such as Fox News and Breitbart are better understood as hybrid network organizations. This means that many radical right media can function as partisan journalism producers, disinformation distributors, and in many cases political organizations at the same time. They not only provide partisan news reporting but also engage in a variety of political activities such as spreading disinformation, conducting opposition research, contacting voters, and campaigning and fundraising for politicians. In addition, many radical right media are also capable of forming emerging political organization networks that can mobilize resources, coordinate actions, and pursue tangible political goals at strategic moments in response to the changing political environment. -

3. Tilling Bigoted Lands, Sowing Bigoted Seeds

You Are Here 3. Tilling Bigoted Lands, Sowing Bigoted Seeds Ryan M. Milner, Whitney Phillips Published on: Apr 27, 2020 License: Creative Commons Attribution 4.0 International License (CC-BY 4.0) The farmhouse sits left of center in the worn, gray image, dated about 1935. “This photograph shows an approaching dust storm in Morton County, Kansas,” the Kansas Historical Society caption reads, and does it ever. There are a few other objects in the frame, set back about a quarter mile: possibly a car or set of cars, possibly a shed or set of sheds, possibly a tree or set of trees. Otherwise, all that surrounds the house is dirt. Crumbling, dry, dirt on the ground, and darkening, billowing, dirt in the sky, a hundred feet up, maybe higher, like a mountain range roaring toward the farm. For the Morton County homesteader who worked that farm—and indeed for everyone in the Southern Plains region—this looming dust storm, and the other looming dust storms that plagued the Dust Bowl throughout the 1930s, must have felt like divine retribution. In some ways, it was. Rather than being purely natural phenomena, the storms were the result of terrible meteorological luck combined with social, political, and technological forces all colliding with the Great Depression. One of these forces was the influx in the 1920s of “suitcase farmers” lured West by the promise of easy money, then lulled by the yields of an unusually rainy decade. Very often these farmers knew very little, or cared very little, about sustainable agriculture; they just planted and planted and planted and harvested and harvested and harvested.