Computer Vision System for a Chess-Playing Robot

Total Pages: 16

File Type: pdf, Size: 1020 Kb

Recommended publications

Chess-Moves-November

November / December 2006. Newsletter of the English Chess Federation. £1.50. European Union Individual Chess Championships, Liverpool World Museum, Wednesday 6th September to Friday 15th September 2006. FM Steve Giddins reports on round 10. Nigel Short became the outright winner of the 2006 EU Championship by beating Mark Hebden in the 10th round, whilst his main rivals could only draw. The former world title challenger later declared himself “extremely chuffed” at having won on his first appearance in an international tournament in his home country since 1989. Hebden is a player whose opening repertoire is well known, and has been almost constant for his entire chess-playing life. As Black against 1 e4, he plays only 1...e5, usually either the Marshall or a main line Chigorin. Short avoided these with 3 Bc4, secure in the knowledge that Hebden only ever plays 3...Nf6. Over recent years, just about every top-level player has abandoned the Two Knights Defence, on the basis that Black does not have enough compensation after 4 Ng5. Indeed, after the game, Short commented that “The Two Knights just loses a pawn!”, and he added that anybody who played the line regularly as Black “is taking their life in their hands”. Hebden fought well, but never really had enough for his pawn, and eventually lost the ending. Meanwhile, McShane and Sulskis both fought out hard draws with Gordon and Jones respectively. Unlike Short, McShane chose to avoid a theoretical dispute and opted for the Trompowsky. He did not achieve much for a long time, and although a significant bout of manoeuvring eventually netted him an extra pawn in the N+P ending, Black’s king was very active and he held the balance.

American Chess Federation Congress at Boston the U. S. S. R

Honor Prize Problem, E. Zepler, Chelmsford, England. White mates in five moves. The Official Organ of the American Chess Federation. This issue features a generous selection of games from the American Chess Federation Congress at Boston, the U.S.S.R. Championship and other tourneys. August, 1938. Monthly 30 cts. Annually $3.00. The Chess Review, Official Organ of the American Chess Federation. Editors: Israel A. Horowitz, Samuel S. Cohen. Associate Editors: Fred Reinfeld, Barnie F. Winkelman. Problem Editor: R. Cheney. BY THE WAY. THE A. C. F. CONGRESS. This year's congress at Boston (treated in detail in another part of this issue) was a great success in many ways. The steady growth of interest in chess was mirrored in the numerous summaries and articles in the Boston press by John F. Barry, Charles Sumner Jacobs and Frank Perkins. The coverage by the New York Times was not up to its high level, but chess players were grateful for its large and splendid selection of some of the best games. An unfortunate aftermath of the tourney was the accident which occurred to Mrs. Bain, Mrs. McCready and Miss Weart. They were returning from Boston during the rainy spell, and their car skidded on a slippery pavement, going into a telegraph pole. The car overturned, pinning Miss Weart, who luckily escaped with a fractured shoulder. Mrs. Bain suffered a fractured vertebra, necessitating the wearing of a cast for several months. We do not know the

Chess-July.Pdf

Edinburgh Expedition. Ever a man for a challenge, Sean Marsh returned to Edinburgh for a long weekend to put some of Chess in Schools and Communities’ plans into action. It is strange how plans can snowball. Edinburgh was not originally in the thoughts of Chess in Schools and Communities (‘CSC’) when expansion plans were discussed. In fact, the closest we came in the discussions was Newcastle, and I delivered a CSC training day there in April 2017. Richard Payne, Chairperson of Lothian Junior Chess, attended the Newcastle day and shortly afterwards he contacted me to suggest the innovative plan of adding a training day to his junior chess tournament, with the hope of attracting any parents and teachers who happened to be at the tournament with their juniors at the time. The tournament in question was in Edinburgh and I delivered the training day there in October 2017. It was a success and it generated a lot of interest. CSC provided some of our new contacts with boards and sets, and I have maintained regular contact with several of the people who attended the courses. Colin Paterson, who is currently helping me with more expansion plans, even made the long trip to the London Chess Classic specifically for one of my full-length training days, and Andrew MacQueen joined me on Teesside for a number of shadowing sessions in the Spring term. Meanwhile, we also made more schools aware of the Delancey UK Chess Challenge, which is an important part of the school calendar. Back to base, and it was time for discussions on how to build upon our initial successes.

David Delucia Collection Highlights of the Chess Library Autographs

David DeLucia Collection: Highlights of the Chess Library. Autographs and Ephemera. Agnel: Two-page ALS from H.R. Agnel to E.B. Cook. Oct. 27, 1857. Alapin: Two ALS. Alapin: Postcard written to Ranneforth. Alapin: Picture postcard of participants from Wien 1898. Written and signed by S. Alapin. Albin: Albin's Schach-Aphorismen und Reminiscenzen. Hannover, 1899, inscribed. Alekhine: 30 ALS (autographed letter signed). Always a favorite among collectors. Whether it be a simple signature, signed card, book, photo or letter, demand for Alekhine has always been strong and desirability high. Alekhine: 15 TLS (typed letter signed). Alekhine: 1927 WCC album with six typed game scores (Alekhine wins), all signed. A fine blue leather album containing: six typed game scores (Alekhine’s victories), all on the 1927 World Chess Championship stationery, each signed by Alekhine; signed picture photo of Alekhine; two ALS by Alekhine; ALS by Capablanca; and one drawn game score, written by a scribe on the 1927 World Chess Championship stationery and signed by both players. One of my favorite items in the collection. Alekhine: New York 1924 tournament book, signed. Alekhine: Nottingham 1936 tournament book, signed. Alekhine: My Best Games, 1908-23, inscribed. Alekhine: Auf dem Wege zur Weltmeisterschaft (1923-1927), inscribed. Alekhine: Das New Yorker Schachturnier. 1928. Inscribed. Alekhine: Four volumes, two with his handwriting, from his library. I purchased a copy of Internationale Kaiser Jubiläums Schach Turnier, Wien 1898, from Stuart Wagman’s estate in Italy. I found Stuart’s writing on the Foreword, “This is Alekhine’s copy, purchased by me at Brentano’s in Paris, April 1949.

Chess-Moves-March-Ap

March / April 2008. Newsletter of the English Chess Federation. £1.50. Chess for Schools: the beginning. The office of Fergus Christie, Sales Director of Holloid Plastics, the sponsor behind the whole Chess for Schools scheme. The first of the 250,000 chess sets have rolled off the production line in the Holloid factory and have landed in their sales director's office as pictured above. The ECF Office has logged over 2000 requests from schools for chess sets, with many more yet to be processed. If your local school has not yet requested their 10 free sets, then please encourage the school to send an email to [email protected] Editorial: I am very pleased to announce that the official opening of the National Chess Library will be on the 10th June 2008 (for invitees only). Charles Clarke MP (former Home Secretary and Minister for Education) will formally perform the opening. Sir Richard Clarke, the father of Charles, donated his personal library to the BCF. It would be wonderful if our library grew to rival the largest Chess Libraries in the world (I am assured space will be provided). To do this we need donations of a single chess book or whole libraries (you may need the room). ECF News: Monroi Personal Chess Manager, Electronic Notation Device. It is my view that the Monroi system is a direct substitution for a scorebook, with the advantage that pages cannot accidentally be turned over during a game to reveal a previous game. The Monroi system is endorsed by FIDE and the Monroi devices are used at many prestigious international tournaments. It is my recommendation that they should be permitted to be used in any event where scorebooks are allowed or where the controller does not need a paper copy of the game

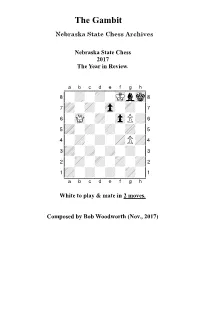

2017 Year in Review

The Gambit. Nebraska State Chess Archives. Nebraska State Chess 2017: The Year in Review. [Chess diagram] White to play & mate in 2 moves. Composed by Bob Woodworth (Nov., 2017). From Kent’s Corner. Greetings my chess friends and welcome to another issue of the Gambit. As always, it is my pleasure to produce this issue, albeit a lot of effort to achieve the final result. Of course, I am very thankful to my contributors. Special thanks to Dr. John Tomas for his recent and past articles detailing his chess experiences in Nebraska. John is truly a wonderful writer and I was saddened to learn this is John’s last article for the Gambit. I do hope John reconsiders this. John’s articles will always have a home as long as I edit this newsletter. Nebraska State Chess Historical Archivist, Robert Woodworth, continues to write insightful and interesting articles, many of which are featured in this issue. In addition to Bob’s Gambit material, Bob has provided this editor a great deal of encouragement and support. Thank you Bob, for all you have done for me and the Gambit readership. NSCA President, John Hartmann, is doing great things for chess on the state, national and international levels. Special thanks to John for his Gambit material as well as his generous gift of a chess clock for yours truly. In addition to my regular contributors, I received nice letters for publication from Nebraska chess icon, Loren Schmidt. It’s always good to hear from Loren and know he is keeping tabs on Nebraska chess activities.

The Chess Player's Magazine

This is a reproduction of a library book that was digitized by Google as part of an ongoing effort to preserve the information in books and make it universally accessible. https://books.google.com The Chess Player's Magazine. Johann Jacob Löwenthal. The New York Public Library, bequest of Gustavus A. Pfeiffer. THE CHESS PLAYER'S MAGAZINE. July, 1863. Chess study, by Herr Horwitz. White having the move, wins. London: E. Owen, 67, Strand. Price one shilling. "Scilicet ingenuas didicisse fideliter artes emollit mores, nec sinit esse feros." Ovid. It would be false modesty on our part to apologise, to the public at large, or to the Chess-playing world in particular, for introducing a new Chess periodical, as in doing so we only supply a deficiency which already must have been strongly felt by every English Chess player, adept, amateur, or tyro. Thus, whilst Germany can boast of its Schachzeitung, France of its La Régence, Holland of its Sissa, the Anglo-Saxon race alone, whose rule predominates on two continents, has no Chess representative of its own in the republic of letters. Neither the United States nor England have, lately, had the advantage of a Chess organ. Our transatlantic brethren, it must be confessed, have strong and valid reasons for their momentary neglect of this noble game, in the fratricidal strife which rages in their country; but we, here, on the shores of the Thames, where, as our Chancellor of the Exchequer assures us, peace and plenty reigns, have no such excuses to plead.

KATOLICKI UNIWERSYTET LUBELSKI JANA PAWŁA II Wydział Nauk Humanistycznych Instytut Filologii Angielskiej

KATOLICKI UNIWERSYTET LUBELSKI JANA PAWŁA II, Wydział Nauk Humanistycznych, Instytut Filologii Angielskiej. Krzysztof Pańczyk (student record no. 110 645). Multilingual Chess Terminology: a Synchronic and Diachronic Perspective. Master's thesis written under the supervision of Dr Maria Bloch-Trojnar. Lublin 2009. Acknowledgements. I would like to thank above all two people without whom this thesis would never have been written: my master’s dissertation supervisor Doctor Maria Bloch-Trojnar and my bachelor’s thesis supervisor Professor Anna Malicka-Kleparska, who encouraged me to write a work on chess. I really appreciate their kindness, invaluable advice, comments, patience, understanding, help in orientation of the work and any other support. I would also like to thank everybody who helped me in different things connected with writing, first of all Mr Tomasz Lissowski for numerous consultations concerning history, literature, vocabulary as well as putting me in contact with a German chess-player. I am grateful to Dr Michael Negele from Germany for his comments concerning texts in his native language. I thank my friend Sergey Shilov (Ukraine) who helped me a lot in Russian and Elżbieta Nowak for a consultation concerning Italian. I also thank my parents and my friends: Jacek Ilczuk, Zbigniew Szymczak, Mirosław Sarwiński, Piotr Murdzia, Adam Wróblewski (Canada), Grażyna and Krzysztof Rynkiewicz, Luigi Niewiadomski (USA) and Zygmunt Urbanowicz for different kinds of help. Contents: List of tables, Abbreviations, Symbols used in Chess Notation, Periods

A LETTER to BERT (A Medley About Chess Libraries, Dealers and Collectors)

A LETTER TO BERT (A medley about chess libraries, dealers and collectors). Bob Meadley, 2001. THERE IS NO COPYRIGHT. ANYONE IS WELCOME TO USE THE CONTENTS. FOREWORD. This letter to Bert Corneth was expanded to include the dealers and collectors I have met over the years and a chapter on how I acquired the Christmas Series and finally three pages on how chess gradually ensnared me throughout my life. I apologise for the disjointed approach taken and don’t wish to rewrite it. As I am unable to use OCR scanning because of the poor quality of the manual typewriter, the fact that I have to retype this ensures I won’t rewrite it. Most of the facts are there and all those interested in chess libraries &c. can add more and correct mistakes. I’m told a floppy disc holds 250 A4 size pages of text so I have hardly half filled this disc. One of my real future delights would be to receive a disc in return with additions and corrections to my material. As for the disc, it seemed to me that sharing information as cheaply as possible was simply done by sending a disc in the mail. Those of you who wish a hard copy should be able to get the disc printed out at a secretarial service. The last 3 months have been quite enjoyable (including the 1996 period) and the hardest part was the chapter on the Christmas Series as it had to be dug out of files. What makes a collector? Well, in my case, in rural NSW, I needed a library to keep me sane.

Chess Federation Vol

America's Chess Newspaper. Copyright 1959 by United States Chess Federation. Vol. XIV, No. 2. Sunday, September 20, 1959. 15 Cents. START OF USCF PLAYERS' FUND. By USCF Business Manager Frank R. Brady. As every chessplayer knows, there are always insurmountable problems when money is needed to send a team abroad or to subsidize a player who is competing in an international event and this has very often resulted in the United States not being represented in important international events at all. Many sources have been tapped and ideas used to help secure representation of our players in individual and team matches abroad so that we could guarantee them an opportunity to participate in various events in the past. This year, the American Chess Foundation and the United States Chess Federation made arrangements to raise funds co-jointly so that the entire expenses of Bobby Fischer and his second would be covered and that the travelling expenses of Pal Benko would also be USCF PLAYERS' FUND: RESOLUTION OF PURPOSE. By Jerry G. Spann, Pres., USCF. The U. S. Chess Federation Players' Fund, endowed entirely by contributions from chess enthusiasts and the public at large, shall be used solely to underwrite the direct expenses of players representing the United States in important International Chess Events. All monies received are to be acknowledged in writing and deposited in a separate and special account, to be designated U. S. CHESS FEDERATION PLAYERS' FUND. All disbursements therefrom are to be made specifically for purpose set forth above. It should be clearly understood that every penny received shall be expended wholly for the purpose intended.

Everywhere It's Junior Chess NEW SCHOLASTIC OSIAS BAIN WINS ST

Chess Life. Volume I, Number 18. Official Publication of the United States Chess Federation. Tuesday, May 20, 1947. Everywhere It's Junior Chess. NEW SCHOLASTIC CHESS LEAGUE BOOSTS JR. CHESS. Further strides were made in Pennsylvania scholastic chess [...] OSIAS BAIN WINS QUEBEC CITY CHAMPIONSHIP. In Quebec (Canada) Osias Bain, a student of chemistry at Laval University, won a three-cornered play-off with Marcel Dion and [...] Lemelin to become City Champion of Quebec. In the play-off Bain won from Dion and drew with Lemelin, while Lemelin lost to Dion. [...] ST. PETE (FLA.) HAS 26th ANNUAL SOUTHERN MEET. July 3 at St. Petersburg (Fla.) will be the scene of the 26th Annual Tournament of the Southern Chess Association. The St. Petersburg Chess Club will play host to this historic event, which had humble beginnings in [...]

2016 Designing Chessmen Brochure3.Indd

Designing Chessmen: A Taste of The Imagery of Chess. [...] other publications. Strangely, the details of the show were not documented thoroughly and the exhibition had been widely ignored among art historians. No checklist was recorded, or has been found, so there is much speculation as to what each artist contributed to the show. Thanks to the work of independent curator, artist, and scholar Larry List, working as the first guest curator at The Isamu Noguchi Foundation and Garden Museum, New York, in 2005 the exhibition The Imagery of Chess Revisited was organized and an accompanying catalog published. Upon reviewing archival images, Levy’s gallery ledger, and press images, List and a network of cooperative institutions, artists, estates, and private individuals reconstructed much of the original exhibition. Bringing together many of the actual works of art and reconstructing many of the “lost” pieces, the exhibition gave new life to a forgotten unique collaboration and conflation of art and chess. With a mission to interpret the game of chess and its cultural and artistic significance, the World Chess Hall of Fame (WCHOF) is honored to celebrate the history of The Imagery of Chess and its impact on future artists and our own institution. September 2016 marks the fifth anniversary of the WCHOF’s existence in Saint Louis, Missouri. Since our opening, we have staged 10 exhibitions that merge art and chess, two of which were group shows where artists reinterpreted the game of chess and incorporated their artistic ideas into either chess sets or chess-related imagery.