Design of a Real-Time Multiparty DAW Collaboration Application Using Web

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


AVID Pro Tools | S3 Data Sheet

Pro Tools | S3: Small format, big mix

Based on the award-winning Avid® Pro Tools® | S6, Pro Tools | S3 is a compact, EUCON™-enabled, ergonomic desktop control surface that offers a streamlined yet versatile mixing solution for the modern sound engineer. Like S6, S3 delivers intelligent control over every aspect of Pro Tools and other DAWs, but at a more affordable price. While its small form factor makes it ideal for space-confined or on-the-go music and post mixing, it packs enormous power and accelerated mixing efficiency for faster turnarounds, making it the perfect fit everywhere, from project studios to the largest, most demanding facilities.

Take complete control of your studio

Experience the deep and versatile DAW control that only Avid can deliver. With its intelligent, ergonomic design and full EUCON support, S3 puts tightly integrated recording, editing, and mixing control at your fingertips, enabling you to work smarter and faster, with your choice of DAWs, to expand your mixing capabilities and job opportunities. And because S3 is application-aware, you can switch between different DAW sessions in seconds. Plus, its compact footprint fits easily into any space, giving you free rein of the “sweet spot.”

Experience exceptional integration, ergonomics, and visual feedback

No other surface offers the level of DAW integration and versatility that Avid control surfaces provide, and S3 is no different. Its intuitive controls feel like an extension of your software, enabling you to mix with comfort, ease, and speed, with plenty of rich visual feedback to guide you.

Work smarter and faster

S3 combines traditional console layout design with the proven advancements of Pro Tools | S6, ensuring highly intuitive operation, regardless of your experience level.

• 16 channel strips, each with a touch-sensitive, motorized fader and 10-segment signal level meter
• 32 touch-sensitive, push-button rotary encoders for panning, gain control, plug-in parameter adjustments, and more (16 channel control, 16 assignable), each with

Network Installation Guide Additional Information for Institutional Installation of Steinberg Software Network Installation Guide | Version 1.7.2

Version: 1.7.2. Author: Sebastian Asdonk. Date: 30/07/2014.

Synopsis

This document provides background information about the installation, activation and registration process of Steinberg software (e.g. Cubase, WaveLab, and Sequel). It is a collection that is meant to assist system administrators with the task of installing our software on network systems, activating the products correctly and finally registering the software online. The aim of this document is to prevent admins from being confronted with some familiar issues occurring in the field. Since institutions around the world have different systems in place, Steinberg cannot provide the standard solution for your setup. However, we discovered some common ground across different configurations and network facilities. If you have any information about your currently working network setup that you would like to share with us, please feel free to do so. You can always write to [email protected]

Content

• Synopsis
• Content
• First steps
• Installation
• Activation

Sonivox VOCALIZER™ Quick Start Guide

License and Copyrights

Copyright © 2010 Sonic Network, Inc. Internationally Secure. All rights reserved.

SONiVOX, 561 Windsor Street, Suite A402, Somerville, MA 02143, 617-718-0202, www.sonivoxmi.com

This SONiVOX product and all its individual components, referred to from this point on as Vocalizer, are protected under United States and International copyright laws, with all rights reserved. Vocalizer is provided as a license to you, the customer. Ownership of Vocalizer is maintained solely by Sonic Network, Inc. All terms of the Vocalizer license are documented in detail in the Vocalizer End-User License Agreement on the installer that came with this manual. If you have any questions regarding this license, please contact Sonic Network at [email protected].

Trademarks

SONiVOX is a registered trademark of Sonic Network Inc. Other names used in this publication may be trademarks and are acknowledged.

Publication

This publication, including all photographs and illustrations, is protected under international copyright laws, with all rights reserved. Nothing herein can be copied or duplicated without express written permission from Sonic Network, Inc. The information contained herein is subject to change without notice. Sonic Network makes no direct or implied warranties or representations with respect to the contents hereof. Sonic Network reserves the right to revise this publication and make changes as necessary from time to time

Cubase 8 – Quick Start Guide

Quick Start Guide

Cristina Bachmann, Heiko Bischoff, Christina Kaboth, Insa Mingers, Matthias Obrecht, Sabine Pfeifer, Kevin Quarshie, Benjamin Schutte. Steinberg Media Technologies GmbH. www.steinberg.net/trademarks © Steinberg Media Technologies GmbH, 2015

Steinberg Cubase Pro 8.5 / Cubase Artist 8.5

• Forum: www.steinberg.net/forum
• PDF documentation: on Mac OS X under “/Library/Documentation/Steinberg/Cubase 8.5”
• VST instruments: HALion Sonic SE, Groove Agent SE
• Key commands: [Ctrl]/[Command]-[Z] means [Ctrl]-[Z] on Windows and [Command]-[Z] on Mac OS X; [Alt]/[Option]-[X] means [Alt]-[X] on Windows and [Option]-[X] on Mac OS X
• PC / Mac: Windows 7/8.x/10; OS X 10.10/10.11; Intel or AMD CPU; 8GB, 4GB; 15GB; 1920 x 1080, 1366 x 768; DirectX 10, WDDM 1.1 (Windows); USB, USB-eLicenser; DVD ROM
• Support: www.steinberg.net
• Installers: “Setup.exe” (Windows), “Cubase 8.5.pkg” (Mac OS X)
• Licensing: Steinberg USB-eLicenser, eLicenser Control Center

Schwachstellen der kostenfreien Digital Audio Workstations (DAWs) [Disadvantages of Using Free Digital Audio Workstations (DAWs)]

Disadvantages of using free Digital Audio Workstations (DAWs) (German title: Schwachstellen der kostenfreien Digital Audio Workstations (DAWs))

BACHELOR’S THESIS submitted in partial fulfillment of the requirements for the degree of Bachelor of Science in Media Informatics and Visual Computing by Filip Petkoski, Registration Number 0727881, to the Faculty of Informatics at the Vienna University of Technology.

Advisor: Associate Prof. Dipl.-Ing. Dr.techn Hilda Tellioglu
Assistance: Univ.Lektor Dipl.-Mus. Gerald Golka

Vienna, 14th April, 2016. Filip Petkoski, Hilda Tellioglu

Technische Universität Wien, A-1040 Wien, Karlsplatz 13, Tel. +43-1-58801-0, www.tuwien.ac.at

Declaration of Authorship

Filip Petkoski, Wienerbergstrasse 16-20/33/18, 1120 Wien

I hereby declare that I have written this thesis independently, that I have fully cited the sources and aids used, and that I have in every case marked as borrowed, with the source given, all parts of the thesis (including tables, maps, and figures) that are taken from other works or from the Internet, whether verbatim or in substance.

Vienna, 14th April, 2016. Filip Petkoski

Abstract

Modern professional music production today is unthinkable without the use of Digital Audio Workstations (DAWs).


MAX25 Program Documentation: Supported Software and Instruments

Program Documentation (English)

Supported Software and Instruments

Software: Notes

• Ableton Live: This Program is designed to be used with Ableton Live. (MAX25 comes with Ableton Live Lite Akai Live Edition.)
• Reason: For use with Propellerhead Reason. This Program supports the Reason Remote protocol with supplied codec files, which you may need to install. Each module in Reason will automatically map itself to MAX25's controllers. This allows you to use a single MAX25 preset to control all of the modules in Reason.
• Cubase: For use with Steinberg Cubase.
• Logic Pro: For use with Apple Logic Pro.
• FL Studio: For use with Image-Line FL Studio.
• Pro Tools: For use with Pro Tools.
• GM Drums: A General MIDI drum and controller program, ideal for general drum use.
• Chrmatic: A general program in which MAX25's pads use a chromatic scale.
• Ignite: For use with Ignite software by AIR Music Technology. See the included card for your free download!
• PTEX: For use with Pro Tools Express, available for free with many Akai Professional products.
• SONiVOX: A general Program for use with all SONiVOX virtual instruments. For information on their products, please visit www.sonivoxmi.com.
• Air: A general Program preset for use with all AIR virtual instruments. For information on their products, please visit www.airmusictech.com.
• Wobble: For use with Wobble, a dubstep and grime synth by SONiVOX.
• Eighty8: For use with Eighty Eight, a grand piano virtual instrument by SONiVOX.
• Big Bang: For use with Big Bang Cinematic Percussion, by SONiVOX.
• Vocalizr: For use with Vocalizer and Vocalizer Pro, vocal manipulation effects and instruments by SONiVOX.

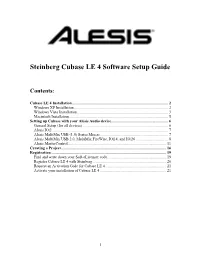

Steinberg Cubase LE 4 Software Setup Guide

Contents:

• Cubase LE 4 Installation
• Windows XP Installation
• Windows Vista Installation
• Macintosh Installation
• Setting up Cubase with your Alesis Audio device
• General Setup (for all devices)
• Alesis IO|2
• Alesis MultiMix USB (1.0) Series Mixers
• Alesis MultiMix USB 2.0, MultiMix FireWire, IO|14, and IO|26
• Alesis MasterControl
• Creating a Project
• Registration
• Find and write down your Soft-eLicenser code



BOL Master of Music in Film Scoring Rig Requirements

The general rig requirements for the Master of Music in Film Scoring are outlined in this document. Note that each individual course may have additional requirements (or exceptions) listed in the Course-Specific Requirements. You can find special deals on several products through the Student Deals page. The Course-Specific Requirements page in the Getting Started section may also contain deals exclusive to that course.

Your COMPUTER must be powerful enough to run your chosen software and large orchestral film scoring sessions smoothly. We recommend at least 32GB of RAM, Solid State Drives (SSDs), and a recent Intel i7 processor or better.

Note: M1 (Apple Silicon) Macs are not currently recommended, as they do not support enough RAM for the program.

HARDWARE

● MIDI controller, with a minimum of 49 keys, mod wheel, and other MIDI CC knobs/faders, such as:
  ○ Novation Launchkey 49
● Professional quality mixing headphones, suitable for orchestral mockup production and mixing.
  ○ Recommended: Sennheiser HD600 or better
  ○ Minimum: Audio-Technica ATH-M50x
● Professional quality studio monitor speakers (pair)
  ○ Recommended: JBL 308P or better
  ○ Minimum: JBL 305P

Note: Some courses may require either headphones or monitors, but having both is highly recommended.

● Audio interface, for the purpose of driving your monitors (or high-impedance headphones).

SOFTWARE

● Digital Audio Workstation (DAW)
  ○ Avid Pro Tools version 2018.12 or higher is a hard requirement for OCOMP-588, 599, and 637.
  ○ One of the following as your primary DAW for composing and sequencing is required:
    ■ Steinberg Cubase Pro or Nuendo
    ■ Apple Logic Pro X (Mac only)
    ■ MOTU Digital Performer
    ■ Reaper
    ■ (Avid Pro Tools)

Note: While Pro Tools can serve as your single primary DAW for the program, we recommend having an additional DAW from the above list for sequencing.

Vstrack Report FINAL 2

VSTrack: tracking software for VST hosts

Chris Nash

Volume 1 – Report (including accompanying CD-Rom)

Submitted as part fulfilment for the degree of M.Phil. Music & Media Technologies, Dept. of Electronic & Electrical Engineering and School of Music, Trinity College Dublin, 2004

Declaration

I hereby declare that this thesis has not been submitted as an exercise for a degree at this or any other University and that it is entirely my own work. I agree that the Library may lend or copy this thesis upon request.

Signed: _____________________________ Date: ____________________

Abstract

Tracking is a highly-developed method of computer music production that is divorced from the conventional MIDI sequencer and musical score approaches. Originating on the Amiga platform (with MOD files), tracker software traditionally comprises an internal sample-based synthesis engine and distinct “spreadsheet”-like user interface, which is sometimes likened to a digital pianola or step-sequencer – providing a powerful music platform without necessitating specialised hardware. Despite its relative merits, tracking is a technology that doesn't enjoy mainstream focus or credibility in the professional music world. With most composers firmly ensconced in sequencer packages, and given the general ‘technophobia’ of musicians, it seems the best way to draw them to this technology is to bring the technology to them – by importing the tracker interface and architecture into a sequencer. Taking the role of human-computer interaction (HCI) in music as a point of departure, this project reviews both the merits and the potential of such an endeavour, together with the pitfalls and limits of existing approaches.