F2: MEMORY-CENTRIC COMPUTING from IoT to ARTIFICIAL INTELLIGENCE and MACHINE LEARNING


Recommended publications

-

The Conference Program Booklet

Austin Convention Center Conference Austin, TX Program http://sc15.supercomputing.org/ Conference Dates: Exhibition Dates: The International Conference for High Performance Nov. 15 - 20, 2015 Nov. 16 - 19, 2015 Computing, Networking, Storage and Analysis Sponsors: SC15.supercomputing.org SC15 • Austin, Texas The International Conference for High Performance Computing, Networking, Storage and Analysis Sponsors: 3 Table of Contents Welcome from the Chair ................................. 4 Papers ............................................................... 68 General Information ........................................ 5 Posters Research Posters……………………………………..88 Registration and Conference Store Hours ....... 5 ACM Student Research Competition ........ 114 Exhibit Hall Hours ............................................. 5 Posters SC15 Information Booth/Hours ....................... 5 Scientific Visualization/ .................................... 120 Data Analytics Showcase SC16 Preview Booth/Hours ............................. 5 Student Programs Social Events ..................................................... 5 Experiencing HPC for Undergraduates ...... 122 Registration Pass Access .................................. 7 Mentor-Protégé Program .......................... 123 Student Cluster Competition Kickoff ......... 123 SCinet ............................................................... 8 Student-Postdoc Job & ............................. 123 Convention Center Maps ................................. 12 Opportunity Fair Daily Schedules -

NVIDIA Chief Scientist Bill Dally Receives Lifetime Achievement Award from Leading Japanese Tech Society

NVIDIA Chief Scientist Bill Dally Receives Lifetime Achievement Award From Leading Japanese Tech Society First Non-Japanese Winner of Honor Since Marvin Minsky, Alan Kay Japan's largest IT society, the Information Processing Society of Japan (IPSJ), today honored NVIDIA Chief Scientist and Senior Vice President of Research Bill Dally with the Funai Achievement Award for his extraordinary achievements in the field of computer science and education. Dally is the first non-Japanese scientist to receive the award since the first two awards were given out in 2002 to Alan Kay (a pioneer in personal computing) and in 2003 to Marvin Minsky (a pioneer in artificial intelligence). The Funai Achievement Award recognizes Dally's accomplishments in computer architecture, particularly in the areas of parallel computing and Very Large Scale Integration processing. The IPSJ noted that Dally has made major contributions in education at the Massachusetts Institute of Technology, Stanford University, and in industry as NVIDIA's chief scientist. "I'm honored to be selected for one of the world's major prizes in computer science. It's particularly rewarding to be in the company of computer science luminaries like Alan Kay and Marvin Minsky," said Dally, who received the award at an IPSJ event in Matsuyama, Japan. "I'm grateful to the IPSJ for acknowledging the importance of my research in parallel computing." Professor Shuichi Sakai, dean of the Computer Science Department at the University of Tokyo, said, "Bill Dally has always been a revolutionary rather than a revisionist in computer science." Dally's achievements across more than 30 years of work and research include developing the system and network architecture, signaling, routing and synchronization technology found in most large parallel computers today. -

NVIDIA Chief Scientist Bill Dally Receives Computer Architecture's Highest Honor

NVIDIA Chief Scientist Bill Dally Receives Computer Architecture's Highest Honor ACM, IEEE Call Dally a Visionary for His Work Advancing Parallel Processing SANTA CLARA, CA -- Two leading computing organizations today honored NVIDIA chief scientist Bill Dally with the Eckert-Mauchly Award, which is considered the world's most prestigious prize for computer architecture. In awarding the prize, the Association for Computing Machinery (ACM) and the IEEE Computer Society (IEEE) called Dally a "visionary" for advancing the state of computing using parallel processors. "This wonderful recognition reflects how Bill's pioneering work in parallel processing is on its way to revolutionizing computing," said Jen-Hsun Huang, NVIDIA CEO and president. "We are delighted to have the benefits of his singular talent as we endeavor through our GPUs to bring parallel computing to the world." Previous winners of the Eckert-Mauchly Award include Seymour Cray, a key figure in the birth of supercomputing; David Patterson, a computer pioneer teaching at University of California, Berkeley; and Stanford president John Hennessy. In recognizing Dally for his achievements, the ACM and IEEE wrote: "Early in his career, Dally recognized the limitations of serial or sequential processing to cope with the increasing need for processing power in order to solve complex computational problems. He perceived the ability of parallel processing, in which many processing cores, each optimized for efficiency, can work together to solve a problem." Parallel processing has expanded in recent years from its traditional realm of environmental science, biotechnology and genetics to applications in such areas as data mining, oil exploration, Web search engines, medical imaging and diagnosis, pharmaceutical design, and financial and economic modeling. -

Full Conference Guide

FULL CONFERENCE GUIDE PRESENTED BY SPONSORED BY Dell Precision rack – a Perfect fit for Your Data center Developed in close collaboration with hardware and software partners, the Dell Precision rack workstation delivers high performance in a flexible 2U chassis — an ideal solution for centralizing critical data and workstation assets in secure data center locations. To help maximize flexibility, the Dell Precision rack workstation is enhanced by a choice of industry-standard slot combinations. Designed for performance, reliability, and scalability in environments where space is at a premium, the Dell Precision rack workstation is ideal for high-performance clusters and rendering farms. For remote, crowded, heat-challenged, and acoustically sensitive environments such as financial trading or factory floors, Dell’s optional remote access solution is the ideal solution for workstation users who need to interact with their system when elsewhere on the network. “With the emerging gPu oPPortunities, Dell Precision shoulD be the Platform of choice for anYone looking to accelerate their cgi WorkfloW.” — keith jeffery, head of Business development, taylor James Dell Precision workstations help meet your application demands for: reliabilitY: • Dell Precision, the world’s most preferred Performance anD scalabilitY: workstation family • Intel®-based processing power options • Compatibility for 90 applications including Intel® Core™2 Extreme and Intel® based on certifications by 35 leading Xeon® processors on select systems Independent Software Vendors -

Nvidia Corporation Nvidia Nvidia Corporation 2015 Annual Review Notice of Annual Meeting Proxy Statement and Form 10-K

NOTICE OF ANNUAL MEETING PROXY AND STATEMENT FORM10-K 2015 ANNUAL REVIEW2015 NVIDIA CORPORATION NVIDIA CORPORATION | 2015 ANNUAL REVIEW OVERVIEW GIANT LEAPS IN VISUAL COMPUTING NVIDIA is the world leader in visual computing. The GPU, our invention, serves as the visual cortex of modern computers and is at the heart of our products and services. Our work opens up new universes to explore, enables amazing creativity and discovery, and powers what were once science fiction inventions like self-learning machines and self-driving cars. We serve markets where visual computing is essential and deeply valued — gaming, automotive, enterprise, and high performance computing & cloud. NVIDIA’s VXGI technology enables dynamic global illumination in games. Our “Apollo 11” demo relied on VXGI to debunk long-held moon landing conspiracy theories. GIANT LEAPS IN VISUAL COMPUTING 2015 ANNUAL REVIEW 1 GAMING MADE TO GAME At $100 billion, computer gaming is the world’s largest entertainment industry, exceeding Hollywood and professional sports. And it continues to grow. > GeForce,, our GPU brand for PC gamers, is the world’s largest gaming platform, with 200 million users. > Our share of the market for discrete GPUs hit a record 72 percent. > We formally launched GRID — dubbed “a Netflix for games” — as the world’s first game-streaming service that provides AAA titles at a resolution of up to 1080p, at 60 frames per second. > We announced our first living-room entertainment device, SHIELD , the world’s most advanced smart TV console. “This product marks a major step for NVIDIA, one it has been building toward for decades — to be a full service consumer electronics company.”Jon Peddie “This product marks a major step for NVIDIA, one it has been building toward for decades — to be a full service consumer electronics company.”Jon Peddie 2015 ANNUAL REVIEW 3 AUTOMOTIVE POWERING TOMORROW’S CARS TODAY Automakers like Audi, BMW and Tesla rely on NVIDIA technology. -

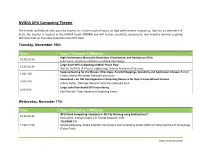

NVIDIA GPU Computing Theater

NVIDIA GPU Computing Theater The theater will feature talks given by experts on a wide range of topics on high performance computing. Open to all attendees of SC10, the theater is located in the NVIDIA booth (#1343) and will feature scientists, developers, and industry luminaries giving technical talks on the latest research in the HPC field. Tuesday, November 16th Time Topic / Presenter / Affiliation High Performance Molecular Simulation, Visualization, and Analysis on GPUs 10:00-10:30 John Stone, University of Illinois at Urbana-Champaign Large-Scale GPU Computing of Multi-Phase Flow 12:00-12:30 Wei Ge, Institute of Process Engineering, Chinese Academy of Sciences Supercomputing for the Masses: Killer-Apps, Parallel Mappings, Scalability and Application Lifespan Robert 1:00-1:30 Farber, Pacific Northwest National Laboratory Keeneland – An NSF Heterogeneous Computing Resource for Open Computational Science 2:00-2:30 Jeffrey Vetter, Oakridge National Laboratory/Georgia Tech Large Scale Distributed GPU Isosurfacing 4:00-4:30 Paul Navratil, Texas Advanced Computing Center Wednesday, November 17th Time Topic / Presenter / Affiliation GPU Cloud Computing: Examples in 3D City Planning using RealityServer® 10:00-10:30 Paul Arden, mental images and Tamrat Belayneh, ESRI TSUBAME 2.0 12:00-12:30 Satoshi Matsuoka, Global Scientific Information and Computing Center (GSIC) of Tokyo Institute of Technology (Tokyo Tech) www.nvidia.com/SC10 PGI CUDA Compiler for x86 1:00-1:30 Michael Wolfe, The Portland Group Large-Scale CFD Applications and a Full -

PDF (Issue 3, Winter 2003)

engengeniousenious a publication for alumni and friends of the division of engineering and applied science of the California Institute of Technology winter 2003 The Division of Engineering and Applied Science consists of thirteen Options working in five broad areas: Mechanics and Aerospace, Information and Communications, Materials and Devices, Environment and Civil, and Biology and Medicine. For more about E&AS visit http://www.eas.caltech.edu. DIVISION CHAIR OPTIONS Richard M. Murray (BS ’85) aeronautics (GALCIT) www.galcit.caltech.edu DIVISION STEERING COMMITTEE applied & computational mathematics www.acm.caltech.edu Jehoshua (Shuki) Bruck Gordon and Betty Moore Professor of applied mechanics Computation and Neural Systems and www.eas.caltech.edu/options/appmech.html Electrical Engineering applied physics Janet G. Hering www.aph.caltech.edu Professor of Environmental Science and Engineering bioengineering www.be.caltech.edu Hans G. Hornung C. L. “Kelly” Johnson Professor of Aeronautics civil engineering www.eas.caltech.edu/options/civileng.html Thomas Yizhao Hou Professor of Applied and Computational computation & neural systems Mathematics www.cns.caltech.edu Melany L. Hunt computer science Professor of Mechanical Engineering www.cs.caltech.edu Peter Schröder control & dynamical systems Professor of Computer Science and Applied www.cds.caltech.edu and Computational Mathematics electrical engineering Kerry J. Vahala (BS ’80, MS ’81, PhD ’85) www.ee.caltech.edu Ted and Ginger Jenkins Professor of Information Science and Technology and environmental science and engineering Professor of Applied Physics www.ese.caltech.edu P.P.Vaidyanathan materials science Professor of Electrical Engineering www.matsci.caltech.edu mechanical engineering ENGenious www.me.caltech.edu editors Marionne L.