Augmented Reality Markerless Multi-Image Outdoor Tracking System for the Historical Buildings on Parliament Hill

Recommended publications

Parliamentary Precinct Lighting Protocol (2019)

Federal Land Use, Design, and Transaction Public Approval Submission No. 2020-P45
To: Board of Directors
For: DECISION
Date: 2020-04-23
Subject/Title: Parliamentary Precinct Exterior Lighting Master Plan
Purpose of the Submission: To obtain approval of the Board of Directors for the Parliamentary Precinct Exterior Lighting Master Plan.
Recommendation:
• THAT the Parliamentary Precinct Exterior Lighting Master Plan (ELMP) be approved subject to the following condition:
o That the subsequent implementation projects for architectural and landscape lighting planned for in the ELMP be submitted to the NCC for review and approval; and
• THAT the preparation and signature of the federal approval document for the Parliamentary Precinct Exterior Lighting Master Plan be delegated to the Vice President, Capital Planning Branch.
Submitted by: Pierre Vaillancourt, A/Vice President, Capital Planning Branch
1. Authority: National Capital Act, sections 11 and 12
2. Project Description
• The Parliamentary Precinct is the home of Canada’s parliamentary system and the physical expression of our commitment to democracy and the principle of freedom. The picturesque landscape and architectural style of the Precinct are enduring visual symbols of our country, while the openness, accessibility and security of the public spaces are representative of the values treasured and celebrated by all Canadians. The Precinct provides the setting for the work of Parliamentarians and staff in a secure and efficient manner, but it is also the preeminent gathering place for public expression and celebration, as well as a place of quiet reflection.

The Evolution of Modelling Practices on Canada's

The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume XLII-2/W11, 2019. GEORES 2019 – 2nd International Conference of Geomatics and Restoration, 8–10 May 2019, Milan, Italy
THE EVOLUTION OF MODELLING PRACTICES ON CANADA’S PARLIAMENT HILL: AN ANALYSIS OF THREE SIGNIFICANT HERITAGE BUILDING INFORMATION MODELS (HBIM)
L. Chow 1, K. Graham 1, T. Grunt 1, M. Gallant 1, J. Rafeiro 1, S. Fai 1 *
1 Carleton Immersive Media Studio (CIMS), Carleton University, Ottawa, Canada - (lchow, kgraham, tgrunt, mgallant, jraferio, sfai)@cims.carleton.ca
KEY WORDS: Heritage Building Information Model, Level of Detail, Model Tolerance, Level of Accuracy, Data management
ABSTRACT: In this paper, we explore the evolution of modelling practices used to develop three significant Heritage Building Information Models (HBIM) on Canada’s Parliament Hill National Historic Site: West Block, Centre Block, and the Library of Parliament. The unique scope, objective, and timeline for each model required an in-depth analysis to select the appropriate classification for Level of Detail (LOD) and Level of Accuracy (LOA). With each project, modelling practices and workflows were refined, culminating in one of our most complex and challenging projects, the Library of Parliament BIM. The purpose of this paper is to share ideas and lessons learned about the intricate challenges that emerge when using LOD and LOA classifications, including trade-offs between model performance, tolerances, and anticipated BIM use. In addition, we will evaluate how these decisions affected managing the digitization, data processing, data synthesis, and visualisation of the models.
1. OVERVIEW
In 2012, Public Services and Procurement Canada (PSPC) and the Carleton Immersive Media Studio (CIMS) began a research
monument. As both the political and symbolic locus of Canada’s parliamentary democracy, the site is in every sense a stage where Canada’s nationhood is played out for national and international audiences.

Building the Future Provides the Minister of Public Works and Government Services Canada with House of Commons Requirements

Building the Future provides the Minister of Public Works and Government Services Canada with House of Commons requirements for planning and implementing the long-term renovation and development of the Parliamentary Precinct.
Building the Future: House of Commons Requirements for the Parliamentary Precinct, October 22, 1999
Table of Contents: Preface; Foreword; Executive Summary; The Foundation (A. Historical Considerations; B. Current and Future Considerations; C. Guiding Principles); Requirements for Members’ Lines of Business (Chamber; Committee; Caucus; Constituency); Requirements for Administration and Precinct-wide Support Services (Administration and Support Services; Information Technology; Security; Circulation; The Press Gallery; The Visiting Public); Requirements for Implementation (A. A Management Model; B. Use of Buildings; C. Renovation Priorities); Moving Ahead: Leaving a Legacy; Appendix A: Past Planning Reports; Appendix B: Bibliography
Preface
I am pleased to submit Building the Future: House of Commons Requirements for the Parliamentary Precinct to the Board of Internal Economy. The report sets out the broad objectives and specific physical requirements of the House of Commons for inclusion in the long-term renovation and development plan being prepared by Public Works and Government Services Canada. In preparing this report, the staff has carefully examined the history of the Precinct to ensure that our focus on the future benefits from the expertise and experiences of the past. Moreover, this work strongly reflects the advice of today’s Members of Parliament in the context of more recent reports, reflections and discussions since the Abbott Commission’s Report in 1976.

Ottawa, Ontario West Block Parliament Hill HERITAGE CHARACTER

Ottawa, Ontario. West Block, Parliament Hill. HERITAGE CHARACTER STATEMENT
The West Block was built in 1859-65 to the designs of Thomas Stent and Augustus Laver, architects. New wings designed by the Department of Public Works were added in 1874-78 and 1906-09, and major interior renovations designed by Mathers & Haldenby of Toronto were carried out in 1961-65. Public Works and Government Services Canada is the custodian department. See FHBRO Building Report 86-52.
Reason for Designation
The West Block was designated Classified because of its exceptional importance in terms of historical associations, architectural quality and environmental impact. Designed to accommodate the civil service, the West Block is directly associated with the substantial role played by the administrative arm of the federal government during the country's formative years. Together with the 1859-65 wings of the East Block, and the Library of Parliament, the West Block ranks with the best mid-19th century Gothic Revival buildings in the world. The product of three separate building campaigns, it presents an essay in the evolution of the Gothic Revival style from the mid-19th century to the early 20th century. In addition to being a national landmark in its own right, the West Block plays a critical role in establishing the overall character of Parliament Hill and strongly influences the character of the west end of Wellington Street.
Character Defining Elements
The heritage character of the West Block resides in its picturesque massing, footprint, structural ornament, finish materials, and in surviving portions of the 19th and early 20th century interior plans, features and finishes.

Building Stones of Canada's Federal Parliament Buildings

Volume 28 Number 1
Building Stones of Canada's Federal Parliament Buildings
D.E. Lawrence, Geological Survey of Canada, 601 Booth Street, Ottawa, Ontario K1A 0E8, [email protected]
also restored the role of the geologist, with the requirement to understand the complex reactions of the building stone to the environment and its neighbouring masonry elements, and in the quest to find suitable replacement stone.
Résumé: The building stones used for the Parliament Buildings in Ottawa come from numerous quarries in Canada, the United States, and several European countries. They were cut, laid, and carved following precise procedures, in keeping with the best practice of the period. The reconstruction of the Centre Block, which is the subject of this article, was a drawn-out undertaking given the priorities during the Great War of 1914-1918. The facing stones of the Parliament Buildings have endured the ravages of climate, fire, earthquakes, and
they represent. Their design and construction has been the work of formative architects and builders. Great pains have been taken to ensure that these are significant structures, of unique design and beauty, using quality materials and built with exacting craftsmanship, worthy of the importance of the business that transpires within their walls. Canada's Parliament Buildings in Ottawa are no exception.
This is the first of a series of articles about the building stones of Canada's federal and provincial Parliament Buildings. Historians, political scientists, architects, and engineers have written at length about the buildings and their varied histories. Geologists, for the most part, have been silent. It is expected that this series will be written by a number of geologists, and may cover all provinces and territories to document the stories of the stones themselves.

Parliament Hill | Ottawa, Ontario

CASE STUDY: Parliament Hill | Ottawa, Ontario
Balancing Technology with Historical Integrity
The renovation of Parliament Hill’s West Block was an $863 million project that finally wrapped up in fall 2018, uniting the charm of a Victorian Gothic structure that was completed in 1865 with the technological advancements of 21st-century construction. During the renovations, architects looked at every conceivable angle to modernize the facility while also maintaining the building’s historical integrity.
“The West Block was last renovated over 50 years ago,” said Georges Drolet, a partner in EVOQ and an architectural historian. The firm formed a joint venture partnership in 1995 with Architecture 49 to plan and design the West Block Rehabilitation Project. “In that time span, all the expectations and standards of operating and working in a parliamentary office building changed, from security concerns to communications networks, energy efficiency targets, hazardous materials management, universal accessibility standards, broadcasting and public engagement programs and so on.”
One of the most fascinating and unique projects in the overhaul of West Block was a complete roof overhaul. A striking, self-supporting, curved glass roof stands overtop the central courtyard, which will serve as the temporary home for the House of Commons. The project by Seele included 2,485 square meters of triple-glazed glass, 938 tons of steelwork for roof and tree columns, 2,554 square meters of laylight glazing under the roof, and 1,813 square feet of open-grid flooring for a service catwalk at the roof level.
Perhaps the most essential task for architects and engineers in the renovation was overhauling the
Photos: ManikMati Photography; Heather + Little

West Block Restoration

The Canadian Parliament Buildings - Ottawa, Canada. West Block Restoration. ©Cintec Worldwide
The West Block was constructed between 1860 and 1865. In 1897 the building was damaged by fire. The West Block is one of the three buildings on Parliament Hill in Ottawa, Ontario that form the Parliamentary Precinct. After renovation, the West Block now houses offices for Parliamentarians, a branch of the Library of Parliament, committee rooms, and some preserved pre-Confederation spaces, which are used for some state occasions. All Canadian laws now originate here. The world has changed radically since the Parliament Buildings were completed. The stone buildings, although repaired over the years, had begun to deteriorate. Cintec first started work on the West Block in 1994, when Cintec reinforcement anchors were installed in the southwest tower by Public Works, based at Plouffe Park, Ottawa. Since then, the West Block has been fully restored and modernized. This is a write-up of our involvement in the restoration of the West Block.
An $863 million project to completely renovate the West Block began in 2011, and the renovated building opened on 28 January 2019. Major repair and restoration work has been carried out to ensure that these historic buildings continue to serve Canadians for many years to come. The restoration of the West Block of Parliament is one of the largest rehabilitation projects in North America. The 19th-century building was completely modernized with state-of-the-art facilities, while the heritage and character-defining elements were preserved with the utmost respect and sensitivity.

Archaeological Investigation of Preliminary Geotechnical Boreholes for the Centre Block Rehabilitation Project

Archaeological Investigation of Preliminary Geotechnical Boreholes for the Centre Block Rehabilitation Project. Parliament Hill, Ottawa, Ontario
Prepared for: Mr. Daniel Haché, P.Eng., Parliamentary Precinct Branch (PPB), Public Works and Government Services Canada, 107 Sparks Street, Birks Building, 3rd Floor, Ottawa, ON K1A 0S5
Prepared by: Stantec Consulting Ltd., 1331 Clyde Ave, Suite 400, Ottawa, ON, K2C 3G4
122411046
April 7, 2015
Table of Contents
EXECUTIVE SUMMARY
1.0 PROJECT CONTEXT
1.1 DEVELOPMENT CONTEXT
1.2 HISTORICAL CONTEXT
1.2.1 Pre-Contact Aboriginal Resources
1.2.2 Post-Contact Aboriginal Resources
1.2.3 Historic Euro-Canadian Resources
1.2.4 Previously Identified Archaeology Sites and Surveys
2.0 MONITORING GEOTECHNICAL BOREHOLES

Parliamentary Treasures Trésors

PARLIAMENTARY TREASURES | TRÉSORS PARLEMENTAIRES
A Glimpse Inside the Archives of the Senate of Canada | Regard sur les Archives du Sénat du Canada
Cataloguing in Publication: Y9-19/2014. ISBN: 978-1-100-54780-0. © Senate of Canada 2014. All rights reserved. All copyrights in the illustrations are held by the Senate of Canada unless otherwise indicated. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of the Standing Committee on Internal Economy, Budgets and Administration, Senate of Canada, Ottawa, Ontario, Canada, K1A 0A4.
TABLE OF CONTENTS
Letters of Welcome: From the Speaker of the Senate; From the Clerk of the Senate and Clerk of the Parliaments
Introduction
History of Canada: The Birth of Confederation; The Birth of New Regions; The First Years; The World Wars and the Great Depression; The Modern World
Transportation: Transport by Land: The Era of Railways; Transport by Water: From Canoes to Ships; Transport by Air: The Age of Aircraft
Canadian Society: Official Languages; Acadians; Women; Aboriginal Peoples; Human Rights; Marriage and Divorce; Multiculturalism; The Arts

SACO Participants' Manual

SACO Participants' Manual
Adam L. Schiff, University of Washington Libraries, for the Program for Cooperative Cataloging
Second Edition, revised by the PCC Standing Committee on Training Task Group to Update the SACO Participants’ Manual (with minor revisions, February 28, 2019)
Program for Cooperative Cataloging, Washington, D.C., 2007
PCC Standing Committee on Training Task Group to Update the SACO Participants’ Manual: Adam L. Schiff (Chair), University of Washington; Karen Jensen, McGill University; John N. Mitchell, Library of Congress; Kay Teel, Stanford University; Alex Thurman, Columbia University; Melanie Wacker, Columbia University
Table of Contents
Acknowledgments for the Second Edition
Acknowledgments for the First Edition
Introduction
Why Participate in SACO?
SACO Documentation
Practicalities
Subject Headings
When to Make a SACO Proposal
Authority Research for Subjects
Subject Authority Proposal Form
Guidelines for Formulating LC Subject Heading Proposals
Examples of Decisions Involved in Making New Subject Proposals
Examples A-D: Headings Supplying More Appropriate Level of Specificity: Log-periodic antennas; Systems librarians; Cataloging of cartographic materials; Chinese mitten crab
Examples E-J: Headings for Genres and Forms: Medical drama; Romantic comedy films; Reggaetón; Khim and Khim music; Banjo and double bass music; Cootie catchers
Examples K-L: Headings Based on an Existing Pattern: This (The English word); Historical fiction, Chilean
Examples M-S: Headings for Geographic Places: Madhupur Jungle (Bangladesh); Aleknagik, Lake (Alaska); Auyuittuq National Park (Nunavut); Fort Worden State Park (Port Townsend, Wash.); Chiles Volcano (Colombia and Ecuador); Lusatian Mountains; Spring Lake (Hays County, Tex.); Bluewater Lake (Minn.); Cavanaugh, Lake (Wash.

Annual Report 2016–2017 Library of Parliament 2016–2017 Annual Report

LIBRARY OF PARLIAMENT | ANNUAL REPORT 2016–2017
CONTENTS: Message from the Parliamentary Librarian; Strategic Priorities; Services; Report of the Parliamentary Budget Officer; About the Library; Financial Information
Message from the PARLIAMENTARY LIBRARIAN
In 2016–2017, the Library shifted from welcoming Canada’s 42nd Parliament to actively responding to the requirements of parliamentarians. With 225 new parliamentarians elected or appointed, the shift came with a 25% increase in demand for research, information and reference services. This required us to extend ourselves as an organization and stretch our resources so we could continue to provide the high level of service parliamentarians

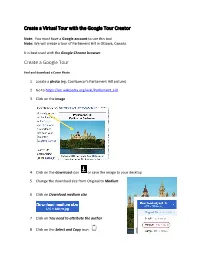

Create a Virtual Tour with the Google Tour Creator

Create a Virtual Tour with the Google Tour Creator
Note: You must have a Google account to use this tool. It is best used with the Google Chrome browser.
Note: We will create a tour of Parliament Hill in Ottawa, Canada.
Create a Google Tour
Find and download a Cover Photo
1. Locate a photo (e.g. Coollcaesar’s Parliament Hill picture)
2. Go to https://en.wikipedia.org/wiki/Parliament_Hill
3. Click on the image
4. Click on the download icon or save the image to your desktop
5. Change the download size from Original to Medium
6. Click on Download medium size
7. Click on You need to attribute the author
8. Click on the Select and Copy icon
Create the Tour
1. Sign on to your Google Account
2. Go to the site https://vr.google.com/tourcreator
3. Click on the Get Started button
4. Click on the New Tour button
5. The editing screen appears
6. In the Title text box type Parliament Hill
7. Click in the Description text box
8. Paste the following text into this text box:
Parliament Hill is an area of land on the Ottawa River in Ottawa, Canada. Its Gothic Revival buildings are the home of the Parliament of Canada. Parliament Hill attracts approximately 3 million visitors each year. Originally the site of a military base in the 18th century, development of the area into a governmental zone began in 1859, after Queen Victoria chose Ottawa as the capital of the Province of Canada. Following a number of extensions to the parliament and departmental buildings and a fire in 1916 that destroyed the Centre Block, Parliament Hill took on its present form with the completion of the Peace Tower in 1927.