Virtualization | Networks | Workstations

Recommended publications

-

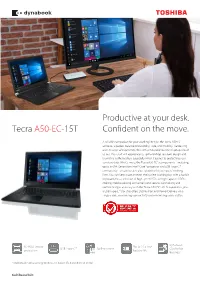

Toshiba Tecra A50-EC-15T

Tecra A50-EC-15T Productive at your desk. Confident on the move. A reliable companion for your working lifestyle, the Tecra A50-EC achieves a perfect balance of durability, style, and mobility. Harnessing over 30 years of experience, this slim yet durable business laptop is built to last. Your staff will appreciate its spill and drop-resistant design and biometric authentication, especially when it comes to protecting your sensitive data. What's more, the Tecra A50-EC's components - including up to an 8th Generation Intel® Core™ processor and USB Type-C™ connectivity - ensure best-in-class reliability for your users’ working lives. You can keep pace on even the busiest working day, with a backlit keyboard plus a selection of high speed SSDs, or high capacity HDDs, making mobile working convenient and secure. Connecting and conferencing is also easy with the Tecra A50-EC’s Wi-Fi capabilities, plus a USB Type-C™ slot that offers Display Port and Power Delivery via a single cable, maximising connectivity and minimising cable clutter.
dynabook 3D HDD sensor Up to 10.5 hrs* USB Type-C™ Spill-resistant Quality for protection battery life Business * MobileMark™ 2014 running Windows 10, battery life dependent on model toshiba.eu/b2b Tecra A50-EC-15T Technical specifications Processor 8th generation Intel® Core™ i5-8250U processor (1.60/3.40 GHz, 3rd level cache: 6MB) Operating System / Platform Windows 10 Pro 64-bit Design Colour Graphite Black System memory 8,192 MB, maximum expandability: 32,768 MB, technology: DDR4 RAM (2400MHz) Solid State Drive 256GB M.2 SATA Display 39.6cm (15.6”) Full HD TFT non-reflective High Brightness eDP™ display with LED sidelighting and 16 : 9 aspect ratio, internal resolution: 1,920 x 1,080 Graphics adapter Intel® UHD Graphics 620 Interfaces 1 x RJ-45, 1 x HDMI-out supporting 1080p signal format, 1 x SD™ card slot (SD™, SDHC™, SDXC™ supporting UHS-I, MMC), 1 x external Headphone (stereo) / Microphone combo socket, 1 x RGB, 1 x USB Type-C™ with Display Port and Power Delivery capabilities, supporting 1 x external monitor. -

Toshiba Notebooks

Toshiba Notebooks June 28, 2005 SATELLITE QOSMIO SATELLITE PRO TECRA PORTÉGÉ LIBRETTO • Stylish, feature-packed value • The art of smart entertainment • The perfect companions for SMBs • First-class scalability, power and • Ultimate mobility: Redefining ultra- • The return of the mini-notebook on the move connectivity for corporate portable wireless computing • Offering outstanding quality • Born from the convergence of the AV computing • The innovatively designed libretto combined with high performance and and PC worlds, Qosmio allows you to • From the entry-level Satellite Pro, • The Portégé series offers the ultimate U100 heralds powerful, reliable attractive prices, these notebooks are create your own personal universe which offers great-value power, • The Tecra range brings the benefits in portability, from the ultra-thin portability in celebration of 20 years of ideal when impressive design, mobility and performance to the of seamless wireless connectivity and Portégé R200 to the impressive, leadership in mobile computing multimedia performance, mobility and • Designed to be the best mobile hub stylish, feature-packed widescreen exceptional mobile performance to stylish Portégé M300 and the reliability are needed, anywhere, for smart entertainment, Qosmio model, Toshiba's Satellite Pro range is business computing, with state-of-the- innovative anytime integrates advanced technologies to sure to provide an all-in-one notebook art features, comprehensive expansion Tablet PC Portégé M200 make your life simpler and more guaranteed to suit your business and complete mobility entertaining needs Product specification and prices are subject to change without prior notice. Errors and omissions excepted. For further information on Toshiba Europe GmbH Toshiba options & services visit Tel. -

University of Education, Winneba

University of Education, Winneba http://ir.uew.edu.gh UNIVERSITY OF EDUCATION, WINNEBA THE INFLUENCE OF VIRTUAL PHYSICS LABORATORY ON STUDENTS’ PERFORMANCE IN MOTION: A CASE STUDY AT BISHOP HERMAN COLLEGE, KPANDO BY ALEXANDER HERO YAWO ASARE (7110130016) A dissertation submitted to the Department of Science Education, Faculty of Science and the School of Graduate Studies, University of Education, Winneba, in partial fulfillment of the requirements for the award of a Master of Education (Science) September, 2014 ACKNOWLEDGEMENT I wish to express my heartfelt appreciation to my supervisor, Dr. E. K. Oppong, a Senior Lecturer in the Department of Science Education, University of Education, Winneba, for his technical guidance and encouragement throughout the supervisory work on the dissertation. I also wish to render my sincerest gratitude to Mr. Ayikue Ambrose and Ras Kumabia who willingly opted to help me. I am supremely grateful to you and your family. Again, I extend special thanks to all other lecturers in UEW especially Dr. Young, Dr. E. Ngman-Wara and Dr. Taale. Finally, I would like to say thank you to Mrs. Mary Aguti Asare, Boati Evelyn and all my siblings. DECLARATION Candidate’s Declaration I hereby declare that this dissertation, with the exception of quotations and references contained in published works which have all been identified and duly acknowledged, is entirely my own original work, and it has not been presented for another degree in this university or elsewhere. Signature………………………………… Date:……………………………… Alexander Hero Yawo Asare Supervisors’ Declaration I hereby declare that the preparation and presentation of this dissertation was supervised in accordance with the guidelines on supervision of dissertation as laid down by the University of Education, Winneba. -

SUPPLY CHAIN STRATEGY the Logistics of Supply Chain Management

SUPPLY CHAIN STRATEGY The Logistics of Supply Chain Management Edward Frazelle McGraw-Hill New York Chicago San Francisco Lisbon London Madrid Mexico City Milan New Delhi San Juan Seoul Singapore Sydney Toronto Copyright © 2002 by The McGraw-Hill Companies, Inc. All rights reserved. Manufactured in the United States of America. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the publisher. 0-07-141817-2 The material in this eBook also appears in the print version of this title: 0-07-137599-6. All trademarks are trademarks of their respective owners. Rather than put a trademark symbol after every occurrence of a trademarked name, we use names in an editorial fashion only, and to the benefit of the trademark owner, with no intention of infringement of the trademark. Where such designations appear in this book, they have been printed with initial caps. McGraw-Hill eBooks are available at special quantity discounts to use as premiums and sales promotions, or for use in corporate training programs. For more information, please contact George Hoare, Special Sales, at [email protected] or (212) 904-4069. TERMS OF USE This is a copyrighted work and The McGraw-Hill Companies, Inc. (“McGraw-Hill”) and its licensors reserve all rights in and to the work. Use of this work is subject to these terms. Except as permitted under the Copyright Act of 1976 and the right to store and retrieve one copy of the work, you may not decompile, disassemble, reverse engineer, reproduce, modify, create derivative works based upon, transmit, distribute, disseminate, sell, publish or sublicense the work or any part of it without McGraw-Hill’s prior consent. -

Tecra R850 Detailed Product Specification1

This product specification is variable and subject to change prior to product launch. Tecra R850 Detailed Product Specification1 Model Name: R850-S8512 Part Number: PT524U-02G022 UPC: 883974920020 C1 2 o 4 USB ports (1 USB v3.0 port + 3 USB v2.0 ports (1 11 Operating System eSATA/USB combo with USB Sleep and Charge )) Genuine Windows® 7 Professional 64-bit , SP1 ® o RJ-45 LAN port Genuine Windows 7 Professional 32-bit , SP1 o Docking connector Processor3 and Graphics4 Security Intel® Core™ i3-2330M Processor o Fingerprint Reader o 2.20 GHz, 3MB Cache o Slot for security lock Mobile Intel® HM65 Express Chipset Physical Description ® Mobile Intel HD Graphics with 64MB-1696MB(64-bit) dynamically Graphite Black Metallic with Line Pattern allocated shared graphic memory Dimensions (W x D x H Front/H Rear): 14.9” × 9.9” × 0.82”/1.19” Memory5 Weight: Starting at 5.29 lbs. depending upon configuration 12 Configured with 4GB DDR3 1333MHz (max 8GB) Power 2 memory slots. One slot occupied. 65W (19V 3.42A) 100-240V/50-60Hz AC Adapter. Storage Drive6 Dimensions (W x H x D): 4.25” x 1.81” x 1.16” 320GB (7200 RPM) Serial ATA hard disk drive Weight: starting at 0.49 lbs. TOSHIBA Hard Drive Impact Sensor (3D sensor) Battery13 Fixed Optical Disk Drive7 6 cell/66Wh Lithium Ion battery pack DVD SuperMulti drive supporting 11 formats Battery Life Rating (measured by MobileMark™ Productivity 2007) o Maximum speed and compatibility: CD-ROM (24x), CD-R (24x), o Included 6 cell battery: up to 8 hours, 40 minutes CD-RW (10x), DVD-ROM (8x), DVD-R -

Oei Usb Smartmedia Usb Device Driver 8/13/2015

Download Instructions Oei Usb Smartmedia Usb Device Driver 8/13/2015 For Direct driver download: http://www.semantic.gs/oei_usb_smartmedia_usb_device_driver_download#secure_download Important Notice: Oei Usb Smartmedia Usb Device often causes problems with other unrelated drivers, practically corrupting them and making the PC and internet connection slower. When updating Oei Usb Smartmedia Usb Device it is best to check these drivers and have them also updated. Examples for Oei Usb Smartmedia Usb Device corrupting other drivers are abundant. Here is a typical scenario: Most Common Driver Constellation Found: Scan performed on 8/12/2015, Computer: Matsonic MS8158 Outdated or Corrupted drivers:4/18 Updated Device/Driver Status Status Description By Scanner Motherboards Intel Canal DMA 3 de la familia de procesadores Intel(R) Xeon(R) Up To Date and Functioning E5/Core i7 - 3C23 Mice And Touchpads Microsoft HID mouse Up To Date and Functioning Synaptics Souris compatible PS/2 Up To Date and Functioning Usb Devices Hewlett-Packard HP Deskjet F4100 (DOT4USB) Up To Date and Functioning Microsoft Intel(r) 82801DB/DBM USB universeller Hostcontroller - 24C2 Up To Date and Functioning Tandberg USB Composite Device Up To Date and Functioning Sound Cards And Media Devices IDT High Definition Audio Device Up To Date and Functioning Network Cards ONDA ONDA Network Device Up To Date and Functioning Keyboards Corrupted By Oei Usb Smartmedia Usb Microsoft HID Keyboard Device Hard Disk Controller Intel(R) 82801DB Ultra ATA-Speichercontroller - 24CB Up To -

Tecra® A50-C Series User's Guide

Tecra® A50-C Series User’s Guide (Windows 10) If you need assistance: Technical support is available online at Toshiba’s Web site at support.toshiba.com. At this Web site, you will find answers for many commonly asked technical questions plus many downloadable software drivers, BIOS updates, and other downloads. For more information, see “If Something Goes Wrong” on page 123 in this guide. GMAD00429010 08/15 2 California Prop 65 Warning This product contains chemicals, including lead, known to the State of California to cause cancer and birth defects or other reproductive harm. Wash hands after handling. For the state of California only. Model: Tecra A50-C Series Recordable and/or ReWritable Drive(s) and Associated Software Warranty The computer system you purchased may include Recordable and/ or ReWritable optical disc drive(s) and associated software, among the most advanced data storage technologies available. As with any new technology, you must read and follow all set-up and usage instructions in the applicable user guides and/or manuals enclosed or provided electronically. If you fail to do so, this product may not function properly and you may lose data or suffer other damage. TOSHIBA AMERICA INFORMATION SYSTEMS, INC. (“TOSHIBA”), ITS AFFILIATES AND SUPPLIERS DO NOT WARRANT THAT OPERATION OF THE PRODUCT WILL BE UNINTERRUPTED OR ERROR FREE. YOU AGREE THAT TOSHIBA, ITS AFFILIATES AND SUPPLIERS SHALL HAVE NO RESPONSIBILITY FOR DAMAGE TO OR LOSS OF ANY BUSINESS, PROFITS, PROGRAMS, DATA, NETWORK SYSTEMS OR REMOVABLE STORAGE MEDIA ARISING OUT OF OR RESULTING FROM THE USE OF THE PRODUCT, EVEN IF ADVISED OF THE POSSIBILITY THEREOF. -

Toshiba Tecra Z50-C-10M

Toshiba recommends Windows. Replace your desktop to business class Tecra Z50-C-10M The 39.6 cm (15.6") Tecra Z50-C is a high class desktop replacement. Built to the standard you’d expect from Toshiba's Z-series, it empowers you to achieve more. With a full-size, backlit keyboard, you can get things done in comfort at any time of day – in any light conditions. This usability extends to the non-reflective, high brightness, Full HD IPS screen, which shows every detail in vibrant colour – for a full view of all your work, and all your media. What's more, with two-factor authentication, offered by either fingerprint recognition or SmartCard reader, the Tecra Z50-C is easy to access in the right hands, and almost impenetrable in the wrong ones – keeping your business-critical data secure. Then, once you've been authorised, the device gives you everything you need in an instant, thanks to the Intel® Wi-Fi, LAN capability, optional WWAN, and four USB 3.0 ports. Boosted by a long lifespan and backed by our Reliability Guarantee – as well as a host of features that make it easy to integrate – the Tecra Z50-C is an outstanding investment, straight out of the box. Exclusive Windows 10 Full-size Two-factor Toshiba Quality keyboard and Toshiba Pro authentication for Business backlit keyboard BIOS pre-installed Reliability Guarantee - Terms and conditions apply. See toshiba.eu/reliability for more information Tecra Z50-C-10M Toshiba UK Processor 6th generation Intel® Core™ i5-6200U processor with Intel®Turbo Boost Technology -

The Right Laptop at the Right Price

Tecra® C50 Series The right laptop at the right price Series Overview Equip your workforce for unprecedented success with an enterprise-class laptop built and priced for small- or medium-sized businesses like yours: Toshiba’s new 15.6” Tecra® C50 laptop. Tap into the legendary Tecra® tradition of performance for the advanced power, security and durability you need to outclass the competition. An extremely durable device, and packed with essential productivity features, this laptop delivers the right gear at the right price, including the latest Intel® Core™ i3 and i5 processors, an expansive HD screen and keyboard with a numeric keypad for multitasking and working with spreadsheets, plus a great battery life rating to keep business in motion. All this with unmatched Toshiba build-quality, delivering one of the industry’s lowest failure rates. Toshiba Recommends Windows 8. Product Highlights Extended Battery Life: Extend the workday with a battery life rating of over 7 hours. Built-In DVD Drive: Share work, load programs, present your corporate video and do all-important PC backups with a built-in DVD drive. Security Features: Worry a lot less about security, thanks to a Trusted Platform Module (on select models) and security lock slot. Toshiba EasyGuard® Technology: Tap into proven reliability with the Toshiba EasyGuard® Technology, plus comprehensive testing for Drop Tests, Pressure Tests and Highly Accelerated Life Testing (HALT). Tecra® C50 Featured SKUs Specifications* Model C50-B1500 C50-B1503 Part Number PSSG4U-001001 PSSG4U-003001 Operating -

Toshiba Tecra A40-C-1KF

More than just business standard Tecra A40-C-1KF With outstanding connectivity and a range of ergonomic design features, the 35,6 cm (14”) Tecra A40-C can set new standards for your business – wherever your work takes you. From the optimised antenna, to the latest Intel® Wi-Fi, Gigabit LAN and optional LTE, assuring the best connection quality for your work at all times. What’s more, the Tecra A40-C features a full-size and silent keyboard that keeps typing comfortable – making it perfect for writing documents both in the office, and in the coffee shop. With a non-reflective screen and an optional backlit keyboard, you can get things done whether you’re in bright sunlight or complete darkness. When you are in the office, simply plug in to your Hi-Speed Port Replicator III docker*, enabling you to create a full featured desk environment. Whilst on the move, the Tecra A40-C provides an uncompromised range of full-size ports, avoiding the need for any adapters, allowing you to work or present with confidence. dynabook In-house Windows 10 Up to 8H* TPM Quality for developed Pro (battery life) encryption Business BIOS pre-installed *Accessories sold separately. Please check the range of accessories available at www.toshiba.co.uk/accessories/laptops ** Measured with Mobile Mark™ 2014. Mobile Mark is a trademark of Business Applications Performance Corporation. Reliability Guarantee - Terms and conditions apply. See toshiba.co.uk/reliability for more information. uk.dynabook.com Tecra A40-C-1KF Specifications Processor 6th generation Intel® -

Toshiba Tecra 500CS and 500CDT

000-book.bk : 01-find.fm5 Page 0 Wednesday, May 15, 1996 3:50 PM Chapter 1 Make Sure You Have Everything . 1 Select a Place To Work . 2 Find Out Where Everything's Located . 6 Finding Your Way around the System You’ve bought your computer and taken everything out of the box. You may be asking yourself, “Now, what do I do?” Well, this chapter explains how to set up your computer, gives you tips on working comfortably and takes you on a tour of the computer’s features. Make Sure You Have Everything Your computer comes with everything you need to get up and running quickly. However, before you do anything else, it's a good idea to make sure you received everything you were supposed to. This information is listed on the Quick Start Card at the top of the box. If any items are missing or damaged, notify your dealer immediately. For additional help, contact Toshiba as described in “If You Need Further Assistance” on page 357. Select a Place To Work Your computer is portable, designed to be used in a variety of circumstances and locations. However, by giving some thought to your work environment, you can protect the computer and work in comfort. Keep the Computer Comfortable Use a flat surface which is large enough for the computer and any items you need to refer to while you work. -

TOSHIBA TECRA S1 Series Portable Personal Computer User's Manual

TOSHIBA TECRA S1 Series Portable Personal Computer User’s Manual Copyright © 2003 by TOSHIBA Corporation. All rights reserved. Under the copyright laws, this manual cannot be reproduced in any form without the prior written permission of TOSHIBA. No patent liability is assumed, with respect to the use of the information contained herein. TOSHIBA TECRA S1 Series Portable Personal Computer User’s Manual First edition March 2003 Copyright authority for music, movies, computer programs, data bases and other intellectual property covered by copyright laws belongs to the author or to the copyright owner. Copyrighted material can be reproduced only for personal use or use within the home. Any other use beyond that stipulated above (including conversion to digital format, alteration, transfer of copied material and distribution on a network) without the permission of the copyright owner is a violation of copyright or author’s rights and is subject to civil damages or criminal action. Please comply with copyright laws in making any reproduction from this manual. Disclaimer This manual has been validated and reviewed for accuracy. The instructions and descriptions it contains are accurate for the TOSHIBA TECRA S1 Series Portable Personal Computer at the time of this manual’s production. However, succeeding computers and manuals are subject to change without notice. TOSHIBA assumes no liability for damages incurred directly or indirectly from errors, omissions or discrepancies between the computer and the manual. Trademarks IBM is a registered trademark, and IBM PC and PS/2 are trademarks of International Business Machines Corporation. Intel, Intel SpeedStep and Pentium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries/regions.