Stronger Physical and Biological Measurement Strategy for Biomedical and Wellbeing Application by CICT

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Biomedical Engineering Graduate Program Background

Maroun Semaan Faculty of Engineering and Architecture (MSFEA)

Biomedical Engineering Graduate Program

Coordinator: Dawy, Zaher (Electrical & Computer Engineering, MSFEA)

Co-coordinator: Jaffa, Ayad (Biochemistry & Molecular Genetics, FM)

Coordinating Committee Members: Amatoury, Jason (Biomedical Engineering, MSFEA); Daou, Arij (Biomedical Engineering, MSFEA); Darwiche, Nadine (Biochemistry & Molecular Genetics, FM); Khoueiry, Pierre (Biochemistry & Molecular Genetics, FM); Khraiche, Massoud (Biomedical Engineering, MSFEA); Mhanna, Rami (Biomedical Engineering, MSFEA); Oweis, Ghanem (Mechanical Engineering, MSFEA)

Background

The Biomedical Engineering Graduate Program (BMEP) is a joint MSFEA and FM interdisciplinary program that offers two degrees: Master of Science (MS) in Biomedical Engineering and Doctor of Philosophy (PhD) in Biomedical Engineering. The BMEP is housed in the MSFEA and administered by both MSFEA and FM via a joint program coordinating committee (JPCC). The mission of the BMEP is to provide excellent education and promote innovative research enabling students to apply knowledge and approaches from the biomedical and clinical sciences, in conjunction with design and quantitative principles, methods and tools from the engineering disciplines, to address human health related challenges of high relevance to Lebanon, the Middle East and beyond. The program prepares its students to be leaders in their chosen areas of specialization, committed to lifelong learning, critical thinking and intellectual integrity. The curricula of the …

A Cybernetics Update for Competitive Deep Learning System

OPEN ACCESS www.sciforum.net/conference/ecea-2 Conference Proceedings Paper – Entropy

A Cybernetics Update for Competitive Deep Learning System

Rodolfo A. Fiorini, Politecnico di Milano, Department of Electronics, Information and Bioengineering (DEIB), Milano, Italy; Tel.: +039-02-2399-3350; Fax: +039-02-2399-3360. Published: 16 November 2015

Abstract: A number of recent reports in the peer-reviewed literature have discussed the irreproducibility of results in biomedical research. Some of these articles suggest that the inability of independent research laboratories to replicate published results has a negative impact on the development of, and confidence in, the biomedical research enterprise. To obtain more resilient data and higher reproducibility of results, we present an adaptive and learning system reference architecture for a smart learning system interface. For deeper inspiration, we focus on mammalian brain neurophysiology: from a neurophysiological point of view, the neuroscientist LeDoux identified two preferential amygdala pathways through which the brain of the laboratory mouse experiences reality. Our operative proposal is to map this knowledge into a new flexible and multi-scalable system architecture. Our main idea is to use a new input node able to bind known information to unknown information coherently. Unknown "environmental noise" and/or local "signal input" information can then be aggregated with known "system internal control status" information to provide a landscape of attractor points, from which either a fast response or a slower, deeper system response can be computed. In this way, ideal system interaction levels can be matched exactly to practical system modeling interaction styles, with no paradigmatic operational ambiguity and minimal information loss.

An Open Logic Approach to EPM

OPEN ACCESS www.sciforum.net/conference/ecea-1 Conference Proceedings Paper – Entropy

An Open Logic Approach to EPM

Rodolfo A. Fiorini (1,*) and Piero De Giacomo (2). (1) Politecnico di Milano, Department of Electronics, Information and Bioengineering, Milano, Italy; (2) Dept. of Neurological and Psychiatric Sciences, Univ. of Bari, Italy; (*) Tel.: +039-02-2399-3350; Fax: +039-02-2399-3360. Received: 11 September 2014 / Accepted: 21 October 2014 / Published: 3 November 2014

Abstract: EPM is a highly operative and didactically versatile tool, and new application areas are continuously envisaged. In turn, this new awareness has allowed us to broaden our view of neurocognitive system behaviour and to develop information conservation and regeneration systems in a numeric self-reflexive/reflective evolutive reference framework. Unfortunately, a logically closed model cannot cope with ontological uncertainty by itself; it needs a complementary logical aperture operational support extension. To achieve this goal, it is possible to use two coupled irreducible information management subsystems, based on the following ideal coupled irreducible asymptotic dichotomy: "Information Reliable Predictability" and "Information Reliable Unpredictability" subsystems. To behave realistically, the overall system must guarantee both Logical Closure and Logical Aperture, both fed by environmental "noise" (or, more precisely, by what human beings call "noise"). A natural operating point can then emerge as a new Trans-disciplinary Reality Level out of the Interaction of Two Complementary Irreducible Information Management Subsystems within their environment. In this way, it is possible to extend the traditional EPM approach so that it benefits from both the classic EPM intrinsic Self-Reflexive Functional Logical Closure and the new numeric CICT Self-Reflective Functional Logical Aperture.

Initial Steps in Development of Computer Science in Macedonia

Initial Steps in Development of Computer Science in Macedonia and Pioneering Contribution to Human-Robot Interface using Signals Generated by a Human Head

Stevo Bozinovski

Abstract – This plenary keynote paper gives an overview of the pioneering steps in the development of computer science in Macedonia between 1959 and 1988, the period of its first 30 years. As part of this, the paper describes the worldwide pioneering results achieved in Macedonia. Emphasis is on the Human-Robot Interface using signals generated by a human head.

Keywords – pioneering results, Computer Science in Macedonia

I. INTRODUCTION

This paper presents research on the history of the development of Computer Science in Macedonia from 1959 to 1988, its first thirty years. The history can be presented in many ways; in this paper, historical events are measured by pioneering steps.

… were engaged in support of nuclear energy research. People from Macedonia interested in advanced research and study were part of this effort. The most significant work was done by Aleksandar Hrisoho, who, working together with Branko Soucek in Zagreb, wrote a paper about magnetic core memories in 1959 [1]. He received formal recognition for this work, the “Nikola Tesla” award [2]. He also worked on analog-to-digital conversion, on which he wrote a paper [3], received a PhD [4], and was cited [5]. It can thus be said that, by the early 1960s, authors from Macedonia had already earned a PhD and citations for work related to computer science. Their work was in languages other than Macedonian because they worked outside Macedonia.

B. 1961-1967: People from Macedonia visiting programming courses outside Macedonia

Biomedical Engineering Graduate Program

Faculty of Medicine and Medical Center (FM/AUBMC)

Biomedical Engineering Graduate Program

Coordinator: Dawy, Zaher (Electrical & Computer Engineering, SFEA)

Co-coordinator: Jaffa, Ayad (Biochemistry & Molecular Genetics, FM)

Coordinating Committee Members: Daou, Arij (Biomedical Engineering, SFEA); Darwiche, Nadine (Biochemistry & Molecular Genetics, FM); Kadara, Humam (Biochemistry & Molecular Genetics, FM); Khoueiry, Pierre (Biochemistry & Molecular Genetics, FM); Mhanna, Rami (Biomedical Engineering, SFEA); Oweis, Ghanem (Mechanical Engineering, SFEA)

Background

The Biomedical Engineering Graduate Program (BMEP) is a joint SFEA and FM interdisciplinary program that offers two degrees: Master of Science (MS) in Biomedical Engineering and Doctor of Philosophy (PhD) in Biomedical Engineering. The BMEP is housed in the SFEA and administered by both SFEA and FM via a joint program coordinating committee (JPCC). The mission of the BMEP is to provide excellent education and promote innovative research enabling students to apply knowledge and approaches from the biomedical and clinical sciences, in conjunction with design and quantitative principles, methods, and tools from the engineering disciplines, to address human health related challenges of high relevance to Lebanon, the Middle East, and beyond. The program prepares its students to be leaders in their chosen areas of specialization, committed to lifelong learning, critical thinking, and intellectual honesty. The curricula of the MS and PhD degrees are composed of core and elective …

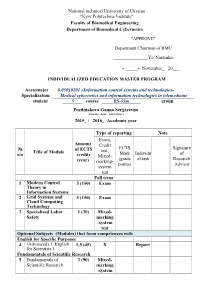

National Technical University of Ukraine "Kyiv Polytechnic Institute" Faculty of Biomedical Engineering Department of Biomedical Cybernetics "APPROVED"

National Technical University of Ukraine "Kyiv Polytechnic Institute", Faculty of Biomedical Engineering, Department of Biomedical Cybernetics

"APPROVED"
Department Chairman of BMC _______________ Ye. Nastenko
« _____ » November 20___

INDIVIDUALIZED EDUCATION MASTER PROGRAM

Area/major: 8.05010101 «Information control systems and technologies»

Specialization: Medical cybernetics and information technologies in telemedicine

Student: 5th year, group BS-51m, Pozdniakova Ganna Sergeyevna (surname, name, patronymic)

Academic year: 2015/2016

| № | Title of Module | Amount of ECTS credits (hours) | Type of reporting (exam, test, mixed-marking-system test) | Individual task | Mark (grade points) | ECTS | Credit (year) | Signature of Research Advisor | Note |
|---|---|---|---|---|---|---|---|---|---|
| | **Fall term** | | | | | | | | |
| 1 | Modern Control Theory in Information Systems | 5 (150) | Exam | | | | | | |
| 2 | Grid Systems and Cloud Computing Technology | 5 (150) | Exam | | | | | | |
| 3 | Specialized Labor Safety | 1 (30) | Mixed-marking-system test | | | | | | |
| | **Optional Subjects (Modules) that form competences with English for Specific Purposes (Advanced)** | | | | | | | | |
| 4 | 1. English for Scientists | 1.5 (45) | Х | Report | | | | | |
| | *1. Fundamentals of Scientific Research* | | | | | | | | |
| 5 | Fundamentals of Scientific Research | 3 (90) | Mixed-marking-system test | | | | | | |
| | **Professional and Practical training** | | | | | | | | |
| | *Biomedical Cybernetics* | | | | | | | | |
| 6 | Fundamentals of Synergetics. Models of Nonlinear Dynamics. | 6.5 (195) | Exam | Settlement and graphic work | | | | | |
| | *Medical informational systems* … | | | | | | | | |
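The hour figures in the module list above are consistent with a ratio of 30 study hours per ECTS credit. That ratio is our inference from the listed values, not something the program states. The Python sketch below, written under that assumption, checks each listed row and totals the six modules; the constant `HOURS_PER_CREDIT` and the helper names are ours, for illustration only.

```python
"""Consistency check for the ECTS credits and hours in the individualized program.

Assumption (inferred from the listed rows, not stated in the program):
1 ECTS credit corresponds to 30 study hours.
"""

HOURS_PER_CREDIT = 30  # assumed conversion factor

# (module title, ECTS credits, hours) as listed in the program
MODULES = [
    ("Modern Control Theory in Information Systems", 5.0, 150),
    ("Grid Systems and Cloud Computing Technology", 5.0, 150),
    ("Specialized Labor Safety", 1.0, 30),
    ("English for Scientists", 1.5, 45),
    ("Fundamentals of Scientific Research", 3.0, 90),
    ("Fundamentals of Synergetics. Models of Nonlinear Dynamics", 6.5, 195),
]


def inconsistent_rows(modules, hours_per_credit=HOURS_PER_CREDIT):
    """Return the titles whose hours differ from credits * hours_per_credit."""
    return [title for title, credits, hours in modules
            if credits * hours_per_credit != hours]


def totals(modules):
    """Return (total credits, total hours) over the given modules."""
    return (sum(credits for _, credits, _ in modules),
            sum(hours for _, _, hours in modules))


if __name__ == "__main__":
    print("Inconsistent rows:", inconsistent_rows(MODULES))
    total_credits, total_hours = totals(MODULES)
    print(f"Total: {total_credits} ECTS credits, {total_hours} hours")
```

With the listed values every row passes the check, and the six modules total 22.0 credits (660 hours). This covers only the rows shown in this excerpt, not the full program.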

ENGLISH PROFICIENCY in CYBERNETICS

MINISTRY OF EDUCATION AND SCIENCE OF UKRAINE
TARAS SHEVCHENKO NATIONAL UNIVERSITY OF KYIV

Maryna Rebenko

ENGLISH PROFICIENCY in CYBERNETICS, B2 – C1

Textbook for Students of Computer Science and Cybernetics

Reviewers: Candidate of Economic Sciences, Docent P. V. Dziuba; Doctor of Economic Sciences, Full Professor R. O. Kostyrko; Doctor of Economic Sciences, Full Professor V. G. Shvets; Candidate of Pedagogic Sciences, Docent L. M. Ruban

Recommended by the Academic Council of the Faculty of Economics (Protocol No. 2 dated 25 October 2016). Adopted by the Scientific and Methodological Council of Taras Shevchenko National University of Kyiv (Protocol No. 3 dated 25 November 2016).

Rebenko, Maryna R. English Proficiency in Cybernetics: Textbook / M. Rebenko. – K.: Publishing and Polygraphic Centre "The University of Kyiv", 2017. – 160 p. ISBN 978-966-439-881-4

The English Proficiency in Cybernetics textbook has been designed for students completing their 4th year of undergraduate study in Applied Mathematics, Computer Sciences, System Analysis, and Software Engineering. The course is intended for students who have a specific area of academic or professional interest. The technology-integrated English for Specific Purposes content of the textbook gives students the opportunity to develop their English competence successfully. It does this by meeting national and international academic standards, professional requirements and students' personal needs.

© Rebenko M., 2017
© Taras Shevchenko National University of Kyiv, Publishing and Polygraphic Centre "The University of Kyiv", 2017

Contents

- Preface
- Unit 1. Cybernetics
  - Part I. Birth of the Science
  - Part II. Norbert Wiener, the Father of Cybernetics
- Unit 2. …

Intelligent Machines Symposium

MACHINE LEARNING IN RESEARCH AND APPLICATIONS

CALL FOR POSTERS AND DEMONSTRATIONS

INTELLIGENT MACHINES SYMPOSIUM, 17 NOVEMBER 2010, “DE VEREENIGING” IN NIJMEGEN

Organisation: Prof. dr. H.J. Kappen | Radboud Universiteit Nijmegen / SNN; A. Wanders | SNN

SNN

SNN is a not-for-profit organization that aims to stimulate research and applications in machine learning, Bayesian inference and neural networks in the Netherlands. SNN facilitates a network of researchers, organizations and companies in this field. Current participants are:

- Prof. Dr. H.J. Kappen, dr. W. Wiegerinck | Radboud Universiteit Nijmegen / SNN
- Prof. Dr. T. Heskes | Radboud Universiteit Nijmegen
- Prof. Dr. H.J. van den Herik | Tilburg center for Cognition and Communication
- Prof. Dr. E. Postma | Tilburg center for Cognition and Communication
- Prof. Dr. A. Van den Bosch | Tilburg center for Cognition and Communication
- Prof. Dr. J.N. Kok | Universiteit Leiden
- Prof. P. Grünwald | Universiteit Leiden
- Prof. Dr. R. Gill | Universiteit Leiden
- Prof. Dr. L. Schomaker, dr. M. Wiering | Rijksuniversiteit Groningen
- Prof. Dr. G. Weiss, dr. K. Tuyls | Universiteit Maastricht
- Prof. Dr. F. Groen, dr. B. Krose, dr. S. Whiteson | Universiteit van Amsterdam
- Prof. Dr. H. La Poutre, dr. S. Bohte | Centrum voor Wiskunde en Informatica
- Dr. K. Nieuwenhuis, dr. G. Pavlin | Thales Research
- Prof. Dr. M. Reijnders, dr. B. Duin, dr. M. Loog | Technische Universiteit Delft
- Prof. Dr. A. van der Vaart | Vrije Universiteit Amsterdam

Intelligent Machines

Can machines think? This has long been a conundrum for philosophers, but the answer to this question also has real social importance. Modern robots can assist us in our homes and have human-like qualities. The internet provides us with personalized tools that learn from our behavior.

Mathematical - Mathematical Modelling of Systems – Experimental - Building Actual Miniature Models Or Computer Based Models (Simulation) Cybernetics and Informatics

Lectures on Medical Biophysics

Department of Biophysics, Medical Faculty, Masaryk University in Brno

Biocybernetics

Norbert Wiener, 26.11.1894 - 18.03.1964

Lecture outline

- Cybernetics
- Cybernetic systems
- Feedback
- Principles of information theory
- Information system
- Information processes in living organisms
- Control and regulation
- Principles of modelling

Norbert Wiener

- N. Wiener (1948)

Definitions

- Cybernetics is the science dealing with the general features and laws governing information flow, information processing and control processes in organised systems (of a technical, biological or social character).
- System: a set of elements between which certain relations exist.
- Modelling: the main method in cybernetics.
  - A simplified expression of objective reality.
  - It should be understood as a set of relations between elements.
  - The choice of model must reflect the specific goal.
  - To model a system accurately, it is necessary to know its structure and function.
- Applied cybernetics involves the modelling of systems in specific areas of human activity, e.g. technical cybernetics, biocybernetics and social cybernetics. These models can be:
  - Mathematical: mathematical modelling of systems
  - Experimental: building actual miniature models or computer-based models (simulation)

Cybernetics and informatics

Cybernetics can be regarded as the broad theoretical background of informatics and of some other branches of science or knowledge (economics, management, sociology, etc.).

Biomedical Cybernetics

- Main goal: analysis and modelling …
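The lecture outline names feedback, control and regulation, and computer-based models (simulation) as core topics. As an illustration only, the Python sketch below simulates a hypothetical regulated variable under proportional negative feedback, integrating dx/dt = -k(x - s) + d with the forward-Euler method. The set point `s`, gain `k`, constant disturbance `d`, time step and class name are hypothetical choices made for this example, not values or methods taken from the lecture.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NegativeFeedbackLoop:
    """Toy model of a regulated variable x under proportional negative feedback.

    All parameters are hypothetical and chosen only for illustration.
    """
    setpoint: float     # s: reference value the regulator tries to maintain
    gain: float         # k: strength of the corrective (feedback) action
    disturbance: float  # d: constant external influence pushing x away from s

    def derivative(self, x: float) -> float:
        error = x - self.setpoint                      # compare output with set point
        return -self.gain * error + self.disturbance   # correction opposes the error

    def simulate(self, x0: float, steps: int, dt: float = 0.1) -> list[float]:
        """Forward-Euler integration; returns the trajectory including x0."""
        trajectory = [x0]
        x = x0
        for _ in range(steps):
            x += self.derivative(x) * dt
            trajectory.append(x)
        return trajectory

    def steady_state(self) -> float:
        """Equilibrium where dx/dt = 0: x* = s + d / k (requires k != 0)."""
        return self.setpoint + self.disturbance / self.gain


if __name__ == "__main__":
    loop = NegativeFeedbackLoop(setpoint=37.0, gain=2.0, disturbance=1.0)
    xs = loop.simulate(x0=30.0, steps=50)
    print(f"after 50 steps: {xs[-1]:.4f}, predicted steady state: {loop.steady_state():.4f}")
```

In this discrete model the deviation from the equilibrium shrinks by the factor (1 - k·dt) at each step, so it settles whenever 0 < k·dt < 2. The equilibrium sits at s + d/k rather than at s itself, which is the steady-state offset typical of purely proportional feedback.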

Systemist, Vol

Systemist, Vol. 40 (1), Jun. 2019, ISSN 0961-8309

UKSS International Conference, Executive Business Centre, Bournemouth University, June 24th 2019

Proceedings edited by G. A. Evans

SYSTEMIST: The Publication of The UK Systems Society

UK SYSTEMS SOCIETY, Registered Charity No. 1078782

- President: Professor Frank Stowell
- Treasurer & Company Secretary: Ian Roderick
- Secretaries to the Board: Pauline Roberts and Gary Evans

Editor, Systemist: Professor Frank Stowell, University of Portsmouth, Portsmouth, Hampshire PO1 2EG; Tel: 02392 846021

Submission of Material

Where possible, all material should be submitted electronically and written using one of the popular word-processing packages, e.g. Microsoft Word. Text must be set in 12-point Times New Roman, with all figures and tables in a format that will still be legible if reduced by up to 50%. All material must conform to the Harvard Referencing System. A title page must be provided and should include the title of the paper, the author name(s), affiliation, address and an abstract of 100-150 words.

The material published in the conference proceedings does not necessarily reflect the views of the Management Committee or the Chief Editor. Responsibility for the contents of any material published in the Proceedings remains with the author(s), as does the copyright for that material. The Chief Editor reserves the right to select submitted content and to make amendments where necessary.

October 2010 ~ September 2011

DEPARTMENT OF SYSTEMS AND CONTROL ENGINEERING ACTIVITY REPORT, October 2010 ~ September 2011

University of Malta, Department of Systems and Control Engineering

Contents

1. INTRODUCTION
2. STAFF MEMBERS
3. RESEARCH ACTIVITIES
   - 3.1 LOW COST 3D HEAD ACQUISITION
   - 3.2 INTELLIGENT CONTROL OF SOLAR WATER HEATERS
   - 3.3 EARLY STAGE DESIGN FOR RAPID PROTOTYPING
   - 3.4 BRAIN COMPUTER INTERFACING
   - 3.5 SPATIO-TEMPORAL MODELLING FOR SYSTEMS BIOLOGY
   - 3.6 SPATIO-TEMPORAL ANALYSIS OF POLLUTION DATA
   - 3.7 COMPUTER VISION FOR PLANETARY EXPLORATION
   - 3.8 COGNITIVE VISION FOR SKETCH UNDERSTANDING
   - 3.9 VISION FOR REAL-TIME AUTONOMOUS MOBILE ROBOT GUIDANCE
4. INFRASTRUCTURAL PROJECTS …

A Solution Proposal

Would the Big Government Approach Increasingly Fail to Lead to Good Decision? A Solution Proposal.

Abstract

Purpose – The present paper offers an innovative and original methodological proposal for solving the problem of arbitrary complex multiscale (ACM) ontological uncertainty management (OUM). Our solution is based on the postulate that society is an ACM system of purposive actors in continuous change. Present social problems are multiscale-order deficiencies, which cannot be fixed by the traditional hierarchical approach alone, that is, by doing what we already do better or more intensely, but only by changing the way we do it.

Design/methodology/approach – This paper draws on several earlier guidelines, from McCulloch, Wiener, Conant, Ashby and von Foerster to Bateson, Beer and Rosen's concept of a non-trivial system, to arrive at an indispensable key component: an anticipatory learning system (ALS) for managing unexpected perturbations through an antifragility approach as defined by Taleb. This ALS component is the key part of our new methodology, called the "CICT OUM" approach, which is based on a new awareness of numeric system behavior derived from computational information conservation theory (CICT).

Findings – To achieve antifragile behavior, next-generation systems must use a new CICT OUM-like approach to face the problem of multiscale OUM effectively and successfully. In this way, homeostatic operating equilibria can emerge naturally out of a self-organizing landscape of self-structuring attractor points.

Originality/value – Specifically, advanced wellbeing applications (AWA), high reliability organization (HRO) systems, mission critical project (MCP) systems, very low technological risk (VLTR) systems and crisis management (CM) systems can benefit greatly from our new "CICT OUM" methodology and related techniques.