Recording Sound Effects

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

How to Tape-Record Primate Vocalisations Version June 2001

How To Tape-Record Primate Vocalisations. Version June 2001. Thomas Geissmann, Institute of Zoology, Tierärztliche Hochschule Hannover, D-30559 Hannover, Germany. E-mail: [email protected] Key Words: Sound, vocalisation, song, call, tape-recorder, microphone. Figure caption: Clarence R. Carpenter at Doi Dao (north of Chiengmai, Thailand) in 1937, with the parabolic reflector which was used for making the first sound-recordings of wild gibbons (from Carpenter, 1940, p. 26). Introduction: Ornithologists have been exploring the possibilities and the methodology of tape-recording and archiving animal sounds for many decades. Primatologists, however, have only recently become aware that tape-recordings of primate sound may be just as valuable as traditional scientific specimens such as skins or skeletons, and should be preserved for posterity. Audio recordings should be fully documented, archived and curated to ensure proper care and accessibility. As natural populations disappear, sound archives will become increasingly important. This is an introductory text on how to tape-record primate vocalisations. It provides some information on the advantages and disadvantages of various types of equipment, and gives some tips for better recordings of primate vocalizations, both in the field and in the zoo. Ornithologists studying bird sound have to deal with very similar problems, and their introductory texts are recommended for further study (e.g. Budney & Grotke 1997; Kroodsman et al. 1996). For further information see also the websites listed at the end of this article. As a rule, prices for sound equipment go up over the years. Prices for equipment discussed below are in US$ and should only be used as very rough estimates.

LP Phono CD Recorder

LP Phono CD Recorder Installation and User’s Manual. Item Number: 62904150, SKU# 01366. * Important Notice: Please read this manual carefully. All brand names and trademarks are the property of their respective owners. Contents: Overview; Quick Start Guide; Music System Controls Identification; Operating Instructions; Using the Remote Control; Recording CDs; Specifications; Troubleshooting; Records, Stylus, CD’s; Important Safety Instructions/Precautions.

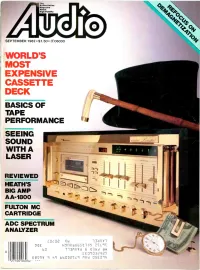

Expensive Cassette Deck Basics of Tape Performance

Authoritative Magazine About High Fidelity. SEPTEMBER 1982, $1.50. Cover lines: WORLD'S MOST EXPENSIVE CASSETTE DECK (1000ZXL); BASICS OF TAPE PERFORMANCE; SEEING SOUND WITH A LASER; REVIEWED: HEATH'S BIG AMP AA-1800, FULTON MC CARTRIDGE, ADC SPECTRUM ANALYZER. Advertisement (Camel): LIGHTS: 8 mg. "tar", 0.7 mg. nicotine av. per cigarette, FTC Report DEC. '81; FILTERS: 15 mg. "tar", 1.1 mg. nicotine av. per cigarette by FTC method. Warning: The Surgeon General Has Determined That Cigarette Smoking Is Dangerous to Your Health. Experience the Camel taste in Lights and Filters. Advertisement (TDK): Stop. You're in for a very delightful surprise. Because something exciting has happened to TDK's Professional Reference Series of audio cassettes. Something exciting for your ears [...] And each tape in the Professional Reference Series comes with TDK's ultra-reliable, high-performance cassette mechanism which assures you of superior tape-to-head contact.

We Service Many of the Major Brands That We Carry. Call Our Authorized

CASSETTE RECORDERS / DIGITAL AUDIO RECORDERS. ION AUDIO TAPE2PC USB DUAL-WELL CASSETTE DECK: This is a dubbing and playback deck with digital-audio output via plug-and-play USB audio interface which requires no drivers for use with most computers. It comes with EZ Tape Converter software for PC, and EZ Audio converter software for Mac, which allow you to import your music into iTunes. When you're finished archiving your cassettes to your computer, you can connect the unit to your stereo system using standard RCA cables. System requirements are: a USB port, CD drive, WinXP/Vista, or Mac OS 10.4 or later. Item: TAPE2PC; Description: USB dual-well cassette deck; Price: 119.99. MAXELL AUDIO CASSETTES: Features epitaxial magnetic particles combined with Maxell's unique Multi-Orientation Technology, to produce high output with low noise. High endurance binder, high resonance damping cassette mechanism and fixed low distortion screening shield. Communicator series features normal bias, packed in jewel case. 360 SYSTEMS INSTANT REPLAY2 DIGITAL RECORDER: This self-contained digital audio hard-disc recorder gives you instant access to 1000 cuts. Now with 100MB Ethernet for file transfer and backup. Hours of stereo, 16/24bit, 44.1/48kHz audio can be recorded, titled and organized into 10 banks and then assigned to any of 50 hotkeys. Import or export WAV files as well. The automatic head trim feature removes dead space before a cut for zero-delay playback and any cut can be looped. Other features include XLR and RCA analog inputs, AES/EBU and digital I/O (XLR & RCA), GPI control, headphone jack and SCSI I/O for connection to [...]

Stereo Cassette Deck

3-866-255-11(1) Stereo Cassette Deck Operating Instructions, TC-WE435. 1999 by Sony Corporation. Warning: To prevent fire or shock hazard, do not expose the unit to rain or moisture. Welcome! Thank you for purchasing the Sony Stereo Cassette Deck. Before operating the unit, please read this manual thoroughly and retain it for future reference. About This Manual: The instructions in this manual are for model TC-WE435. Convention: The following icon is used in this manual: Indicates hints and tips for making the task easier. INFORMATION: This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a particular installation. If this equipment does cause harmful interference to radio or television reception, which can be determined by turning the equipment off and on, the user is encouraged to try to correct the interference by one or more of the following measures: (1) Reorient or relocate the receiving antenna. (2) Increase the separation between the equipment and receiver. NOTICE FOR THE CUSTOMERS IN THE U.S.A.: This symbol is intended to alert the user to the presence of uninsulated “dangerous voltage” within the product’s enclosure that may be of [...]

Media Draft Appendix

Media Draft Appendix. October, 2001. P C Hariharan. Media: Historical evidence for written records dates from about the middle of the third millennium BC. The writing is on media like a rock face, cave wall, clay tablets, papyrus scrolls and metallic discs. Writing, which was at first logographic, went through various stages such as ideography, polyphonic syllabary, monophonic syllabary and the very condensed alphabetic systems used by the major European languages today. The choice of the medium on which the writing was done has played a significant part in the development of writing. Thus, the Egyptians used hieroglyphic symbols for monumental and epigraphic writing, but began to adopt the slightly different hieratic form of it on papyri where it coexisted with hieroglyphics. Later, demotic was derived from hieratic for more popular uses. In writing systems based on the Greek and Roman alphabet, monumental writing made minimal use of uncials and there was often no space between words; a soft surface, and a stylus one does not have to hammer on, are conducive to cursive writing. Early scribes did not have a wide choice of media or writing instruments. Charcoal, pigments derived from mineral ores, awls and chisels have all been used on hard media. Cuneiform writing on clay tablets, and Egyptian hieroglyphic and hieratic writing on papyrus scrolls, permitted the use of a stylus made from reeds. These could be shaped and kept in writing trim by the scribe, and the knowledge and skill needed for their use was a cherished skill often as valuable as the knowledge of writing itself.

Tascam 788 24-Bit Digital Portastudio

Tascam 788 24-bit Digital Portastudio. Download your imagination direct to hard disk with the ultimate digital Portastudio. (Caption: The backlit LCD display provides comprehensive information.) Portastudios are all about getting an idea out of your head and on to something permanent - without getting tangled in technology. The remarkable Tascam 788 allows you to download your imagination like never before: straight on to hard disk with professional-quality uncompressed 24-bit digital audio resolution! There are 250 virtual tracks for multiple takes that can be combined into an 8-track multitrack master. Random access operation and non-destructive digital editing tools allow you to cut, copy and paste your song to your [...] uses 24-bit digital converters. This means lower distortion, much higher headroom and dynamic range, adding up to audio quality beyond that of standard Compact Discs. Great Digital Multieffects and Parametric EQ: You can expect a digital workstation to have great-sounding reverb for vocals and instruments. But the 788 also offers killer distortions, lush choruses, delays, pitch shifters, flangers, phasers and more. Many of the presets are designed to let you emulate your favourite guitar tones with very little tweaking required, although all of [...] offers a true parametric midrange EQ. This critical frequency range, where most of the tones for your vocals, guitars and keyboards reside, can be minutely adjusted using independent gain, frequency and bandwidth (Q) controls. 6 Versatile Inputs: Routing Inputs on the 788 are six 0.25" jacks (4 balanced for Mic/Line and 2 unbalanced for Line). One of these is designated for direct connection to guitars. Stereo outputs for mixing down, monitor outputs to send audio to your speakers and aux outputs are all included on individual connectors.

Common Tape Manipulation Techniques and How They Relate to Modern Electronic Music

Common Tape Manipulation Techniques and How They Relate to Modern Electronic Music. Matthew A. Bardin, Experimental Music & Digital Media, Center for Computation & Technology, Louisiana State University, Baton Rouge, Louisiana 70803. [email protected] ABSTRACT: The purpose of this paper is to provide a historical context to some of the common schools of thought in regards to tape composition present in the later half of the 20th century. Following this, the author then discusses a variety of the more common techniques utilized to create these and other styles of music in detail as well as provides examples of various tracks in order to show each technique in process. In the following sections, the author then discusses some of the limitations of tape composition technologies and practices. Finally, the author puts the concepts discussed into a modern historical context by comparing the aspects of tape composition of the 20th century discussed previous to the composition done in Digital Audio recording and manipulation practices of the 21st century. Author Keywords: tape, manipulation, history, hardware, software, music, examples, analog, digital. [...] the 'play head' was utilized to reverse the process and generate the output's audio signal [8]. Looking at figure 1, from museumofmagneticsoundrecording.org (Accessed: 03/20/2020), the locations of the heads can be noticed beneath the rectangular protective cover showing the machine's model in the middle of the hardware. Previous to the development of the reel-to-reel machine, electronic music was only achievable through live performances on instruments such as the Theremin and other early predecessors to the modern synthesizer. [11, p. 173]

Dual Digital Audio Tape Deck Owner's Manual

DA-302 Dual Digital Audio Tape Deck OWNER’S MANUAL. D00313200A. Important Safety Precautions. CAUTION: TO REDUCE THE RISK OF ELECTRIC SHOCK, DO NOT REMOVE COVER (OR BACK). NO USER-SERVICEABLE PARTS INSIDE. REFER SERVICING TO QUALIFIED SERVICE PERSONNEL. The lightning flash with arrowhead symbol, within equilateral triangle, is intended to alert the user to the presence of uninsulated “dangerous voltage” within the product’s enclosure that may be of sufficient magnitude to constitute a risk of electric shock to persons. The exclamation point within an equilateral triangle is intended to alert the user to the presence of important operating and maintenance (servicing) instructions in the literature accompanying the appliance. This appliance has a serial number located on the rear panel. Please record the model number and serial number and retain them for your records. WARNING: TO PREVENT FIRE OR SHOCK HAZARD, DO NOT EXPOSE THIS APPLIANCE TO RAIN OR MOISTURE. Important (for U.K. Customers): DO NOT cut off the mains plug from this equipment. If the plug fitted is not suitable for the power points in your home or the cable is too short to reach a power point, then obtain an appropriate safety approved extension lead or consult your dealer. For U.S.A., TO THE USER: This equipment has been tested and found to comply with the limits for a Class A digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference when the equipment is operat[...]

AW2400 Owner's Manual

Owner’s Manual (EN). FCC INFORMATION (U.S.A.) 1. IMPORTANT NOTICE: DO NOT MODIFY THIS UNIT! This product, when installed as indicated in the instructions contained in this manual, meets FCC requirements. Modifications not expressly approved by Yamaha may void your authority, granted by the FCC, to use the product. 2. IMPORTANT: When connecting this product to accessories and/or another product use only high quality shielded cables. Cable/s supplied with this product MUST be used. Follow all installation instructions. Failure to follow instructions could void your FCC authorization to use this product in the USA. 3. NOTE: This product has been tested and found to comply with the requirements listed in FCC Regulations, Part 15 for Class “B” digital devices. [...] Compliance with FCC regulations does not guarantee that interference will not occur in all installations. If this product is found to be the source of interference, which can be determined by turning the unit “OFF” and “ON”, please try to eliminate the problem by using one of the following measures: Relocate either this product or the device that is being affected by the interference. Utilize power outlets that are on different branch (circuit breaker or fuse) circuits or install AC line filter/s. In the case of radio or TV interference, relocate/reorient the antenna. If the antenna lead-in is 300 ohm ribbon lead, change the lead-in to co-axial type cable. If these corrective measures do not produce satisfactory results, please contact the local retailer authorized to dis[...]

Direct-To-Master Recording

Direct-To-Master Recording. J. I. Agnew, S. Steldinger. Magnetic Fidelity, http://www.magneticfidelity.com, info@magneticfidelity.com. July 31, 2016. Abstract: Direct-to-Master Recording is a method of recording sound, where the music is performed entirely live and captured directly onto the master medium. This is usually done entirely in the analog domain using either magnetic tape or a phonograph disk as the recording medium. The result is an intense and realistic sonic image of the performance with an outstanding dynamic range. 1 The evolution of sound recording technology: Sound recording technology has greatly evolved since the 1940's, when Direct-To-Master recording was not actually something special, but more like one of the few options for recording music. This evolution has enabled us to do things that would be unthinkable in those early days, such as multitrack recording, which allows different instruments to be recorded at different times, and mixed later to create what sounds like a performance by many instruments at the same time. [...] tracks can now also be edited note by note to compile a solid performance that can be altered or "improved" at will. This technological progress has made it possible for far less competent musicians to make a more or less competent sounding album and for washed out rock stars who, if all put in the same room at the same time, would probably murder each other, to make an album together. Or, at least almost together. This ability, however, comes at a certain cost. The recording process has been broken up into several stages, per[...]

Be Ready. * Battery Life Subject to Conditions PMD661 | Professional High-Resolution Audio Recorder PMD620 | Professional Handheld Recorder

PORTABLE FLASH RECORDERS. The Widest Selection of Portable Recorders is at BSW! This Pro Portable Recorder is Every Reporter’s First Choice! (BSW Customer Favorite) The PMD661 records MP3/WAV to SDHC and features built-in condenser mics, a pair of XLR inputs, a stereo line-level minijack port, and an S/PDIF digital connection. • Switchable balanced XLR Mic/Line inputs with +48V phantom power • Large, easy-to-use multi-function interface with improved navigation • 1/4" headphone jack with independent level control • Built-in stereo speakers • USB 2.0 data port for fast transfer of files to PC/Mac • Advanced editing features, including Timer Record/Playback, Copy segment, and File divide. PMD661 List $649.00; Contact BSW For Lowest Prices. Accessories: PRC661 Carry Case For PMD661 $69.00. Industry-Favorite Field Recorder: The solid-state Marantz PMD620 digital audio field recorder boasts an easy-to-use interface, two built-in omnidirectional condenser microphones for stereo recording and a speaker. • Records direct to MP3 or WAV formats in 16- or 24-bit resolution on SD and SDHC flash memory cards • 1/8" input for external mics (provides +5v phantom power for electret condensers) • Non-destructive editing to create customized files • Runs on 2 AA or NiMH batteries for up to 5 hours of operation • Mark Editor software included for quick referencing. PMD620 List $429.00; Contact BSW For Lowest Prices. Accessories: PRC620 Carry Case For PMD620 $45.00. Rugged, Reliable CompactFlash Recorder: The PMD660 handheld digital recorder combines convenient solid-state CompactFlash digital recording with Marantz audio know-how, for rugged and reliable performance in the field.