Propaganda and Fake News

Total Page:16

Recommended publications

-

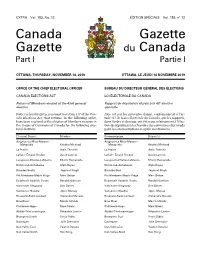

Canada Gazette, Part I

EXTRA Vol. 153, No. 12 ÉDITION SPÉCIALE Vol. 153, no 12 Canada Gazette Gazette du Canada Part I Partie I OTTAWA, THURSDAY, NOVEMBER 14, 2019 OTTAWA, LE JEUDI 14 NOVEMBRE 2019 OFFICE OF THE CHIEF ELECTORAL OFFICER BUREAU DU DIRECTEUR GÉNÉRAL DES ÉLECTIONS CANADA ELECTIONS ACT LOI ÉLECTORALE DU CANADA Return of Members elected at the 43rd general Rapport de député(e)s élu(e)s à la 43e élection election générale Notice is hereby given, pursuant to section 317 of the Can- Avis est par les présentes donné, conformément à l’ar- ada Elections Act, that returns, in the following order, ticle 317 de la Loi électorale du Canada, que les rapports, have been received of the election of Members to serve in dans l’ordre ci-dessous, ont été reçus relativement à l’élec- the House of Commons of Canada for the following elec- tion de député(e)s à la Chambre des communes du Canada toral districts: pour les circonscriptions ci-après mentionnées : Electoral District Member Circonscription Député(e) Avignon–La Mitis–Matane– Avignon–La Mitis–Matane– Matapédia Kristina Michaud Matapédia Kristina Michaud La Prairie Alain Therrien La Prairie Alain Therrien LaSalle–Émard–Verdun David Lametti LaSalle–Émard–Verdun David Lametti Longueuil–Charles-LeMoyne Sherry Romanado Longueuil–Charles-LeMoyne Sherry Romanado Richmond–Arthabaska Alain Rayes Richmond–Arthabaska Alain Rayes Burnaby South Jagmeet Singh Burnaby-Sud Jagmeet Singh Pitt Meadows–Maple Ridge Marc Dalton Pitt Meadows–Maple Ridge Marc Dalton Esquimalt–Saanich–Sooke Randall Garrison Esquimalt–Saanich–Sooke -

Acentury Inc. 120 West Beaver Creek Rd., Unit 13 Richmond Hill, Ontario Canada L4B 1L2

Acentury Inc. 120 West Beaver Creek Rd., Unit 13 Richmond Hill, Ontario Canada L4B 1L2 Director General, Telecommunications and Internet Policy Branch Innovation, Science and Economic Development Canada 235 Queen Street, 10th Floor Ottawa, Ontario K1A 0H5 February 13, 2020 Subject: Petition to the Governor in Council to Vary Telecom Order CRTC 2019-288, Follow-up to Telecom Orders 2016-396 and 2016-448 – Final rates for aggregated wholesale high-speed access services, Reference: Canadian Gazette, Part 1, August 2019, (TIPB-002-2019) Dear Director General, Telecommunications and Internet Policy Branch, Innovation, Science and Economic Development Canada: I’m writing this letter in response to the CRTC decision on August 2019 under section 12 of the Telecommunications Act issued by the Canadian Radio-television and Telecommunications Commission (CRTC) concerning final rates for aggregated wholesale high-speed access services. As a valued supplier for all the major Canadian Telecommunication companies, I felt obliged to communicate the impact this decision will have on a growing Canadian technology company like ourselves. Acentury is an aspiring technology company who is currently one of the top 500 Canadian growing businesses as reported by Canadian Business (2019) and also one of the top 400 Canadian growing companies as reported by the Globe and Mail (2019). Our achievement and continued success are a direct result of the investment commitment made to next generation 5G and IoT wireless communications led by Bell Canada, Rogers and Telus. Canadian suppliers like us have been supported by Canadian Tier 1 telcos to help build and innovate our technical core competencies and capabilities; it has helped cultivate the growth of a Canadian-led, global organization that can keep pace and compete with our global technology peers. -

Core 1..16 Journalweekly (PRISM::Advent3b2 17.25)

HOUSE OF COMMONS OF CANADA CHAMBRE DES COMMUNES DU CANADA 42nd PARLIAMENT, 1st SESSION 42e LÉGISLATURE, 1re SESSION Journals Journaux No. 22 No 22 Monday, February 22, 2016 Le lundi 22 février 2016 11:00 a.m. 11 heures PRAYER PRIÈRE GOVERNMENT ORDERS ORDRES ÉMANANT DU GOUVERNEMENT The House resumed consideration of the motion of Mr. Trudeau La Chambre reprend l'étude de la motion de M. Trudeau (Prime Minister), seconded by Mr. LeBlanc (Leader of the (premier ministre), appuyé par M. LeBlanc (leader du Government in the House of Commons), — That the House gouvernement à la Chambre des communes), — Que la Chambre support the government’s decision to broaden, improve, and appuie la décision du gouvernement d’élargir, d’améliorer et de redefine our contribution to the effort to combat ISIL by better redéfinir notre contribution à l’effort pour lutter contre l’EIIL en leveraging Canadian expertise while complementing the work of exploitant mieux l’expertise canadienne, tout en travaillant en our coalition partners to ensure maximum effect, including: complémentarité avec nos partenaires de la coalition afin d’obtenir un effet optimal, y compris : (a) refocusing our military contribution by expanding the a) en recentrant notre contribution militaire, et ce, en advise and assist mission of the Canadian Armed Forces (CAF) in développant la mission de conseil et d’assistance des Forces Iraq, significantly increasing intelligence capabilities in Iraq and armées canadiennes (FAC) en Irak, en augmentant theatre-wide, deploying CAF medical personnel, -

Core 1..176 Hansard

House of Commons Debates VOLUME 148 ● NUMBER 140 ● 1st SESSION ● 42nd PARLIAMENT OFFICIAL REPORT (HANSARD) Tuesday, February 14, 2017 Speaker: The Honourable Geoff Regan CONTENTS (Table of Contents appears at back of this issue.) 8879 HOUSE OF COMMONS Tuesday, February 14, 2017 The House met at 10 a.m. QUESTIONS ON THE ORDER PAPER Mr. Kevin Lamoureux (Parliamentary Secretary to the Leader of the Government in the House of Commons, Lib.): Prayer Mr. Speaker, I would ask that all questions be allowed to stand. The Speaker: Is that agreed? ROUTINE PROCEEDINGS Some hon. members: Agreed. SUPPLEMENTARY ESTIMATES (C), 2016-17 A message from His Excellency the Governor General transmit- GOVERNMENT ORDERS ting supplementary estimates (C) for the financial year ending March [English] 31, 2017, was presented by the President of the Treasury Board and read by the Speaker to the House. CONTROLLED DRUGS AND SUBSTANCES ACT *** BILL C-37—TIME ALLOCATION MOTION ● (1005) Hon. Bardish Chagger (Leader of the Government in the House of Commons and Minister of Small Business and [English] Tourism, Lib.): Mr. Speaker, an agreement has been reached PETITIONS between a majority of the representatives of recognized parties under the provisions of Standing Order 78(2) with respect to the report TAXATION stage and third reading stage of Bill C-37, an act to amend the Mrs. Cheryl Gallant (Renfrew—Nipissing—Pembroke, CPC): Controlled Drugs and Substances Act and to make related Mr. Speaker, I am pleased to present a petition signed by campers amendments to other acts. Therefore I move: who stayed at Mirror Lake Resort Campground in Pass Lake, That, in relation to Bill C-37, an act to amend the Controlled Drugs and Substances Ontario, located in the riding of Thunder Bay—Superior North. -

A Parliamentarian's

A Parliamentarian’s Year in Review 2018 Table of Contents 3 Message from Chris Dendys, RESULTS Canada Executive Director 4 Raising Awareness in Parliament 4 World Tuberculosis Day 5 World Immunization Week 5 Global Health Caucus on HIV/AIDS, Tuberculosis and Malaria 6 UN High-Level Meeting on Tuberculosis 7 World Polio Day 8 Foodies That Give A Fork 8 The Rush to Flush: World Toilet Day on the Hill 9 World Toilet Day on the Hill Meetings with Tia Bhatia 9 Top Tweet 10 Forging Global Partnerships, Networks and Connections 10 Global Nutrition Leadership 10 G7: 2018 Charlevoix 11 G7: The Whistler Declaration on Unlocking the Power of Adolescent Girls in Sustainable Development 11 Global TB Caucus 12 Parliamentary Delegation 12 Educational Delegation to Kenya 14 Hearing From Canadians 14 Citizen Advocates 18 RESULTS Canada Conference 19 RESULTS Canada Advocacy Day on the Hill 21 Engagement with the Leaders of Tomorrow 22 United Nations High-Level Meeting on Tuberculosis 23 Pre-Budget Consultations Message from Chris Dendys, RESULTS Canada Executive Director “RESULTS Canada’s mission is to create the political will to end extreme poverty and we made phenomenal progress this year. A Parliamentarian’s Year in Review with RESULTS Canada is a reminder of all the actions decision makers take to raise their voice on global poverty issues. Thank you to all the Members of Parliament and Senators that continue to advocate for a world where everyone, no matter where they were born, has access to the health, education and the opportunities they need to thrive. 
“ 3 Raising Awareness in Parliament World Tuberculosis Day World Tuberculosis Day We want to thank MP Ziad Aboultaif, Edmonton MPs Dean Allison, Niagara West, Brenda Shanahan, – Manning, for making a statement in the House, Châteauguay—Lacolle and Senator Mobina Jaffer draw calling on Canada and the world to commit to ending attention to the global tuberculosis epidemic in a co- tuberculosis, the world’s leading infectious killer. -

George Committees Party Appointments P.20 Young P.28 Primer Pp

EXCLUSIVE POLITICAL COVERAGE: NEWS, FEATURES, AND ANALYSIS INSIDE HARPER’S TOOTOO HIRES HOUSE LATE-TERM GEORGE COMMITTEES PARTY APPOINTMENTS P.20 YOUNG P.28 PRIMER PP. 30-31 CENTRAL P.35 TWENTY-SEVENTH YEAR, NO. 1322 CANADA’S POLITICS AND GOVERNMENT NEWSWEEKLY MONDAY, FEBRUARY 22, 2016 $5.00 NEWS SENATE REFORM NEWS FINANCE Monsef, LeBlanc LeBlanc backs away from Morneau to reveal this expected to shed week Trudeau’s whipped vote on assisted light on deficit, vision for non- CIBC economist partisan Senate dying bill, but Grit MPs predicts $30-billion BY ABBAS RANA are ‘comfortable,’ call it a BY DEREK ABMA Senators are eagerly waiting to hear this week specific details The federal government is of the Trudeau government’s plan expected to shed more light on for a non-partisan Red Cham- Charter of Rights issue the size of its deficit on Monday, ber from Government House and one prominent economist Leader Dominic LeBlanc and Members of the has predicted it will be at least Democratic Institutions Minister Joint Committee $30-billion—about three times Maryam Monsef. on Physician- what the Liberals promised dur- The appearance of the two Assisted ing the election campaign—due to ministers at the Senate stand- Suicide, lower-than-expected tax revenue ing committee will be the first pictured at from a slow economy and the time the government has pre- a committee need for more fiscal stimulus. sented detailed plans to reform meeting on the “The $10-billion [deficit] was the Senate. Also, this is the first Hill. The Hill the figure that was out there official communication between Times photograph based on the projection that the the House of Commons and the by Jake Wright economy was growing faster Senate on Mr. -

Debates of the House of Commons

43rd PARLIAMENT, 1st SESSION House of Commons Debates Official Report (Hansard) VOLUME 149 NUMBER 005 Wednesday, December 11, 2019 Speaker: The Honourable Anthony Rota CONTENTS (Table of Contents appears at back of this issue.) 263 HOUSE OF COMMONS Wednesday, December 11, 2019 The House met at 2 p.m. tude to the people of Bellechasse—Les Etchemins—Lévis for plac‐ ing their trust in me for the fifth time in a row. I would also like to thank our amazing team of volunteers, my Prayer family and my wonderful wife, Marie. My entire team and I are here to help the people in our riding. We are facing major chal‐ ● (1405) lenges, but, unfortunately, the throne speech was silent on subjects [English] such as the labour shortage, shipbuilding and high-speed Internet and cell service in the regions. The Speaker: It being Wednesday, we will now have the singing of O Canada led by the hon. member for Kitchener—Conestoga. People say that election campaigns begin on election night, but in Quebec, in Canada and in my riding, Bellechasse—Les [Members sang the national anthem] Etchemins—Lévis, we are rolling up our sleeves and focusing on sustainable prosperity. * * * STATEMENTS BY MEMBERS [English] [Translation] NEWMARKET—AURORA CLOSURE OF BRUNSWICK SMELTER Mr. Tony Van Bynen (Newmarket—Aurora, Lib.): Mr. Speak‐ Mr. Serge Cormier (Acadie—Bathurst, Lib.): Mr. Speaker, I er, I am proud to rise in the House for the first time as the member want to start by thanking the voters of Acadie—Bathurst for giving of Parliament for Newmarket—Aurora in the 43rd Parliament. -

Core 1..230 Hansard

House of Commons Debates VOLUME 148 ● NUMBER 435 ● 1st SESSION ● 42nd PARLIAMENT OFFICIAL REPORT (HANSARD) Monday, June 17, 2019 Speaker: The Honourable Geoff Regan CONTENTS (Table of Contents appears at back of this issue.) 29155 HOUSE OF COMMONS Monday, June 17, 2019 The House met at 11 a.m. therapy because they cannot afford the costs of the supplies, devices and medications. The impacts of this are far reaching. Unable to comply with their therapy, it puts people at increased risk of serious health complications. In addition to the human impact, this adds Prayer strain to our health care system, as it must deal with completely avoidable emergency interventions. It does not need to be this way. New Democrats, since the time we won the fight for medicare in PRIVATE MEMBERS' BUSINESS this country under Tommy Douglas, believe that our work will not ● (1100) be done until we also have a universal public pharmacare plan. The [English] health and financial impacts of not having a universal public pharmacare plan are as clear as day when we look at the impacts of DIABETES AWARENESS MONTH diabetes in this country. We must also keep in mind that prevention The House resumed from May 28 consideration of the motion. is cheaper than intervention. We know that there are other social Ms. Jenny Kwan (Vancouver East, NDP): Mr. Speaker, I am policies we can engage in to reduce the risk of people developing very happy to engage in this important discussion. In 2014, the Steno diabetes in the first place. -

EUDO Citizenship Observatory

EUDO CITIZENSHIP OBSERVATORY ACCESS TO ELECTORAL RIGHTS CANADA Willem Maas July 2015 CITIZENSHIP http://eudo-citizenship.eu European University Institute, Florence Robert Schuman Centre for Advanced Studies EUDO Citizenship Observatory Access to Electoral Rights Canada Willem Maas July 2015 EUDO Citizenship Observatory Robert Schuman Centre for Advanced Studies Access to Electoral Rights Report, RSCAS/EUDO-CIT-ER 2015/9 Badia Fiesolana, San Domenico di Fiesole (FI), Italy © Willem Maas This text may be downloaded only for personal research purposes. Additional reproduction for other purposes, whether in hard copies or electronically, requires the consent of the authors. Requests should be addressed to [email protected] The views expressed in this publication cannot in any circumstances be regarded as the official position of the European Union Published in Italy European University Institute Badia Fiesolana I – 50014 San Domenico di Fiesole (FI) Italy www.eui.eu/RSCAS/Publications/ www.eui.eu cadmus.eui.eu Research for the EUDO Citizenship Observatory Country Reports has been jointly supported, at various times, by the European Commission grant agreements JLS/2007/IP/CA/009 EUCITAC and HOME/2010/EIFX/CA/1774 ACIT, by the European Parliament and by the British Academy Research Project CITMODES (both projects co-directed by the EUI and the University of Edinburgh). The financial support from these projects is gratefully acknowledged. For information about the project please visit the project website at http://eudo-citizenship.eu Access to Electoral Rights Canada Willem Maas 1. 
Introduction Canada has a Westminster-style system of government and, like the bicameral British Parliament, members of Canada’s upper house, the Senate (Senators), are appointed while members of the lower house, the House of Commons (Members of Parliament, MPs), are elected in single-seat constituencies.1 Each MP is elected to represent a specific constituency in a plurality, first-past-the-post election for a term not to exceed five years. -

The Lobby Monitor: The 43rd Parliament: A Guide to MPs’ Personal and Professional Interests Divided by Portfolios

THE LOBBY MONITOR The 43rd Parliament: a guide to MPs’ personal and professional interests divided by portfolios Canada currently has a minority Liberal government, which is composed of 157 Liberal MPs, 121 Conservative MPs, 32 Bloc Québécois MPs, 24 NDP MPs, as well as three Green MPs and one Independent MP. The following lists offer a breakdown of which MPs have backgrounds in the various portfolios on Parliament Hill. This information is based on MPs’ official party biographies and parliamentary committee experience. Compiled by Jesse Cnockaert Agriculture Canadian Heritage Children and Youth Education Sébastien Lemire Caroline Desbiens Kristina Michaud Lenore Zann Louis Plamondon Martin Champoux Yves-François Blanchet Geoff Regan Yves Perron Marilène Gill Gary Anandasangaree Simon Marcil Justin Trudeau Claude DeBellefeuille Julie Dzerowicz Scott Simms Filomena Tassi Sean Casey Lyne Bessette Helena Jaczek Andy Fillmore Gary Anandasangaree Mona Fortier Lawrence MacAulay Darrell Samson Justin Trudeau Harjit Sajjan Wayne Easter Wayne Long Jean-Yves Duclos Mary Ng Pat Finnigan Mélanie Joly Patricia Lattanzio Shaun Chen Marie-Claude Bibeau Yasmin Ratansi Peter Schiefke Kevin Lamoureux Francis Drouin Gary Anandasangaree Mark Holland Lloyd Longfield Soraya Martinez Bardish Chagger Pablo Rodriguez Ahmed Hussen Francis Scarpaleggia Karina Gould Jagdeep Sahota Steven Guilbeault Filomena Tassi Kevin Waugh Richard Lehoux Justin Trudeau -

Progressive Canadian Party Opening Remarks Concerning Bill C-76, The

Progressive Canadian party opening remarks concerning Bill C-76, The Election Modernization Act, An Act to amend the Canada Elections Act and other Acts and to make certain consequential amendments. Remarks Delivered to the Standing Committee on Procedure and House Affairs, June 6, 2018, by Brian Marlatt, Communications and Policy Director, Progressive Canadian Party. Thank you to the chair and to the committee for inviting the Progressive Canadian party, who I represent here today, to present important evidence in our view concerning Bill C-76, the Election Modernization Act, An Act to amend the Canada Elections Act and certain other Acts effected by the bill, for study within the committee’s mandate. The Progressive Canadian party is a continuation of the tradition in Canadian politics of a Tory party willing “to embrace every person desirous of being counted as a ‘progressive Conservative’ ” (Macdonald to Strachan, Correspondence, February 9, 1854: National Archives). The PC Party was led, until his recent passing, by the Hon. Sinclair Stevens who was a minister in the Clark and Mulroney Progressive Conservative governments, and is now led by former PC MP Joe Hueglin. I am speaking today as Communications and Policy Director on PC Party National Council but I have also contributed to the Elections Canada Advisory Committee of Political Parties in 2015 and again in meetings in 2018, yesterday in fact, and previously have also served, before political involvement, as an Elections Canada DRO and Elections BC Voting Officer and Clerk. I hope this experience adds value to our witness testimony. 
Evidence and comments today will be limited largely to implications of Bill C-76 in the context of today’s fixed date election law introduced in 2006, the Fair Elections Act, sometimes described as the Voter Suppression Act by Progressive Canadians, introduced as Bill C-23 in the 41st Parliament, and other proposed electoral reforms which have been part of public discussion of this bill. -

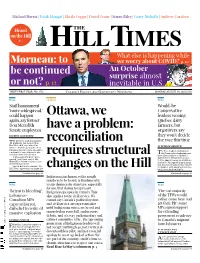

Ottawa, We Have a Problem: Reconciliation Requires Structural Changes on the Hill

Michael Harris | Palak Mangat | Sheila Copps | David Crane | Susan Riley | Gerry Nicholls | Andrew Cardozo Heard on the Hill p.2 What else is happening while Morneau: to we worry about COVID? p. 10 be continued An October surprise almost or not? p. 12 inevitable in U.S. p. 11 THIRTY-FIRST YEAR, NO. 1752 CANADA’S POLITICS AND GOVERNMENT NEWSPAPER MONDAY, AUGUST 10, 2020 $5.00 News Opinion News Staff harassment Would-be ‘more widespread,’ Conservative could happen Ottawa, we leaders wooing again, say former Quebec dairy Don Meredith farmers, but Senate employees have a problem: organizers say BY PETER MAZEREEUW they won’t decide The Senate’s staff harassment reconciliation the race this time problems run deeper than Don Meredith, say two of his BY PETER MAZEREEUW former employees, and the Red Chamber has not done enough to The three leading candidates in confront its own failures to pro- the Conservative leadership race tect staff from their bosses. requires structural have all reached out to Quebec’s “I would say it’s more wide- dairy farmers with promises to pro- spread,” said Jane, one of Mr. tect the supply management system, Meredith’s former staffers, in an in a bid to win support from a group interview last week. that may have swung the last contest Jane is not her real name. The in favour of now outgoing Conserva- Hill Times agreed not to name the changes on the Hill tive Leader Andrew Scheer. Continued on page 14 Continued on page 16 Indigenous inclusion, with enough numbers to be heard, is fundamental News to any democratic structure, especially News for one that claims to represent ‘Beirut is bleeding’: Indigenous peoples in Canada.