2019 Color and Imaging Conference Final Program and Abstracts

Recommended publications

Investigating the Effect of Color Gamut Mapping Quantitatively and Visually

Rochester Institute of Technology, RIT Scholar Works, Theses, 5-2015. Investigating the Effect of Color Gamut Mapping Quantitatively and Visually, by Anupam Dhopade.

Recommended Citation: Dhopade, Anupam, "Investigating the Effect of Color Gamut Mapping Quantitatively and Visually" (2015). Thesis. Rochester Institute of Technology.

A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Print Media in the School of Media Sciences in the College of Imaging Arts and Sciences of the Rochester Institute of Technology, May 2015. Primary Thesis Advisor: Professor Robert Chung. Secondary Thesis Advisor: Professor Christine Heusner. School of Media Sciences, Rochester Institute of Technology, Rochester, New York.

Certificate of Approval: This is to certify that the Master’s Thesis of Anupam Dhopade has been approved by the Thesis Committee as satisfactory for the thesis requirement for the Master of Science degree at the convocation of May 2015. Thesis Committee: Primary Thesis Advisor, Professor Robert Chung; Secondary Thesis Advisor, Professor Christine Heusner; Graduate Director, Professor Christine Heusner; Administrative Chair, School of Media Sciences, Professor Twyla Cummings.

ACKNOWLEDGEMENT: I take this opportunity to express my sincere gratitude and thank all those who have supported me throughout the MS course here at RIT.

Prediction of Munsell Appearance Scales Using Various Color-Appearance Models

Prediction of Munsell Appearance Scales Using Various Color-Appearance Models. David R. Wyble,* Mark D. Fairchild. Munsell Color Science Laboratory, Rochester Institute of Technology, 54 Lomb Memorial Dr., Rochester, New York 14623-5605. Received 1 April 1999; accepted 10 July 1999.

Abstract: The chromaticities of the Munsell Renotation Dataset were applied to eight color-appearance models. Models used were: CIELAB, Hunt, Nayatani, RLAB, LLAB, CIECAM97s, ZLAB, and IPT. Models were used to predict three appearance correlates of lightness, chroma, and hue. Model output of these appearance correlates were evaluated for their uniformity, in light of the constant perceptual nature of the Munsell Renotation data. Some background is provided on the experimental derivation of the Renotation Data, including the specific tasks performed by observers to evaluate a sample hue leaf for chroma uniformity. No particular model excelled at all metrics. In general, as might be expected, models derived from the Munsell System per-

… predict the color of the objects accurately in these examples, a color-appearance model is required. Modern color-appearance models should, therefore, be able to account for changes in illumination, surround, observer state of adaptation, and, in some cases, media changes. This definition is slightly relaxed for the purposes of this article, so simpler models such as CIELAB can be included in the analysis. This study compares several modern color-appearance models with respect to their ability to predict uniformly the dimensions (appearance scales) of the Munsell Renotation Data,1 hereafter referred to as the Munsell data. Input to all models is the chromaticities of the Munsell data, and is more fully described below.

ROCK-COLOR CHART with Genuine Munsell® Color Chips

Geological ROCK-COLOR CHART with genuine Munsell® color chips. 2009 Year Revised | 2009 Production Date put into use.

This publication is a revision of the previously published Geological Society of America (GSA) Rock-Color Chart prepared by the Rock-Color Chart Committee (representing the U.S. Geological Survey, GSA, the American Association of Petroleum Geologists, the Society of Economic Geologists, and the Association of American State Geologists). Colors within this chart are the same as those used in previous GSA editions, and the chart was produced in cooperation with GSA. Produced by 4300 44th Street • Grand Rapids, MI 49512 • Tel: 877-888-1720 • munsell.com

The Rock-Color Chart: The pages within this book are cleanable and can be exposed to the standard environmental conditions that are met in the field. This does not mean that the book will be able to withstand all of the environmental conditions that it is exposed to in the field. When cleaning the colored pages, please make sure not to use a cleaning agent or materials that are coarse in nature. These materials could either damage the surface of the color chips or cause the color chips to start to delaminate from the pages. When specifying rock color, it is important to remember to replace the rock color chart book on a regular basis so that the colors being specified are consistent from one individual to another. We recommend that you mark the date when you started to use the book.

Ph.D. Thesis Abstractindex

COLOR REPRODUCTION OF FACIAL PATTERN AND ENDOSCOPIC IMAGE BASED ON COLOR APPEARANCE MODELS. December 1996. Francisco Hideki Imai, Graduate School of Science and Technology, Chiba University, JAPAN. Dedicated to my parents.

A dissertation submitted to the Graduate School of Science and Technology of Chiba University in partial fulfillment of the requirements for the degree of Doctor of Philosophy by Francisco Hideki Imai, December 1996.

Declaration: This is to certify that this work has been done by me and it has not been submitted elsewhere for the award of any degree or diploma. Signature of the student: Francisco Hideki Imai. Countersigned: Yoichi Miyake, Professor.

The undersigned have examined the dissertation entitled COLOR REPRODUCTION OF FACIAL PATTERN AND ENDOSCOPIC IMAGE BASED ON COLOR APPEARANCE MODELS presented by Francisco Hideki Imai, a candidate for the degree of Doctor of Philosophy, and hereby certify that it is worthy of acceptance. Advisor: Yoichi Miyake, Professor. Examining committee: Yoshizumi Yasuda, Professor; Toshio Honda, Professor; Hirohisa Yaguchi, Professor; Atsushi Imiya, Associate Professor.

Abstract: In recent years, many imaging systems have been developed, and it became increasingly important to exchange image data through the computer network. Therefore, it is required to reproduce color independently on each imaging device. In the studies of device independent color reproduction, colorimetric color reproduction has been done, namely color with same chromaticity or tristimulus values is reproduced. However, even if the tristimulus values are the same, color appearance is not always the same under different viewing conditions.

Medtronic Brand Color Chart

Medtronic Brand Color Chart. Medtronic Visual Identity System: Color Ratios. Navy Blue Medtronic Blue Cobalt Blue Charcoal Blue Gray Dark Gray Yellow Light Orange Gray Orange Medium Blue Sky Blue Light Blue Light Gray Pale Gray White Purple Green Turquoise. Primary Blue Color Palette 70%, Primary Neutral Color Palette 20%, Accent Color Palette 10%.

The Medtronic Brand Color Palette was created for use in all material. Please use the CMYK, RGB, HEX, and LAB values as often as possible. If you are not able to use the LAB values, you can use the Pantone equivalents, but be aware the color output will vary from the other four color breakdowns. If you need a spot color, the preference is for you to use the LAB values.

Primary Blue Color Palette

| Color | CMYK (C/M/Y/K) | RGB (R/G/B) | LAB (L/A/B) | Web/HEX | Pantone |
|---|---|---|---|---|---|
| Navy Blue | 100/94/47/43 | 0/30/70 | 15/2/-20 | #001E46 | 533 C |
| Medtronic Blue | 99/74/17/4 | 0/75/135 | 31/-2/-40 | #004B87 | 2154 C |
| Cobalt Blue | 81/35/0/0 | 0/133/202 | 52/-12/-45 | #0085CA | 2382 C |
| Medium Blue | 73/12/0/0 | 0/169/224 | 64/-23/-39 | #00A9E0 | 2191 C |
| Sky Blue | 55/4/4/0 | 113/197/232 | 75/-20/-26 | #71C5E8 | 297 C |
| Light Blue | 29/5/5/0 | 185/217/235 | 85/-9/-13 | #B9D9EB | 290 C |

Primary Neutral Color Palette

| Color | CMYK (C/M/Y/K) | RGB (R/G/B) | LAB (L/A/B) | Web/HEX | Pantone |
|---|---|---|---|---|---|
| Charcoal Gray | 0/0/0/… | 83/86/… | 36/0/… | #53565a | Cool Gray … |
| Blue Gray | 68/40/28/… | 91/127/… | 51/-9/… | #5B7F95 | 5415 … |
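The RGB and Web/HEX values in the chart describe the same 8-bit triplet in two notations, so one can be checked against the other. The following is a minimal sketch of that check for the six Primary Blue entries; the function name `rgb_to_hex` and the `chart` dictionary are illustrative and not part of the brand guide.

```python
def rgb_to_hex(r: int, g: int, b: int) -> str:
    """Format three 8-bit channel values as a #RRGGBB string."""
    for channel in (r, g, b):
        if not 0 <= channel <= 255:
            raise ValueError(f"channel out of range: {channel}")
    return f"#{r:02X}{g:02X}{b:02X}"


# RGB and Web/HEX pairs copied from the Primary Blue entries of the chart.
chart = {
    "Navy Blue": ((0, 30, 70), "#001E46"),
    "Medtronic Blue": ((0, 75, 135), "#004B87"),
    "Cobalt Blue": ((0, 133, 202), "#0085CA"),
    "Medium Blue": ((0, 169, 224), "#00A9E0"),
    "Sky Blue": ((113, 197, 232), "#71C5E8"),
    "Light Blue": ((185, 217, 235), "#B9D9EB"),
}

# Names whose listed HEX code does not match the listed RGB triplet.
mismatches = [
    name for name, (rgb, hex_code) in chart.items()
    if rgb_to_hex(*rgb) != hex_code
]
print(mismatches)
```

The CMYK, LAB, and Pantone values cannot be cross-checked this simply, because converting between them depends on color profiles and viewing conditions that the chart does not state.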

Psychophysical Determination of the Relevant Colours That Describe the Colour Palette of Paintings

Journal of Imaging, Article. Psychophysical Determination of the Relevant Colours That Describe the Colour Palette of Paintings. Juan Luis Nieves *, Juan Ojeda, Luis Gómez-Robledo and Javier Romero. Department of Optics, Faculty of Science, University of Granada, 18071 Granada, Spain; [email protected] (J.O.); [email protected] (L.G.-R.); [email protected] (J.R.) * Correspondence: [email protected]

Citation: Nieves, J.L.; Ojeda, J.; Gómez-Robledo, L.; Romero, J. Psychophysical Determination of the Relevant Colours That Describe the Colour Palette of Paintings.

Abstract: In an early study, the so-called “relevant colour” in a painting was heuristically introduced as a term to describe the number of colours that would stand out for an observer when just glancing at a painting. The purpose of this study is to analyse how observers determine the relevant colours by describing observers’ subjective impressions of the most representative colours in paintings and to provide a psychophysical backing for a related computational model we proposed in a previous work. This subjective impression is elicited by an efficient and optimal processing of the most representative colour instances in painting images. Our results suggest an average number of 21 subjective colours. This number is in close agreement with the computational number of relevant colours previously obtained and allows a reliable segmentation of colour images using a small number of colours without introducing any colour categorization. In addition, our results are in good agreement with the directions of colour preferences derived from an independent component analysis. We show that independent component analysis of the painting images yields directions of colour preference aligned with the relevant colours of these images. Following on from this analysis, the results suggest that hue colour components are efficiently distributed throughout a discrete number of directions and could be relevant instances to a priori describe the most representative colours that make up the

Digital Color Workflows and the HP Dreamcolor Lp2480zx Professional Display

Digital Color Workflows and the HP DreamColor LP2480zx Professional Display. Improving accuracy and predictability in color processing at the designer’s desk can increase productivity and improve quality of digital color projects in animation, game development, film/video post production, broadcast, product design, graphic arts and photography.

Introduction; Managing color; The property of color; The RGB color set; The CMYK color set; Color spaces; Gamuts; The digital workflow; Color profiles

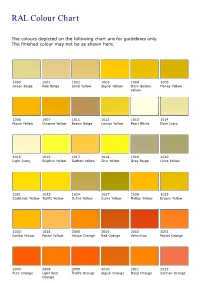

RAL Colour Chart

RAL Colour Chart The colours depicted on the following chart are for guidelines only. The finished colour may not be as shown here. 1000 1001 1002 1003 1004 1005 Green Beige Pale Beige Sand Yellow Signal Yellow Dark Golden Honey Yellow Yellow 1006 1007 1011 1012 1013 1014 Maize Yellow Chrome Yellow Brown Beige Lemon Yellow Pearl White Dark Ivory 1015 1016 1017 1018 1019 1020 Light Ivory Sulphur Yellow Saffron Yellow Zinc Yellow Grey Beige Olive Yellow 1021 1023 1024 1027 1028 1032 Cadmium Yellow Traffic Yellow Ochre Yellow Curry Yellow Mellon Yellow Broom Yellow 1033 1034 2000 2001 2002 2003 Dahlia Yellow Pastel Yellow Yellow Orange Red Orange Vermillion Pastel Orange 2004 2008 2009 2010 2011 2012 Pure Orange Light Red Traffic Orange Signal Orange Deep Orange Salmon Orange Orange 3000 3001 3002 3003 3004 3005 Flame Red RAL Signal Red Carmine Red Ruby Red Purple Red Wine Red 3007 3009 3011 3012 3013 3014 Black Red Oxide Red Brown Red Beige Red Tomato Red Antique Pink 3015 3016 3017 3018 3020 3022 Light Pink Coral Red Rose Strawberry Red Traffic Red Dark Salmon Red 3027 3031 4001 4002 4003 4004 Raspberry Red Orient Red Red Lilac Red Violet Heather Violet Claret Violet 4005 4006 4007 4008 4009 4010 Blue Lilac Traffic Purple Purple Violet Signal Violet Pastel Violet Telemagenta 5000 5001 5002 5003 5004 5005 Violet Blue Green Blue Ultramarine Blue dark Sapphire Black Blue Signal Blue Blue 5007 5008 5009 5010 5011 5012 Brilliant Blue Grey Blue Light Azure Blue Gentian Blue Steel Blue Light Blue 5013 5014 5015 5017 5018 5019 Dark Cobalt Blue -

Fast and Stable Color Balancing for Images and Augmented Reality

Fast and Stable Color Balancing for Images and Augmented Reality. Thomas Oskam (1,2), Alexander Hornung (1), Robert W. Sumner (1), Markus Gross (1,2). (1) Disney Research Zurich, (2) ETH Zurich.

Abstract: This paper addresses the problem of globally balancing colors between images. The input to our algorithm is a sparse set of desired color correspondences between a source and a target image. The global color space transformation problem is then solved by computing a smooth vector field in CIE Lab color space that maps the gamut of the source to that of the target. We employ normalized radial basis functions for which we compute optimized shape parameters based on the input images, allowing for more faithful and flexible color matching compared to existing RBF-, regression- or histogram-based techniques. Furthermore, we show how the basic per-image matching can be efficiently and robustly extended to the temporal domain using RANSAC-based correspondence classification. Besides interactive color balancing for images, these properties render our method extremely useful for automatic, consistent embedding of synthetic graphics in video, as required by applications such as augmented reality.

Figure 1. Two applications of our color balancing algorithm. Top: an underexposed image is balanced using only three user selected correspondences to a target image. Bottom: our extension for temporally stable color balancing enables seamless compositing in augmented reality applications by using known colors in the scene as constraints. (Panel labels: Source Image, Target Image, Color Balanced; Rendered Objects, Tracked Colors, balancing, Augmented Image.)

1. Introduction: … even for different scenes. With today’s tools this process requires considerable, cost-intensive manual efforts.
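To make the idea of a smooth vector field built from sparse color correspondences more concrete, here is a small sketch of normalized radial basis function interpolation in a three-dimensional color space. It is an illustration under stated assumptions, not the authors' implementation: the Gaussian kernel, the fixed shape parameter `eps` (the paper optimizes shape parameters per image), the helper names, and the sample Lab values are all hypothetical.

```python
import math


def normalized_basis(x, centers, eps):
    """Gaussian basis values at x, rescaled so they sum to 1."""
    phi = [math.exp(-(eps * math.dist(x, c)) ** 2) for c in centers]
    total = sum(phi)
    return [p / total for p in phi]


def solve(matrix, rhs):
    """Gauss-Jordan elimination with partial pivoting (rhs may have several columns)."""
    n = len(matrix)
    aug = [list(matrix[i]) + list(rhs[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for row in range(n):
            if row != col:
                f = aug[row][col]
                aug[row] = [v - f * pv for v, pv in zip(aug[row], aug[col])]
    return [row[n:] for row in aug]


def fit_weights(sources, targets, eps=0.05):
    """Find weights so that every source color is shifted exactly onto its target."""
    offsets = [[t - s for s, t in zip(src, tgt)] for src, tgt in zip(sources, targets)]
    basis = [normalized_basis(s, sources, eps) for s in sources]
    return solve(basis, offsets)


def apply_mapping(color, sources, weights, eps=0.05):
    """Shift a color by the interpolated offset vector at its position."""
    basis = normalized_basis(color, sources, eps)
    return [c + sum(b * w[k] for b, w in zip(basis, weights))
            for k, c in enumerate(color)]


# Hypothetical Lab correspondences (source color -> desired target color).
sources = [[20.0, 0.0, 0.0], [50.0, 10.0, -10.0], [80.0, -5.0, 20.0]]
targets = [[30.0, 2.0, 1.0], [60.0, 8.0, -6.0], [85.0, -3.0, 18.0]]

weights = fit_weights(sources, targets)
mapped = apply_mapping(sources[0], sources, weights)   # lands on targets[0]
between = apply_mapping([35.0, 5.0, -5.0], sources, weights)
print(mapped, between)
```

Because the basis values are normalized to sum to one, a color far from every correspondence still receives a blend of the known offsets instead of falling back to zero correction. With this fixed `eps`, colors extremely far from all centers would underflow the Gaussian, which a production version would need to guard against.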

Sensory and Instrument-Measured Ground Chicken Meat Color

Sensory and Instrument-Measured Ground Chicken Meat Color. C. L. SANDUSKY1 and J. L. HEATH2, Department of Animal and Avian Sciences, University of Maryland, College Park, Maryland 20742.

ABSTRACT: Instrument values were compared to sensory perception of ground breast and thigh meat color. Different patty thicknesses (0.5, 1.5, and 2.0) and background colors (white, pink, green, and gray), previously found to cause differences in instrument-measured color, were used. Sensory descriptive analysis scores for lightness, hue, and chroma were compared to instrument-measured L* values, hue, and chroma. Sensory ordinal rank scores for lightness, redness, and yellowness were compared to instrument-generated L*, a*, and b* values. Sensory descriptive analysis scores and instrument values agreed in two of six comparisons using breast and thigh patties. They agreed when thigh hue and chroma were measured. Sensory ordinal rank scores were different from instrument color values in the ability to detect color changes caused by white, pink, … scores were compared using each of the backgrounds. The sensory panel did not detect differences in yellowness found by the instrument when samples on white and pink backgrounds were compared to samples on green and gray backgrounds. A majority of panelists (84 of 85) preferred samples on white or pink backgrounds. Red color of breast patties was associated with freshness.

Reflective lighting was compared to transmission lighting using patties of different thicknesses. Sensory evaluation detected no differences in lightness due to breast patty thickness when reflective lighting was used. Increased thickness caused the patties to appear darker when transmission lighting was used. Decreased transmission lighting penetrating the sample made the patties appear more red.
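The abstract refers both to L*, a*, b* values and to instrument-measured hue and chroma. In CIELAB these are related by the standard definitions C*ab = sqrt(a*² + b*²) and h_ab = atan2(b*, a*). The sketch below applies those definitions; whether the study computed hue and chroma in exactly this way is an assumption, and the sample reading is hypothetical.

```python
import math


def chroma_and_hue(a_star: float, b_star: float) -> tuple[float, float]:
    """Return (C*ab, h_ab in degrees, 0 to 360) from CIELAB a* and b*."""
    chroma = math.hypot(a_star, b_star)
    hue_deg = math.degrees(math.atan2(b_star, a_star)) % 360.0
    return chroma, hue_deg


# Hypothetical reading, not a value from the study.
chroma, hue = chroma_and_hue(3.0, 4.0)
print(round(chroma, 2), round(hue, 2))
```

Using `atan2` instead of a plain arctangent of b*/a* keeps the hue angle in the correct quadrant when a* is negative or zero.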

A Thesis Presented to Faculty of Alfred University PHOTOCHROMISM in RARE-EARTH OXIDE GLASSES by Charles H. Bellows in Partial Fu

A Thesis Presented to Faculty of Alfred University. PHOTOCHROMISM IN RARE-EARTH OXIDE GLASSES, by Charles H. Bellows. In Partial Fulfillment of the Requirements for The Alfred University Honors Program, May 2016. Under the Supervision of: Chair: Alexis G. Clare, Ph.D. Committee Members: Danielle D. Gagne, Ph.D.; Matthew M. Hall, Ph.D.

SUMMARY: The following thesis was performed, in part, to provide glass artists with a succinct listing of colors that may be achieved by lighting rare-earth oxide glasses in a variety of sources. While examined through scientific experimentation, the hope is that the information enclosed will allow artists new opportunities for creative experimentation.

Introduction: Oxides of transition and rare-earth metals can produce a multitude of colors in glass through a process called doping. When doping, the powdered oxides are mixed with premade pieces of glass called frit, or with glass-forming raw materials. When melted together, ions from the oxides insert themselves into the glass, imparting a variety of properties including color. The color is produced when the electrons within the ions move between energy levels, releasing energy. The amount of energy released equates to a specific wavelength, which in turn determines the color emitted. Because the arrangement of electron energy levels is different for rare-earth ions compared to transition metal ions, some interesting color effects can arise. Some glasses doped with rare-earth oxides fluoresce under a UV “black light”, while others can express photochromic properties. Photochromism, simply put, is the apparent color change of an object as a function of light; similar to transition sunglasses.
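The statement that an amount of energy equates to a specific wavelength follows from the photon relation E = hc/λ. As a brief worked sketch, the function below converts an energy difference in electronvolts into a wavelength in nanometres using the exact SI values of the constants; the 2.0 eV example gap is hypothetical and does not come from the thesis.

```python
PLANCK_J_S = 6.62607015e-34        # Planck constant, J*s (exact SI value)
LIGHT_SPEED_M_S = 299_792_458.0    # speed of light in vacuum, m/s (exact SI value)
JOULES_PER_EV = 1.602176634e-19    # one electronvolt in joules (exact SI value)


def wavelength_nm(energy_ev: float) -> float:
    """Wavelength in nanometres of a photon with the given energy in eV."""
    if energy_ev <= 0:
        raise ValueError("energy must be positive")
    energy_j = energy_ev * JOULES_PER_EV
    return PLANCK_J_S * LIGHT_SPEED_M_S / energy_j * 1e9


# Hypothetical 2.0 eV transition: roughly 620 nm, in the orange-red region.
print(round(wavelength_nm(2.0), 1))
```

Larger energy gaps give shorter wavelengths, which is why a gap near 3 eV corresponds to violet light (about 413 nm) while a gap near 2 eV corresponds to orange-red.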

Hiding Color Watermarks in Halftone Images Using Maximum-Similarity

Signal Processing: Image Communication 48 (2016) 1–11. Hiding color watermarks in halftone images using maximum-similarity binary patterns. Pedro Garcia Freitas (a,*), Mylène C.Q. Farias (b), Aletéia P.F. Araújo (a). (a) Department of Computer Science, University of Brasília (UnB), Brasília, Brazil; (b) Department of Electrical Engineering, University of Brasília (UnB), Brasília, Brazil.

Article history: Received 3 June 2016; received in revised form 25 August 2016; accepted 25 August 2016; available online 26 August 2016.

Keywords: Color embedding; Halftone; Color restoration; Watermarking; Enhancement.

Abstract: This paper presents a halftoning-based watermarking method that enables the embedding of a color image into binary black-and-white images. To maintain the quality of halftone images, the method maps watermarks to halftone channels using homogeneous dot patterns. These patterns use a different binary texture arrangement to embed the watermark. To prevent a degradation of the host image, a maximization problem is solved to reduce the associated noise. The objective function of this maximization problem is the binary similarity measure between the original binary halftone and a set of randomly generated patterns. This optimization problem needs to be solved for each dot pattern, resulting in processing overhead and a long running time. To overcome this restriction, parallel computing techniques are used to decrease the processing time. More specifically, the method is tested using a CUDA-based parallel implementation, running on GPUs. The proposed technique produces results with high visual quality and acceptable processing time.
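The maximization step described in the abstract can be pictured as picking, from a set of randomly generated binary patterns, the one most similar to the original halftone block. The sketch below shows that selection for a single block. It is only an illustration under several assumptions: the similarity measure (fraction of matching pixels), the fixed dot count per pattern, the candidate count, and all names are hypothetical and are not taken from the paper.

```python
import random


def similarity(a, b):
    """Fraction of positions where two equal-length binary blocks agree."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def best_pattern(host_block, dots, candidates=200, seed=0):
    """Among random patterns with `dots` ones, return the one closest to host_block."""
    rng = random.Random(seed)
    n = len(host_block)
    best, best_score = None, -1.0
    for _ in range(candidates):
        on = set(rng.sample(range(n), dots))
        pattern = [1 if i in on else 0 for i in range(n)]
        score = similarity(host_block, pattern)
        if score > best_score:
            best, best_score = pattern, score
    return best, best_score


# Hypothetical 3x3 halftone block, flattened row by row.
host = [1, 0, 1, 0, 0, 1, 0, 0, 1]
pattern, score = best_pattern(host, dots=4)
print(pattern, score)
```

Each block's search is independent of every other block's, which is consistent with the abstract's remark that the per-pattern optimization is a natural candidate for a parallel GPU implementation.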