Enabling Rootless Linux Containers in Multi-User Environments: the Udocker Tool

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Soluciones Para Entornos HPC

Soluciones para entornos HPC (Solutions for HPC Environments). Dr. Abel Francisco Paz Gallardo, IT Manager / Project Leader @ CETA-Ciemat. V Jornadas de Supercomputación y Avances en Tecnología, CETA-Ciemat, November 2012. Extremadura Research Center for Advanced Technologies. Contents: 1. HPC: What? How? 2. Computing (GPGPU, UMA/NUMA, etc.) 3. Storage in HPC 4. Resource management. 1. HPC: What? How? What? HPC stands for High Performance Computing; its main goal is solving complex problems. How? Three fundamental pillars: processing (compute), storage, and resource management. HPC = compute + storage + resource management. 2. Computing (GPGPU, NUMA, etc.) What is a GPU? First search result in 2006: "Gas Particulate Unit"? GPU = Graphics Processing Unit. The question is: if a GPU in a video game processes thousands of polygons, textures, and shadows in real time, why not use this technology for data processing?

GNU Guix Cookbook Tutorials and Examples for Using the GNU Guix Functional Package Manager

GNU Guix Cookbook: Tutorials and examples for using the GNU Guix Functional Package Manager. The GNU Guix Developers. Copyright © 2019 Ricardo Wurmus. Copyright © 2019 Efraim Flashner. Copyright © 2019 Pierre Neidhardt. Copyright © 2020 Oleg Pykhalov. Copyright © 2020 Matthew Brooks. Copyright © 2020 Marcin Karpezo. Copyright © 2020 Brice Waegeneire. Copyright © 2020 André Batista. Copyright © 2020 Christine Lemmer-Webber. Copyright © 2021 Joshua Branson. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License". Table of Contents: GNU Guix Cookbook; 1 Scheme tutorials; 1.1 A Scheme Crash Course; 2 Packaging; 2.1 Packaging Tutorial; 2.1.1 A "Hello World" package; 2.1.2 Setup; 2.1.2.1 Local file; 2.1.2.2 'GUIX_PACKAGE_PATH'; 2.1.2.3 Guix channels; 2.1.2.4 Direct checkout hacking; 2.1.3 Extended example

Bash Guide for Beginners

Bash Guide for Beginners. Machtelt Garrels, Garrels BVBA <tille wants no spam _at_ garrels dot be>. Version 1.11, last updated 20081227 Edition. Table of Contents: Introduction: 1. Why this guide? 2. Who should read this book? 3. New versions, translations and availability 4. Revision History 5. Contributions 6. Feedback 7. Copyright information 8. What do you need? 9. Conventions used in this

Functional Package and Configuration Management with GNU Guix

Functional Package and Configuration Management with GNU Guix. David Thompson, Wednesday, January 20th, 2016. About me: GNU project volunteer; GNU Guile user and contributor since 2012; GNU Guix contributor since 2013; day job: Ruby + JavaScript web development / "DevOps". Overview: • Problems with application packaging and deployment • Intro to functional package and configuration management • Towards the future • How you can help. User autonomy and control: It is becoming increasingly difficult to have control over your own computing: • GNU/Linux package managers not meeting user needs • Self-hosting web applications requires too much time and effort • Growing number of projects recommend installation via curl | sudo bash (see http://curlpipesh.tumblr.com/) or otherwise avoid using system package managers • Users unable to verify that a given binary corresponds to the source code. "Debian and other distributions are going to be that thing you run Docker on, little more." (from "ownCloud and distribution packaging", http://lwn.net/Articles/670566/) This is very bad for desktop users and system administrators alike. We must regain control! What's wrong with Apt/Yum/Pacman/etc.? Global state (/usr) that prevents multiple versions of a package from coexisting. Non-atomic installation, removal, upgrade of software. No way to roll back. Nondeterministic package builds and maintainer-uploaded binaries (though this is changing!). Reliance on pre-built binaries provided by a single point of trust. Requires superuser privileges. The problem is bigger: Proliferation of language-specific package managers and binary bundles that complicate secure system maintenance.

Introduction to Shell Programming Using Bash Part I

Introduction to shell programming using bash, Part I. Deniz Savas and Michael Griffiths, 2005-2011, Corporate Information and Computing Services, The University of Sheffield. Presentation Outline • Introduction • Why use shell programs • Basics of shell programming • Using variables and parameters • User input during shell script execution • Arithmetical operations on shell variables • Aliases • Debugging shell scripts • References. Introduction • What is 'shell'? • Why write shell programs? • Types of shell. What is 'shell'? • Provides an interface to the UNIX operating system • It is a command interpreter – Built on top of the kernel – Enables users to run services provided by the UNIX OS • In its simplest form, a series of commands in a file is a shell program that saves having to retype commands to perform common tasks. • Shell provides a secure interface between the user and the 'kernel' of the operating system. Why write shell programs? • Run tasks customised for different systems. A variety of environment variables, such as the operating system version and type, can be detected within a script and the necessary action taken to enable correct operation of a program. • Create the primary user interface for a variety of programming tasks, for example to start up a package with a selection of options. • Write programs for controlling the routinely performed jobs run on a system, for example to take backups when the system is idle. • Write job scripts for submission to a job scheduler such as the Sun Grid Engine, for example to run your own programs in batch mode. Types of Unix shells • sh Bourne Shell (original shell) (Stephen Bourne of AT&T) • csh C-Shell (C-like syntax) (Bill Joy of Univ.
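The point in the slides above about detecting the operating system type inside a script can be illustrated with a short bash sketch. It is only an illustration, not taken from the slides: the variable names `os_name`, `os_kind` and `backup_dir`, and the two directory paths, are hypothetical choices made for this example.

```shell
#!/usr/bin/env bash
# Detect the operating system type and branch on it.
# "uname -s" prints the kernel name, for example Linux or Darwin.
os_name="$(uname -s)"

case "$os_name" in
    Linux)  os_kind="linux" ;;
    Darwin) os_kind="macos" ;;
    *)      os_kind="other" ;;
esac

# backup_dir is a hypothetical per-platform setting, used only to show
# how the detected type can change what the script does next.
if [ "$os_kind" = "macos" ]; then
    backup_dir="$HOME/Library/Backups"
else
    backup_dir="$HOME/backups"
fi

echo "Detected $os_name ($os_kind); backups would go to $backup_dir"
```

The script only prints its decision; it does not create or modify any directory.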

Using Octave and Sagemath on Taito

Using Octave and SageMath on Taito. Sampo Sillanpää, 17 October 2017. CSC – Finnish research, education, culture and public administration ICT knowledge center.

Octave ● Powerful mathematics-oriented syntax with built-in plotting and visualization tools. ● Free software, runs on GNU/Linux, macOS, BSD, and Windows. ● Drop-in compatible with many Matlab scripts. ● https://www.gnu.org/software/octave/

SageMath ● SageMath is a free open-source mathematics software system licensed under the GPL. ● Builds on top of many existing open-source packages: NumPy, SciPy, matplotlib, Sympy, Maxima, GAP, FLINT, R and many more. ● http://www.sagemath.org/

Octave on Taito ● Latest version 4.2.1. Load the module, then start Octave with or without the GUI:

    module load octave-env
    octave
    octave --no-gui

● Interactive sessions on Taito-shell via NoMachine client: https://research.csc.fi/-/nomachine

Octave Forge ● A central location for development of packages for GNU Octave. ● Packages can be installed on Taito:

    module load octave-env
    octave --no-gui
    octave:> pkg install -forge package_name
    octave:> pkg load package_name

SageMath on Taito ● Installed version 7.6:

    module load sagemath
    sage

● Interactive sessions on Taito-shell via NoMachine client. ● Browser-based notebook: sage: notebook()

Octave Batch Jobs

    #!/bin/bash -l
    #mytest.sh
    #SBATCH --constraint="snb|hsw"
    #SBATCH -o output.out
    #SBATCH -e stderr.err
    #SBATCH -p serial
    #SBATCH -n 1
    #SBATCH -t 00:05:00
    #SBATCH --mem-per-cpu=1000
    module load octave-env
    octave --no-gui /wrk/user_name/example.m
    used_slurm_resources.bash

    [user@taito-login1]$ sbatch mytest.sh

SageMath Batch Jobs

    #!/bin/bash -l
    #mytest.sh
    #SBATCH --constraint="snb|hsw"
    #SBATCH -o output.out
    #SBATCH -e stderr.err
    #SBATCH -p serial
    #SBATCH -n 1
    #SBATCH -t 00:05:00
    #SBATCH --mem-per-cpu=1000
    module load sagemath
    sage /wrk/user_name/example.sage
    used_slurm_resources.bash

    [user@taito-login1]$ sbatch mytest.sh

Instructions and Documentation ● Octave – https://research.csc.fi/-/octave – https://www.gnu.org/software/octave/doc/interpreter/ ● SageMath – https://research.csc.fi/-/sagemath – http://doc.sagemath.org/

BASH Programming - Introduction HOW-TO

BASH Programming - Introduction HOW-TO, by Mike G (mikkey at dynamo.com.ar). Table of Contents: 1. Introduction 2. Very simple Scripts 3. All about redirection 4. Pipes 5. Variables 6. Conditionals 7. Loops for, while and until 8. Functions

Application-Level Fault Tolerance and Resilience in HPC Applications

Doctoral Thesis: Application-level Fault Tolerance and Resilience in HPC Applications. Nuria Losada, July 2018. PhD Advisors: María J. Martín, Patricia González. PhD Program in Information Technology Research. Dra. María José Martín Santamaría, Associate Professor (Profesora Titular de Universidad), Dpto. de Ingeniería de Computadores, Universidade da Coruña, and Dra. Patricia González Gómez, Associate Professor (Profesora Titular de Universidad), Dpto. de Ingeniería de Computadores, Universidade da Coruña, CERTIFY that the dissertation entitled "Application-level Fault Tolerance and Resilience in HPC Applications" was carried out by Ms. Nuria Losada López-Valcárcel under our supervision in the Departamento de Ingeniería de Computadores of the Universidade da Coruña, and constitutes the Doctoral Thesis that she submits to qualify for the degree of Doctor in Computer Engineering with the International Doctor Mention. A Coruña, 26 July 2018. Signed: María José Martín Santamaría, Thesis Advisor. Signed: Patricia González Gómez, Thesis Advisor. Signed: Nuria Losada López-Valcárcel, Thesis Author. To all of you who made it possible. Acknowledgments: I would especially like to thank my advisors, María and Patricia, for all their support, hard work, and all the opportunities they've handed me. I consider myself very lucky to have worked with them during these years. I would also like to thank Gabriel and Basilio for their collaboration and valuable contributions to the development of this work. I would like to say thanks to all my past and present colleagues in the Computer Architecture Group and in the Faculty of Informatics for their fellowship, support, and all the coffee breaks and dinners we held together.

Introduction to BASH: Part II

Introduction to BASH: Part II. By Michael Stobb, University of California, Merced, February 17th, 2017. Quick Review ● Linux is a very popular operating system for scientific computing ● The command line interface (CLI) is ubiquitous and efficient ● A "shell" is a program that interprets and executes a user's commands ○ BASH: Bourne Again SHell (by far the most popular) ○ CSH: C SHell ○ ZSH: Z SHell ● Does everyone have access to a shell? Quick Review: Basic Commands ● pwd ○ 'print working directory', or where you currently are ● cd ○ 'change directory' in the filesystem, or where you want to go ● ls ○ 'list' the contents of the directory, or look at what is inside ● mkdir ○ 'make directory', or make a new folder ● cp ○ 'copy' a file ● mv ○ 'move' a file ● rm ○ 'remove' a file (be careful, usually no undos!) ● echo ○ Return (like an echo) the input to the screen ● Autocomplete! Download Some Example Files 1) Make a new folder, perhaps 'bash_examples', then cd into it. 2) Type the following command (note the capital 'O'): wget "goo.gl/oBFKrL" -O tutorial.tar 3) Extract the tar file with: tar -xf tutorial.tar 4) Delete the old tar file with: rm tutorial.tar 5) cd into the new directory 'tutorial'. Input/Output Redirection ● Typically we give input to a command as follows: ○ cat file.txt ● Make the input explicit by using "<" ○ cat < file.txt ● Change the output by using ">" ○ cat < file.txt > output.txt ● Use the output of one command as the input of another ○ cat < file.txt | less BASH Utilities ● BASH has some awesome utilities ○ External commands not
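The redirection operators listed in the slides above can be tried end to end with a small script. This is a minimal sketch under stated assumptions: the scratch directory created with `mktemp` and the three-line file contents are made up for the example, `file.txt` and `output.txt` follow the names used in the slides, and `wc -l` stands in for the interactive `less` so that the script can run unattended.

```shell
#!/usr/bin/env bash
# Work in a scratch directory so nothing in the current folder is touched.
workdir="$(mktemp -d)"
cd "$workdir" || exit 1

# Create a small input file; ">" sends printf's output into file.txt.
printf 'alpha\nbeta\ngamma\n' > file.txt

# "<" feeds file.txt to cat's standard input,
# ">" writes cat's standard output to output.txt.
cat < file.txt > output.txt

# "|" connects the output of one command to the input of the next.
# tr strips the padding that some wc implementations add.
line_count="$(cat < file.txt | wc -l | tr -d ' ')"

echo "output.txt is a copy of file.txt with $line_count lines"
```

Note that `>` overwrites an existing file; `>>` appends to it instead.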

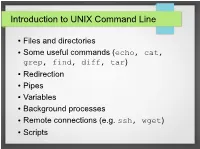

Introduction to UNIX Command Line

Introduction to UNIX Command Line ● Files and directories ● Some useful commands (echo, cat, grep, find, diff, tar) ● Redirection ● Pipes ● Variables ● Background processes ● Remote connections (e.g. ssh, wget) ● Scripts

The Command Line ● What is it? ● An interface to UNIX ● You type commands, things happen ● Also referred to as a "shell" ● We'll use the bash shell; check you're using it by typing (you'll see what this means later): ● echo $SHELL ● If it doesn't say "bash", then type bash to get into the bash shell

Files and Directories (directory tree figure; entries shown: / home var usr mcuser abenson drmentor science catvideos stuff data code report M51.fits simulate.c analyze.py report.tex)

Files and Directories ● Get a pre-made set of directories and files to work with ● We'll talk about what these commands do later ● The "$" is the command prompt (yours might differ). Type what's listed after it, then press enter.

    $ wget http://bit.ly/1TXIZSJ -O playground.tar
    $ tar xvf playground.tar

Files and directories

    $ pwd
    /home/abenson
    $ cd playground
    $ pwd
    /home/abenson/playground
    $ ls
    animals documents science
    $ mkdir mystuff
    $ ls
    animals documents mystuff science
    $ cd animals/mammals
    $ ls
    badger.txt porcupine.txt
    $ ls -l
    total 8
    -rw-r--r--. 1 abenson abenson 1944 May 31 18:03 badger.txt
    -rw-r--r--. 1 abenson abenson 1347 May 31 18:05 porcupine.txt

Files and directories: "Present Working Directory"

    $ pwd
    /home/abenson

Shows the full path of your current location in the filesystem.

Functional Package Management with Guix

Functional Package Management with Guix. Ludovic Courtès, Bordeaux, France.

ABSTRACT: We describe the design and implementation of GNU Guix, a purely functional package manager designed to support a complete GNU/Linux distribution. Guix supports transactional upgrades and roll-backs, unprivileged package management, per-user profiles, and garbage collection. It builds upon the low-level build and deployment layer of the Nix package manager. Guix uses Scheme as its programming interface. In particular, we devise an embedded domain-specific language (EDSL) to describe and compose packages. We demonstrate how it allows us to benefit from the host general-purpose programming language while not compromising on expressiveness. Second, we show the use of Scheme to write build programs, leading to a "two-tier" programming system.

Categories and Subject Descriptors: D.4.5 [Operating Systems]: Reliability; D.4.5 [Operating Systems]: System Programs and Utilities; D.1.1 [Software]: Applicative (Functional) Programming

1. INTRODUCTION: GNU Guix is a purely functional package manager for the GNU system [20], and in particular GNU/Linux. Package management consists in all the activities that relate to building packages from source, honoring the build-time and run-time dependencies on packages, installing, removing, and upgrading packages in user environments. In addition to these standard features, Guix supports transactional upgrades and roll-backs, unprivileged package management, per-user profiles, and garbage collection. Guix comes with a distribution of user-land free software packages. Guix seeks to empower users in several ways: by offering the uncommon features listed above, by providing the tools that allow users to formally correlate a binary package and the "recipes" and source code that led to it (furthering the spirit of the GNU General Public License), by allowing them to customize the distribution, and by lowering the barrier to entry in distribution development.

Enabling the Deployment of Virtual Clusters on the VCOC Experiment of the Bonfire Federated Cloud

CLOUD COMPUTING 2012: The Third International Conference on Cloud Computing, GRIDs, and Virtualization. Enabling the Deployment of Virtual Clusters on the VCOC Experiment of the BonFIRE Federated Cloud. Raul Valin, Luis M. Carril, J. Carlos Mouriño, Carmen Cotelo, Andrés Gómez, and Carlos Fernández, Supercomputing Centre of Galicia (CESGA), Santiago de Compostela, Spain.

Abstract: The BonFIRE project has developed a federated cloud that supports experimentation and testing of innovative scenarios from the Internet of Services research community. Virtual Clusters on federated Cloud sites (VCOC) is one of the supported experiments of the BonFIRE Project whose main objective is to evaluate the feasibility of using multiple Cloud environments to deploy services which need the allocation of a large pool of CPUs or virtual machines to a single user (as High Throughput Computing or High Performance Computing). In this work, we describe the experiment agent, a tool developed on the VCOC experiment to facilitate the automatic deployment and monitoring of virtual clusters on the BonFIRE federated cloud. This tool was employed in the

[...] OpenCirrus. BonFIRE offers an experimenter control of available resources. It supports dynamically creating, updating, reading and deleting resources throughout the lifetime of an experiment. Compute resources can be configured with application-specific contextualisation information that can provide important configuration information to the virtual machine (VM); this information is available to software applications after the machine is started. BonFIRE also supports elasticity within an experiment, i.e., dynamically create, update and destroy resources from a running node of the experiment, including cross-testbed elasticity.