Petter Sandvik Formal Modelling for Digital Media Distribution

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

New York University Annual Survey of American Law

New York University Annual Survey of American Law, Volume 69, Issue 2 (2013). ARTICLES: Toward Adequacy (Sarah L. Brinton); Should Evidence of Settlement Negotiations Affect Attorneys’ Fees Awards? (Seth Katsuya Endo); A Look Inside the Butler’s Cupboard: How the External World Reveals Internal State of Mind in Legal Narratives (Cathren Koehlert-Page). NOTES: Expertise and Immigration Administration: When Does Chevron Apply to BIA Interpretations of the INA? (Paul Chaffin); What Motivates Illegal File Sharing? Empirical and Theoretical Approaches (Joseph M. Eno). New York University School of Law, Arthur T. Vanderbilt Hall, Washington Square, New York City. New York University Annual Survey of American Law is in its seventy-first year of publication. L.C. Cat. Card No.: 46-30523. ISSN 0066-4413. All Rights Reserved. New York University Annual Survey of American Law is published quarterly at 110 West 3rd Street, New York, New York 10012. -

Cisco SCA BB Protocol Reference Guide

Cisco Service Control Application for Broadband Protocol Reference Guide Protocol Pack #60 August 02, 2018 Cisco Systems, Inc. www.cisco.com Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS. THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY. The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB’s public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California. NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS” WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. -

Apache TOMCAT

LVM Data Migration • XU4 Fan Control • OSX USB-UART interfacing. Year Two Issue #22 Oct 2015 ODROID Magazine. Apache TOMCAT: Your web server and servlet container running on the world’s most power-efficient computing platform. Plex Media Server. Linux Gaming: Emulate Sega’s last console, the Dreamcast. What we stand for. We strive to symbolize the edge of technology, future, youth, humanity, and engineering. Our philosophy is based on Developers. And our efforts to keep close relationships with developers around the world. For that, you can always count on having the quality and sophistication that is the hallmark of our products. Simple, modern and distinctive. So you can have the best to accomplish everything you can dream of. We are now shipping the ODROID-U3 device to EU countries! Come and visit our online store to shop! Address: Max-Pollin-Straße 1 85104 Pförring Germany Telephone & Fax phone: +49 (0) 8403 / 920-920 email: [email protected] Our ODROID products can be found at http://bit.ly/1tXPXwe EDITORIAL This month, we feature two extremely useful servers that run very well on the ODROID platform: Apache Tomcat and Plex Media Server. Apache Tomcat is an open-source web server and servlet container that provides a “pure Java” HTTP web server environment for Java code to run in. It allows you to write complex web applications in Java without needing to learn a specific server language such as .NET or PHP. Plex Media Server organizes your video, music, and photo collections and streams them to all of your screens. -

Biographies of Computer Scientists

1 Charles Babbage 26 December 1791 (London, UK) – 18 October 1871 (London, UK) Life and Times Charles Babbage was born into a wealthy family, and started his mathematics education very early. By 1811, when he went to Trinity College, Cambridge, he found that he knew more mathematics than his professors. He moved to Peterhouse, Cambridge, from where he graduated in 1814. However, rather than come second to his friend Herschel in the final examinations, Babbage decided not to compete for an honors degree. In 1815 he co-founded the Analytical Society, dedicated to studying continental reforms of Newton's formulation of “The Calculus”. He was one of the founders of the Astronomical Society in 1820. In 1821 Babbage started work on his Difference Engine, designed to accurately compile tables. Babbage received government funding to construct an actual machine, but the government stopped the funding in 1832 when it became clear that its construction was running well over budget. George Schuetz completed a machine based on the design of the Difference Engine in 1854. On completing the design of the Difference Engine, Babbage started work on the Analytical Engine, capable of more general symbolic manipulations. The design of the Analytical Engine was complete in 1856, but a complete machine would not be constructed for over a century. Babbage's interests were wide. It is claimed that he invented cow-catchers for railway engines, the uniform postal rate, and a means of recognizing lighthouses. He was also interested in locks and ciphers. He was politically active and wrote many treatises. One of the more famous proposed the banning of street musicians. -

13 Cool Things You Can Do with Google Chromecast Chromecast

13 Cool Things You Can Do With Google Chromecast. We bet you don't even know half of these. Google Chromecast is a popular streaming dongle that makes for an easy and affordable way of throwing content from your smartphone, tablet, or computer to your television wirelessly. There’s so much more you can do with it than just streaming Netflix, Hulu, Spotify, HBO and more from your mobile device and computer to your TV. Our guide on How Does Google Chromecast Work explains more about what the device can do. The seemingly simple, ultraportable plug and play device has a few tricks up its sleeve that aren’t immediately apparent. Here’s a roundup of some of the hidden Chromecast tips and tricks you may not know that can make casting more magical. Chromecast Tips and Tricks You Didn’t Know: 1. Enable Guest Mode 2. Make presentations 3. Play plenty of games 4. Cast videos using your voice 5. Stream live feeds from security cameras on your TV 6. Watch Amazon Prime Video on your TV 7. Create a casting queue 8. Cast Plex 9. Plug in your headphones 10. Share VR headset view with others 11. Cast on the go 12. Power on your TV 13. Get free movies and other perks. Enable Guest Mode: If you have guests over at your home, whether you’re hosting a family reunion or having a party, you can let them cast their favorite music or TV shows onto your TV without giving out your WiFi password. To do this, go to the Chromecast settings and enable Guest Mode. -

Digital Fountain Erasure-Recovery in Bittorrent

UNIVERSITÀ DEGLI STUDI DI BERGAMO, Facoltà di Ingegneria. Master's degree program (Corso di Laurea Specialistica) in Computer Engineering, Class no. 35/S – Information Systems. Digital Fountain Erasure Recovery in BitTorrent: integration and security issues. Advisor: Prof. Stefano Paraboschi. Co-advisor: Prof. Andrea Lorenzo Vitali. Master's thesis by Michele BOLOGNA, student ID no. 56108. Academic year 2007 / 2008. This thesis has been written, typeset and prepared using LaTeX 2ε. Printed on December 5, 2008. To my family. “Would you tell me, please, which way I ought to go from here?” “That depends a good deal on where you want to get to,” said the Cat. “I don’t much care where —” said Alice. “Then it doesn’t matter which way you go,” said the Cat. “— so long as I get somewhere,” Alice added as an explanation. “Oh, you’re sure to do that,” said the Cat, “if you only walk enough.” Lewis Carroll, Alice in Wonderland. Acknowledgments (translated from Italian): Many people helped me while I carried out this work. My first thanks go to Professors Stefano Paraboschi and Andrea Vitali for their availability, expertise, advice, patience, and the technical help they were able to give me. Thank you for giving me most of the ideas that later went into my thesis. Heartfelt thanks also to Andrea Rota and Ruben Villa for the help and clarifications they kindly provided. I would like to thank STMicroelectronics, and in particular the Advanced System Technology group, for offering me the facilities, the space, and everything I needed to make the most of my internship. -

Roamio ® Series Viewer's Guide

Viewer’s Guide. This Viewer’s Guide describes features of the TiVo® service running on the TiVo Roamio™ family of Digital Video Recorders. Your use of this product is subject to TiVo's User Agreement and Privacy Policy. Visit www.tivo.com/legal for the latest versions. Patented. U.S. pat. nos. at www.tivo.com/patents. © 2016 TiVo Inc. Reproduction in whole or in part without written permission is prohibited. All rights reserved. TiVo, TiVo Roamio, the TiVo logo, QuickMode, OnePass, Season Pass, SkipMode, TiVo Central, WishList, the Jump logo, the Instant Replay logo, the Thumbs Up logo, the Thumbs Down logo, Overtime Scheduler, Overlap Protection, Swivel, the TiVo Circle logo, and the sounds used by the TiVo service are trademarks or registered trademarks of TiVo Inc. or its subsidiaries worldwide, 2160 Gold Street, P.O. Box 2160, San Jose, CA 95002-2160. Amazon, Amazon.com, and the Amazon.com logo are registered trademarks of Amazon.com, Inc. or its affiliates. CableCARD is a trademark of Cable Television Laboratories, Inc. Manufactured under license from Dolby Laboratories. “Dolby” and the Double-D symbol are trademarks of Dolby Laboratories. HDMI, the HDMI logo, and High-Definition Multimedia Interface are trademarks or registered trademarks of HDMI Licensing LLC in the United States and other countries. HBO GO is a registered trademark of Home Box Office, Inc. Hulu is a registered trademark of Hulu, LLC. MoCA is a registered trademark of Multimedia over Coax Alliance. Netflix is a registered trademark of Netflix, Inc. Vudu is a registered trademark of Vudu, Inc. YouTube is a trademark of Google Inc. -

Antifragile White Paper Draft 3.Pages

Piracy as an Antifragile System. tech WP 01/2015, July 2015. Executive Summary: Attacks on the piracy economy have thus far been unsuccessful. The piracy community has not only shown resilience to these attacks, but has also become more sophisticated and resilient as a result of them. Systems that show this characteristic response to external stressors are defined as antifragile. Traditional centralized attacks are not only ineffective against such systems, but are counter-productive. These systems are not impervious to attacks, however. Decentralized attacks that warp the connections between nodes destroy the system from within. Some system-based attacks on piracy have been attempted, but lacked the technology required to be effective. A new technology, CustosTech, built on the Bitcoin blockchain, attacks the system by turning pirates against each other. The technology enables and incentivizes anyone in the world to anonymously act as an informant, disclosing the identity of the first infringer – the pirate uploader. This internal decentralized attack breaks the incentive structures governing the uploader-downloader relationship, and thus provides a sustainable deterrent to piracy. Table of Contents: Introduction to Antifragility; Features of Antifragile Systems; Piracy as an Antifragile System; Sophisticated Pirates; Popcorn Time; Attacking Antifragile Systems; Attacking Piracy; Current Approaches; New Tools; How it Works; Conclusion. Introduction to Antifragility: Antifragility refers to a system that becomes better, or stronger, in response to shocks or attacks. Nassim Taleb developed the term1 to explain systems that were not only resilient, but also thrived under stress. -

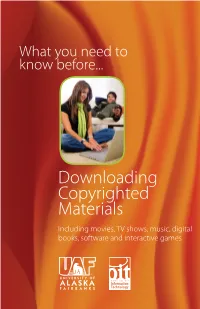

Downloading Copyrighted Materials

What you need to know before... Downloading Copyrighted Materials. Including movies, TV shows, music, digital books, software and interactive games. The Facts and Consequences. Who monitors peer-to-peer file sharing? The Motion Picture Association of America (MPAA), Home Box Office, and other copyright holders monitor file-sharing on the Internet for the illegal distribution of their copyrighted contents. Once infringement is identified, they issue DMCA (Digital Millennium Copyright Act) take-down notices to the ISP (Internet Service Provider), which the University of Alaska is considered to be, requesting that the infringement be stopped. If it is not stopped, a lawsuit against the user is possible. What are the consequences at UAF for violators of this policy? Student Services at UAF takes the following minimum actions when the policy is violated: 1st Offense: Loss of Internet access until issue is resolved. 2nd Offense: Loss of Internet access pending resolution and a $100 fee assessment. 3rd Offense: Loss of Internet access pending resolution and a $250 fee assessment. 4th, 5th, 6th Offense: Loss of Internet access pending resolution and a $500 fee assessment. What are the Federal consequences for violators? The MPAA, HBO and similar organizations are becoming more and more aggressive in finding and prosecuting alleged offenders in criminal court. What is UAF’s responsibility? Under the Digital Millennium Copyright Act and Higher Education Opportunity Act, university administrators are obligated to track these infractions and preserve relevant logs in your student record. This means that if your case goes to court, your record may be subpoenaed as evidence. Since illegal file sharing also drains bandwidth, costing schools money and slowing Internet connections, for students trying to use -

Convention Program

AES 124th CONVENTION PROGRAM. May 17 – 20, 2008, RAI International Exhibition & Congress Centre, Amsterdam. The AES has launched a new opportunity to recognize student members who author technical papers. The Student Paper Award Competition is based on the preprint manuscripts accepted for the AES convention. Forty-two student-authored papers were nominated. The excellent quality of the submissions has made the selection process both challenging and exhilarating. The award-winning student paper will be honored during the convention, and the student-authored manuscript will be published in a timely manner in the Journal of the Audio Engineering Society. Nominees for the Student Paper Award were required to meet the following qualifications: (a) The paper was accepted for presentation at the AES 124th Convention. (b) The first author was a student when the work was conducted and the manuscript prepared. (c) The student author’s affiliation listed in the manuscript is an accredited educational institution. [Paper abstract; author affiliations: 1 University of Salford, Salford, Greater Manchester, UK; 2 ICW, Ltd., Wrexham, Wales, UK] This paper gives an account of work carried out to assess the effects of metallized film polypropylene crossover capacitors on key sonic attributes of reproduced sound. The capacitors under investigation were found to be mechanically resonant within the audio frequency band, and results obtained from subjective listening tests have shown this to have a measurable effect on audio delivery. The listening test methodology employed in this study evolved from initial ABX type tests with set program material to the final A/B tests where trained test subjects used program material that they were familiar with. The main findings were that capacitors used in crossover circuitry can exhibit -

I Know What You Streamed Last Night: on the Security and Privacy of Streaming

Digital Investigation xxx (2018) 1–12. Contents lists available at ScienceDirect. Digital Investigation. Journal homepage: www.elsevier.com/locate/diin. DFRWS 2018 Europe - Proceedings of the Fifth Annual DFRWS Europe. I know what you streamed last night: On the security and privacy of streaming. Alexios Nikas a, Efthimios Alepis b, Constantinos Patsakis b,*. a University College London, Gower Street, WC1E 6BT, London, UK. b Department of Informatics, University of Piraeus, 80 Karaoli & Dimitriou Str, 18534 Piraeus, Greece. Article history: Received 3 January 2018; Received in revised form 15 February 2018; Accepted 12 March 2018; Available online xxx. Keywords: Security; Privacy; Streaming; Malware. Abstract: Streaming media are currently conquering traditional multimedia by means of services like Netflix, Amazon Prime and Hulu which provide to millions of users worldwide with paid subscriptions in order to watch the desired content on-demand. Simultaneously, numerous applications and services infringing this content by sharing it for free have emerged. The latter has given ground to a new market based on illegal downloads which monetizes from ads and custom hardware, often aggregating peers to maximize multimedia content sharing. Regardless of the ethical and legal issues involved, the users of such streaming services are millions and they are severely exposed to various threats, mainly due to poor hardware and software configurations. Recent attacks have also shown that they may, in turn, endanger others as well. This work details these threats and presents new attacks on these systems as well as forensic evidence that can be collected in specific cases. © 2018 Elsevier Ltd. All rights reserved. -

Defense Against the Dark Arts of Copyright Trolling Matthew Sag

Loyola University Chicago, School of Law, LAW eCommons. Faculty Publications & Other Works, 2018. Defense Against the Dark Arts of Copyright Trolling. Matthew Sag, Jake Haskell. Follow this and additional works at: https://lawecommons.luc.edu/facpubs Part of the Civil Procedure Commons, and the Intellectual Property Law Commons. Defense Against the Dark Arts of Copyright Trolling, Matthew Sag & Jake Haskell.* ABSTRACT: In this Article, we offer both a legal and a pragmatic framework for defending against copyright trolls. Lawsuits alleging online copyright infringement by John Doe defendants have accounted for roughly half of all copyright cases filed in the United States over the past three years. In the typical case, the plaintiff's claims of infringement rely on a poorly substantiated form pleading and are targeted indiscriminately at noninfringers as well as infringers. This practice is a subset of the broader problem of opportunistic litigation, but it persists due to certain unique features of copyright law and the technical complexity of Internet technology. The plaintiffs bringing these cases target hundreds or thousands of defendants nationwide and seek quick settlements priced just low enough that it is less expensive for the defendant to pay rather than to defend the claim, regardless of the claim's merits. We report new empirical data on the continued growth of this form of copyright trolling in the United States. We also undertake a detailed analysis of the legal and factual underpinnings of these cases. Despite their underlying weakness, plaintiffs have exploited information asymmetries, the high cost of federal court litigation, and the extravagant threat of statutory damages for copyright infringement to leverage settlements from the guilty and the innocent alike.