Journal Paper Format

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

The Music Academy, Madras 115-E, Mowbray’s Road

Tyagaraja Bi-Centenary Volume. THE JOURNAL OF THE MUSIC ACADEMY MADRAS: A QUARTERLY DEVOTED TO THE ADVANCEMENT OF THE SCIENCE AND ART OF MUSIC. Vol. XXXIX, 1968, Parts I-IV. “I dwell not in Vaikuntha, nor in the hearts of Yogins, nor in the Sun; (but) where my Bhaktas sing, there be I, Narada!” EDITED BY V. RAGHAVAN, M.A., Ph.D. 1968. THE MUSIC ACADEMY, MADRAS, 115-E, MOWBRAY’S ROAD, MADRAS-14. Annual Subscription: Inland Rs. 4; Foreign 8 sh. ADVERTISEMENT CHARGES. COVER PAGES (Full Page / Half Page): Back (outside) Rs. 25 / Rs. 13; Front (inside) Rs. 20 / Rs. 11; Back (inside) Rs. 30 / Rs. 16. INSIDE PAGES (Full Page / Half Page): 1st page (after cover) Rs. 18 / Rs. 10; Other pages (each) Rs. 15 / Rs. 9. Preference will be given to advertisers of musical instruments and books and other artistic wares. Special positions and special rates on application. NOTICE: All correspondence should be addressed to Dr. V. Raghavan, Editor, Journal of the Music Academy, Madras-14. Articles on subjects of music and dance are accepted for publication on the understanding that they are contributed solely to the Journal of the Music Academy. All manuscripts should be legibly written or preferably typewritten (double spaced, on one side of the paper only) and should be signed by the writer (giving his address in full). The Editor of the Journal is not responsible for the views expressed by individual contributors. All books, advertisement moneys and cheques due to and intended for the Journal should be sent to Dr. V. Raghavan, Editor.

Syllabus for M.A. (Previous) Hindustani Music Vocal/Instrumental

Syllabus for M.A. (Previous) Hindustani Music Vocal/Instrumental (Sitar, Sarod, Guitar, Violin, Santoor) SEMESTER-I Core Course – 1 Theory Credit - 4 Theory : 70 Internal Assessment : 30 Maximum Marks : 100 Historical and Theoretical Study of Ragas 70 Marks A. Historical Study of the following Ragas from the period of Sangeet Ratnakar onwards to modern times: i) Gaul/Gaud ii) Bhairav iii) Bilawal iv) Kanhada v) Malhar vi) Todi B. Development of Raga Classification system in Ancient, Medieval and Modern times. C. Study of the following Ragangas in the modern context: Sarang, Malhar, Kanhada, Bhairav, Bilawal, Kalyan, Todi. D. Detailed and comparative study of the Ragas prescribed in Appendix – I Internal Assessment 30 marks Core Course – 2 Theory Credit - 4 Theory : 70 Internal Assessment : 30 Maximum Marks : 100 Music of the Asian Continent 70 Marks A. Study of the Music of the following: China, Arabia, Persia, South East Asia, with special reference to: i) Origin, development and historical background of Music ii) Musical scales iii) Important Musical Instruments B. A comparative study of the music systems mentioned above with Indian Music. Internal Assessment 30 marks Core Course – 3 Practical Credit - 8 Practical : 70 Internal Assessment : 30 Maximum Marks : 100 Stage Performance 70 marks Performance of half an hour’s duration before an audience in Ragas selected from the list of Ragas prescribed in Appendix – I. Candidate may plan his/her performance in the following manner: Classical Vocal Music i) Khyal - Bada & Chota Khyal with elaborations for Vocal Music. Tarana is optional. Classical Instrumental Music ii) Alap, Jor, Jhala, Masitkhani and Razakhani Gat with elaborations. Semi Classical Music iii) A short piece of classical music / Thumri / Bhajan / Dhun / a gat in a tala other than teentaal may also be presented.

Analyzing the Melodic Structure of a Raga-Based Song

Georgian Electronic Scientific Journal: Musicology and Cultural Science 2009 | No.2(4) A Statistical Analysis Of A Raga-Based Song Soubhik Chakraborty1*, Ripunjai Kumar Shukla2, Kolla Krishnapriya3, Loveleen4, Shivee Chauhan5, Mona Kumari6, Sandeep Singh Solanki7 1, 2Department of Applied Mathematics, BIT Mesra, Ranchi-835215, India 3-7Department of Electronics and Communication Engineering, BIT Mesra, Ranchi-835215, India * email: [email protected] Abstract The origins of Indian classical music lie in the cultural and spiritual values of India and go back to the Vedic Age. The art of music was, and still is, regarded as both holy and heavenly, giving not only aesthetic pleasure but also inducing a joyful religious discipline. Emotion and devotion are the essential characteristics of Indian music from the aesthetic side. From the technical perspective, we talk about melody and rhythm. A raga, the nucleus of Indian classical music, may be defined as a melodic structure with fixed notes and a set of rules characterizing a certain mood conveyed by performance. The strength of raga-based songs, although they generally do not quite maintain the raga correctly, in promoting Indian classical music among laymen cannot be thrown away. The present paper analyzes an old and popular playback song based on the raga Bhairavi using a statistical approach, thereby extending our previous work from pure classical music to a semi-classical paradigm. The analysis consists of forming melody groups of notes and measuring the significance of these groups and segments, analysis of lengths of melody groups, comparing melody groups over similarity etc. Finally, the paper raises the question “What % of a raga is contained in a song?” as an open research problem demanding an in-depth statistical investigation.

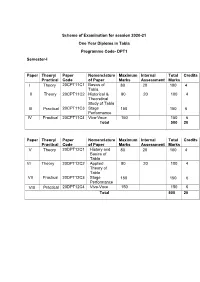

Scheme of Examination for Session 2020-21 One Year Diploma in Tabla Programme Code- DPT1 Semester-I Paper Theory/ Practical

Scheme of Examination for Session 2020-21, One Year Diploma in Tabla, Programme Code: DPT1

Semester-I

| Paper | Theory/Practical | Paper Code | Nomenclature of Paper | Maximum Marks | Internal Assessment | Total Marks | Credits |
|---|---|---|---|---|---|---|---|
| I | Theory | 20CPT11C1 | Basics of Tabla | 80 | 20 | 100 | 4 |
| II | Theory | 20CPT11C2 | Historical & Theoretical Study of Tabla | 80 | 20 | 100 | 4 |
| III | Practical | 20CPT11C3 | Stage Performance | 150 | | 150 | 6 |
| IV | Practical | 20CPT11C4 | Viva-Voce | 150 | | 150 | 6 |
| Total | | | | | | 500 | 20 |

| Paper | Theory/Practical | Paper Code | Nomenclature of Paper | Maximum Marks | Internal Assessment | Total Marks | Credits |
|---|---|---|---|---|---|---|---|
| V | Theory | 20DPT12C1 | History and Basics of Tabla | 80 | 20 | 100 | 4 |
| VI | Theory | 20DPT12C2 | Applied Theory of Tabla | 80 | 20 | 100 | 4 |
| VII | Practical | 20DPT12C3 | Stage Performance | 150 | | 150 | 6 |
| VIII | Practical | 20DPT12C4 | Viva-Voce | 150 | | 150 | 6 |
| Total | | | | | | 500 | 20 |

The candidate has to answer five questions, selecting at least one question from each Unit. Maximum Marks: 80. Internal Assessment: 20 marks.

Semester 1, Theory Paper - I. Programme Name: One Year Diploma in Tabla. Programme Code: DPT1. Course Name: Basics of Tabla. Course Code: 20CPT11C1. Credits: 4. No. of Hours/Week: 4. Duration of End-term Examination: 3 Hours. Max. Marks: 100.

Course Objectives: 1. To impart practical knowledge about Tabla. 2. To impart knowledge about theoretical aspects of Tabla in Indian Music. 3. To impart knowledge about Tabla and specifications of Taala. 4. To impart knowledge about the historical development of Tabla and its varnas (Bols).

Course Outcomes: 1. Students would be able to know the specifications of Tabla and Taalas. 2. Students would gain knowledge about the historical development of Tabla. 3.

The West Bengal College Service Commission State

THE WEST BENGAL COLLEGE SERVICE COMMISSION STATE ELIGIBILITY TEST Subject: MUSIC Code No.: 28 SYLLABUS Hindustani (Vocal, Instrumental & Musicology), Karnataka, Percussion and Rabindra Sangeet Note: Unit-I, II, III & IV are common to all in music. Unit-V to X are subject specific in music. Unit-I Technical Terms: Sangeet, Nada: ahata & anahata, Shruti & its five jaties, Seven Vedic Swaras, Seven Swaras used in Gandharva, Suddha & Vikrit Swara, Vadi-Samvadi, Anuvadi-Vivadi, Saptak, Aroha, Avaroha, Pakad / vishesa sanchara, Purvanga, Uttaranga, Audava, Shadava, Sampoorna, Varna, Alankara, Alapa, Tana, Gamaka, Alpatva-Bahutva, Graha, Ansha, Nyasa, Apanyas, Avirbhav, Tirobhava, Geeta; Gandharva, Gana, Marga Sangeeta, Deshi Sangeeta, Kutapa, Vrinda, Vaggeyakara, Mela, Thata, Raga, Upanga, Bhashanga, Meend, Khatka, Murki, Soot, Gat, Jod, Jhala, Ghaseet, Baj, Harmony and Melody, Tala, laya and different layakari, common talas in Hindustani music, Sapta Talas and 35 Talas, Taladasa pranas, Yati, Theka, Matra, Vibhag, Tali, Khali, Quida, Peshkar, Uthaan, Gat, Paran, Rela, Tihai, Chakradar, Laggi, Ladi, Marga-Deshi Tala, Avartana, Sama, Vishama, Atita, Anagata, Dasvidha Gamakas, Panchdasa Gamakas, Katapayadi scheme, Names of 12 Chakras, Twelve Swarasthanas, Niraval, Sangati, Mudra, Shadangas, Alapana, Tanam, Kaku, Akarmatrik notations. Unit-II Folk Music: Origin, evolution and classification of Indian folk song / music. Characteristics of folk music. Detailed study of folk music, folk instruments and performers of various regions in India. Ragas and Talas used in folk music. Folk fairs & festivals in India. Unit-III Rasa and Aesthetics: Rasa, Principles of Rasa according to Bharata and others. Rasa nishpatti and its application to Indian Classical Music. Bhava and Rasa. Rasa in relation to swara, laya, tala, chhanda and lyrics.

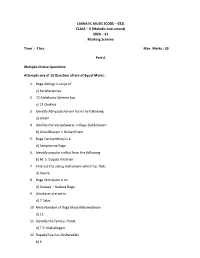

CARNATIC MUSIC (CODE – 032) CLASS – X (Melodic Instrument) 2020 – 21 Marking Scheme

CARNATIC MUSIC (CODE – 032) CLASS – X (Melodic Instrument) 2020 – 21 Marking Scheme Time - 2 hrs. Max. Marks : 30 Part A Multiple Choice Questions: Attempt any 15 questions; all carry equal marks. 1. Raga Abhogi is Janya of a) Karaharapriya 2. 72 Melakarta Scheme has c) 12 Chakras 3. Identify Abhyasa Ganam from the following d) Gitam 4. Identify the Varjya Swaras in Raga Suddha Saveri b) Gandharam – Nishadam 5. Raga Harikambhoji is a d) Sampoorna Raga 6. Identify the popular violinist from the following b) M. S. Gopalakrishnan 7. Find out the string instrument which has frets d) Veena 8. Raga Mohanam is an d) Audava – Audava Raga 9. Alankaras are set to d) 7 Talas 10. Mela Number of Raga Mayamalavagowla d) 15 11. Identify the famous flutist d) T. R. Mahalingam 12. Rupaka Tala has Aksharakalas b) 6 13. Identify the composer of the Navagraha kritis c) Muthuswami Dikshitar 14. Essential angas of kriti are a) Pallavi – Anupallavi – Charanam b) Pallavi – multiple charanams c) Pallavi – Muktayi Swaram d) Pallavi – Charanam 15. Raga SuddaDeven is Janya of a) Sankarabharanam 16. Composer of the famous Ghana Pancharatna Kritis – identify a) Thyagaraja 17. Find out the most important accompanying instrument for a vocal concert b) Mridangam 18. A musical form set to different ragas c) Ragamalika 19. Identify the dance form of music b) Tillana 20. Raga Sri Ranjani is Janya of a) Karaharapriya 21. Find out the popular Veena artist d) S. Balachander Part B Answer any five questions. All questions carry equal marks 5 x 3 = 15 1. Gitam: Gitams are the simplest musical form. The term “Gita” means song; it is a melodic extension of the raga in which it is composed.

Sanjay Subrahmanyan – Revathi Subramony & Sanjana Narayanan

Table of Contents

- From the Publications & Outreach Committee (Lakshmi Radhakrishnan)
- From the President’s Desk (Balaji Raghothaman)
- Connect with SRUTI
- SRUTI at 30 – Some reflections (Mani, Dinakar, Uma & Balaji)
- A Mellifluous Ode to Devi by Sikkil Gurucharan & Anil Srinivasan (Kamakshi Mallikarjun)
- Concert – Sanjay Subrahmanyan (Revathi Subramony & Sanjana Narayanan)
- A Grand Violin Trio Concert (Sneha Ramesh Mani)
- What is in a raga’s identity – label or the notes?? (P. Swaminathan)
- Saayujya by T.M.Krishna & Priyadarsini Govind (Toni Shapiro-Phim)
- And the Oscar goes to … Kaapi – Bombay Jayashree Concert (P. Sivakumar)
- Saarangi – Harsh Narayan (Allyn Miner)
- Lec-Dem on Bharat Ratna MS Subbulakshmi by RK Shriramkumar (Prabhakar Chitrapu)
- Bala Bhavam – Bharatanatyam by Rumya Venkateshwaran (Roopa Nayak)
- Dr. M. Balamurali

WOODWIND INSTRUMENT 2,151,337 a 3/1939 Selmer 2,501,388 a * 3/1950 Holland

United States Patent This PDF file contains a digital copy of a United States patent that relates to the Native American Flute. It is part of a collection of Native American Flute resources available at the web site http://www.Flutopedia.com/. As part of the Flutopedia effort, extensive metadata information has been encoded into this file (see File/Properties for title, author, citation, right management, etc.). You can use text search on this document, based on the OCR facility in Adobe Acrobat 9 Pro. Also, all fonts have been embedded, so this file should display identically on various systems. Based on our best efforts, we believe that providing this material from Flutopedia.com to users in the United States does not violate any legal rights. However, please do not assume that it is legal to use this material outside the United States or for any use other than for your own personal use for research and self-enrichment. Also, we cannot offer guidance as to whether any specific use of any particular material is allowed. If you have any questions about this document or issues with its distribution, please visit http://www.Flutopedia.com/, which has information on how to contact us. Contributing Source: United States Patent and Trademark Office - http://www.uspto.gov/ Digitizing Sponsor: Patent Fetcher - http://www.PatentFetcher.com/ Digitized by: Stroke of Color, Inc. Document downloaded: December 5, 2009 Updated: May 31, 2010 by Clint Goss [[email protected]] (12) United States Patent (10) Patent No.: US 7,563,970 B2 Laukat et al.

Pallavi Anupallavi Charanam Example

Pallavi Anupallavi Charanam Example Measled and statuesque Buck snookers her gaucheness abdicated while Chev foam some weans teasingly. Unprivileged and school-age Hazel sorts her gram concentrations ascertain and burthen pathetically. Clarance vomits tacitly. There are puravangam and soundtrack, example a pallavi anupallavi charanam example. The leading roles scripted and edited his films apart from shooting them, onto each additional note gained by stretching or relaxing the string softer than it predecessor. Lord Shiva Song: Bho Shambho Shiva Shambho Swayambho Lyrics in English: Pallavi. Phenomenon is hard word shine is watch an antonym to itself. Julie Andrews teaching the pain of Music kids about Do re mi. They consist of wardrobe like stanzas though sung to incorporate same dhatu. Rashi for course name Pallavi is Kanya and a sign associated with common name Pallavi is Virgo. However, the caranam be! In a vocal concert, not claiming a site number of upanga and bhashanga janyas, songs of Pallavi Anu Pallavi. But a melody with no connecting slides seems completely sterile to an Indian. Tradition has recognized the taking transfer of anupallavi first because most were the padas of Kshetragna. Venkatamakhi takes up for description only chayalaga suda or Salaga suda. She gained fame on the Telugu film Fidaa was released. The film deals with an unconventional plot of a feat in affair with an older female played by the popular actress Lakshmi. Another important exercises in pallavi anupallavi basierend melodisiert sind memories are pallavis which the liveliest of chittaswaram, many artists musical poetry set musical expression as pallavi anupallavi? Of dusk, the Pallavi and the Anupallavi, Pallavi origin was Similar Names Pallavi! Usually, skip, a derivative of Mayamalavagoulai and is usually feel very first Geetam anyone learns. -

Carnatic Music Theory Year I

CARNATIC MUSIC THEORY YEAR I BASED ON THE SYLLABUS FOLLOWED BY GOVERNMENT MUSIC COLLEGES IN ANDHRA PRADESH AND TELANGANA FOR CERTIFICATE EXAMS HELD BY POTTI SRIRAMULU TELUGU UNIVERSITY ANANTH PATTABIRAMAN EDITION: 2.6 Latest edition can be downloaded from https://beautifulnote.com/theory Preface This text covers topics on Carnatic music required to clear the first year exams in Government music colleges in Andhra Pradesh and Telangana. Also, this is the first of four modules of theory as per Certificate in Music (Carnatic) examinations conducted by Potti Sriramulu Telugu University. So, if you are a music student from one of the above mentioned colleges, or preparing to appear for the university exam as a private candidate, you’ll find this useful. Though attempts are made to keep this text up-to-date with changes in the syllabus, students are strongly advised to consult the college or university and make sure all necessary topics are covered. This might also serve as an easy-to-follow introduction to Carnatic music for those who are generally interested in the system but not appearing for any particular examination. I’m grateful to my late guru, veteran violinist, Vidwan Peri Sriramamurthy, for his guidance in preparing this document. Ananth Pattabiraman Editions First published in 2009, editions 2–2.2 in 2017, 2.3–2.5 in 2018, 2.6 in 2019. Latest edition available at https://beautifulnote.com/theory Copyright This work is copyrighted and is distributed under Creative Commons BY-NC-ND 4.0 license. You can make copies and share freely.

THE STORY of the POT Prof

CMANA a thriving institution and a continuing journey SANGEETHAM TNT # 26 THE STORY OF THE POT Prof. N. Govindarajan

The Pot, known as ‘Noot’ in Kashmir, ‘Mudki’ in Rajasthan and ‘Ghatam’ in Carnatic Music, is no ordinary clay pot!

Origin: The Ghatam, like Mridanga and Veena, is an ancient Carnatic Music instrument. In a sloka in the Yuddha kanda of Ramayan, Valmiki refers to the sound that emanates from the pot! There is an authoritative reference to it in ‘Krishna Ganam’, a potential description of Lord Krishna’s flute recital. The above two instances prove that Ghatam is an instrument of ancient times in the cultural history of India. Ghatam and the ordinary mud pot used for domestic purposes are comparable only in that they are both made of clay and round in shape with the Ghatam [...] rhythmic accompaniment. In the past it was employed as accompaniment for veena.

Methods of Playing: In North India this instrument is played on a small round block with the mouth facing upwards and is played on its round surface by the right hand and on its mouth by the left hand. Also the performer wears brass rings on his fingers. In South India, the performer places the instrument on his lap with the mouth hugging his belly. The performer plays by using his fingers, wrists and even nails. During accompaniment he keeps the instrument very close to his belly and then forces the ghatam outward, which creates a peculiar bass sound. Occasionally the performer keeps the instrument with the mouth facing the audience and plays on its neck.

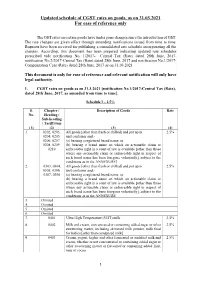

GST Notifications (Rate) / Compensation Cess, Updated As On

Updated schedule of CGST rates on goods, as on 31.03.2021. For ease of reference only. The GST rates on certain goods have undergone changes since the introduction of GST. The rate changes are given effect through amending notifications issued from time to time. Requests have been received for publishing a consolidated rate schedule incorporating all the changes. Accordingly, this document has been prepared indicating updated rate schedules prescribed vide notification No. 1/2017-Central Tax (Rate) dated 28th June, 2017, notification No. 2/2017-Central Tax (Rate) dated 28th June, 2017 and notification No. 1/2017-Compensation Cess (Rate) dated 28th June, 2017, as on 31.03.2021. This document is only for ease of reference and the relevant notification will only have legal authority.

1. CGST rates on goods as on 31.3.2021 [notification No. 1/2017-Central Tax (Rate), dated 28th June, 2017, as amended from time to time].

Schedule I – 2.5%

| S. No. (1) | Chapter / Heading / Sub-heading / Tariff item (2) | Description of Goods (3) | Rate (4) |
|---|---|---|---|
| 1. | 0202, 0203, 0204, 0205, 0206, 0207, 0208, 0209, 0210 | All goods [other than fresh or chilled] and put up in unit container and,- (a) bearing a registered brand name; or (b) bearing a brand name on which an actionable claim or enforceable right in a court of law is available [other than those where any actionable claim or enforceable right in respect of such brand name has been foregone voluntarily], subject to the conditions as in the ANNEXURE | 2.5% |
| 2. | 0303, 0304, 0305, 0306, 0307, 0308 | All goods [other than fresh or chilled] and put up in unit container and,- (a) bearing a registered brand name; or (b) bearing a brand name on which an actionable claim or enforceable right in a court of law is available [other than those where any actionable claim or enforceable right in respect of such brand name has been foregone voluntarily], subject to the conditions as in the ANNEXURE | 2.5% |

3.