Facial Recognition Technology in New Zealand

Total pages: 16

File type: pdf, size: 1020 Kb

Recommended publications

JOSH SIEGEL Line Producer / UPM Directors Guild of America, Producers Guild of America

Screen Talent Agency 818 206 0144 JOSH SIEGEL Line Producer / UPM Directors Guild of America, Producers Guild of America Josh Siegel knew at an early age he wanted to make movies. Proactively, he raised money while at Johns Hopkins University to create his first film. Siegel has since gone on to produce more than 20 projects, and budgeted in excess of fifty, with budgets of $250k to $30m. His pleasant personality, wit and ability were key in his appointment as head of production for Hannibal Pictures. Josh intimately knows all the stages of production from development through production, post and distribution. He knows how to make your money appear on screen and where you can cut to save funds. And he can do it all with a smile. A member of both the PGA and the DGA, Josh lives in Los Angeles and is available for work worldwide. selected credits as line producer production director / producer / production company SHOT Noah Wyle, Sharon Leal Jeremy Kagan / Josh Siegel / AC Transformative Media A ROLE TO DIE FOR (in prep) John Asher / Conrad Goode / ARTDF Films ANY DAY Eva Longoria, Tom Arnold, Sean Bean, Kate Walsh Rustam Branaman / Andrew Sugerman / Jaguar Ent. BETWEEN Poppy Montgomery (shot entirely in Mexico) David Ocanas / Frank Donner / Lifetime TV RUNNING WITH ARNOLD Alec Baldwin (documentary) Dan Cox / Mike Gabrawy / Endless World Films THE LAST LETTER William Forsythe, Yancy Butler Russell Gannon / Demetrius Navarro / MTI PURPLE HEART William Sadler, Mel Harris Bill Birrell / Russell Gannon / Vision Films OH, MR. FAULKNER, DO YOU WRITE? John Maxwell Jimbo Barnett (prod/director) / MaxBo television 24 Carlos Bernard, Xander Berkeley (DVD bonus episode) Jordan Goldman / Manny Coto, Evan Katz / Fox GUTSY FROG Mischa Barton, Julie Brown Mark A.Z.Dippé / Kevin Jonas, Alan Sacks / Jonas Bros. -

Clearview AI Has Billions of Our Photos. Its Entire Client List Was Just Stolen

Clearview AI has billions of our photos. Its entire client list was just stolen By Jordan Valinsky, CNN Business Updated 1:24 PM ET, Wed February 26, 2020 New York (CNN Business) Clearview AI, a startup that compiles billions of photos for facial recognition technology, said it lost its entire client list to hackers. The company said it has patched the unspecified flaw that allowed the breach to happen. In a statement, Clearview AI's attorney Tor Ekeland said that while security is the company's top priority, "unfortunately, data breaches are a part of life. Our servers were never accessed." He added that the company continues to strengthen its security procedures and that the flaw has been patched. Clearview AI continues "to work to strengthen our security," Ekeland said. In a notification sent to customers obtained by Daily Beast, Clearview AI said that an intruder "gained unauthorized access" to its customer list, which includes police forces, law enforcement agencies and banks. The company said that the person didn't obtain any search histories conducted by customers, which include some police forces. The company claims to have scraped more than 3 billion photos from the internet, including photos from popular social media platforms like Facebook, Instagram, Twitter and YouTube. The firm garnered controversy in January after a New York Times investigation revealed that Clearview AI's technology allowed law enforcement agencies to use its technology to match photos of unknown faces to people's online images. The company also retains those photos in its database even after internet users delete them from the platforms or make their accounts private. -

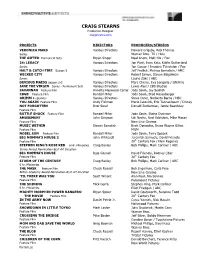

CRAIG STEARNS Production Designer craigstearns.com

CRAIG STEARNS Production Designer craigstearns.com PROJECTS DIRECTORS PRODUCERS/STUDIOS VERONICA MARS Various Directors Howard Grigsby, Rob Thomas Series Warner Bros. TV / Hulu THE GIFTED Permanent Sets Bryan Singer Neal Ahern, Matt Nix / Fox 24: LEGACY Various Directors Jon Paré, Evan Katz, Kiefer Sutherland Series Jon Cassar / Imagine Television / Fox HALT & CATCH FIRE Season 3 Various Directors Jeff Freilich, Melissa Bernstein / AMC WICKED CITY Various Directors Robert Simon, Steven Baigelman Series Laurie Zaks / ABC DEVIOUS MAIDS Season 2-3 Various Directors Marc Cherry, Eva Longoria / Lifetime JANE THE VIRGIN Series - Permanent Sets Various Directors Lewis Abel / CBS Studios SAVANNAH Feature Film Annette Haywood-Carter Jody Savin, Jay Sedrish CBGB Feature Film Randall Miller Jody Savin, Brad Rosenberger GRIMM 8 episodes Various Directors Steve Oster, Norberto Barba / NBC YOU AGAIN Feature Film Andy Fickman Mario Iscovich, Eric Tannenbaum / Disney NOT FORGOTTEN Dror Soref Donald Zuckerman, Jamie Beardsley Feature Film BOTTLE SHOCK Feature Film Randall Miller Jody Savin, Elaine Dysinger AMUSEMENT John Simpson Udi Nedivi, Neal Edelstein, Mike Macari Feature Film New Line Cinema MUSIC WITHIN Steven Sawalich Brett Donowho, Bruce Wayne Gillies Feature Film MGM NOBEL SON Feature Film Randall Miller Jody Savin, Terry Spazek BIG MOMMA’S HOUSE 2 John Whitesell Jeremiah Samuels, David Friendly Feature Film 20th Century Fox / New Regency STEPHEN KING’S ROSE RED 6 Hr. Miniseries Craig Baxley Bob Phillips, Mark Carliner / ABC Emmy Award Nomination-Best Art Direction BIG MOMMA'S HOUSE Raja Gosnell David Friendly, Rodney Liber Feature Film 20th Century Fox STORM OF THE CENTURY Craig Baxley Bob Phillips, Mark Carliner / ABC 6 hr. -

Report Legal Research Assistance That Can Make a Builds

EUROPEAN DIGITAL RIGHTS A LEGAL ANALYSIS OF BIOMETRIC MASS SURVEILLANCE PRACTICES IN GERMANY, THE NETHERLANDS, AND POLAND By Luca Montag, Rory Mcleod, Lara De Mets, Meghan Gauld, Fraser Rodger, and Mateusz Pełka EDRi - EUROPEAN DIGITAL RIGHTS INDEX: About the Edinburgh International Justice Initiative (EIJI); Introductory Note; List of Abbreviations; Key Terms; Foreword from European Digital Rights (EDRi); Introduction to Germany country study from EDRi; Germany; 1 Facial Recognition; 1.1 Local Government; 1.1.1 Case Study – Cologne; 1.2 Federal Government; 1.3 Biometric Technology Providers in Germany; 1.3.1 Hardware; 1.3.2 Software; 1.4 Legal Analysis; 1.4.1 German Law; 1.4.1.1 Scope; 1.4.1.2 Necessity; 1.4.2 EU Law; 1.4.3 European Convention on Human Rights; 1.4.4 International Human Rights Law; 1.4.5 ‘Biometric-Ready’ Cameras; 1.4.5.1 The right to dignity; 1.4.5.2 Structural Discrimination; 1.4.5.3 Proportionality; 2. Fingerprints on Personal Identity Cards; 2.1 Analysis; 2.1.1 Human rights concerns; 2.1.2 Consent; 2.1.3 Access Extension; 3. Online Age and Identity ‘Verification’; 3.1 Analysis; 4. COVID-19 Responses; 4.1 Analysis; 4.2 The Convenience of Control; 5. Conclusion; Introduction to the Netherlands country study from EDRi; The Netherlands; 1. Deployments by Public Entities; 1.1. Dutch police and law enforcement authorities; 1.1.1 CATCH Facial Recognition Surveillance Technology; 1.1.1.1 CATCH - Legal Analysis; 1.1.2. -

EPIC FOIA Request 1 Facial Recognition PIA June 10, 2020 ICE C

VIA EMAIL June 10, 2020 Fernando Pineiro FOIA Officer U.S. Immigration and Customs Enforcement Freedom of Information Act Office 500 12th Street, S.W., Stop 5009 Washington, D.C. 20536-5009 Email: [email protected] Dear Mr. Pineiro: This letter constitutes a request under the Freedom of Information Act (“FOIA”), 5 U.S.C. § 552(a)(3), and is submitted on behalf of the Electronic Privacy Information Center (“EPIC”) to U.S. Immigration and Customs Enforcement (“ICE”). EPIC seeks records related to the agency’s use of facial recognition software (“FRS”). Documents Requested 1. All contracts between ICE and commercial FRS vendors; 2. All documents related to ICE’s approval process for new commercial FRS vendors, including but not limited to: a. Document detailing the approval process and requirements for commercial FRS vendors b. Documents related to the approval of all commercial FRS vendors currently in use by ICE c. Documents related to reviewing commercial FRS vendors that have been used under “exigent circumstances” without prior approval; 3. All training materials and standard operating procedures for Homeland Security Investigations (“HSI”) agents regarding use of FRS, including but not limited to the following: a. Existing training materials and standard operating procedures around HSI agent use of FRS b. Existing training materials and standard operating procedures regarding restrictions on the collection of probe photos c. Training materials and standard operating procedures being developed for HSI agent use of FRS d. Training materials and standard operating procedures being developed regarding the use of probe photos; 4. -

1:21-Cv-00135 Document #: 31 Filed: 04/09/21 Page 1 of 18 Pageid #:232

Case: 1:21-cv-00135 Document #: 31 Filed: 04/09/21 Page 1 of 18 PageID #:232 IN THE UNITED STATES DISTRICT COURT FOR THE NORTHERN DISTRICT OF ILLINOIS EASTERN DIVISION Civil Action File No.: 1:21-cv-00135 In re: Clearview AI, Inc. Consumer Privacy Litigation Judge Sharon Johnson Coleman Magistrate Judge Maria Valdez PLAINTIFFS’ MEMORANDUM OF LAW IN SUPPORT OF MOTION FOR PRELIMINARY INJUNCTION INTRODUCTION Without providing notice or receiving consent, Defendants Clearview AI, Inc. (“Clearview”); Hoan Ton-That; and Richard Schwartz (collectively, “Defendants”): (a) scraped billions of photos of people’s faces from the internet; (b) harvested the subjects’ biometric identifiers and information (collectively, “Biometric Data”); and (c) created a searchable database of that data (the “Biometric Database”) which they made available to private entities, friends and law enforcement in order to earn profits. Defendants’ conduct violated, and continues to violate, Illinois’ Biometric Information Privacy Act (“BIPA”), 740 ILCS 14/1, et seq., and tramples on Illinois residents’ privacy rights. Unchecked, Defendants’ conduct will cause Plaintiffs David Mutnick, Mario Calderon, Jennifer Rocio, Anthony Hall and Isela Carmean and Illinois class members to suffer irreparable harm for which there is no adequate remedy at law. Defendants have tacitly conceded the need for injunctive relief. In response to Plaintiff Mutnick’s preliminary injunction motion in his underlying action, Defendants desperately sought to avoid judicial oversight by claiming to have self-reformed. But Defendants have demonstrated that they cannot be trusted. While Defendants have represented that they were cancelling all non-law enforcement customer accounts, a recent patent application reveals Defendants’ commercial aspirations. -

EPIC FOIA Request 1 Clearview AI March 6, 2020 FBI VIA FACSIMILE

VIA FACSIMILE March 6, 2020 David M. Hardy, Section Chief Record/Information Dissemination Section Federal Bureau of Investigation 170 Marcel Drive Winchester, VA 22602-4843 Fax: (540) 868-4997 Attn: FOIA Request Dear Mr. Hardy: This letter constitutes a request under the Freedom of Information Act (“FOIA”), 5 U.S.C. § 552(a)(3), and is submitted on behalf of the Electronic Privacy Information Center (“EPIC”) to the Federal Bureau of Investigation (“FBI”). EPIC seeks records related to the agency’s use of Clearview AI technology.1 Documents Requested 1. Emails and communications about Clearview AI, Inc., including but not limited to the following individuals and search terms: a. Clearview b. Clearview AI c. Hoan Ton-That d. Tor Ekeland e. Third parties with an email address ending in “@clearview.ai” 2. All contracts or agreements between the FBI and Clearview AI, Inc.; 3. All presentations mentioning Clearview; 4. All Clearview sales materials; 5. All privacy assessments, including but not limited to Privacy Threshold Analysis and/or Privacy Impact Assessments, that discuss the use of Clearview AI technology. Background A recent New York Times investigation revealed that a secretive New York-based company, Clearview AI, Inc., has developed an application that allows law enforcement agencies to identify people in public spaces without actually requesting identity documents or without a legal basis to determine the person’s identity.2 (Footnote 1: Clearview AI, Inc., https://clearview.ai/.) Clearview -

California Activists Challenge Clearview AI on Biometric Surveillance

For Immediate Release March 9, 2021 Press contact: Joe Rivano Barros, [email protected] Sejal Zota, [email protected] Ellen Leonida, [email protected] California Activists Challenge Clearview AI on Biometric Surveillance Mijente, NorCal Resist, and local activists sued the facial recognition company Clearview AI today over its unlawful surveillance activities. The lawsuit alleges that the company’s surveillance technology violates privacy rights and facilitates government monitoring of protesters, immigrants, and communities of color. Clearview’s facial recognition tool—which allows instantaneous identification and tracking of people targeted by law enforcement—chills political speech and other protected activities, the suit argued. The lawsuit was filed in the Alameda County Superior Court on behalf of Mijente, NorCal Resist, and four individual plaintiffs, including a DACA recipient, who allege that Clearview AI violated their rights under the California constitution, California’s consumer protection and privacy laws, and California common law. “Privacy is enshrined in the California constitution, ensuring all Californians can lead their lives without the fear of surveillance and monitoring,” said Sejal Zota, a lead attorney in the case and the legal director of Just Futures Law, a law project working to support grassroots organizations. “Clearview AI upends this dynamic, making it impossible to walk down the street without fear your likeness can be captured, stored indefinitely by the company, and used against you any time in the future. There can be no meaningful privacy in a society with Clearview AI.” Clearview AI has amassed a database of over three billion facial scans by scraping photos from websites like Facebook, Twitter, and Venmo, violating these companies’ terms of service and storing user images without permission. -

ACLU Facial Recognition–Jeffrey

Thursday, May 7, 2020 at 12:58:27 PM Eastern Daylight Time Subject: Identifying the Impostor - October 10, 2019 Date: Monday, September 30, 2019 at 10:09:08 AM Eastern Daylight Time From: NESPIN Training To: jeff[email protected] Training Announcement Identifying the Impostor October 10, 2019 Location: Gardner Police Department, Gardner, MA Time: 8:00 am - 4:00 pm This program is a Universal Law Enforcement Identity Theft recognition program that will assist you in identifying the presence of an Impostor. This one-day course will teach participants a new and dynamic method of identifying identity thieves, aka Impostors, using multiple State and Federal databases. The instructor will focus on foreign national impostors using stolen identities to hide in plain sight and commit various offenses in the New England area and beyond with a focus on trafficking drugs. This program teaches a comprehensive study of NCIC Triple I and how to understand the search results, along with combining all this information provided from the RMV, BOP, AFISR and “DQs” from Puerto Rico. We also cover facial recognition and altered print recognition. Please go to the following link for complete details: https://extranet.riss.net/public/1419b76f-32ff-49df-a514-513433d66fb7 The views, thoughts, materials and opinions articulated by the instructor(s) of this training belong solely to the instructor(s) and do not necessarily represent the official position or views of NESPIN. ---------------------------------------------------------------- Please do not reply to this e-mail as it is an unmonitored alias. If you do not wish to receive these training mailings, please choose the Opt-out feature at the bottom of this email. -

Evaluate Stu-A

Academic Dishonesty-- What Can Be Done? by Kevin R. Convey In a dinner meeting held last week, in Dana dining hall, the Committee on Academic Dishonesty recommended that more precautions be taken by professors to prevent cheating during exams. The student committee is presently seeking faculty members to work with them in the formation of formal recommendations to be presented to President Strider within the next few weeks. According to Committee member Dean Earl Smith, the committee was formed after many students had expressed to him their anxiety concerning the prevalence of academic dishonesty during the last exam period in December. Smith and Strider selected the eight members of the committee as a cross section of "good students" to make recommendations on how such occurrences might be reduced in the future. photo by Susie Gernert The Committee on Academic Dishonesty includes: Lisa Hall, Paul Harvey, Robin Kessler, Anne Kohlbry, David Linsky, Dana Russian, David Simonds and Lisa Triplet. The committee is making its recommendations based on two assumptions: the first supposes that students have the right to have all examinations ... These two assumptions and the recommendations which follow from them are aimed at improving conditions which presently do little to limit the incidence of academic dishonesty. One problem, according to Dean Smith, is the failure on the part of many faculty members to directly confront students they believe to have cheated. ... and other precautions against cheating during final exams at Colby. Although the committee is presently awaiting faculty input regarding the nature and scope of the formal recommendations to be made to the President, the students have come up with some -

Eulyn C. Hufkie

EULYN C. HUFKIE Costume Designer eulyn.com PROJECTS Partial List DIRECTORS STUDIOS/PRODUCERS TATTERED HEARTS Brea Grant BLUMHOUSE / EPIX Feature Film Paige Pemberton, John Ierardi UNHUMAN Marcus Dunstan BLUMHOUSE / EPIX Feature Film Paige Pemberton, Alex Kruener AMERICAN REFUGEE Ali LeRoi BLUMHOUSE / EPIX Feature Film Paige Pemberton, Lisa Bruce A HOUSE ON THE BAYOU Alex McAulay BLUMHOUSE / EPIX Feature Film Paige Pemberton, Mary Margaret Kunze BINGO HELL Gigi Saul Guerrero BLUMHOUSE / AMAZON Feature Film Ian Watermeier, Lisa Bruce ORACLE Daniel di Grado WILL PACKER PRODUCTIONS Feature Film Erika Hampson, James Lopez MADRES Ryan Zaragoza BLUMHOUSE / AMAZON Feature Film John H. Brister, Lisa Bruce BLACK AS NIGHT Maritte Go BLUMHOUSE / AMAZON / John H. Brister Feature Film Lisa Bruce, Guy Stodel, Maggie Malina BLACK BOX Feature Film Emmanuel Osei-Kuffour BLUMHOUSE / AMAZON / John H. Brister EVIL EYE Elan Dassani BLUMHOUSE / AMAZON Feature Film Rajeev Dassani Ian Watermeier THE PURGE Various Directors BLUMHOUSE TELEVISION / USA Season 2 Alissa Kantrow THE RESIDENT Phillip Noyce FOX Seasons 1-2 Various Directors Rob Corn HELLFEST Gregory Plotkin CBS FILMS / VALHALLA ENT. Feature Film Jolly Dale, Willoughby Douglas MAYANS MC Pilot Kurt Sutter FX / Jon Paré, Kurt Sutter, James Elgin FOREVER MY GIRL Bethany Ashton Wolf LD ENTERTAINMENT Feature Film Alison Semenza RYAN HANSEN SOLVES CRIMES Various Directors BLUEGRASS FILMS / YOUTUBE RED ON TV Season 1 Stephanie Meurer, Tracey Baird 24: LEGACY Various Directors IMAGINE TELEVISION / FOX Series -

SCRAPING PHOTOGRAPHS Maggie King

SCRAPING PHOTOGRAPHS Maggie King INTRODUCTION; I. TECHNICAL BACKGROUND ON SCRAPING; A. How to Scrape a Photograph; B. Why Scrape a Photograph?; II. THE LAW OF SCRAPING; A. CFAA Claims; 1. CFAA Background: Ambiguous Statutory Language; 2. CFAA Scraping Claims in Caselaw; B. Contract Claims; C. Copyright Claims; III. SCRAPING PHOTOGRAPHS; A. Copyright and Photographs; 1. Analogous scraping activities are fair use; 2. Face Scans are not protectible derivative works; B. Data Ownership; 1. Non-exclusive licenses bar platforms from asserting claims for user-generated data; 2. Paths to asserting user claims directly; IV. UNRESTRICTED SURVEILLANCE AND STRONGER PROTECTIONS FOR CYBERPROPERTY; CONCLUSION